Tuning an Akka Application

See an overview of an Akka application to be tuned, the load tests and tools used to measure its performance, and some test results.


This post presents an example of how to tune an Akka application. The first section presents the application (actually, a simple microservice) that is used throughout this post to illustrate the tuning process. The second section describes the load tests and tools used to measure the application's performance. The third section presents different test scenarios and the results obtained for each of them. Finally, the last section offers some considerations on extrapolating the results to a production environment.

Application Description

To keep it brief, the application under study is a microservice that receives requests through a REST endpoint and, in turn, calls a third-party SOAP endpoint; the SOAP response is then enriched with data extracted from a Redis database, and the final response is sent back to the client.

Components

The application is based on Akka 2.4.11 and Scala 2.11.8 and has these components:

- REST endpoint exposed through Akka HTTP.

- Apache Camel’s CXF component to connect to a third-party SOAP endpoint.

- StatsD client to connect to a StatsD server.

- Logback as the logging framework.

- Circuit breaker to insulate the application from failures in Redis and the third-party SOAP endpoint.

- Redis client that performs non-blocking and asynchronous I/O operations.

Thread Pools

Now, what is the representation of all these components at runtime?

The components described in the previous section are nice, high-level abstractions to let the developers do their jobs easily. However, when it comes to tuning the application, it is necessary to move to a lower level of abstraction in order to analyze the different threads that run the application.

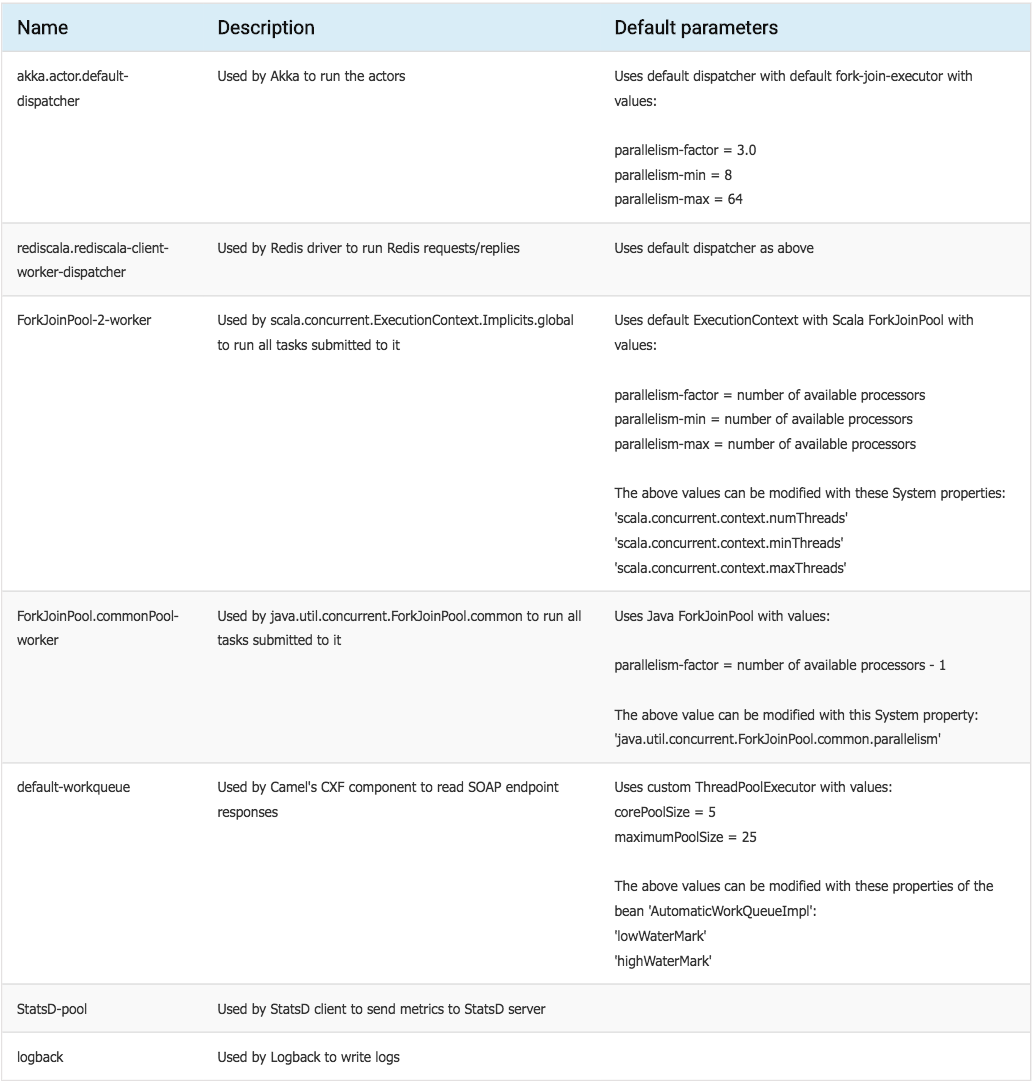

The following table shows the thread pools found after taking a thread dump of the application:

The application uses a router whose number of routees (actors) can be changed between tests. This allows us to explore how the application behaves under different combinations of threads and routees.

Configuration

The actors, running on the default dispatcher, delegate the calls to the third party to scala.concurrent.ExecutionContext.Implicits.global, wrapped in a blocking block. The blocking block tells the pool that the enclosed operation is going to block, so the pool can temporarily add threads and maintain its parallelism level despite the blocking operation.

scala.concurrent.ExecutionContext.Implicits.global is also used to process all other Future operations in the application, including Redis responses. Admittedly, having too many operations share the limited number of threads of scala.concurrent.ExecutionContext.Implicits.global may lead to starvation. That is why wrapping the call to the third party in a blocking block is so critical.
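To make the effect of the blocking block concrete, here is a minimal plain-Scala sketch (standard library only; the object and method names are hypothetical, not taken from the application). It starts three futures per processor on the global pool, each sleeping to mimic the SOAP call, and records how many of them were sleeping at the same time. Without blocking, the peak should stay at the pool's parallelism (one thread per processor); with blocking, the pool is expected to add compensating threads, which is the same growth beyond the nominal size that the thread dumps in this post show for ForkJoinPool-2-worker.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, Future, blocking}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// Hypothetical helper: measures how many simulated blocking calls overlap on the global pool.
object BlockingOnGlobal {
  /** Starts `tasks` futures that each sleep `sleepMs` (a stand-in for the SOAP call)
    * and returns the peak number of them that were sleeping at the same time. */
  def peakConcurrency(tasks: Int, sleepMs: Long, useBlocking: Boolean): Int = {
    val running = new AtomicInteger(0)
    val peak = new AtomicInteger(0)
    val futures = (1 to tasks).map { _ =>
      Future {
        val now = running.incrementAndGet()
        // Lock-free "max" update of the peak counter.
        var seen = peak.get
        while (now > seen && !peak.compareAndSet(seen, now)) seen = peak.get
        if (useBlocking) blocking(Thread.sleep(sleepMs)) // pool may add a compensating thread
        else Thread.sleep(sleepMs)                        // silently ties up one pool thread
        running.decrementAndGet()
      }
    }
    Await.result(Future.sequence(futures), 1.minute)
    peak.get
  }
}
```

Run the non-blocking variant first: peakConcurrency(3 * p, 200, useBlocking = false) should report at most p (the number of processors), while the same call with useBlocking = true should report more than p.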

On the other hand, the Redis driver uses its own thread pool, so there is no risk of blocking threads shared with other operations.

Note: The dispatcher used by the actors plays a role similar to the event loop in languages like Node.js. Therefore, it is of paramount importance that the dispatcher threads never block, as that would block the entire Akka machinery. All blocking calls must be delegated to some other thread pool.
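As an alternative to running blocking calls inside the global pool, they can be confined to a dedicated, bounded pool. The sketch below is plain Scala; the pool names, the size of 25 and the 1-second sleep standing in for the SOAP call are illustrative assumptions. A single named thread stands in for a dispatcher that must never block, and a separate blocking-io pool executes the slow calls. Naming the threads also makes each pool easy to spot in a jstack dump. In an Akka application the same idea is usually expressed as a separate dispatcher declared in the configuration, which is not shown here.

```scala
import java.util.concurrent.{Executors, ThreadFactory}
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object DedicatedBlockingPool {
  // Names threads "<prefix>-N" so each pool is easy to find in a thread dump.
  private def named(prefix: String): ThreadFactory = new ThreadFactory {
    private val counter = new AtomicInteger(0)
    override def newThread(r: Runnable): Thread = {
      val t = new Thread(r, s"$prefix-${counter.incrementAndGet()}")
      t.setDaemon(true) // do not keep the JVM alive just for these pools
      t
    }
  }

  // Stand-in for a non-blocking dispatcher: a single thread that must never block.
  val dispatcher: ExecutionContext =
    ExecutionContext.fromExecutorService(Executors.newSingleThreadExecutor(named("dispatcher")))

  // Hypothetical bounded pool reserved for blocking calls (size chosen for illustration).
  val blockingIo: ExecutionContext =
    ExecutionContext.fromExecutorService(Executors.newFixedThreadPool(25, named("blocking-io")))

  // Simulated 1-second SOAP call, always executed on blockingIo.
  def callThirdParty(id: Int): Future[String] =
    Future {
      Thread.sleep(1000)
      s"response-$id from ${Thread.currentThread.getName}"
    }(blockingIo)

  def awaitAll(calls: Seq[Future[String]]): Seq[String] =
    calls.map(Await.result(_, 10.seconds))
}
```

Because the dispatcher thread only hands work off, a quick task submitted to it completes immediately even while 20 simulated calls are still sleeping. The trade-off is that the bounded pool becomes an explicit throughput cap, the same kind of limit that shows up in the 40-client scenario.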

When the application runs on my laptop, which has 8 processors, the number of threads is determined by the default configuration specified in the above table:

- Default dispatcher: 24 threads.

- scala.concurrent.ExecutionContext.Implicits.global: 8 threads.

- java.util.concurrent.ForkJoinPool.common: 7 threads.
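These numbers can be reproduced with a little arithmetic. The sketch below encodes the sizing rules as we understand them: Akka scales the fork-join default dispatcher as ceil(processors × parallelism-factor), clamped between parallelism-min and parallelism-max (assumed here to be the Akka 2.4 defaults of 8, 3.0 and 64); Scala's global pool uses one thread per processor; and the JDK common pool uses processors - 1, but at least 1. The object name is hypothetical, and the defaults should be checked against the reference.conf of the Akka version in use.

```scala
// Hypothetical helper reproducing the default pool sizes listed above.
object DefaultPoolSizes {
  /** Akka-style scaling: ceil(processors * factor), clamped to [min, max].
    * Default arguments are the assumed Akka 2.4 default-dispatcher values. */
  def akkaDispatcher(processors: Int, factor: Double = 3.0, min: Int = 8, max: Int = 64): Int =
    math.min(math.max(math.ceil(processors * factor).toInt, min), max)

  /** scala.concurrent.ExecutionContext.Implicits.global: one thread per processor by default. */
  def scalaGlobal(processors: Int): Int = processors

  /** java.util.concurrent.ForkJoinPool.commonPool(): processors - 1, never less than 1. */
  def forkJoinCommon(processors: Int): Int = math.max(processors - 1, 1)
}
```

Calling DefaultPoolSizes.akkaDispatcher(Runtime.getRuntime.availableProcessors) on the target machine shows what the default dispatcher will look like there. On a 2-processor VM, for instance, parallelism-min lifts it to 8 threads even though 2 × 3 = 6.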

Load Test Description

The process of tuning the application relies on monitoring it under a heavy workload. To generate traffic on the application, we will use a JMeter script parameterized by the following variables:

- target.concurrency: number of concurrent clients calling the microservice.

- ramup.time: time (in seconds) to hit the concurrency target.

- ramup.steps: number of steps to reach the concurrency target; it determines the users' arrival rate.

- target.time: span of time (in seconds) during which the test runs after reaching the concurrency target; therefore, the total duration of the test is ramup.time + target.time.

The ramp-up time is a transitory period: the shorter it is compared to the target time, the more accurate the results will be.
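The relationship between these properties can be written down as a small model. The sketch assumes, as a simplification, that the ramp-up adds the same number of users at each of ramup.steps equally spaced steps; the class name and the sample values (20 clients, a 20 s ramp-up in 4 steps, 120 s at target) are hypothetical. steadyStateShare is the fraction of the test spent at the target concurrency: the closer it is to 1, the less the transitory ramp-up distorts the overall averages.

```scala
/** Hypothetical model of the JMeter properties used in this post.
  * Assumes users are added in equal increments at equal intervals during the ramp-up. */
final case class LoadProfile(targetConcurrency: Int, rampUpTimeSec: Int, rampUpSteps: Int, targetTimeSec: Int) {
  require(targetConcurrency > 0 && rampUpSteps > 0, "concurrency and steps must be positive")
  require(rampUpTimeSec >= 0 && targetTimeSec > 0, "ramp-up must be non-negative, target time positive")

  def totalDurationSec: Int = rampUpTimeSec + targetTimeSec            // ramup.time + target.time
  def usersPerStep: Double = targetConcurrency.toDouble / rampUpSteps   // users added at each step
  def secondsBetweenSteps: Double = rampUpTimeSec.toDouble / rampUpSteps
  def steadyStateShare: Double = targetTimeSec.toDouble / totalDurationSec
}
```

With the sample values, LoadProfile(20, 20, 4, 120) lasts 140 s, adds 5 users every 5 s, and spends about 86% of the run at full concurrency; halving the ramp-up time to 10 s raises that share to about 92%.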

WireMock stands in for the third party and is configured to respond with a fixed 1-second delay (http://wiremock.org/docs/simulating-faults/).

Redis runs in a Docker container on localhost, and its latency is of the order of a few milliseconds, which makes the third party's latency the dominant factor when it comes to blocking operations.

Tools

This section describes the different tools used to run and monitor the tests.

JMeter

First of all, we need a JMeter script to generate traffic. Here is the properties file used with the script:

```properties
# IP to connect to the service
host.ip=localhost
# Port to connect to the service
host.port=8080
# Number of concurrent clients calling the service
target.concurrency=xx
# Time (in seconds) to hit the concurrency target
ramup.time=xx
# Number of steps to reach the concurrency target
ramup.steps=xx
# Span of time (in seconds) during which the test runs after reaching the concurrency target
# Therefore, the total duration of the test is ramup.time + target.time
target.time=xx
```

And the command to run the script:

```bash
jmeter -n -t jmeterScript.jmx -p jmeterProperties.properties
```

Thread Analyzer

Based on the jstackSeries script, here is a thread sampler that takes thread dumps at regular intervals while the application runs and then summarizes how the number of threads of each type evolves. This sampler will help us understand the behavior of the threads during the load test runs.

```bash
#!/bin/bash
# Takes <count> thread dumps of <pid>, <delay> seconds apart, then summarizes
# how many threads of each pool appear in every dump.

extension=tdump

if [ $# -eq 0 ]; then
  echo >&2 "Usage: jstackSeries <pid> [ <suffix> [ <count> [ <delay> ] ] ]"
  echo >&2 "    Defaults: suffix = \"dump\", count = 10, delay = 60 (seconds)"
  exit 1
fi

pid=$1              # required
suffix=${2:-dump}   # defaults to "dump"
count=${3:-10}      # defaults to 10 times
delay=${4:-60}      # defaults to 60 seconds

echo "$pid $suffix $count $delay"

# 1) Take the thread dumps
while [ "$count" -gt 0 ]
do
  jstack -l "$pid" > "jstack.$suffix.$pid.$(date +%H%M%S).$extension"
  count=$((count - 1))
  [ "$count" -gt 0 ] && sleep "$delay"   # no need to wait after the last dump
  echo -n "."
done
echo

# Thread-name prefix of each pool
dispatcher=akka.actor.default-dispatcher
rediscala=rediscala.rediscala-client-worker-dispatcher
global=ForkJoinPool-2-worker            # scala.concurrent.ExecutionContext.Implicits.global
common=ForkJoinPool.commonPool-worker   # java.util.concurrent.ForkJoinPool.common
apache=default-workqueue
statsd=StatsD-pool
log=logback
pools=("$dispatcher" "$rediscala" "$global" "$common" "$apache" "$statsd" "$log")

# 2) Count the threads of each pool in every dump. Only thread header lines
#    (those starting with a double quote) are searched, so stack frames such
#    as "at ch.qos.logback..." are not counted as threads.
: > ./tmp.txt
for f in *"$suffix"*."$extension"
do
  echo "===========> $f" >> ./tmp.txt
  for pool in "${pools[@]}"
  do
    echo "$pool: $(grep '^"' "$f" | grep -c -F -- "$pool")" >> ./tmp.txt
  done
done

# 3) Group the counts by pool
result=result.$suffix.txt
echo "=========== FILES ===========" > "$result"
grep -F ".$extension" ./tmp.txt >> "$result"
echo "=========== START ===========" >> "$result"
first=true
for pool in "${pools[@]}"
do
  $first || echo "=======================" >> "$result"
  first=false
  grep -F -- "$pool:" ./tmp.txt >> "$result"
done
echo "=========== END ===========" >> "$result"
rm ./tmp.txt
```

Thread Dump Analyzer

In order to examine the content of the thread dumps in detail, a tool such as TDA (Thread Dump Analyzer) comes in handy. It is a Java application that can be run from its JAR file:

```bash
java -jar <tda home>/tda.jar
```

Redis Connections Script

In order to have the entire picture, it is also necessary to monitor the number of connections to Redis. Here is the script used to count them (as mentioned earlier, Redis runs inside a Docker container):

```bash
#!/bin/bash
echo "Number of Redis connections = $(netstat -av | grep docker.filenet-tms | wc -l)"
```

Scenarios

This section presents the results obtained for the different scenarios resulting from combining the values of concurrent clients, routees, and threads.

20 Clients, 20 Routees, Parallelism-Factor (of Default Dispatcher)=3

Results are as expected: throughput near 20 requests/sec (the ramp-up period pulls the overall figure down) and response time around one second.

The number of Redis connections is also 20, one per actor. Here is the JMeter summary:

```
summary +    273 in 24.1s =   11.3/s Avg:  1047 Min:  1010 Max:  3304 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary +    598 in   30s =   19.9/s Avg:  1018 Min:  1006 Max:  1082 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =    871 in 54.1s =   16.1/s Avg:  1027 Min:  1006 Max:  3304 Err:     0 (0.00%)
summary +    582 in   30s =   19.4/s Avg:  1017 Min:  1006 Max:  1100 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   1453 in 84.1s =   17.3/s Avg:  1023 Min:  1006 Max:  3304 Err:     0 (0.00%)
summary +    598 in   30s =   20.0/s Avg:  1017 Min:  1007 Max:  1096 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   2051 in  114s =   18.0/s Avg:  1021 Min:  1006 Max:  3304 Err:     0 (0.00%)
summary +    540 in 27.5s =   19.7/s Avg:  1016 Min:  1006 Max:  1094 Err:     0 (0.00%) Active: 0 Started: 20 Finished: 20
summary =   2591 in  142s =   18.3/s Avg:  1020 Min:  1006 Max:  3304 Err:     0 (0.00%)
```

The command jstackSeries.sh 2297 _20-20-3 4 30 (four thread dumps taken 30 seconds apart) yields the result below. As expected, the number of threads increases as new concurrent clients are added during the test.

Remarkably, the thread pool ForkJoinPool-2-worker (corresponding to scala.concurrent.ExecutionContext.Implicits.global) has grown beyond its nominal size of 8 threads (one per processor). As discussed above, this is due to the blocking construct that encloses the blocking call to the SOAP endpoint.

```
=========== FILES ===========
===========> jstack.20-20-3.5173.000713.tdump
===========> jstack.20-20-3.5173.000743.tdump
===========> jstack.20-20-3.5173.000813.tdump
===========> jstack.20-20-3.5173.000844.tdump
=========== START ===========
akka.actor.default-dispatcher: 10
akka.actor.default-dispatcher: 14
akka.actor.default-dispatcher: 14
akka.actor.default-dispatcher: 17
=======================
rediscala.rediscala-client-worker-dispatcher: 10
rediscala.rediscala-client-worker-dispatcher: 13
rediscala.rediscala-client-worker-dispatcher: 16
rediscala.rediscala-client-worker-dispatcher: 21
=======================
ForkJoinPool-2-worker: 1
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
=======================
ForkJoinPool.commonPool-worker: 0
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
=======================
default-workqueue: 5
default-workqueue: 20
default-workqueue: 20
default-workqueue: 20
=======================
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
=======================
logback: 2
logback: 2
logback: 2
logback: 2
=========== END ===========
```

20 Clients, 1 Routee, Parallelism-Factor=3

Results are similar to the previous ones, so it turns out that one actor can handle the same amount of traffic as 20. This makes sense: the actor does not perform any blocking operation, so it processes each message almost instantly.

A further consequence is that, since an actor processes one message at a time (so only one thread at a time can run inside it), a single thread in the default dispatcher should be enough.
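This reasoning can be checked with a plain-Scala analogy (not Akka's actual implementation; the object name is hypothetical). A single-thread executor plays the role of one routee on a one-thread dispatcher: each "message" only starts a simulated 1-second call on the global pool, wrapped in blocking as in the application, and then returns. The sketch records the maximum number of messages being handled at the same time and the total elapsed time.

```scala
import java.util.concurrent.{CountDownLatch, Executors, TimeUnit}
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{ExecutionContext, Future, blocking}

object SingleThreadActorAnalogy {
  /** Returns (max messages handled at once, total elapsed milliseconds). */
  def simulate(messages: Int, callLatencyMs: Long): (Int, Long) = {
    val pool = Executors.newSingleThreadExecutor()
    val dispatcher = ExecutionContext.fromExecutorService(pool)
    val inside = new AtomicInteger(0)
    val maxInside = new AtomicInteger(0)
    val replies = new CountDownLatch(messages)
    val start = System.nanoTime

    (1 to messages).foreach { _ =>
      dispatcher.execute(new Runnable {
        override def run(): Unit = {
          val now = inside.incrementAndGet()
          if (now > maxInside.get) maxInside.set(now) // only the dispatcher thread writes here
          // The "actor" just starts the slow call on the global pool and returns at once.
          Future(blocking(Thread.sleep(callLatencyMs)))(ExecutionContext.global)
            .onComplete(_ => replies.countDown())(ExecutionContext.global)
          inside.decrementAndGet()
        }
      })
    }
    replies.await(1, TimeUnit.MINUTES)
    pool.shutdown()
    (maxInside.get, (System.nanoTime - start) / 1000000)
  }
}
```

With 20 messages, the maximum should be 1 (messages are strictly serialized), yet the whole batch should complete in roughly one second rather than twenty, because the thread is released as soon as each call has been handed off.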

Moreover, given that there is only 1 routee, there is just one Redis connection. Again, this does not seem to penalize performance: Redis operations take just a few milliseconds, a negligible amount compared to the dominant latency of the SOAP endpoint.

```
summary +     57 in 11.1s =    5.1/s Avg:  1112 Min:  1014 Max:  3040 Err:     0 (0.00%) Active: 12 Started: 12 Finished: 0
summary +    544 in 30.1s =   18.1/s Avg:  1036 Min:  1008 Max:  1656 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =    601 in 41.2s =   14.6/s Avg:  1043 Min:  1008 Max:  3040 Err:     0 (0.00%)
summary +    587 in   30s =   19.7/s Avg:  1017 Min:  1007 Max:  1050 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   1188 in   71s =   16.7/s Avg:  1031 Min:  1007 Max:  3040 Err:     0 (0.00%)
summary +    593 in 30.2s =   19.7/s Avg:  1016 Min:  1006 Max:  1060 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   1781 in  101s =   17.6/s Avg:  1026 Min:  1006 Max:  3040 Err:     0 (0.00%)
summary +    587 in   30s =   19.7/s Avg:  1016 Min:  1005 Max:  1047 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   2368 in  131s =   18.1/s Avg:  1023 Min:  1005 Max:  3040 Err:     0 (0.00%)
summary +    212 in 10.4s =   20.5/s Avg:  1016 Min:  1007 Max:  1041 Err:     0 (0.00%) Active: 0 Started: 20 Finished: 20
summary =   2580 in  141s =   18.3/s Avg:  1023 Min:  1005 Max:  3040 Err:     0 (0.00%)
```

The result of the thread samples is similar to the previous scenario.

```
=========== FILES ===========
===========> jstack.20-1-3.6096.001838.tdump
===========> jstack.20-1-3.6096.001908.tdump
===========> jstack.20-1-3.6096.001938.tdump
===========> jstack.20-1-3.6096.002009.tdump
=========== START ===========
akka.actor.default-dispatcher: 10
akka.actor.default-dispatcher: 10
akka.actor.default-dispatcher: 12
akka.actor.default-dispatcher: 18
=======================
rediscala.rediscala-client-worker-dispatcher: 8
rediscala.rediscala-client-worker-dispatcher: 11
rediscala.rediscala-client-worker-dispatcher: 12
rediscala.rediscala-client-worker-dispatcher: 13
=======================
ForkJoinPool-2-worker: 1
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
=======================
ForkJoinPool.commonPool-worker: 0
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
=======================
default-workqueue: 5
default-workqueue: 20
default-workqueue: 20
default-workqueue: 20
=======================
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
=======================
logback: 2
logback: 2
logback: 2
logback: 2
=========== END ===========
```

20 Clients, 1 Routee, 1 Thread

As mentioned in the previous scenario, only one thread at a time can run inside an actor, and therefore a single thread in the default dispatcher should be enough.

Putting this theory to the test by leaving just one routee and one thread in the default dispatcher, the results are very satisfactory: the same throughput and response time as before!

```
summary +      1 in  3.4s =    0.3/s Avg:  3089 Min:  3089 Max:  3089 Err:     0 (0.00%) Active: 4 Started: 4 Finished: 0
summary +    374 in   26s =   14.6/s Avg:  1028 Min:  1007 Max:  3093 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =    375 in   29s =   12.9/s Avg:  1033 Min:  1007 Max:  3093 Err:     0 (0.00%)
summary +    591 in   30s =   19.7/s Avg:  1017 Min:  1007 Max:  1127 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =    966 in   59s =   16.4/s Avg:  1023 Min:  1007 Max:  3093 Err:     0 (0.00%)
summary +    588 in   30s =   19.6/s Avg:  1016 Min:  1006 Max:  1049 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   1554 in   89s =   17.5/s Avg:  1020 Min:  1006 Max:  3093 Err:     0 (0.00%)
summary +    596 in   30s =   19.8/s Avg:  1015 Min:  1006 Max:  1047 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   2150 in  119s =   18.1/s Avg:  1019 Min:  1006 Max:  3093 Err:     0 (0.00%)
summary +    442 in   23s =   19.6/s Avg:  1016 Min:  1006 Max:  1083 Err:     0 (0.00%) Active: 0 Started: 20 Finished: 20
summary =   2592 in  142s =   18.3/s Avg:  1018 Min:  1006 Max:  3093 Err:     0 (0.00%)
```

This time, there is just one thread in the default dispatcher thread pool and in the rediscala dispatcher.

```
=========== FILES ===========
===========> jstack.20-1-1-1.7377.003828.tdump
===========> jstack.20-1-1-1.7377.003858.tdump
===========> jstack.20-1-1-1.7377.003928.tdump
===========> jstack.20-1-1-1.7377.003958.tdump
=========== START ===========
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
=======================
rediscala.rediscala-client-worker-dispatcher: 0
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
=======================
ForkJoinPool-2-worker: 1
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
=======================
ForkJoinPool.commonPool-worker: 0
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
=======================
default-workqueue: 0
default-workqueue: 21
default-workqueue: 21
default-workqueue: 21
=======================
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
=======================
logback: 1
logback: 2
logback: 2
logback: 2
=========== END ===========
```

20 Clients, 20 Routees, Parallelism-Factor=1, 1 Thread

So, what happens when there are 20 routees and only one thread? Do they compete for the thread or share it among them?

Again, given that the actors do not block, the thread is freed almost immediately so that the next actor can grab hold of it. The results below are the same as in the previous scenarios.

```
summary +      5 in    5s =    1.1/s Avg:  2005 Min:  1042 Max:  3086 Err:     0 (0.00%) Active: 6 Started: 6 Finished: 0
summary +    468 in   30s =   15.8/s Avg:  1021 Min:  1009 Max:  1138 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =    473 in 34.1s =   13.9/s Avg:  1031 Min:  1009 Max:  3086 Err:     0 (0.00%)
summary +    587 in   30s =   19.6/s Avg:  1021 Min:  1008 Max:  1266 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   1060 in 64.1s =   16.5/s Avg:  1026 Min:  1008 Max:  3086 Err:     0 (0.00%)
summary +    592 in   30s =   19.8/s Avg:  1018 Min:  1006 Max:  1111 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   1652 in 94.1s =   17.6/s Avg:  1023 Min:  1006 Max:  3086 Err:     0 (0.00%)
summary +    585 in   30s =   19.5/s Avg:  1018 Min:  1007 Max:  1148 Err:     0 (0.00%) Active: 20 Started: 20 Finished: 0
summary =   2237 in  124s =   18.0/s Avg:  1022 Min:  1006 Max:  3086 Err:     0 (0.00%)
summary +    343 in 17.4s =   19.7/s Avg:  1017 Min:  1007 Max:  1051 Err:     0 (0.00%) Active: 0 Started: 20 Finished: 20
summary =   2580 in  141s =   18.2/s Avg:  1021 Min:  1006 Max:  3086 Err:     0 (0.00%)
```

Regarding the thread pools, it is worth noting that even though there are 20 Redis connections, the rediscala dispatcher contains only 1 thread.

```
=========== FILES ===========
===========> jstack.20-20-1-1.7095.003420.tdump
===========> jstack.20-20-1-1.7095.003450.tdump
===========> jstack.20-20-1-1.7095.003521.tdump
===========> jstack.20-20-1-1.7095.003551.tdump
=========== START ===========
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
=======================
rediscala.rediscala-client-worker-dispatcher: 0
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
=======================
ForkJoinPool-2-worker: 1
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
ForkJoinPool-2-worker: 21
=======================
ForkJoinPool.commonPool-worker: 0
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
=======================
default-workqueue: 0
default-workqueue: 22
default-workqueue: 22
default-workqueue: 22
=======================
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
=======================
logback: 1
logback: 2
logback: 2
logback: 2
=========== END ===========
```

40 Clients, 1 Routee, 1 Thread

So far, the results have remained stable, meaning that the application has enough capacity to handle up to 20 concurrent clients regardless of its configuration.

However, with 40 concurrent clients, the application hits the limit of 25 threads imposed by the thread pool of the Camel CXF connector (default-workqueue). Since each call to the third party holds one of those threads for about one second, throughput is capped at about 25 requests/sec; as a consequence, response time goes up because there is not enough capacity to serve 40 concurrent clients.
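A back-of-the-envelope model explains both the cap and the new response time. JMeter's clients form a closed loop: assuming no think time, each one sends its next request as soon as it gets a response. If at most `workers` calls can be in progress at once and each takes `serviceTimeSec`, throughput is min(clients, workers) / serviceTimeSec, and Little's law (N = X × R) gives the response time R = clients / X. The object name is hypothetical, and the model ignores everything except the 1-second SOAP call and the 25-thread pool.

```scala
/** Closed-loop capacity model (hypothetical helper, not part of the application). */
object ClosedLoopModel {
  /** Requests/sec when `clients` loop without think time and at most `workers`
    * calls, each lasting `serviceTimeSec`, can be in progress at the same time. */
  def throughput(clients: Int, workers: Int, serviceTimeSec: Double): Double = {
    require(clients > 0 && workers > 0 && serviceTimeSec > 0)
    math.min(clients, workers) / serviceTimeSec
  }

  /** Little's law, N = X * R, solved for the response time R (seconds). */
  def responseTimeSec(clients: Int, workers: Int, serviceTimeSec: Double): Double =
    clients / throughput(clients, workers, serviceTimeSec)
}
```

For 40 clients the model predicts 25 requests/sec and 1.6 s; the steady-state JMeter intervals below show 24.3 to 24.9 requests/sec with an average of about 1.62 s. For 20 clients it predicts 20 requests/sec and 1 s, in line with the earlier scenarios.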

```
summary +     71 in   12s =    6.1/s Avg:  1019 Min:  1009 Max:  1083 Err:     0 (0.00%) Active: 12 Started: 12 Finished: 0
summary +    647 in   30s =   21.6/s Avg:  1211 Min:  1008 Max:  1927 Err:     0 (0.00%) Active: 40 Started: 40 Finished: 0
summary =    718 in   42s =   17.3/s Avg:  1192 Min:  1008 Max:  1927 Err:     0 (0.00%)
summary +    745 in 30.3s =   24.6/s Avg:  1620 Min:  1264 Max:  1963 Err:     0 (0.00%) Active: 40 Started: 40 Finished: 0
summary =   1463 in   72s =   20.4/s Avg:  1410 Min:  1008 Max:  1963 Err:     0 (0.00%)
summary +    740 in   30s =   24.9/s Avg:  1625 Min:  1277 Max:  1959 Err:     0 (0.00%) Active: 40 Started: 40 Finished: 0
summary =   2203 in  102s =   21.7/s Avg:  1482 Min:  1008 Max:  1963 Err:     0 (0.00%)
summary +    735 in   30s =   24.5/s Avg:  1619 Min:  1272 Max:  1960 Err:     0 (0.00%) Active: 40 Started: 40 Finished: 0
summary =   2938 in  132s =   22.3/s Avg:  1516 Min:  1008 Max:  1963 Err:     0 (0.00%)
summary +    746 in   30s =   24.9/s Avg:  1623 Min:  1280 Max:  1930 Err:     0 (0.00%) Active: 40 Started: 40 Finished: 0
summary =   3684 in  161s =   22.8/s Avg:  1538 Min:  1008 Max:  1963 Err:     0 (0.00%)
summary +    731 in   30s =   24.3/s Avg:  1624 Min:  1247 Max:  1906 Err:     0 (0.00%) Active: 40 Started: 40 Finished: 0
summary =   4415 in  192s =   23.1/s Avg:  1552 Min:  1008 Max:  1963 Err:     0 (0.00%)
summary +    748 in 30.2s =   24.8/s Avg:  1620 Min:  1295 Max:  1859 Err:     0 (0.00%) Active: 16 Started: 40 Finished: 24
summary =   5163 in  222s =   23.3/s Avg:  1562 Min:  1008 Max:  1963 Err:     0 (0.00%)
summary +     15 in  0.5s =   30.9/s Avg:  1769 Min:  1501 Max:  1844 Err:     0 (0.00%) Active: 0 Started: 40 Finished: 40
summary =   5178 in  222s =   23.3/s Avg:  1562 Min:  1008 Max:  1963 Err:     0 (0.00%)
```

That limit is clearly visible in the thread dump results: ForkJoinPool-2-worker spawns the 40 threads required to deal with all the requests, whereas default-workqueue is limited to 25 threads.

```
=========== FILES ===========
===========> jstack.40-1-1-1.7377.004241.tdump
===========> jstack.40-1-1-1.7377.004312.tdump
===========> jstack.40-1-1-1.7377.004342.tdump
===========> jstack.40-1-1-1.7377.004412.tdump
=========== START ===========
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
akka.actor.default-dispatcher: 1
=======================
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
rediscala.rediscala-client-worker-dispatcher: 1
=======================
ForkJoinPool-2-worker: 1
ForkJoinPool-2-worker: 27
ForkJoinPool-2-worker: 41
ForkJoinPool-2-worker: 41
=======================
ForkJoinPool.commonPool-worker: 0
ForkJoinPool.commonPool-worker: 1
ForkJoinPool.commonPool-worker: 3
ForkJoinPool.commonPool-worker: 3
=======================
default-workqueue: 21
default-workqueue: 25
default-workqueue: 25
default-workqueue: 25
=======================
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
StatsD-pool: 1
=======================
logback: 2
logback: 2
logback: 2
logback: 2
=========== END ===========
```

We will stop here, as the few examples discussed in this post give a good idea of the different factors to take into account when tuning an Akka application (and, in general, any application running on the JVM).

Final Thoughts

Although one actor with one thread has proven to be enough to handle the scenarios proposed in this post, it would be better to take advantage of all eight processors. Admittedly, it makes no difference for the examples considered here, but it will at much higher volumes.

When running performance tests, it is very important to remember that the number of processors in production may be different, and so will the size of the thread pools derived from it. This is especially true when deploying to the cloud, as DevOps will tend to choose the smallest available VMs in order to cut costs. As a consequence, the number of processors in production is likely to be smaller than on your laptop!

Published at DZone with permission of Francisco Alvarez, DZone MVB. See the original article here.
