Using Spring AI With LLMs to Generate Java Tests

Did you ever want to just generate your tests? The goal of this article is to test how well LLMs can help developers create tests.


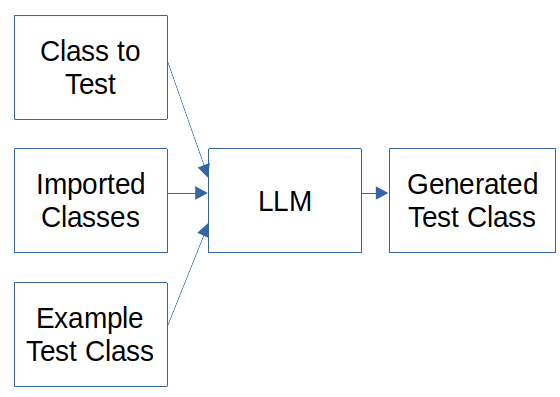

The AIDocumentLibraryChat project has been extended to generate test code (so far tested with Java code). The project can generate test code for publicly available GitHub projects. The URL of the class to test is provided, then the class is loaded, its imports are analyzed, and the dependent classes in the project are loaded as well. That gives the LLM the opportunity to consider the imported source classes while generating mocks for the tests. Optionally, a testUrl can be provided to give the LLM an example test on which to base the generated test. The granite-code, deepseek-coder-v2, and codestral models have been tested with Ollama.

The goal is to test how well the LLMs can help developers create tests.

Implementation

Configuration

To select the LLM, the application-ollama.properties file needs to be updated:

```properties
spring.ai.ollama.base-url=${OLLAMA-BASE-URL:http://localhost:11434}
spring.ai.ollama.embedding.enabled=false
spring.ai.embedding.transformer.enabled=true
document-token-limit=150
embedding-token-limit=500
spring.liquibase.change-log=classpath:/dbchangelog/db.changelog-master-ollama.xml

...

# generate code
#spring.ai.ollama.chat.model=granite-code:20b
#spring.ai.ollama.chat.options.num-ctx=8192

spring.ai.ollama.chat.options.num-thread=8
spring.ai.ollama.chat.options.keep_alive=1s

#spring.ai.ollama.chat.model=deepseek-coder-v2:16b
#spring.ai.ollama.chat.options.num-ctx=65536

spring.ai.ollama.chat.model=codestral:22b
spring.ai.ollama.chat.options.num-ctx=32768
```

The spring.ai.ollama.chat.model selects the LLM code model to use.

The spring.ai.ollama.chat.options.num-ctx property sets the number of tokens in the context window. The context window has to hold both the tokens of the request and the tokens of the response.

The spring.ai.ollama.chat.options.num-thread property can be used if Ollama does not choose the right number of CPU threads. The spring.ai.ollama.chat.options.keep_alive property sets how long Ollama keeps the model loaded in memory after a request; with 1s it is unloaded almost immediately.
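Since num-ctx has to cover both sides of the exchange, the tokens left for the generated test are the window size minus the prompt tokens. The following plain-Java sketch shows that arithmetic; the class ContextBudget and the 6000-token prompt size are illustrative assumptions, not part of the project.

```java
// Hypothetical helper illustrating how num-ctx is shared between prompt and response.
final class ContextBudget {

    private ContextBudget() {
    }

    // Tokens still available for the generated answer, or 0 if the prompt already fills the window.
    static long responseBudget(long numCtx, long promptTokens) {
        return Math.max(0, numCtx - promptTokens);
    }

    // True if a prompt of the given size leaves at least minResponseTokens for the answer.
    static boolean fits(long numCtx, long promptTokens, long minResponseTokens) {
        return responseBudget(numCtx, promptTokens) >= minResponseTokens;
    }

    public static void main(String[] args) {
        // granite-code with num-ctx=8192 and an assumed 6000-token prompt
        System.out.println(responseBudget(8192, 6000));  // 2192
        // codestral with num-ctx=32768 and the same assumed prompt
        System.out.println(responseBudget(32768, 6000)); // 26768
    }
}
```

With an 8k window, a prompt that contains the class under test and an example test can easily leave only a couple of thousand tokens for the answer, which is why the context classes are only added for larger windows.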

Controller

The controller provides the REST interface to get the sources and to generate the tests:

```java
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.Optional;

import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("rest/code-generation")
public class CodeGenerationController {
    private final CodeGenerationService codeGenerationService;

    public CodeGenerationController(CodeGenerationService codeGenerationService) {
        this.codeGenerationService = codeGenerationService;
    }

    // Generates the test source for the class at "url", optionally based on the example test at "testUrl".
    @GetMapping("/test")
    public String getGenerateTests(@RequestParam("url") String url,
            @RequestParam(name = "testUrl", required = false) String testUrl) {
        return this.codeGenerationService.generateTest(
                URLDecoder.decode(url, StandardCharsets.UTF_8),
                Optional.ofNullable(testUrl)
                        .map(myValue -> URLDecoder.decode(myValue, StandardCharsets.UTF_8)));
    }

    // Returns the collected sources: class under test, its project dependencies, and the example test.
    @GetMapping("/sources")
    public GithubSources getSources(@RequestParam("url") String url,
            @RequestParam(name = "testUrl", required = false) String testUrl) {
        var sources = this.codeGenerationService.createTestSources(
                URLDecoder.decode(url, StandardCharsets.UTF_8), true);
        var test = Optional.ofNullable(testUrl)
                .map(myTestUrl -> this.codeGenerationService.createTestSources(
                        URLDecoder.decode(myTestUrl, StandardCharsets.UTF_8), false))
                .orElse(new GithubSource("none", "none", List.of(), List.of()));
        return new GithubSources(sources, test);
    }
}
```

The method getSources(...) of the CodeGenerationController receives the url of the class to generate tests for and, optionally, the testUrl of an example test. It URL-decodes the request parameters and calls the createTestSources(...) method with them. It returns a GithubSources record with the sources of the class to test, its dependencies in the project, and the test example.

The method getGenerateTests(...) receives the url of the class under test and the optional testUrl, URL-decodes them, and calls the method generateTest(...) of the CodeGenerationService.
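The parameter handling of the controller can be reproduced with the JDK alone. This is a hypothetical stand-alone version (the class ParameterDecoding is not part of the project): the required url is decoded directly, the optional testUrl is wrapped in an Optional first, and an encoded parameter round-trips to the original GitHub URL.

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Optional;

// Hypothetical stand-alone version of the parameter decoding done in CodeGenerationController.
final class ParameterDecoding {

    private ParameterDecoding() {
    }

    // Required parameter: decode directly.
    static String decodeRequired(String value) {
        return URLDecoder.decode(value, StandardCharsets.UTF_8);
    }

    // Optional parameter: null becomes Optional.empty(), otherwise the decoded value.
    static Optional<String> decodeOptional(String value) {
        return Optional.ofNullable(value)
                .map(myValue -> URLDecoder.decode(myValue, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        String url = "https://github.com/Angular2Guy/MovieManager/blob/master/backend/src/main/java/"
                + "ch/xxx/moviemanager/usecase/service/ActorService.java";
        // What a client sends when it encodes the parameter.
        String encoded = URLEncoder.encode(url, StandardCharsets.UTF_8);
        System.out.println(encoded);
        System.out.println(decodeRequired(encoded).equals(url)); // true
        System.out.println(decodeOptional(null).isPresent());    // false
    }
}
```

URLDecoder also turns + into a space and rejects malformed % sequences, so an unencoded URL only passes through unchanged if it contains neither character, which holds for the GitHub URLs used in this article.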

Service

The CodeGenerationService collects the classes from GitHub and generates the test code for the class under test.

The Service with the prompts looks like this:

```java
@Service
public class CodeGenerationService {
    private static final Logger LOGGER = LoggerFactory
            .getLogger(CodeGenerationService.class);
    private final GithubClient githubClient;
    private final ChatClient chatClient;
    // Prompt for LLMs with a small (8k) context window.
    private final String ollamaPrompt = """
            You are an assistant to generate spring tests for the class under test.
            Analyse the classes provided and generate tests for all methods. Base
            your tests on the example.
            Generate and implement the test methods. Generate and implement complete
            tests methods.
            Generate the complete source of the test class.
            Generate tests for this class:
            {classToTest}
            Use these classes as context for the tests:
            {contextClasses}
            {testExample}
            """;
    // Chain-of-thought prompt for LLMs with a large context window.
    private final String ollamaPrompt1 = """
            You are an assistant to generate a spring test class for the source
            class.
            1. Analyse the source class
            2. Analyse the context classes for the classes used by the source class
            3. Analyse the class in test example to base the code of the generated
            test class on it.
            4. Generate a test class for the source class and use the context classes
            as sources for creating the test class.
            5. Use the code of the test class as test example.
            6. Generate tests for each of the public methods of the source class.
            Generate the complete source code of the test class implementing the
            tests.
            {testExample}
            Use these context classes as extension for the source class:
            {contextClasses}
            Generate the complete source code of the test class implementing the
            tests.
            Generate tests for this source class:
            {classToTest}
            """;
    // Context window size in tokens; 0 if num-ctx is not configured.
    @Value("${spring.ai.ollama.chat.options.num-ctx:0}")
    private Long contextWindowSize;

    public CodeGenerationService(GithubClient githubClient, ChatClient chatClient) {
        this.githubClient = githubClient;
        this.chatClient = chatClient;
    }
```

This is the CodeGenerationService with the GithubClient and the ChatClient. The GithubClient is used to load the sources from a publicly available repository, and the ChatClient is the Spring AI interface to access the AI/LLM.

The ollamaPrompt is the prompt for the IBM Granite LLM with a context window of 8k tokens. The {classToTest} is replaced with the source code of the class under test. The {contextClasses} can be replaced with the dependent classes of the class under test and the {testExample} is optional and can be replaced with a test class that can serve as an example for the code generation.

The ollamaPrompt1 is the prompt for the Deepseek Coder V2 and Codestral LLMs. These LLMs can "understand" or work with a chain-of-thought prompt and have a context window of at least 32k tokens. The {...} placeholders work the same as in the ollamaPrompt. The long context window makes it possible to add the context classes for code generation.
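Spring AI's PromptTemplate fills the {...} placeholders for the real prompts. To make the idea concrete, here is a deliberately simplified, hypothetical version based on String.replace; it is not how Spring AI implements templating, and the class PlaceholderFill exists only for illustration.

```java
import java.util.Map;

// Simplified, hypothetical illustration of filling {placeholder} markers in a prompt.
// Spring AI's PromptTemplate does this for the real prompts; this is not its implementation.
final class PlaceholderFill {

    private PlaceholderFill() {
    }

    // Replaces every {key} with its value. Caveat: a value that itself contains
    // another {key} could be substituted again, depending on the iteration order.
    static String fill(String template, Map<String, String> values) {
        String result = template;
        for (Map.Entry<String, String> entry : values.entrySet()) {
            result = result.replace("{" + entry.getKey() + "}", entry.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        String template = """
                Generate tests for this class:
                {classToTest}
                Use these classes as context for the tests:
                {contextClasses}
                {testExample}
                """;
        // Empty strings behave like the omitted optional parts in the service.
        System.out.println(fill(template, Map.of(
                "classToTest", "public class ActorService { }",
                "contextClasses", "",
                "testExample", "")));
    }
}
```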

The contextWindowSize property is injected by Spring and is used to check whether the context window of the LLM is big enough to add the {contextClasses} to the prompt.
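The decision driven by contextWindowSize fits in a few lines. This hypothetical sketch (PromptSelection is not a class of the project) applies the same 16 * 1024 threshold that generateTest(...) uses; it also shows that the @Value default of 0 selects the short ollamaPrompt when num-ctx is not configured.

```java
// Hypothetical sketch of the decision CodeGenerationService makes with contextWindowSize.
final class PromptSelection {

    // Threshold used in the service: 16 * 1024 = 16384 tokens.
    static final long MIN_CONTEXT_FOR_CONTEXT_CLASSES = 16 * 1024;

    private PromptSelection() {
    }

    // Context classes and the chain-of-thought prompt are only used for windows of at least 16k tokens.
    static boolean useContextClasses(long contextWindowSize) {
        return contextWindowSize >= MIN_CONTEXT_FOR_CONTEXT_CLASSES;
    }

    // Name of the prompt field that would be selected.
    static String promptName(long contextWindowSize) {
        return useContextClasses(contextWindowSize) ? "ollamaPrompt1" : "ollamaPrompt";
    }

    public static void main(String[] args) {
        // 0 is the @Value default; the other values come from application-ollama.properties.
        for (long numCtx : new long[] {0, 8192, 32768, 65536}) {
            System.out.println(numCtx + " -> " + promptName(numCtx));
        }
    }
}
```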

The method createTestSources(...) collects and returns the sources for the AI/LLM prompts:

```java
    public GithubSource createTestSources(String url, final boolean referencedSources) {
        // Turn the GitHub page URL into the URL of the raw source file.
        final var myUrl = url.replace("https://github.com", GithubClient.GITHUB_BASE_URL)
                .replace("/blob", "");
        var result = this.githubClient.readSourceFile(myUrl);
        final var isComment = new AtomicBoolean(false);
        // Strip tabs and surrounding whitespace, drop blank lines and comments.
        final var sourceLines = result.lines().stream()
                .map(myLine -> myLine.replaceAll("[\t]", "").trim())
                .filter(myLine -> !myLine.isBlank())
                .filter(myLine -> filterComments(isComment, myLine))
                .toList();
        // The base package consists of the first two package segments, e.g. "ch.xxx".
        final var basePackage = List.of(result.sourcePackage().split("\\.")).stream()
                .limit(2)
                .collect(Collectors.joining("."));
        final var dependencies = this.createDependencies(referencedSources, myUrl,
                sourceLines, basePackage);
        return new GithubSource(result.sourceName(), result.sourcePackage(),
                sourceLines, dependencies);
    }

    private List<GithubSource> createDependencies(final boolean referencedSources,
            final String myUrl, final List<String> sourceLines, final String basePackage) {
        return sourceLines.stream()
                // Dependencies are only resolved for the top-level call (stops the recursion).
                .filter(x -> referencedSources)
                .filter(myLine -> myLine.contains("import"))
                .filter(myLine -> myLine.contains(basePackage))
                // Raw URL of the imported class: source root + package path + file extension.
                .map(myLine -> String.format("%s%s%s",
                        myUrl.split(basePackage.replace(".", "/"))[0].trim(),
                        myLine.split("import")[1].split(";")[0].replaceAll("\\.", "/").trim(),
                        myUrl.substring(myUrl.lastIndexOf('.'))))
                .map(myLine -> this.createTestSources(myLine, false))
                .toList();
    }

    private boolean filterComments(AtomicBoolean isComment, String myLine) {
        var result1 = true;
        // A line that opens a block comment, or lies inside one, is dropped.
        if (myLine.contains("/*") || isComment.get()) {
            isComment.set(true);
            result1 = false;
        }
        // The line that closes a block comment is dropped and ends the comment.
        if (myLine.contains("*/")) {
            isComment.set(false);
            result1 = false;
        }
        // Line comments are dropped as well.
        result1 = result1 && !myLine.trim().startsWith("//");
        return result1;
    }
```

The method createTestSources(...) returns a GithubSource record with the source code of the class at the GitHub URL and, if referencedSources is true, the GithubSource records of the dependent classes in the project.

To do that, myUrl is created to get the raw source code of the class. Then the githubClient reads the source file as a string. The source string is then turned into source lines without formatting, and the comments are removed with the method filterComments(...).
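The line cleaning can be tried out on an in-memory string. This sketch copies the logic of createTestSources(...) and filterComments(...) into a hypothetical SourceLineCleaner class; unlike the project, it uses String.lines() instead of the result of githubClient.readSourceFile(...). The stateful AtomicBoolean only works because the stream is processed sequentially and in order.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

// Stand-alone copy of the line cleaning in createTestSources(...) and filterComments(...),
// applied to an in-memory string instead of a file loaded from GitHub.
final class SourceLineCleaner {

    private SourceLineCleaner() {
    }

    static List<String> clean(String source) {
        final var isComment = new AtomicBoolean(false);
        return source.lines()
                .map(myLine -> myLine.replaceAll("[\t]", "").trim())
                .filter(myLine -> !myLine.isBlank())
                .filter(myLine -> filterComments(isComment, myLine))
                .toList();
    }

    // Same logic as filterComments(...): drops block comments (stateful) and // line comments.
    static boolean filterComments(AtomicBoolean isComment, String myLine) {
        var keep = true;
        if (myLine.contains("/*") || isComment.get()) {
            isComment.set(true);
            keep = false;
        }
        if (myLine.contains("*/")) {
            isComment.set(false);
            keep = false;
        }
        return keep && !myLine.trim().startsWith("//");
    }
}
```

Because the filter works on substrings, a code line that merely contains "/*" inside a string literal, such as a path pattern like "/api/*", would also be treated as the start of a block comment and hide the following lines up to the next "*/".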

To read the dependent classes in the project, the base package is used. For example, for the package ch.xxx.aidoclibchat.usecase.service the base package is ch.xxx. The method createDependencies(...) creates the GithubSource records for the dependent classes in the base package. The basePackage parameter is used to select the import lines of classes from the project, and then the method createTestSources(...) is called recursively with the parameter referencedSources set to false to stop the recursion. That is how the GithubSource records of the dependent classes are created.
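The URL handling in createTestSources(...) and createDependencies(...) is easiest to follow with concrete values. This sketch extracts it into a hypothetical DependencyUrls class. The value of GithubClient.GITHUB_BASE_URL is not shown in the article, so RAW_BASE_URL is an assumption (https://raw.githubusercontent.com, GitHub's host for raw file content); the import line used in the test is made up as well.

```java
import java.util.List;
import java.util.stream.Collectors;

// Sketch of the URL handling in createTestSources(...) and createDependencies(...).
// ASSUMPTION: RAW_BASE_URL stands in for GithubClient.GITHUB_BASE_URL, whose value is not shown.
final class DependencyUrls {

    static final String RAW_BASE_URL = "https://raw.githubusercontent.com";

    private DependencyUrls() {
    }

    // GitHub page URL -> raw file URL, using the same replacements as createTestSources(...).
    static String toRawUrl(String url) {
        return url.replace("https://github.com", RAW_BASE_URL).replace("/blob", "");
    }

    // First two package segments, e.g. "ch.xxx.aidoclibchat.usecase.service" -> "ch.xxx".
    static String basePackage(String sourcePackage) {
        return List.of(sourcePackage.split("\\.")).stream()
                .limit(2)
                .collect(Collectors.joining("."));
    }

    // Import line -> raw URL of the imported class, built as in createDependencies(...).
    static String dependencyUrl(String myUrl, String importLine, String basePackage) {
        return String.format("%s%s%s",
                myUrl.split(basePackage.replace(".", "/"))[0].trim(),
                importLine.split("import")[1].split(";")[0].replaceAll("\\.", "/").trim(),
                myUrl.substring(myUrl.lastIndexOf('.')));
    }
}
```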

The method generateTest(...) is used to create the test sources for the class under test with the AI/LLM:

```java
    public String generateTest(String url, Optional<String> testUrlOpt) {
        var start = Instant.now();
        var githubSource = this.createTestSources(url, true);
        var githubTestSource = testUrlOpt.map(testUrl -> this.createTestSources(testUrl, false))
                .orElse(new GithubSource(null, null, List.of(), List.of()));
        // Context classes are only added if the context window has at least 16k tokens.
        String contextClasses = githubSource.dependencies().stream()
                .filter(x -> this.contextWindowSize >= 16 * 1024)
                .map(myGithubSource -> myGithubSource.sourceName() + ":"
                        + System.getProperty("line.separator")
                        + myGithubSource.lines().stream()
                                .collect(Collectors.joining(System.getProperty("line.separator"))))
                .collect(Collectors.joining(System.getProperty("line.separator")));
        String testExample = Optional.ofNullable(githubTestSource.sourceName())
                .map(x -> "Use this as test example class:"
                        + System.getProperty("line.separator")
                        + githubTestSource.lines().stream()
                                .collect(Collectors.joining(System.getProperty("line.separator"))))
                .orElse("");
        String classToTest = githubSource.lines().stream()
                .collect(Collectors.joining(System.getProperty("line.separator")));
        // Select the prompt by context window size and fill in the placeholders.
        var promptTemplate = new PromptTemplate(
                this.contextWindowSize >= 16 * 1024 ? this.ollamaPrompt1 : this.ollamaPrompt,
                Map.of("classToTest", classToTest, "contextClasses", contextClasses,
                        "testExample", testExample));
        LOGGER.debug(promptTemplate.createMessage().getContent());
        LOGGER.info("Generation started with context window: {}", this.contextWindowSize);
        var response = chatClient.call(promptTemplate.create());
        // Log the result if the generation took 5 minutes or more, in case the browser timed out.
        if ((Instant.now().getEpochSecond() - start.getEpochSecond()) >= 300) {
            LOGGER.info(response.getResult().getOutput().getContent());
        }
        LOGGER.info("Prompt tokens: {}", response.getMetadata().getUsage().getPromptTokens());
        LOGGER.info("Generation tokens: {}", response.getMetadata().getUsage().getGenerationTokens());
        LOGGER.info("Total tokens: {}", response.getMetadata().getUsage().getTotalTokens());
        LOGGER.info("Time in seconds: {}",
                (Instant.now().toEpochMilli() - start.toEpochMilli()) / 1000.0);
        return response.getResult().getOutput().getContent();
    }
```

First, the createTestSources(...) method creates the records with the source lines. Then the contextClasses string is created to replace the {contextClasses} placeholder in the prompt. If the context window is smaller than 16k tokens, the string stays empty so that enough tokens remain for the class under test and the test example class. Then the optional testExample string is created to replace the {testExample} placeholder in the prompt. If no testUrl is provided, this string is empty. Finally, the classToTest string is created to replace the {classToTest} placeholder in the prompt.
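The assembly of the two optional prompt parts can be isolated from Spring. In this sketch, Source is a hypothetical, trimmed-down stand-in for the GithubSource record and PromptParts is not a project class; the joining and the 16k filter follow generateTest(...), with String.join used as a shorter equivalent of Collectors.joining.

```java
import java.util.List;
import java.util.Optional;
import java.util.stream.Collectors;

// Sketch of how generateTest(...) turns the collected sources into prompt strings.
// Source is a hypothetical, trimmed-down stand-in for the GithubSource record.
final class PromptParts {

    record Source(String sourceName, List<String> lines) {
    }

    private static final String NL = System.lineSeparator();

    private PromptParts() {
    }

    // Empty unless the context window has at least 16k tokens.
    static String contextClasses(List<Source> dependencies, long contextWindowSize) {
        return dependencies.stream()
                .filter(x -> contextWindowSize >= 16 * 1024)
                .map(dep -> dep.sourceName() + ":" + NL + String.join(NL, dep.lines()))
                .collect(Collectors.joining(NL));
    }

    // Empty if no example test was loaded (sourceName is null).
    static String testExample(Source testSource) {
        return Optional.ofNullable(testSource.sourceName())
                .map(x -> "Use this as test example class:" + NL + String.join(NL, testSource.lines()))
                .orElse("");
    }
}
```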

The chatClient is called to send the prompt to the AI/LLM. The prompt is selected based on the size of the context window in the contextWindowSize property. The PromptTemplate replaces the placeholders with the prepared strings.

The response is used to log the number of prompt tokens, generation tokens, and total tokens, to check whether the context window limit was respected. Then the time to generate the test source is logged and the test source is returned. If generating the test source took 5 minutes or more, the test source is also logged as protection against browser timeouts.
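The logged token counts and duration can also be turned into explicit checks. These helpers are hypothetical (GenerationChecks is not part of the project); they compare prompt plus generation tokens against num-ctx and, as a variant of the epoch-second comparison in generateTest(...), use Duration for the 300-second threshold.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical helpers mirroring the checks around the LLM call in generateTest(...).
final class GenerationChecks {

    // Same 300-second threshold as in generateTest(...).
    static final Duration LOG_RESULT_AFTER = Duration.ofSeconds(300);

    private GenerationChecks() {
    }

    // True if prompt and generation together stayed inside the configured num-ctx.
    static boolean withinContextWindow(long promptTokens, long generationTokens, long numCtx) {
        return promptTokens + generationTokens <= numCtx;
    }

    // True if the generation took long enough that the result should also be logged.
    static boolean shouldLogResult(Instant start, Instant end) {
        return Duration.between(start, end).compareTo(LOG_RESULT_AFTER) >= 0;
    }
}
```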

Conclusion

All three models have been tested to generate Spring Controller tests and Spring service tests. The test URLs were:

http://localhost:8080/rest/code-generation/test?url=https://github.com/Angular2Guy/MovieManager/blob/master/backend/src/main/java/ch/xxx/moviemanager/adapter/controller/ActorController.java&testUrl=https://github.com/Angular2Guy/MovieManager/blob/master/backend/src/test/java/ch/xxx/moviemanager/adapter/controller/MovieControllerTest.java

http://localhost:8080/rest/code-generation/test?url=https://github.com/Angular2Guy/MovieManager/blob/master/backend/src/main/java/ch/xxx/moviemanager/usecase/service/ActorService.java&testUrl=https://github.com/Angular2Guy/MovieManager/blob/master/backend/src/test/java/ch/xxx/moviemanager/usecase/service/MovieServiceTest.java

The granite-code:20b LLM on Ollama has a context window of 8k tokens. That is too small to provide the contextClasses and still have enough tokens left for a response. That means the LLM only had the class under test and the test example to work with.

The deepseek-coder-v2:16b and codestral:22b LLMs on Ollama have a context window of at least 32k tokens. That made it possible to add the contextClasses to the prompt, and these models can work with chain-of-thought prompts.
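The test requests above can also be sent from plain Java instead of a browser. This sketch uses the JDK HttpClient; the class TestGenerationClient and the 45-minute timeout are assumptions, the timeout chosen because the Codestral generation took more than half an hour. The parameters are URL-encoded here, and the URLDecoder calls in the controller undo that encoding.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

// Hypothetical client for the /rest/code-generation/test endpoint.
final class TestGenerationClient {

    private TestGenerationClient() {
    }

    // Builds the request URI with URL-encoded parameters.
    static URI testUri(String host, String url, String testUrl) {
        return URI.create(host + "/rest/code-generation/test?url="
                + URLEncoder.encode(url, StandardCharsets.UTF_8)
                + "&testUrl=" + URLEncoder.encode(testUrl, StandardCharsets.UTF_8));
    }

    // Sends the request with a generous timeout, since generation on a laptop CPU can be slow.
    static String generate(URI uri) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .timeout(Duration.ofMinutes(45))
                .GET()
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        String base = "https://github.com/Angular2Guy/MovieManager/blob/master/backend/src/";
        URI uri = testUri("http://localhost:8080",
                base + "main/java/ch/xxx/moviemanager/usecase/service/ActorService.java",
                base + "test/java/ch/xxx/moviemanager/usecase/service/MovieServiceTest.java");
        System.out.println(generate(uri));
    }
}
```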

Results

The Granite-Code LLM was able to generate a buggy but useful basis for a Spring service test. None of the tests worked, but the missing parts could be explained by the missing context classes. The Spring Controller test was not as good: it missed too much code to be useful as a basis. The test generation took more than 10 minutes on a medium-power laptop CPU.

The Deepseek-Coder-V2 LLM was able to create a Spring service test with the majority of the tests working. That was a good basis to work with and the missing parts were easy to fix. The Spring Controller test had more bugs but was a useful basis to start from. The test generation took less than ten minutes on a medium-power laptop CPU.

The Codestral LLM was able to create a Spring service test with only one failing test. That more complicated test needed some fixes. The Spring Controller test also had only one failing test case, but that was because a configuration call was missing, which let the tests succeed without doing the actual testing. Both generated tests were a good starting point. The test generation took more than half an hour on a medium-power laptop CPU.

Opinion

The Deepseek-Coder-V2 and Codestral LLMs can help with writing tests for Spring applications. Codestral is the better model but needs significantly more processing power and memory. For productive use, both models need GPU acceleration. The LLMs are not able to create non-trivial code correctly, even with context classes available. The help an LLM can provide is very limited because LLMs do not understand the code. Code is just characters for an LLM, and without an understanding of the language syntax, the results are not impressive. The developer has to be able to fix all the bugs in the tests. That means it just saves some time typing the tests.

The experience with GitHub Copilot is similar to the Granite-Code LLM. As of September 2024, the context window is too small to do good code generation and the code completion suggestions need to be ignored too often.

Is an LLM a help -> yes.

Is the LLM a large timesaver -> no.

Published at DZone with permission of Sven Loesekann. See the original article here.

