About Kubernetes

Kubernetes is a distributed cluster technology that manages a container-based system in a declarative manner using an API. Kubernetes is an open-source project governed by the Cloud Native Computing Foundation (CNCF) and counts all the largest cloud providers and software vendors as its contributors, along with a long list of community supporters.

Many learning resources cover the fundamentals of Kubernetes, but far less information is available on how to manage Kubernetes infrastructure on an ongoing basis. This Refcard aims to deliver quickly accessible information for operators using any Kubernetes product.

For an organization to deliver and manage Kubernetes clusters for every line of business and developer group, operations teams need to architect and manage both the core Kubernetes container orchestration and the necessary auxiliary solutions (platform applications), such as monitoring, logging, and CI/CD pipelines. The CNCF maps out many of these solutions and groups them by category in the “CNCF Landscape.”

The widespread adoption of cloud-native Kubernetes is reflected in these Gartner predictions:

“By 2025, Gartner estimates that over 95% of new digital workloads will be deployed on cloud-native platforms, up from 30% in 2021.”

“More than 85% of organizations will embrace a cloud-first principle by 2025 and will not be able to fully execute on their digital strategies without the use of cloud-native architectures and technologies.”

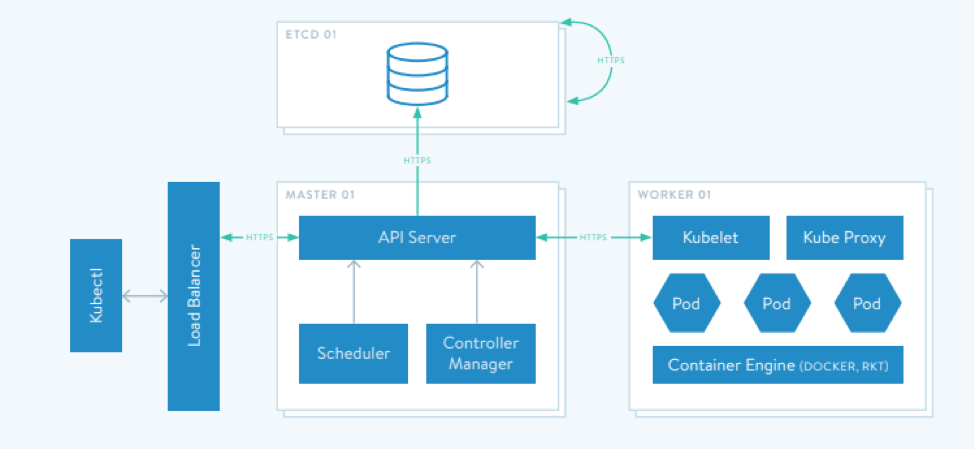

Kubernetes differs from the orchestration offered by configuration management solutions in that it provides a declarative API that collects, stores, and reconciles desired state against observed state in an eventually consistent manner.
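The reconciliation idea can be illustrated with a minimal sketch. This is not Kubernetes code: the `Action` type, the `reconcile`, `apply_actions`, and `converge` functions, and the use of plain replica counts as "state" are all hypothetical names chosen for illustration. The sketch only shows the pattern of comparing desired state with observed state and repeating until they match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """One corrective step (hypothetical type, for illustration only)."""
    kind: str  # "create" or "delete"
    name: str  # application the step applies to


def reconcile(desired: dict[str, int], observed: dict[str, int]) -> list[Action]:
    """Compare desired and observed replica counts and return the steps
    needed to close the gap. An empty list means the states match."""
    actions: list[Action] = []
    for app in sorted(set(desired) | set(observed)):
        want = desired.get(app, 0)
        have = observed.get(app, 0)
        if have < want:
            actions += [Action("create", app)] * (want - have)
        elif have > want:
            actions += [Action("delete", app)] * (have - want)
    return actions


def apply_actions(observed: dict[str, int], actions: list[Action]) -> dict[str, int]:
    """Return a new observed state with the actions applied."""
    result = dict(observed)
    for action in actions:
        delta = 1 if action.kind == "create" else -1
        result[action.name] = result.get(action.name, 0) + delta
    # Drop applications that no longer have any replicas.
    return {name: n for name, n in result.items() if n > 0}


def converge(desired: dict[str, int], observed: dict[str, int],
             max_rounds: int = 10) -> dict[str, int]:
    """Run the compare-and-correct loop until nothing is left to do."""
    for _ in range(max_rounds):
        actions = reconcile(desired, observed)
        if not actions:
            break
        observed = apply_actions(observed, actions)
    return observed


if __name__ == "__main__":
    desired_state = {"web": 3, "api": 1}
    observed_state = {"web": 1, "cache": 2}
    print(converge(desired_state, observed_state))
```

The caller only states what should exist. The loop works out which replicas to create or delete, which is the sense in which the API is declarative rather than imperative.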

A few traits of Kubernetes include:

- Abstraction – Kubernetes abstracts application orchestration from the underlying infrastructure resources and as-a-service automation. This allows organizations to manage an application at scale and in a highly available manner through the Kubernetes APIs, instead of working directly with the infrastructure underneath.

- Declarative – Kubernetes’ control plane decides how the hosted application is deployed and scaled on the underlying fabric. A user simply defines the logical configuration of the Kubernetes object, and the control plane takes care of the implementation.

- Immutable – A new version of a service running on Kubernetes is deployed as a completely new set of objects rather than modified in place. For example, a new version of a pod is introduced by creating new pods, not by changing the existing ones.
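The immutable trait above can be sketched as a replace-instead-of-modify rollout. The `Pod` class, `new_pod`, and `roll_out` are hypothetical names for this illustration and do not correspond to the Kubernetes API; the image tags are made up. The point is only that an update produces new objects with new identities while the old objects are retired unchanged.

```python
from dataclasses import dataclass
from itertools import count

_next_uid = count(1)  # simple source of unique identities for the sketch


@dataclass(frozen=True)
class Pod:
    """A stand-in for an immutable workload object (hypothetical type)."""
    uid: int
    image: str


def new_pod(image: str) -> Pod:
    """Create a pod with a fresh identity."""
    return Pod(uid=next(_next_uid), image=image)


def roll_out(pods: list[Pod], image: str) -> list[Pod]:
    """Replace outdated pods one at a time: create the new pod first,
    then retire the old one. No existing pod is ever modified."""
    current = list(pods)
    for old in pods:
        if old.image == image:
            continue  # already at the requested version
        current.append(new_pod(image))
        current.remove(old)
    return current


if __name__ == "__main__":
    v1 = [new_pod("app:v1") for _ in range(3)]
    v2 = roll_out(v1, "app:v2")
    print([p.image for p in v2])
    print({p.uid for p in v1} & {p.uid for p in v2})  # no shared identities
```

Because `Pod` is a frozen dataclass, any attempt to assign to `pod.image` raises an error, so the only way to change the running version in this sketch is to create replacements.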
