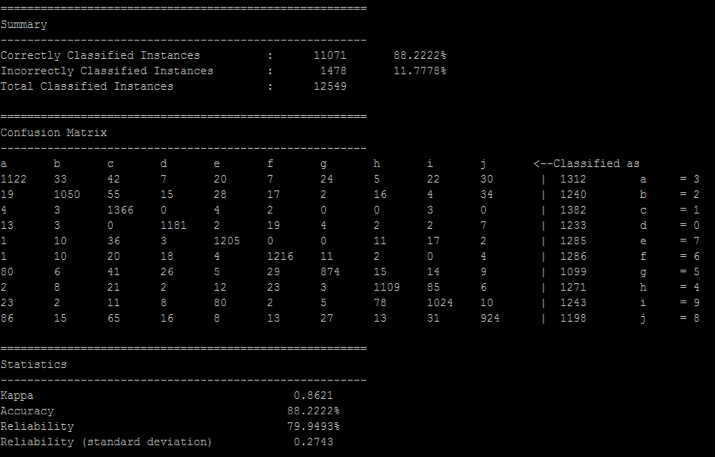

We can use Mahout to recognize handwritten digits (a multi-class classification problem). In our example, the features (input variables) are pixels of an image, and the target value (output) will be a numeric digit—0, 1, 2, 3, 4, 5, 6, 7, 8, or 9.
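To make the feature/target framing concrete, here is a minimal Python sketch of how one image could become one training example. It is only an illustration, not Mahout's own input format: the helper name `image_to_example`, the 3x3 image, and the scaling of pixel intensities from 0-255 to 0.0-1.0 are all assumptions made for this example.

```python
from typing import List, Tuple


def image_to_example(pixels: List[List[int]], digit: int) -> Tuple[List[float], int]:
    """Flatten a 2-D grayscale image into a feature vector and pair it with its label."""
    if not 0 <= digit <= 9:
        raise ValueError("digit must be between 0 and 9")
    # Row-major flattening: each pixel becomes one input feature.
    # Scaling 0-255 intensities to 0.0-1.0 is an assumed convention.
    features = [value / 255.0 for row in pixels for value in row]
    return features, digit


# Hypothetical 3x3 image that loosely resembles the digit 1.
tiny_image = [
    [0, 255, 0],
    [0, 255, 0],
    [0, 255, 0],
]

features, target = image_to_example(tiny_image, 1)
print(len(features), target)  # prints "9 1"
```

A real digit image would simply produce a longer feature vector (one entry per pixel), while the target stays a single value from 0 to 9.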

Creating a Cluster Using Amazon Elastic MapReduce (EMR)

While Amazon Elastic MapReduce is not free to use, it will allow you to set up a Hadoop cluster with minimal effort and expense. To begin, create an Amazon Web Services account and follow these steps:

Create a Key Pair

- Go to the Amazon AWS Console and log in.

- Select the “EC2” tab.

- Select “Key Pairs” in the left sidebar.

If you don’t already have a key pair, create one here. Once your key pair is created, download the .pem file.

Note: If you have created a key pair but don’t see it, you may have the wrong region selected.

Configure a cluster

- Go to the Amazon AWS Console and log in.

- Select the “Elastic MapReduce” tab.

- Press “Create Cluster”.

- Fill in the following data:

| Setting | Value |
| --- | --- |
| **Cluster configuration** | |
| Termination protection | No |
| Logging | Disabled |
| Debugging | Disabled |
| Tags | Empty |
| **Software Configuration** | |
| Hadoop distribution | Amazon AMI version 3.3.2 |
| Additional applications | Empty |
| **Hardware Configuration** | |
| Network | Use default setting |
| EC2 availability zone | Use default setting |
| Master/Core/Task | Use default setting or change to your own preference (selecting more machines makes it more expensive) |
| **Security and Access** | |
| EC2 key pairs | Select your key pair (you’ll need this for SSH access) |
| IAM user access | No other IAM users |
| IAM roles | Empty |
| **Bootstrap actions** | Empty |
| **Steps** | |
| Add step | Empty |
| Auto-terminate | No |

- Press “Create Cluster” and the magic starts.

Log Into the Cluster

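When your cluster is ready, you can log in to the master node over SSH as the `hadoop` user with your key pair, for example `ssh -i <path-to-your-key>.pem hadoop@<master-public-ip>` (both values in angle brackets are placeholders for your own .pem file and the master's address).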

You can find the public IP address of your Hadoop cluster master by going to Elastic MapReduce -> Cluster List -> Cluster Details in the AWS Console. Your Mahout home directory is /home/hadoop/mahout.

Don’t forget to terminate your cluster when you’re done, because Amazon will charge for idle time.

Note: If a cluster is terminated, it cannot be restarted; you will have to create a new cluster.