Asynchronous vs Synchronous Programming: When to Use What

In this article, see when you should apply asynchronous programming and when sticking to synchronous execution is the best option.

Asynchronous programming allows you to offload work so that it runs without blocking the main process or thread (for instance, the user can keep navigating and using the app). It's often associated with parallelization, the practice of performing independent tasks in parallel, which asynchronous programming helps you achieve.

With parallelization, you can take work that is normally processed sequentially and break it into smaller pieces that run independently and simultaneously. Parallelization is not just related to processes and capabilities but also to the way systems and software are designed. The biggest advantages of applying parallelization principles are that you achieve outcomes much faster and that your system becomes easier to evolve and more resilient to failure.

Pretty cool, right? However, not all processes should follow parallelization principles and execute asynchronously. In this blog post, I'll explain when you should apply asynchronous programming and when sticking to synchronous execution is the best option.

Asynchronous vs Synchronous Programming

Before we jump into the juicy stuff, let's start by clarifying the difference between asynchronous and synchronous programming.

In synchronous operations, tasks are performed one at a time, and the next task is unblocked only when the current one completes. In other words, you need to wait for a task to finish before moving to the next one.

In asynchronous operations, on the other hand, you can move to another task before the previous one finishes. This way, with asynchronous programming you're able to deal with multiple requests simultaneously, thus completing more tasks in a much shorter period of time.
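To make the difference concrete, here is a minimal Python sketch using the standard `asyncio` module. The task names, the delays, and the helper functions are hypothetical and only stand in for real work such as a network call.

```python
import asyncio


async def task(name: str, seconds: float, log: list) -> None:
    """Hypothetical task that waits instead of doing real work."""
    log.append(f"start {name}")
    await asyncio.sleep(seconds)  # stands in for I/O such as a network call
    log.append(f"end {name}")


async def run_synchronously(log: list) -> None:
    # Each await finishes before the next task starts.
    await task("A", 0.05, log)
    await task("B", 0.01, log)


async def run_asynchronously(log: list) -> None:
    # Both tasks are started before either one finishes.
    await asyncio.gather(task("A", 0.05, log), task("B", 0.01, log))


sync_log: list = []
asyncio.run(run_synchronously(sync_log))

async_log: list = []
asyncio.run(run_asynchronously(async_log))
```

In the first log, task B only starts after task A ends. In the second, B starts while A is still waiting and finishes first. Note that `asyncio` interleaves tasks on a single thread, so this illustrates non-blocking execution rather than true multi-core parallelism.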

When to Use Asynchronous Programming

As I said in the beginning, asynchronous execution is not the best option for all use cases. You should only use it if you're dealing with independent tasks. So, when you're designing a system, the first step is to identify the dependencies between processes and define which ones you can execute independently and which ones need to be executed as a consequence of other processes.

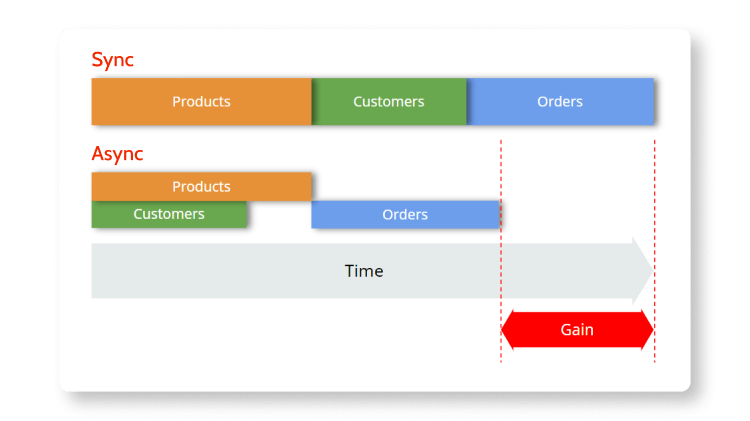

Take a look at the image above. On the top, you can see that in synchronous execution, the tasks are executed in a sequential way; the products are the first to be executed, then customers, and finally orders.

Now imagine that you did an analysis and concluded that customers are independent of products, and vice-versa, but that to execute orders, you need the information from products first — there's a dependency. In that case, the first two tasks can be executed asynchronously, but orders will only be executed when products are completed — so, synchronously.

As a result, by applying parallel computing and asynchronous programming to independent tasks, you're able to perform them much faster than with synchronous execution because they're executed at the same time. This way, your system releases valuable resources earlier and is ready to execute other processes that are queued.
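The products, customers, and orders scenario can be sketched in Python as follows. This is only an illustration under assumed names and delays: `load` is a hypothetical stand-in for the real work, and the timings are arbitrary.

```python
import asyncio


async def load(name: str, seconds: float, events: list) -> None:
    """Hypothetical stand-in for loading one kind of data."""
    events.append(f"{name} started")
    await asyncio.sleep(seconds)
    events.append(f"{name} finished")


async def products_then_orders(events: list) -> None:
    # Orders depend on products, so these two run one after the other.
    await load("products", 0.01, events)
    await load("orders", 0.01, events)


async def main(events: list) -> None:
    # Customers are independent, so they run alongside the products/orders chain.
    await asyncio.gather(
        products_then_orders(events),
        load("customers", 0.05, events),
    )


events: list = []
asyncio.run(main(events))
```

Customers start before products finish, while orders never start until products have finished.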

How to Design a System that Runs Asynchronously

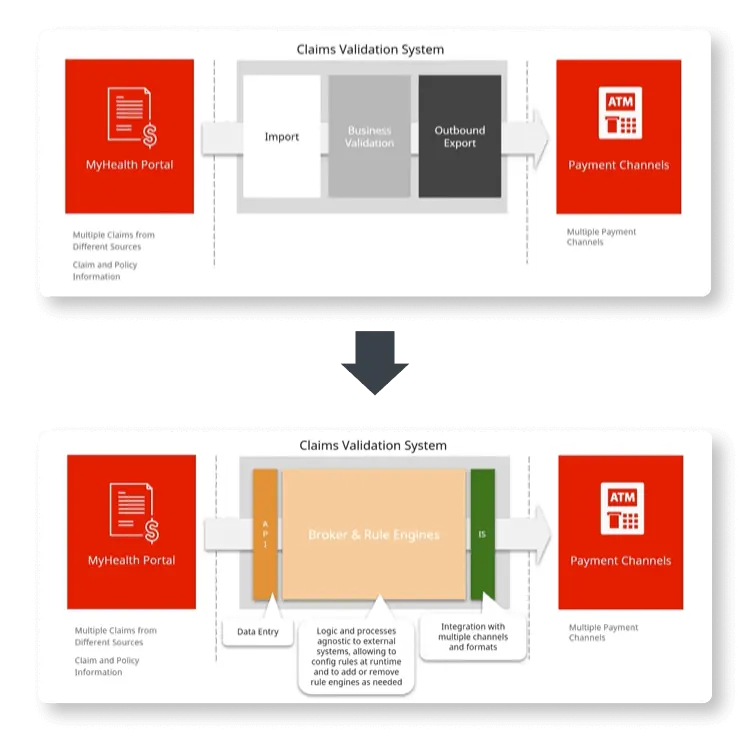

To better illustrate how to design a system that follows asynchronous programming principles, let's try to do it using a typical claims processing portal. Something like this:

In this challenge, we have the portal that policyholders or other entities use to insert and manage claim information. This portal communicates with a claims validation system through an API. The validation system imports data into a business validation engine that includes a broker and business rules, that is, processes and logic that are agnostic to external systems. Finally, this system integrates with payment channels, to which it exports the outcomes of the business validation.

Now, I work with OutSystems, so I'm going to show you how to implement a system that can run asynchronous processing using the OutSystems platform. But if you're not an OutSystems developer or architect, don't run away just yet; you will see how I automated parallel asynchronous processes in this scenario, and you can adapt the approach to your preferred technology.

Before we get our hands dirty (I promise, we're almost there), I need to clarify some of the OutSystems terms and capabilities I'll be using. If you're already familiar with them, or you simply want to see our proposed architecture to solve this challenge, you can skip the next section.

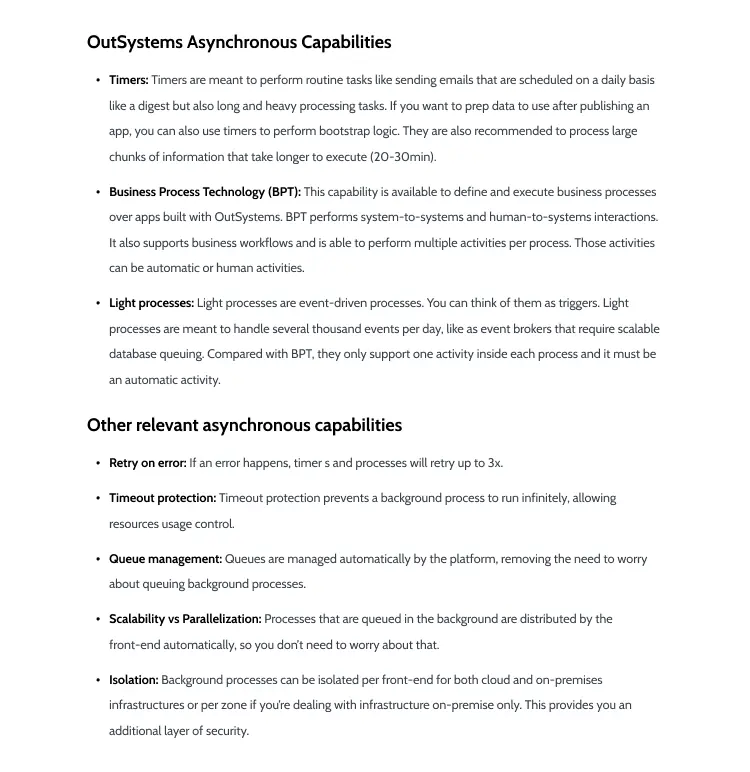

OutSystems Asynchronous Capabilities

OutSystems is a modern, AI-powered application platform that offers several built-in asynchronous capabilities:

- Timers: Timers run scheduled, routine tasks, such as sending a daily email digest, as well as long and heavy processing tasks. You can use them to run bootstrap logic that prepares data after an app is published, and they are the recommended option for processing large chunks of information that take a while to execute (20-30 minutes).

- Business Process Technology (BPT): This capability lets you define and execute business processes over apps built with OutSystems. BPT handles system-to-system and human-to-system interactions. It also supports business workflows and can perform multiple activities per process. Those activities can be automatic or human activities.

- Light Processes: Light processes are event-driven processes; you can think of them as triggers. They are meant to handle several thousand events per day, for example acting as event brokers that require scalable database queuing. Unlike BPT, they support only one activity per process, and it must be an automatic activity.

In addition to that, there are a few other capabilities important to note:

- Retry on error: If an error happens, timers and processes will retry up to three times.

- Timeout protection: Timeout protection prevents a background process from running indefinitely, which keeps resource usage under control.

- Queue management: Queues are managed automatically by the platform, removing the need to worry about queuing background processes.

- Scalability vs parallelization: Processes that are queued in the background are distributed across the front-end servers automatically, so you don't need to worry about that.

- Isolation: Background processes can be isolated per front-end for both cloud and on-premises infrastructures, or per zone if you're dealing with on-premises infrastructure only. This gives you an additional layer of security.
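To illustrate the general idea behind retry on error, here is a generic Python sketch. It is not OutSystems code or its API; the function name, the `flaky_task` example, and the reading of "up to three times" as one initial attempt plus three retries are all assumptions made for illustration.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], max_retries: int = 3) -> T:
    """Run task once, then retry up to max_retries times if it raises."""
    last_error: Exception = RuntimeError("task was never attempted")
    for _ in range(1 + max_retries):
        try:
            return task()
        except Exception as error:  # a real system would catch narrower errors
            last_error = error
    raise last_error


attempts: list = []


def flaky_task() -> str:
    # Hypothetical task that fails twice before succeeding.
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("temporary failure")
    return "done"


result = run_with_retries(flaky_task)
```

Here the task succeeds on its third attempt, so the caller never sees the two temporary failures.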

Automating Parallel Asynchronous Processes of a Claims Processing Portal: Suggested Architecture

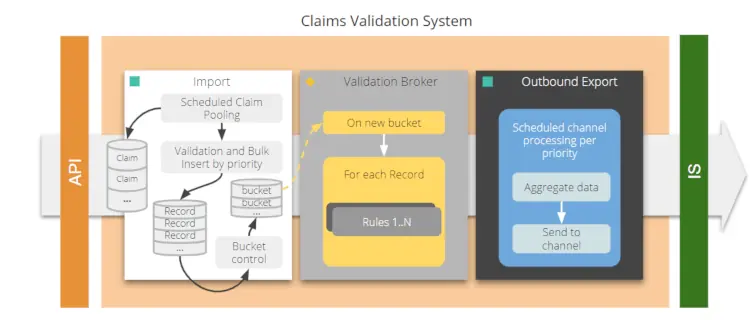

Let's look at the claims validation system in greater detail:

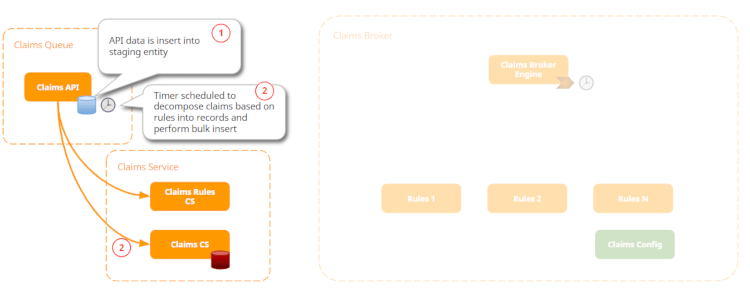

The API is going to insert each of the claims into a staging entity. Then a scheduled timer is going to look into those claims. Note that each claim is a structure that can have multiple records inside. So, the timer is going to validate and decompose those claims and records and perform a Bulk Insert into the business entities for the claims.

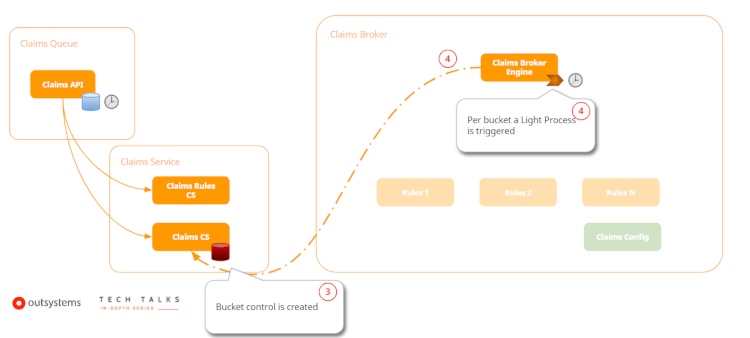

Now, we want to optimize our resources as much as we can. For that reason, we're not going to process each record as soon as it's inserted into the table. We also want to avoid the overhead of starting a validation process for every record, so we're going to use a bucket control: a record that specifies the first and last record of an interval. Each bucket is therefore an interval of records and claims that will be processed together.
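As a rough illustration of bucket control, the Python sketch below splits a range of record IDs into intervals. It assumes record IDs are sequential integers; `Bucket` and `make_buckets` are hypothetical names, not part of any platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bucket:
    """One bucket control record: an inclusive interval of record IDs."""
    first_id: int
    last_id: int


def make_buckets(first_id: int, last_id: int, bucket_size: int) -> list:
    """Split the inclusive ID range [first_id, last_id] into buckets."""
    if bucket_size <= 0:
        raise ValueError("bucket_size must be positive")
    buckets = []
    start = first_id
    while start <= last_id:
        # The last bucket may be smaller than bucket_size.
        end = min(start + bucket_size - 1, last_id)
        buckets.append(Bucket(start, end))
        start = end + 1
    return buckets


buckets = make_buckets(first_id=1, last_id=10, bucket_size=4)
```

With ten records and a bucket size of four, this produces three buckets covering records 1 to 4, 5 to 8, and 9 to 10, so only three processes need to be started instead of ten.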

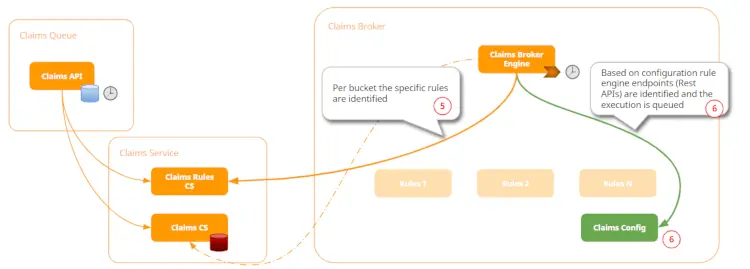

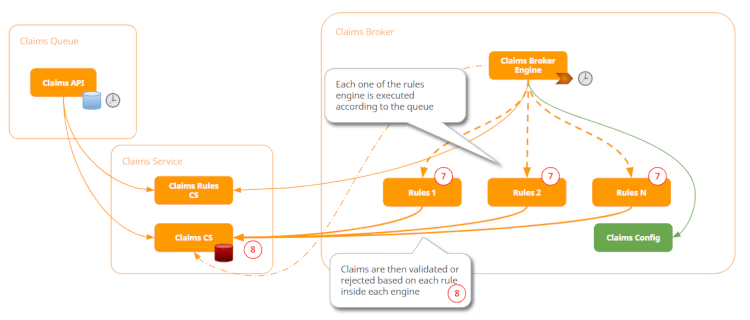

With that, for each bucket record, we're going to trigger a light process that will process each of the records inside the bucket. By processing, I mean applying the rules. These rules are implemented as pluggable engines, so they can be added to the system at runtime.

Once a record is processed, if it's considered valid according to the business rules, it will be set as such and a timer will aggregate the data and send it to the channel accordingly.

That said, here's our proposed architecture:

First, the Claims API will collect all the data that is imported as JSON or XML. Each item is then inserted into the staging entity. The goal here is to speed up the data insertion and prevent losing data.

Once the data is inserted, a timer is launched on a scheduled basis. The purpose of this timer is to decompose claims based on predefined Claims Rules and perform the Bulk Insert. Based on those rules, the claims will be inserted in the Claims Service.

The number of records in each bucket should be the number of records you can process within a three-minute limit. At this point, because each bucket holds a large number of claims, you should run only one light process at a time rather than rely on spare parallel capacity.
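A back-of-the-envelope way to size a bucket against the three-minute limit is sketched below in Python. The per-record processing time and the safety margin are assumptions you would have to measure and tune yourself; they are not figures from this scenario.

```python
TIME_LIMIT_MS = 3 * 60 * 1000  # the three-minute limit, in milliseconds


def max_bucket_size(ms_per_record: int, safety_percent: int = 80) -> int:
    """Records that fit in the time limit, keeping some headroom.

    ms_per_record is a measured average (assumed here), and safety_percent
    is a hypothetical margin so a slow run does not hit the timeout.
    """
    if ms_per_record <= 0:
        raise ValueError("ms_per_record must be positive")
    usable_ms = TIME_LIMIT_MS * safety_percent // 100
    return max(1, usable_ms // ms_per_record)


bucket_size = max_bucket_size(ms_per_record=50)
```

With an assumed 50 ms per record and 80% of the limit usable, a bucket can hold 2,880 records. If validation later slows down to 100 ms per record, the same calculation halves the bucket size, which is one reason to keep this value configurable rather than hard-coded.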

Once the bucket control record is created, a light process is triggered, and that trigger executes the processing.

So, the Claims Broker Engine is going to get the rules that were predefined in the Claims Rules. Based on the configuration, the rule engine endpoints are identified and the execution is queued. These endpoints are REST APIs, because we want to be able to plug and play rule engines in our system.

Once we have all the rule engines that need to run for that particular type of claim, along with their endpoints, we call them sequentially, applying those rules on top of the claims data.

As a result of each rule, a claim will be considered "valid" and thus proceed to the next step, or "not valid" and, in that case, rejected by the system.
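The sequential rule execution can be sketched in Python as follows. In the architecture described here the rule engines are REST endpoints; this sketch replaces them with local functions so it can run on its own. The rule names, the claim fields, and the `RULES_BY_CLAIM_TYPE` registry are all hypothetical.

```python
from typing import Callable, Dict, List

Claim = dict
Rule = Callable[[Claim], bool]


def has_policy_number(claim: Claim) -> bool:
    return bool(claim.get("policy_number"))


def amount_within_limit(claim: Claim) -> bool:
    return 0 < claim.get("amount", 0) <= 10_000


# Hypothetical configuration: which rules apply to which claim type.
# New rules can be plugged in by registering them here.
RULES_BY_CLAIM_TYPE: Dict[str, List[Rule]] = {
    "auto": [has_policy_number, amount_within_limit],
}


def validate(claim: Claim) -> str:
    """Apply the configured rules in order; stop at the first failure."""
    rules = RULES_BY_CLAIM_TYPE[claim["type"]]  # KeyError for unknown types
    for rule in rules:
        if not rule(claim):
            return "not valid"
    return "valid"


accepted = validate({"type": "auto", "policy_number": "P-1", "amount": 500})
rejected = validate({"type": "auto", "policy_number": "P-2", "amount": 50_000})
```

The first claim passes both rules and is marked "valid"; the second fails the amount rule and is marked "not valid".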

Finally, the system will have a timer that, based on priority, gets all the valid claims, aggregates them, and sends them to the payment channels.

You can also make the prioritization rules more complex. For example, you may want certain claims to be sent as soon as possible, right after the system validates them and they're ready to be paid. Other claims can be defined as low-priority and left for the timer to process.
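A simple version of this prioritization could look like the Python sketch below. The `priority` field, the function names, and the in-memory queue are assumptions for illustration; `sent_batches` stands in for the payment channel.

```python
from collections import deque


def dispatch_valid_claim(claim: dict, sent_batches: list, timer_queue: deque) -> None:
    """Send high-priority claims right away; queue the rest for the timer."""
    if claim["priority"] == "high":
        sent_batches.append([claim["id"]])
    else:
        timer_queue.append(claim)


def run_timer(sent_batches: list, timer_queue: deque) -> None:
    """Aggregate every queued claim into one batch and send it."""
    if timer_queue:
        sent_batches.append([claim["id"] for claim in timer_queue])
        timer_queue.clear()


sent_batches: list = []   # stands in for the payment channel
timer_queue: deque = deque()

for claim in (
    {"id": 1, "priority": "low"},
    {"id": 2, "priority": "high"},
    {"id": 3, "priority": "low"},
):
    dispatch_valid_claim(claim, sent_batches, timer_queue)

run_timer(sent_batches, timer_queue)
```

Claim 2 goes out on its own as soon as it is validated, while claims 1 and 3 wait and are sent together when the timer runs.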

Another benefit of using this type of step-by-step rule in the engine is that the system can also recover from a timeout or even a crash. Imagine that after processing rule one but before executing rule two, there's a timeout or a catastrophic failure in the process and the system needs to recover from it. With this system, each of the claims is available to recover from the exact point where it was before the incident. So, in this case, the claim would recover and execute rule two instead of repeating rule one.
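The recovery behavior can be illustrated with a small Python sketch that records, per claim, which rule runs next. In a real system that checkpoint would be persisted to a database; here it lives in memory, and `ClaimState`, `process`, and the simulated timeout are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClaimState:
    claim_id: int
    next_rule_index: int = 0   # checkpoint: which rule to run next
    status: str = "pending"


def process(state: ClaimState, rules: list) -> None:
    """Run the remaining rules, advancing the checkpoint after each one."""
    while state.next_rule_index < len(rules):
        passed = rules[state.next_rule_index](state)  # may raise
        if not passed:
            state.status = "not valid"
            return
        state.next_rule_index += 1
    state.status = "valid"


executed: list = []
rule_two_calls: list = []


def rule_one(state: ClaimState) -> bool:
    executed.append("rule_one")
    return True


def rule_two(state: ClaimState) -> bool:
    rule_two_calls.append(1)
    if len(rule_two_calls) == 1:
        raise TimeoutError("simulated timeout before rule two completes")
    executed.append("rule_two")
    return True


state = ClaimState(claim_id=1)
try:
    process(state, [rule_one, rule_two])
except TimeoutError:
    pass  # the checkpoint still points at rule two

process(state, [rule_one, rule_two])  # resumes at rule two
```

After the second call, rule one has run exactly once and rule two has completed once, so the claim ends up valid without repeating work.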

Key Takeaways

I hope this article clears up any questions you may have about when to use asynchronous or synchronous programming. To wrap up, here are the key points:

- Use the asynchronous techniques that are most suitable for the outcome you need.

- Scale front-end servers and configurations to fit your needs. Keep in mind that when you go into millions of records, you need more front-end servers to handle the load.

- Design with flexibility in mind and avoid hard-coded values or site properties. Imagine you use hard-coded values for bucket control: if your claims validation process becomes slower without you noticing, you start having timeouts. Now you're in an even worse situation, because you need to publish a change instead of simply adjusting a setting in a back office.

- Don't over-engineer. Try to keep your architecture and system as simple as possible.

If you want to see this scenario in action, you can look at my recent TechTalk, How to Use Asynchronous Techniques in OutSystems. My colleague Davide and I show the solution proposed here, leveraging the OutSystems asynchronous capabilities to foster scalability and resilience to failure and to handle large data volumes.

Published at DZone with permission of Ricardo Costa. See the original article here.
