Automate Azure Databricks Unity Catalog Permissions at the Catalog Level

A detailed script for automating permission management in Databricks Unity Catalog at the catalog level.


Disclaimer: All the views and opinions expressed in the blog belong solely to the author and not necessarily to the author's employer or any other group or individual. This article is not a promotion for any cloud/data management platform. All the images and code snippets are publicly available on the Azure/Databricks website.

What Is Unity Catalog in Databricks?

Databricks Unity Catalog is a data cataloging tool that helps manage and organize data across an organization in a simple, secure way. It allows companies to keep track of all their data, making it easier to find, share, and control who can access it. Unity Catalog works across different cloud storage systems and lets teams manage permissions, governance, and data access from one place, ensuring data is used safely and efficiently.

How Privileges Work in the Unity Catalog Hierarchical Model

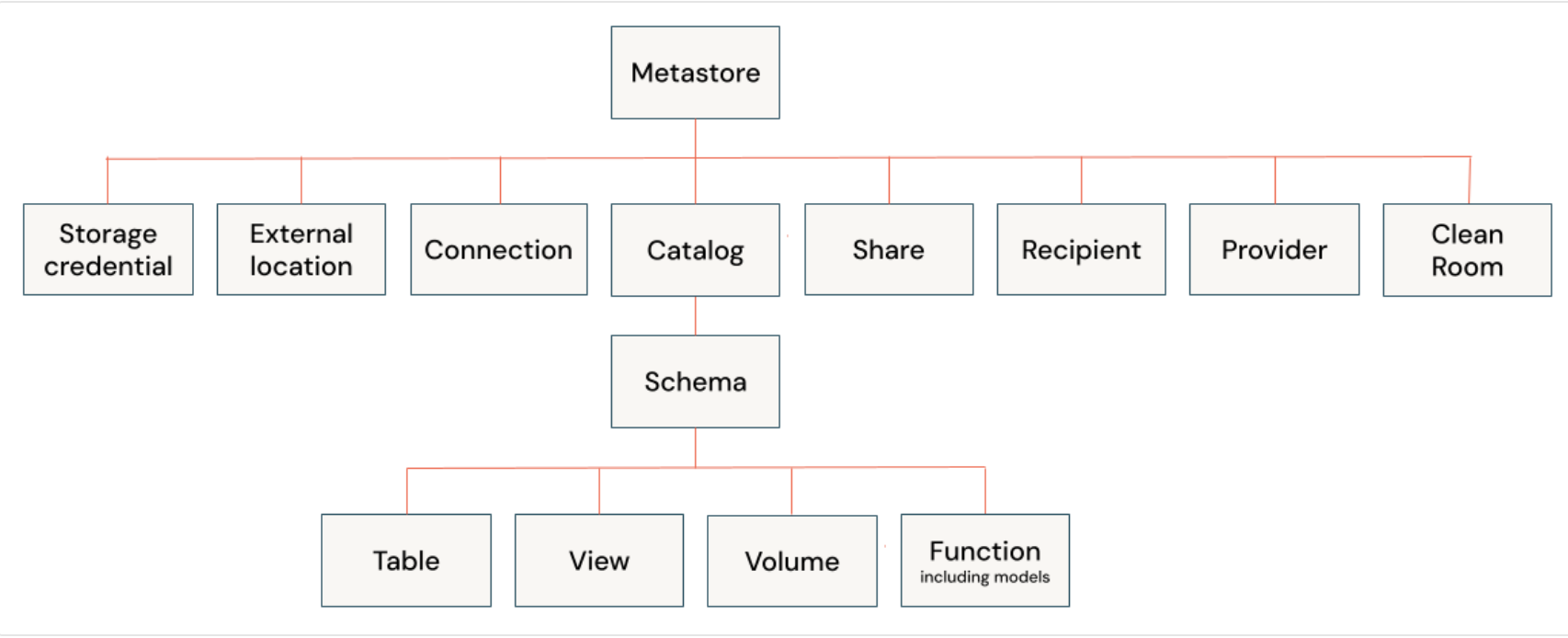

In Unity Catalog, privileges (permissions) work in a hierarchical model, meaning they follow a specific structure from top to bottom. Here's how it works in simple terms:

- At the Top: Metastore — This is the top-level container for all the catalogs, and therefore all the data, registered in Unity Catalog. Metastore admins can manage privileges on every object inside it.

- Next: Catalog — Inside the metastore, there are catalogs. These are smaller containers that group related data. Privileges here control access to everything in that catalog.

- Inside the Catalog: Schema — Each catalog holds one or more schemas. Privileges at this level decide who can access the tables and views within a specific schema.

- At the Bottom: Tables/Views — Inside the schema, there are tables and views, which are the actual data. Privileges here allow control over who can read or modify specific pieces of data.

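Privileges flow downward: a privilege granted on a catalog is inherited by all current and future schemas and tables/views within it. For example, granting SELECT on a catalog lets a principal read every table in that catalog, provided the principal also holds USE CATALOG and USE SCHEMA. Inheritance is additive: grants at lower levels can add privileges, but an inherited privilege cannot be revoked or denied at a lower level; it has to be revoked on the object where it was granted.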
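To make the inheritance rule concrete, here is a minimal Python model of it. This is a hypothetical illustration, not a Databricks API: grants are stored in a plain dictionary keyed by securable path (catalog, then schema, then table), and the catalog, schema, table, and group names are made up. A principal's effective privileges on an object are the union of what was granted on that object and on each of its parents.

```python
from typing import Dict, Set, Tuple

# Hypothetical model: keys are securable paths, values map principal -> granted privileges.
# ("sales",) is a catalog, ("sales", "emea") a schema, ("sales", "emea", "orders") a table.
Grants = Dict[Tuple[str, ...], Dict[str, Set[str]]]


def effective_privileges(grants: Grants, path: Tuple[str, ...], principal: str) -> Set[str]:
    """Union of privileges granted to `principal` on `path` and on all of its ancestors."""
    result: Set[str] = set()
    for depth in range(1, len(path) + 1):
        ancestor = path[:depth]  # catalog, then catalog.schema, then catalog.schema.table
        result |= grants.get(ancestor, {}).get(principal, set())
    return result


grants: Grants = {
    # Catalog-level grant: inherited by every schema and table in "sales".
    ("sales",): {"analysts": {"USE CATALOG", "USE SCHEMA", "SELECT"}},
    # Table-level grant: adds MODIFY on one table only.
    ("sales", "emea", "orders"): {"analysts": {"MODIFY"}},
}

print(sorted(effective_privileges(grants, ("sales", "emea", "orders"), "analysts")))
# ['MODIFY', 'SELECT', 'USE CATALOG', 'USE SCHEMA']
print(sorted(effective_privileges(grants, ("sales", "apac", "leads"), "analysts")))
# ['SELECT', 'USE CATALOG', 'USE SCHEMA']
```

Because the model only ever takes a union, there is no way for a lower-level entry to remove an inherited privilege, which mirrors the additive behavior described above.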

When to Set Privileges at the Catalog Level

Set privileges at the catalog level when you want to control access to multiple schemas and tables/views within that catalog at once. This is useful in several situations:

- Broad Access Control: If you want to give users or teams access to all the schemas and data inside a catalog, setting privileges at the catalog level saves time. For example, granting a data analyst team access to all sales-related data in one go.

- Consistent Permissions: When you need to ensure that everyone with access to the catalog has consistent permissions across its schema(s) and tables. This is helpful for keeping things organized and avoiding mismatches in access rules.

- Ease of Management: If you have many schemas under the same catalog and you don’t want to manage permissions for each one individually, setting privileges at the catalog level simplifies permission management.

- Department/Team-Based Access: If a catalog represents a specific department (like Marketing or Finance), you can set privileges at the catalog level to give that department access to all relevant data without managing each schema separately.
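A department-based layout can be described as data and turned into GRANT statements. The sketch below assumes hypothetical catalog names (`marketing`, `finance`) and group names; it relies on the fact that a single Databricks SQL `GRANT` can list several comma-separated privileges, so each group gets one statement per catalog.

```python
from typing import Dict, List

# Hypothetical mapping: catalog -> {principal: [privileges]}. Names are examples only.
DEPARTMENT_ACCESS: Dict[str, Dict[str, List[str]]] = {
    "marketing": {"marketing_analysts": ["USE CATALOG", "USE SCHEMA", "SELECT"]},
    "finance": {
        "finance_analysts": ["USE CATALOG", "USE SCHEMA", "SELECT"],
        "finance_engineers": ["USE CATALOG", "USE SCHEMA", "SELECT", "MODIFY"],
    },
}


def catalog_grant_statements(mapping: Dict[str, Dict[str, List[str]]]) -> List[str]:
    """Build one GRANT per (catalog, principal), listing all its privileges in one statement."""
    statements = []
    for catalog, principals in mapping.items():
        for principal, privileges in principals.items():
            # Privileges are keywords (unquoted); catalog and principal are quoted identifiers.
            statements.append(
                f"GRANT {', '.join(privileges)} ON CATALOG `{catalog}` TO `{principal}`"
            )
    return statements


for stmt in catalog_grant_statements(DEPARTMENT_ACCESS):
    print(stmt)
```

In a Databricks notebook, each generated statement could then be executed with `spark.sql(stmt)`.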

Why We Need to Automate Unity Catalog Privileges

Automating Unity Catalog privileges is important for several key reasons:

- Consistency: Automation ensures that privileges are applied in a standardized and consistent way across the organization, reducing the risk of manual errors like granting too much or too little access.

- Scalability: As data and teams grow, manually managing privileges becomes overwhelming. Automation allows you to scale your data governance efficiently by handling privilege assignments for large numbers of users, schemas, and catalogs.

- Time Efficiency: Automating the process saves significant time and effort compared to manually setting privileges for each user or resource. This is especially useful when there are frequent changes to user roles or data structures.

- Compliance and Auditing: Automated privilege management helps keep access aligned with policies and regulations. When the grant scripts are kept under version control, they also provide a record of what access was granted and when, which makes it easier to comply with legal requirements by ensuring that sensitive data is only accessed by authorized users.

- Reduced Risk of Human Error: Manually assigning privileges increases the likelihood of mistakes that could expose sensitive data or lock out important users. Automation minimizes this risk by ensuring accurate and appropriate access.
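One way to get the consistency and audit benefits listed above is to treat the desired grants as the source of truth and compute only the changes needed. The sketch below is an illustrative approach rather than a built-in Databricks feature: the desired and current grant sets are hypothetical inputs (collecting the current grants is left out), and the function returns the `GRANT` and `REVOKE` statements that would bring the catalog in line.

```python
from typing import List, Set, Tuple

Grant = Tuple[str, str]  # (principal, privilege)


def plan_changes(catalog: str, desired: Set[Grant], current: Set[Grant]) -> List[str]:
    """Return GRANTs for pairs that are missing and REVOKEs for pairs that should not exist."""
    plan = []
    for principal, privilege in sorted(desired - current):
        plan.append(f"GRANT {privilege} ON CATALOG `{catalog}` TO `{principal}`")
    for principal, privilege in sorted(current - desired):
        plan.append(f"REVOKE {privilege} ON CATALOG `{catalog}` FROM `{principal}`")
    return plan


# Hypothetical inputs for a catalog named "sales".
desired = {("analysts", "SELECT"), ("analysts", "USE CATALOG"), ("analysts", "USE SCHEMA")}
current = {("analysts", "SELECT"), ("interns", "SELECT")}

for statement in plan_changes("sales", desired, current):
    print(statement)
# GRANT USE CATALOG ON CATALOG `sales` TO `analysts`
# GRANT USE SCHEMA ON CATALOG `sales` TO `analysts`
# REVOKE SELECT ON CATALOG `sales` FROM `interns`
```

Printing or storing the plan before executing it gives reviewers a record of every change, and running the function again once the plan has been applied returns an empty list.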

How the Script Works

Prerequisites

- Unity Catalog is already set up for the workspace

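- The principals (users, groups, or service principals) that will receive the privileges exist in the Databricks workspace

- The user running the notebook is allowed to grant privileges on the target catalog (for example, the catalog owner or a metastore admin)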

Step 1: Declare the Variables

Create a notebook in your Databricks workspace: click + New in the sidebar, and then choose Notebook.

A blank notebook opens in the workspace. Make sure Python is selected as the notebook language and that the notebook is attached to compute with access to Unity Catalog.

Copy the code snippet below into a notebook cell, fill in the values, and run the cell.

```python
catalog = ''  # Specify the catalog name, e.g. 'sales'

# Comma-separated principals (groups, users, or service principals), e.g. 'data-analysts,jane@example.com'
principals_arr = ''
principals = [p.strip() for p in principals_arr.split(',') if p.strip()]

# Comma-separated privileges, e.g. 'SELECT,BROWSE' or 'USE CATALOG,USE SCHEMA,SELECT'
privileges_arr = 'SELECT,BROWSE'
privileges = [p.strip().upper() for p in privileges_arr.split(',') if p.strip()]
```

Step 2: Set the Catalog

Copy, paste, and run the code block below in a new or existing cell.

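```python
# Switch the session to the target catalog (`spark` is predefined in Databricks notebooks).
# This also fails early if the catalog does not exist or is not accessible.
query = f"USE CATALOG `{catalog}`"
spark.sql(query)
```

Step 3: Loop Through the Principals and Privileges and Apply Grant at the Catalog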

Copy, paste, and run the code block below in a new or existing cell.

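```python
# Grant each privilege to each principal on the catalog.
# Privilege names are SQL keywords (e.g. USE CATALOG), so they are not backtick-quoted;
# the catalog and principal names are identifiers, so they are.
for principal in principals:
    for privilege in privileges:
        query = f"GRANT {privilege} ON CATALOG `{catalog}` TO `{principal}`"
        spark.sql(query)
```

Validation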

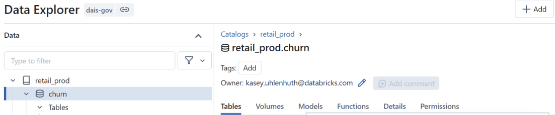

You can validate the privileges in the Databricks UI: click Catalog in the sidebar to open Catalog Explorer, select the catalog, and open the Permissions tab. It lists all the privileges granted on the catalog.
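The same check can be scripted. The helper below is a hypothetical sketch that compares the expected (principal, privilege) pairs from Step 1 with the rows returned by `SHOW GRANTS ON CATALOG`. It assumes the first two columns of that output are the principal and the privilege (action type); verify the column layout in your workspace before relying on it.

```python
from typing import Iterable, List, Set, Tuple


def missing_grants(
    rows: Iterable[Tuple[str, str]], principals: List[str], privileges: List[str]
) -> Set[Tuple[str, str]]:
    """Return the expected (principal, privilege) pairs that do not appear in `rows`."""
    found = {(principal, action.upper()) for principal, action in rows}
    expected = {(p, priv.upper()) for p in principals for priv in privileges}
    return expected - found


# In a Databricks notebook, rows might be collected like this (column order is an assumption):
#   df = spark.sql(f"SHOW GRANTS ON CATALOG `{catalog}`")
#   rows = [(row[0], row[1]) for row in df.collect()]
rows = [("data-analysts", "SELECT"), ("data-analysts", "BROWSE")]
print(sorted(missing_grants(rows, ["data-analysts", "jane@example.com"], ["SELECT", "BROWSE"])))
```

An empty result means every expected grant is present; any remaining pairs point to a principal or privilege that was not applied.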

The screenshot at the start of this section, taken from publicly available Databricks material, shows the Permissions tab for a Unity Catalog schema.
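For repeated use, Steps 1 to 3 can be folded into a single function. This is a sketch, not the author's original script: `ALLOWED_PRIVILEGES` is a hypothetical, non-exhaustive allowlist, and `run_sql` is an assumed parameter that defaults to a dry run. Because privilege names are inserted into the SQL unquoted, validating them against an allowlist guards against typos and malformed input.

```python
from typing import Callable, List, Optional

# Hypothetical allowlist of catalog-level privileges; extend it as needed.
ALLOWED_PRIVILEGES = {
    "ALL PRIVILEGES", "BROWSE", "CREATE SCHEMA", "CREATE TABLE",
    "MODIFY", "SELECT", "USE CATALOG", "USE SCHEMA",
}


def parse_csv(value: str) -> List[str]:
    """Split a comma-separated string, trimming whitespace and dropping empty entries."""
    return [item.strip() for item in value.split(",") if item.strip()]


def grant_on_catalog(
    catalog: str,
    principals_csv: str,
    privileges_csv: str,
    run_sql: Optional[Callable[[str], object]] = None,
) -> List[str]:
    """Build one GRANT per principal; execute via run_sql if given, otherwise just return them."""
    catalog = catalog.strip()
    principals = parse_csv(principals_csv)
    privileges = [p.upper() for p in parse_csv(privileges_csv)]
    if not catalog or not principals or not privileges:
        raise ValueError("catalog, principals, and privileges must all be non-empty")
    unknown = [p for p in privileges if p not in ALLOWED_PRIVILEGES]
    if unknown:
        # Privileges go into the SQL unquoted, so reject anything not on the allowlist.
        raise ValueError(f"unsupported privileges: {unknown}")
    statements = [
        f"GRANT {', '.join(privileges)} ON CATALOG `{catalog}` TO `{principal}`"
        for principal in principals
    ]
    if run_sql is not None:
        for statement in statements:
            run_sql(statement)
    return statements


# Dry run: build and print the statements without executing them.
for s in grant_on_catalog("sales", "data-analysts, jane@example.com", "use catalog,select"):
    print(s)
```

In a Databricks notebook, passing `run_sql=spark.sql` executes the statements; without it, the function only returns them for review.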

Conclusion

Automating privilege management in Databricks Unity Catalog helps ensure consistent and efficient access control. The code provided demonstrates a practical way to assign catalog-level privileges, making it easier to manage permissions across users and groups. This approach reduces the chance of manual errors and supports scalable governance as data and teams grow. By implementing these methods, organizations can maintain better control over their data while simplifying ongoing management tasks.

Opinions expressed by DZone contributors are their own.
