Azure DevOps Agent With Docker Compose

Learn more about using Docker for Azure DevOps Linux Build Agent with Docker Compose.

In the past, I've dealt with using Docker for an Azure DevOps Linux build agent in a post called "Configure a VSTS Linux Agent With Docker in Minutes," and I've blogged about how you can use Docker inside a build definition to provide prerequisites for testing (like MongoDB and SQL Server). Now it is time to move a step further and leverage Docker Compose.

Using Docker commands in a pipeline definition is nice, but it has some drawbacks. First of all, this approach suffers in terms of execution speed, because the containers must start each time you run a build (and should be stopped at the end of the build). It is true that if the Docker image is already present on the agent machine, start-up time is not so high, but some images, like MSSQL, are not immediately operative, so you need to wait for them to be ready on every build. The alternative is to leave them running even after the build is finished, but this could lead to resource exhaustion.

Another problem is the dependency on the Docker engine. If I include Docker commands in the build definition, I can build only on a machine that has Docker installed. If most of my projects use MongoDB, MSSQL, and Redis, I can simply install all three on my build machine, maybe using a fast SSD as storage. In that scenario, I expect to use those installed instances rather than wait for Docker to spin up a new container.

Including Docker commands in a pipeline definition is nice, but it ties the pipeline to Docker and can carry a penalty in execution speed.
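To make the drawback concrete, here is a minimal sketch of a pipeline that manages its own container. It assumes a Linux agent with Docker installed and a .NET test suite; the container name `mssql-test`, the `sqlPassword` pipeline variable, the public SQL Server image, and the fixed 30-second wait are all illustrative assumptions, not taken from an actual build.

```yaml
# Hypothetical azure-pipelines.yml fragment: every run starts and stops its own database.
steps:
  - script: >
      docker run -d --name mssql-test
      -e ACCEPT_EULA=Y -e "SA_PASSWORD=$(sqlPassword)"
      -p 1433:1433 mcr.microsoft.com/mssql/server:2019-latest
    displayName: Start SQL Server container

  # Crude readiness wait: SQL Server accepts connections only some time after start.
  - script: sleep 30
    displayName: Wait for SQL Server

  - script: dotnet test
    displayName: Run integration tests

  # Remove the container even when the tests fail, so nothing is left running.
  - script: docker rm -f mssql-test
    displayName: Stop SQL Server container
    condition: always()
```

Three of the four steps exist only to manage Docker, and the start-up and wait costs are paid again on every run.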

What I'd like to do is leverage Docker to spin up an agent and all needed dependencies at once, then use that agent with a standard build that does not require Docker. This gives me the flexibility of either setting up a build machine with everything pre-installed or simply using Docker to spin up, in seconds, an agent that can build my code. Removing the Docker dependency from my pipeline definition gives the user the utmost flexibility.

For my first experiment, I also want to use Docker on Windows Server 2019 to leverage Windows containers.

First of all, you can read the nice MSDN article about how to create a Windows Docker image that downloads, installs, and runs an agent inside a Windows Server machine with Docker for Windows. This allows you to spin up a new Docker agent based on a Windows image in minutes (just the time to download and configure the agent).

Thanks to Windows containers, running an Azure DevOps agent based on Windows is a simple docker run command.

Now, I need that agent to be able to use MongoDB and MSSQL to run integration tests. Clearly, I can install both database engines on my host machine and let the Docker agent use them, but since my agent already runs in Docker, I want my dependencies to run in Docker as well. So, welcome Docker Compose!

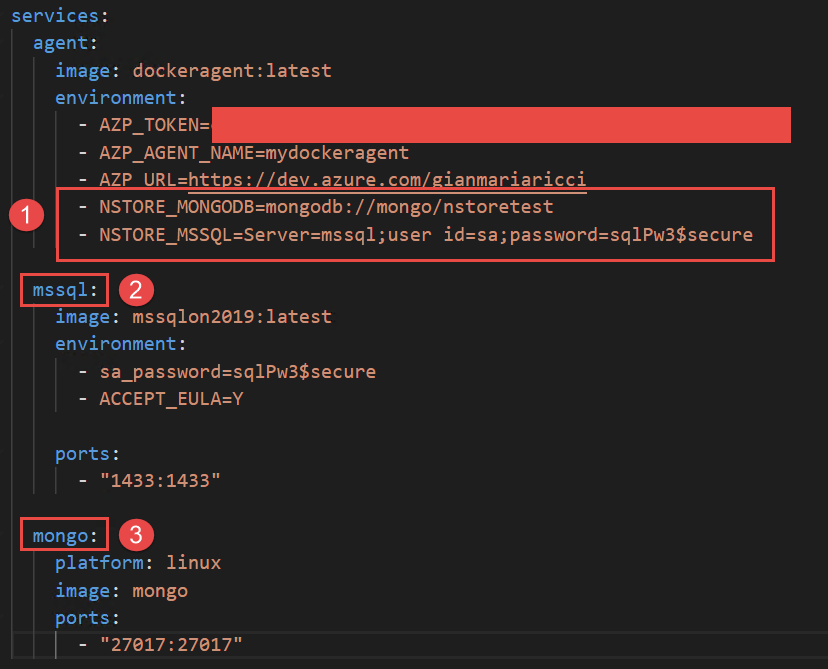

Thanks to Docker Compose, I can define a YAML file with a list of images that are part of a single scenario, so I specified an agent image followed by SQL Server and MongoDB images. The beauty of Docker Compose is the ability to refer to other containers by name. Let's look at an example; here is my complete Docker Compose YAML file.

    version: '2.4'

    services:
      agent:
        image: dockeragent:latest
        environment:
          - AZP_TOKEN=efk5g3j344xfizar12duju65r34llyw4n7707r17h1$36o6pxsa4q
          - AZP_AGENT_NAME=mydockeragent
          - AZP_URL=https://dev.azure.com/gianmariaricci
          - NSTORE_MONGODB=mongodb://mongo/nstoretest
          - NSTORE_MSSQL=server=mssql;user id=sa;password=sqlpw3$secure

      mssql:
        image: mssqlon2019:latest
        environment:
          - sa_password=sqlpw3$secure
          - ACCEPT_EULA=Y
        ports:
          - "1433:1433"

      mongo:
        platform: linux
        image: mongo
        ports:
          - "27017:27017"

To simplify everything, all of my integration tests that need a connection string to MSSQL or MongoDB read it from an environment variable. This is convenient because each developer can use the database instances of their choice, and it also makes it super easy to configure a Docker agent by specifying the database connection strings, as seen in Figure 1. I can set the connection strings to use for testing in environment variables, and I can use other Docker service names directly in those connection strings.

As you can see (1), connection strings refer to other containers by name; nothing could be easier.

The real advantage of using Docker Compose is the ability to include the Docker Compose file (as well as Dockerfiles for any custom images you need) inside your source code. With this approach, your repository can specify both the build pipeline, leveraging the YAML build of Azure DevOps, and the configuration of the agent with all its dependencies.
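As a sketch of how a custom image can travel with the source code, a Compose service can point at a Dockerfile stored in the repository so that Compose builds the image itself. The `./docker/mssql` path is a hypothetical layout chosen for illustration; only the `mssqlon2019:latest` tag comes from the Compose file shown earlier.

```yaml
version: '2.4'

services:
  mssql:
    # Build the image from a Dockerfile kept in the repository (hypothetical path).
    build:
      context: ./docker/mssql
      dockerfile: Dockerfile
    # With both build and image present, Compose tags the built image with this name.
    image: mssqlon2019:latest
```

With a fragment like this, anyone who clones the repository does not need the custom image to exist on their machine beforehand.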

Since you can configure as many agents as you want for Azure DevOps (you actually pay for the number of concurrently executing pipelines), thanks to Docker Compose you can set up an agent suitable for your project in less than one minute. But this is optional: if you would rather not use Docker Compose, you can simply set up an agent manually, just as you did before.

Including a Docker Compose file in your source code allows consumers of the code to start a compatible agent with a single command.

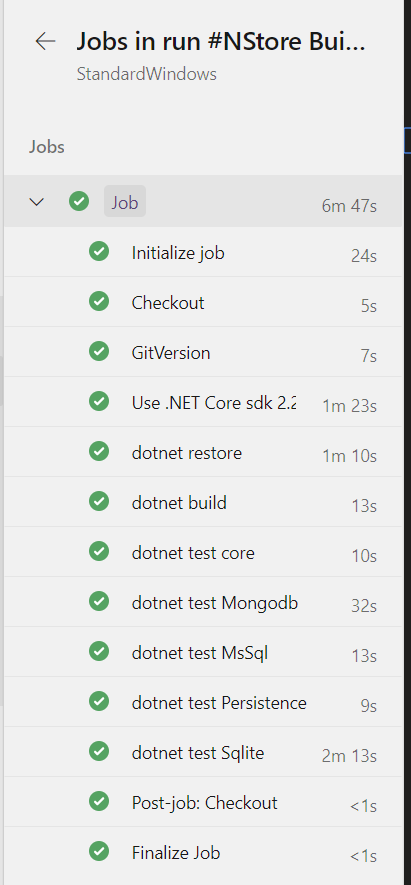

Thanks to Docker Compose, you pay the price of pulling images only once. You also pay only once the time needed for each image to become operative (like MSSQL or other databases that need a little while before they can satisfy requests). After everything is up and running, your agent is operative and can immediately run your standard builds: no Docker reference inside your YAML build file, and no time wasted waiting for your images to become operational.
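For comparison, a build definition that targets such an agent can be a plain pipeline. The sketch below assumes a .NET test suite, a `master` branch, and that the agent registered in a pool named `Default`; all three are assumptions made for illustration.

```yaml
# Hypothetical azure-pipelines.yml: no Docker commands anywhere.
trigger:
  - master

pool:
  name: Default   # assumed pool where the Compose-started agent is registered

steps:
  # The tests read their connection strings (NSTORE_MONGODB, NSTORE_MSSQL)
  # from the environment of the agent that runs them.
  - script: dotnet test
    displayName: Run integration tests
```

The same file runs unchanged on a manually configured machine, as long as that machine defines the same environment variables.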

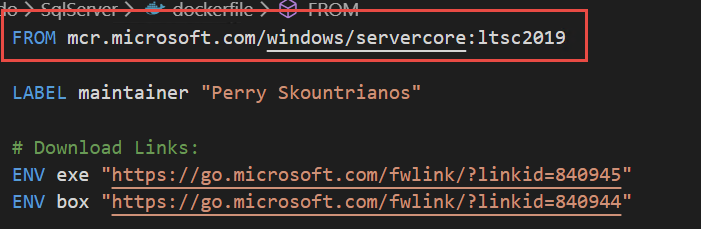

Thanks to the experimental feature of Windows Server 2019, I was able to specify a docker-compose file that contains not only Windows images, but also Linux images. The only problem I had is that I did not find a Windows Server 2019 image for SQL Server, and I started getting an error using the standard MSSQL images (built for Windows Server 2016). So, I decided to download the official Dockerfile, change the base image reference, and recreate the image, and everything worked like a charm!

Since it is used only for tests, I'm pretty confident that it should work, and indeed, my build runs just fine.

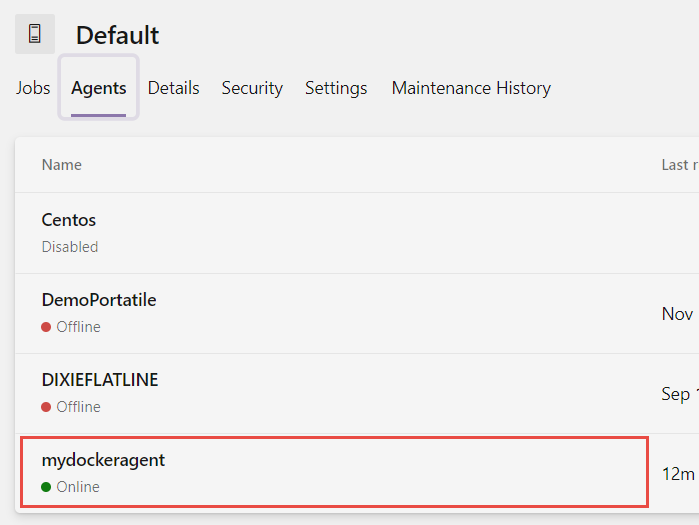

Actually, my agent created with Docker Compose is absolutely identical to all other agents. From the point of view of Azure DevOps, nothing is different, but I've started it with a single docker-compose command.

That's all. With a little effort, I'm able to include in my source code both the YAML build definition and the YAML Docker Compose file that specifies the agent with all the prerequisites to run the build. This is especially useful for open-source projects, where you want to fork a project and then activate CI with no effort.

Published at DZone with permission of Ricci Gian Maria, DZone MVB. See the original article here.
