Big Data Search, Part 2: Setting Up

Join the DZone community and get the full member experience.

Join For Freethe interesting thing about this problem is that i was very careful in how i phrased things. i said what i wanted to happen, but didn’t specify what needs to be done. that was quite intentional. for that matter, the fact that i am posting about what is going to be our acceptance criteria is also intentional. the idea is to have a non trivial task, but something that should be very well understood and easy to research. it also means that the candidate needs to be able to write some non trivial code. and i can tell a lot about a dev from such a project. at the same time, this is a very self contained scenario. the idea is that this is something that you can do in a short amount of time.

the reason that this is an interesting exercise is that this is actually at least two totally different but related problems. first, in a 15tb file, we obviously cannot rely on just scanning the entire file. that means that we have to have an index. and that means we have to build it. interestingly enough, an index being a sorted structure, that means that we have to solve the problem of sorting more data than can fit in main memory.

the second problem is probably easier, since it is just an implementation of external sort, and there are plenty of algorithms around to handle that. note that i am not really interested in actual efficiencies for this particular scenario. i care about being able to see the code. see that it works, etc. my solution, for example, is a single threaded system that make no attempt at parallelism or i/o optimizations. it clocks at over 1 gb / minute and the memory consumption is at under 150mb. queries for a unique value return the result in 0.0004 seconds. queries that returned 153k results completed in about 2 seconds.

when increasing the used memory to about 650mb, there isn’t really any difference in performance, which surprised me a bit.

then again, the entire code is probably highly inefficient. but that is good enough for now.

the process is kicked off with indexing:

1: var options = new directoryexternalstorageoptions("/path/to/index/files");

2: var input = file.openread(@"/path/to/data/crimes_-_2001_to_present.csv");

3: var sorter = new externalsorter(input, options, new int[]

4: {

5: 1,// case number

6: 4, // ichr

7:

8: });

9:

10: sorter.sort();

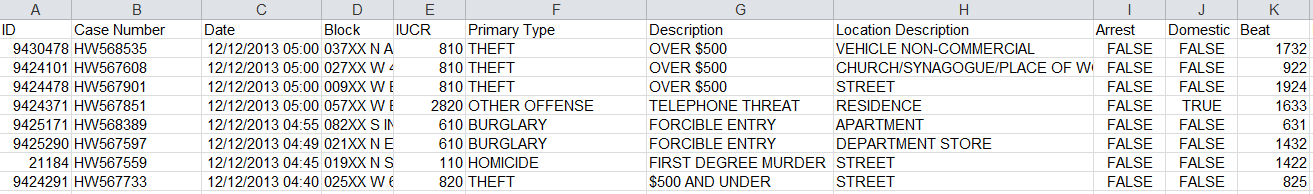

i am actually using the chicago crime data for this. this is a 1gb file that i downloaded from the chicago city portal in csv format. this is what the data looks like:

the externalsorter will read and parse the file, and start reading it into a buffer. when it gets to a certain size (about 64mb of source data, usually), it will sort the values in memory and output them into temporary files.

those file looks like this:

initially, i tried to do that with binary data, but it turns out that that was too complex to be easy, and writing this in a human readable format made it much easier to work with. the format is pretty simple, you have the value of the left, and on the right you have start position of the row for this value.

we generate about 17 such temporary files for the 1gb file. one temporary file per each 64 mb of the original file. this lets us keep our actual memory consumption very low, but for larger data sets, we’ll probably want to actually do the sort every 1 gb or maybe more. our test machine has 16 gb of ram, so doing a sort and outputting a temporary file every 8 gb can be a good way to handle things. but that is beside the point.

the end result is that we have multiple sorted files, but they aren’t sequential. in other words, in file #1 we have values 1,4,6,8 and in file #2 we have 1,2,6,7. we need to merge all of them together. luckily, this is easy enough to do. we basically have a heap that we feed entries from the files into. and that pretty much takes care of this. see merge sort if you want more details about this.

the end result of merging all of those files is… another file, just like them, that contains all of the data sorted. then it is time to actually handle the other issue, actually searching the data.

we can do that using simple binary search, with the caveat that because this is a text file, and there is no fixed size records or pages, it is actually a big hard to figure out where to start reading.

in effect, what i am doing is to select an arbitrary byte position, then walk backward until i find a ‘\n’. once i found the new line character, i can read the full line, check the value, and decide where i need to look next. assuming that i actually found my value, i can now go to the byte position of the value in the original file and read the original line, giving it to the user.

assuming an indexing rate of 1 gb / minute a 15 tb file would take about 10 days to index. but there are ways around that as well, but i’ll touch on them in my next post.

what all of this did was bring home just how much we usually don’t have to worry about such things. but i consider this research well spent, we’ll be using this in the future.

Published at DZone with permission of Oren Eini, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments