Building High Performance Big Data Analytics Systems

Learn various performance considerations when building your own analytics systems

Big Data and analytics systems are fast emerging as one of the critical systems in an organization’s IT environment. But with such huge amounts of data come performance challenges. If Big Data systems cannot be used to make or forecast critical business decisions, or to surface the business insights hidden under huge amounts of data at the right time, then these systems lose their relevance. This article discusses some of the critical performance considerations in a technology-agnostic way. It covers techniques and guidelines that can be used during the different phases of a Big Data system (i.e., data extraction, data cleansing, processing, storage, and presentation). These should act as generic guidelines that any Big Data professional can use to ensure that the final system meets its performance requirements.

1. What is Big Data?

Big Data is one of the most common terms in the IT world these days. Though different terms and definitions are used to explain Big Data, in principle they all make the same point: with huge amounts of data being generated from both structured and unstructured sources, traditional approaches to handling and processing this data are no longer sufficient.

Big Data systems are generally considered to have five main characteristics of data, commonly called the 5 Vs of data. These are Volume, Variety, Velocity, Veracity, and Value.

According to Gartner, High Volume can be defined as “when the processing capacity of the native data-capture technology and processes is insufficient for delivering business value to subsequent use cases. High volume also occurs when the existing technology was specifically engineered for addressing such volumes – a successful big data solution.”

This high-volume data comes not only from traditional sources but also from new and diverse ones, e.g. sensors, devices, logs, and automobiles. These sources can send both structured and unstructured data.

According to Gartner, high data variety can be defined as follows: "Highly variant information assets include a mix of multiple forms, types and structures without regards to that structure at the time of write or read. And previously under-utilized data, which is enabled for use cases under new innovative forms of processing, also represents variability”.

Velocity can be defined as the speed at which data from different sources arrives. Data from devices, sensors, and other organized and unorganized streams is constantly entering IT systems. With this, the need for real-time analysis and interpretation of this data has also increased.

According to Gartner, high velocity can be defined as follows: "a higher rate of data arrival and/or consumption, but is focused on the variable velocity within a dataset or the shifting rates of data arrival between two or more data sets."

Veracity, or correctness and accuracy, is another aspect of the data. To make correct business decisions, it is imperative that the data on which all analysis is performed is correct and accurate.

Big Data systems can provide huge business Value. Industries and organizations in telecom, finance, e-commerce, social media, collaboration, and many other areas see their data as a huge business opportunity. They can get insights into user behavior and provide recommendations for relevant products, generate alerts for possibly fraudulent transactions, and so on.

As with any IT system, performance is critical for a Big Data system to succeed. How to make performance an integral part of a Big Data system is the central idea of this article.

2. Building Blocks of a Big Data System

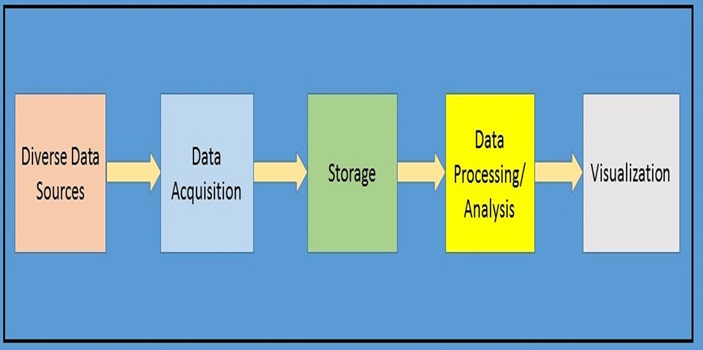

A Big Data system comprises a number of functional blocks that give the system the capability to acquire data from diverse sources, pre-process this data (e.g. cleansing and validation), store the data, process and analyze the stored data (e.g. performing predictive analytics or generating recommendations for online users), and finally present and visualize the summarized and aggregated results.

Figure 1 depicts these high-level components of a Big Data system.

The rest of this section briefly describes each of the components as shown in Figure 1.

2.1 Diverse Data Sources

In today’s IT ecosystem, data from multiple sources needs to be analyzed and acted upon. These sources could be online web applications, batch uploads or feeds, live streaming data, sensors, and so on.

Data from these sources can come in different formats and over different protocols. For example, an online web application might send data in SOAP/XML format over HTTP, a feed might arrive as a CSV file, and devices might communicate over the MQTT protocol.

Because the performance of these individual systems is not under the control of a Big Data system, and these systems are often external applications owned by other vendors or teams, the rest of the article will not go into the details of their performance.

2.2 Data Acquisition

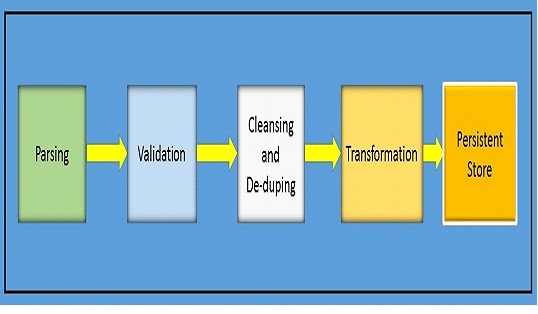

Data from diverse sources has to be acquired before any processing can happen. This involves parsing, validation, cleansing, transforming, de-duping, and storing in a suitable format on some kind of persistent storage.

In the following sections, this article will highlight some of the considerations that should be addressed to achieve a high-performance data acquisition component of a Big Data system. Note that this article will not discuss the different techniques used for data acquisition.

2.3 Storage

Once the data is acquired, cleansed, and transformed to the required format, it needs to be stored in some kind of storage, where processing and other analytical functions can be performed.

In the following sections, this article will present some of the best practices for storage (both logical and physical), to achieve better performance in a Big Data system. The impact of security requirements on performance will also be discussed at the end of the article.

2.4 Data Processing and Analysis

Some of the steps involved in this stage are de-normalizing the cleansed data, correlating different sets of data, aggregating the results over pre-defined time intervals, running ML algorithms, performing predictive analytics, and so on.

In the following sections, this article will present some of the best practices for carrying out data processing and analysis to achieve better performance in a Big Data System.

2.5 Visualization and Presentation

The last step of a big data flow is to view the output of the different analytical functions. This step involves reading the pre-computed aggregated results (or other such entities) and presenting them as user-friendly tables, charts, and other such forms that make the results easy to interpret and understand.

3. Performance Considerations for Data Acquisition

Data acquisition is the step where data from diverse sources enters the Big Data system. The performance of this component directly determines how much data a big data system can receive at any given point in time.

The data acquisition process can vary based on the exact requirements of the system, but some of the commonly performed steps are parsing the incoming data, performing the necessary validation, cleansing the data (e.g. removing duplicate data, or de-duping), transforming the cleansed data to the required format, and storing it in some kind of persistent storage.
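As a rough illustration of these steps, the following minimal Python sketch runs one small batch through parse, validate, cleanse, de-dupe, and transform. The feed layout, the field names (`id`, `country`, `amount`), the validation rule, and the `COUNTRY_NAMES` lookup are all assumptions made for the example; a real system would typically rely on the parsers and validators of its own platform.

```python
import csv
import io

# Hypothetical CSV feed; the columns and values are assumptions for illustration.
RAW_FEED = """id,country,amount
1,us,10.50
2,IN,abc
1,us,10.50
3,in,7.25
"""

# Static reference data, loaded once and reused for the whole batch.
COUNTRY_NAMES = {"US": "United States", "IN": "India"}


def parse(feed_text):
    """Parse CSV text into dict records with the standard csv module."""
    return list(csv.DictReader(io.StringIO(feed_text)))


def is_valid(record):
    """Assumed validation rule: the amount field must parse as a number."""
    try:
        float(record["amount"])
        return True
    except ValueError:
        return False


def acquire(feed_text):
    """Run parse -> validate -> cleanse -> de-dupe -> transform on one batch."""
    records = parse(feed_text)
    # Filter invalid data early so later steps only see legitimate records.
    valid = [r for r in records if is_valid(r)]
    rejected = [r for r in records if not is_valid(r)]

    seen_ids, output = set(), []
    for r in valid:
        if r["id"] in seen_ids:  # de-dupe on the assumed unique key
            continue
        seen_ids.add(r["id"])
        code = r["country"].upper()  # cleanse: make country codes consistent
        output.append({
            "id": int(r["id"]),
            "country": COUNTRY_NAMES.get(code, code),
            "amount": float(r["amount"]),  # transform: typed target format
        })
    return output, rejected


clean, errors = acquire(RAW_FEED)
print(clean)   # two unique, valid records
print(errors)  # one rejected record, destined for an error table
```

The rejected records are returned rather than dropped, since most systems store invalid data in error tables.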

Some of the logical steps involved in the data acquisition process are shown in the figure in Section 2.2.

The following performance considerations should be addressed to ensure a high-performing data acquisition component:

- Data transfer from diverse sources should be asynchronous. Two ways to achieve this are file feed transfers at regular intervals and Message-Oriented Middleware (MoM). Either allows data from multiple sources to be pumped in at a rate much faster than the big data system can process at a given time. Asynchronous data transfer decouples the data sources from the big data system, so the big data infrastructure can scale independently and traffic bursts from an online application will not overload the big data environment.

- If data is being pulled directly from some external database, make sure to pull data in bulk.

- If data is being parsed from a feed file, make sure to use an appropriate parser. For example, XML files can be read with different parsers such as JDOM, SAX, and DOM. Similarly, multiple parsers and APIs are available for CSV, JSON, and other such formats. Choose the one that meets the given requirements with the best performance.

- Always prefer built-in or out-of-the-box validation solutions. Most parsing and validation workflows run in a server environment (ESB/app server), which has standard validators available for almost all scenarios. Under most circumstances, these will generally perform much faster than any custom validator you may develop.

- Similarly, if the data format is in XML, prefer using XML schemas (XSD) for validations.

- Even in scenarios where parsing, validation, etc. run not in a server environment but in custom scripts developed in high-level languages like Java, prefer built-in libraries and frameworks. Under most circumstances, these will generally perform much faster than any custom code you may develop.

- Identify and filter out invalid data as early as possible, so that all the processing after validations works only on a legitimate set of data.

- Most of the systems want invalid data to be stored in some error tables. Keep this in mind while sizing the database and storage.

- If the valid source data needs to be cleansed (e.g. removing information that is not required, or making codes and descriptions consistent across data received from multiple systems), make sure this is performed on a large batch of data in one go rather than record by record or in small chunks. Cleansing generally involves referencing external tables or data dictionaries for static information. Retrieving this static information once and reusing it to cleanse the whole batch improves performance.

- To remove duplicate records (de-dupe), it is very important to determine what constitutes a unique record. More often than not, a unique identifier such as a timestamp or ID may need to be appended to the incoming data. Because each individual record generally needs to be updated with this unique field, make sure generating the unique ID is not very complicated, or it will hurt performance.

- Data received from multiple sources may or may not be in the same format. Sometimes, it is required to transform data received in multiple formats to use some common format or a set of common formats.

- As with parsing, it is recommended to use available or built-in transformers rather than developing something from scratch.

- Transformation is generally the most complex, time-intensive, and resource-consuming step of data acquisition, so parallelize it as much as possible.

- Once all the above activities of data acquisition are completed, the transformed data is generally stored in some kind of persistent storage, so that later analytical processing, summarization, aggregation etc. can be done on this data.

- Multiple technology solutions exist to handle this persistence (RDBMS, NoSQL, distributed file systems like Hadoop's HDFS, and so on).

- Evaluate carefully and choose a solution which meets the requirements. Depending upon the requirements, one may have to choose a combination of different solutions.
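The first guideline in the list above, asynchronous transfer, can be sketched with a bounded in-process queue standing in for the message-oriented middleware. This is only an illustration under stated assumptions: `BATCH_SIZE`, the queue capacity, the event shape, and the `stored_batches` list (a stand-in for persistent storage) are hypothetical, and a production system would use a real broker or file feeds instead.

```python
import queue
import threading

BATCH_SIZE = 100  # assumed bulk-write size; tune for the real workload
SENTINEL = None   # marks the end of the stream

buffer = queue.Queue(maxsize=1000)  # bounded buffer standing in for the middleware
stored_batches = []                 # stands in for persistent storage


def producer(n_events):
    """Source system: publishes events without waiting for them to be processed."""
    for i in range(n_events):
        buffer.put({"event_id": i})  # blocks only if the buffer is full
    buffer.put(SENTINEL)


def consumer():
    """Big data side: drains the buffer at its own pace and writes in bulk."""
    batch = []
    while True:
        event = buffer.get()
        if event is SENTINEL:
            break
        batch.append(event)
        if len(batch) == BATCH_SIZE:
            stored_batches.append(batch)
            batch = []
    if batch:  # flush the final partial batch
        stored_batches.append(batch)


threads = [
    threading.Thread(target=producer, args=(250,)),
    threading.Thread(target=consumer),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print([len(b) for b in stored_batches])  # [100, 100, 50]
```

Because the producer and consumer only share the buffer, either side can be scaled or slowed down without changing the other, and the consumer writes in bulk rather than record by record.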

4. Performance Considerations for Storage

Once all the required steps of data acquisition are completed, data needs to be stored on some kind of persistent storage.

In this section, some of the important performance guidelines for storing the data will be discussed. Both logical data storage (and the data model) and physical storage will be covered. Note that these guidelines should be considered for all data, whether raw data or the final output of analytical functions, such as pre-computed aggregates.

- Always consider the level of normalization or de-normalization you choose. The way you model your data has a direct impact on performance, as well as on aspects like data redundancy and disk storage capacity. For some scenarios, such as simply dumping the source feeds into a database, you may want to store the initial raw data exactly as it arrives from the source systems. For others, such as performing analytical calculations like aggregation, you may want the data in de-normalized form.

- Most big data systems use NoSQL databases rather than RDBMSs to store and process huge amounts of data.

- Different NoSQL databases have different capabilities: some are good for fast reads, others for fast inserts or updates, and so on.

- Some database stores are row oriented, some are column oriented, etc.

- Evaluate these databases, based on your exact requirements (e.g., whether you need better read performance or better write) and then choose.

- Similarly, each of these databases has configuration properties that control different aspects of how it works, such as the level of replication and the level of consistency.

- Some of these properties have direct impact on the performance of the database. Keep this in mind before finalizing any such strategy.

- Level of compaction, size of buffer pools, timeouts, and caching are some more properties of different NoSQL databases, which can impact performance.

- Sharding and partitioning are other very important features of these databases. The way sharding is configured can have a drastic impact on the performance of the system, so choose sharding and partition keys carefully.

- Not all NoSQL databases have built-in support for different techniques like joins, sorts, aggregations, filters, indexes, and so on.

- If you need to use such features extensively, it will be best to use solutions which have these features built-in. Generally, built-in features will give better performance than any custom made solution.

- Many NoSQL databases come with built-in compressors, codecs, and transformers. If these can be utilized to meet some of the requirements, prefer to use them. They can perform various tasks like format conversions and compressing the data. This not only makes later processing faster but also reduces network transfer.

- Many NoSQL databases support multiple types of file systems as their storage medium, including local file systems, distributed file systems, and even cloud-based storage solutions.

- Unless interoperability is the most important criterion, try to use the file system that is native to the NoSQL database (e.g. HDFS for HBase).

- This is because, if an external file system or format is used, codecs or transformers are needed to perform the necessary conversion while reading and writing the data. This adds another layer to the overall read/write process and causes extra processing.

- The data model of a big data system is generally designed around the use cases the system serves. This is in stark contrast to RDBMS data modelling techniques, where the database model is designed to be generic, and foreign keys and table relationships are used to depict real-world interactions among entities.

- At the hardware level, local RAID disks may not be sufficient for a big data system. Consider using Storage Area Network (SAN) based storage for better performance.
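To make the point about shard and partition keys concrete, the sketch below hashes the same records onto a small number of shards using two different candidate keys. It is a simplified model, not any particular database's sharding algorithm: `NUM_SHARDS`, the record layout, and the hash-modulo placement are assumptions for illustration.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 4  # assumed cluster size for illustration


def shard_for(key):
    """Map a key to a shard with a stable hash (used for spreading, not security)."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % NUM_SHARDS


# Hypothetical records: 10,000 events from 1,000 users in only 2 countries.
records = [
    {"user_id": f"user-{i % 1000}", "country": "US" if i % 10 else "IN"}
    for i in range(10_000)
]

# Low-cardinality key: at most two shards ever receive data.
by_country = Counter(shard_for(r["country"]) for r in records)
# High-cardinality key: records spread across the shards.
by_user = Counter(shard_for(r["user_id"]) for r in records)

print("sharded by country:", dict(by_country))
print("sharded by user_id:", dict(by_user))
```

With the low-cardinality `country` key, one shard holds at least 90% of the records while others sit idle; the `user_id` key spreads the load far more evenly. Which key is right in practice also depends on the query patterns the system must serve.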

5. Performance Considerations for Data Processing

Data processing and analytics are the core of a big data system. This is where the bulk of processing, such as summarization, forecasting, aggregation, and other such logic, is performed.

This section presents some performance tips for data processing. Note that, depending upon the requirements, a big data system architecture may have components for both real-time stream processing and batch processing. This section covers all aspects of data processing without necessarily tying them to a particular processing model.

- Choose an appropriate data processing framework after a detailed evaluation of the framework and the requirements.

- Some frameworks are good for batch processing, while others work better for real time stream processing.

- Similarly, some frameworks use an in-memory model, while others rely on file- and disk-based processing.

- Some frameworks may provide a higher level of parallelization than others, making the whole execution faster.

- In-memory frameworks are likely to perform much faster than disk-based processing frameworks, but may lead to higher infrastructure costs.

- In a nutshell, it is imperative that the chosen processing framework has the capabilities to meet the requirements. Otherwise, one may end up with the wrong framework and be unable to meet functional or non-functional requirements, including performance.

- Some of these frameworks divide the data to be processed into smaller chunks, which are then processed independently by individual jobs. A coordinator job manages all these independent sub-jobs.

- Carefully analyze how much data is being allocated to individual jobs.

- The smaller the chunks, the more the per-job overheads, such as job startup and cleanup, will burden the system.

- If the data size is too big, data transfer may take too long to complete. This may also lead to uneven utilization of processing resources, e.g. one big job running for too long on one server, while other servers are waiting for work.

- Do not forget to check the number of jobs launched for a given task. If needed, tune the relevant settings to change the number of automatically launched jobs.

- Always keep an eye on the size of data transfers for job processing. Data locality will give you the best performance, because the data is always available locally for a job. But achieving a higher level of data locality means data needs to be replicated at multiple locations, which in turn can have a huge performance impact.

- Also, results of a real-time stream event need to be merged with the output of batch analytical processes. Design your system such that this is handled smoothly, without any process impacting the results of other processes in case of failure.

- Many times, the same set of data needs to be re-processed. This could be because an error or exception occurred during initial processing, or because a business process changed and the business wants to see the impact on old data as well. Design your system to handle these scenarios.

- This means you may need to store the original raw data for longer periods and hence need more storage.

- The final output of processing jobs should be stored in a format or model based on the end results expected from the big data system. E.g. if a business user should see the aggregated output in weekly time series intervals, make sure the results are stored in weekly aggregated form.

- To achieve this, the database modelling of big data systems is based on the types of use cases they are expected to address. E.g. it is not unusual for a big data system to have final output tables whose structure closely mirrors the format of the output report displayed by a presentation layer tool.

- More often than not, this can have a great impact on how an end user perceives the performance of the system. Consider a scenario where, on a request to view last week's aggregated data, the business logic has to aggregate weekly data from output that holds only daily or hourly aggregations. This can be a tremendously slow operation if the data is large.

- Some frameworks provide features like lazy evaluation of big data queries. This can be a performance booster, as data is not pulled or referenced unless required.

Always monitor and measure performance using the tools provided by the different frameworks. This will give you an idea of how long a given job takes to finish.
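The chunking and coordinator pattern described in this section, together with basic timing, can be sketched as follows. This is a toy model rather than how any specific framework schedules work: the `split`, `sub_job`, and `run` names are hypothetical, and a thread pool is used only to keep the example portable. In CPython, threads do not speed up CPU-bound work like this, whereas a real framework would distribute chunks across processes or nodes.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def split(records, chunk_size):
    """Divide the input into chunks of at most chunk_size records."""
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def sub_job(chunk):
    """One independent job: aggregate its own chunk."""
    return sum(chunk)


def run(records, chunk_size, workers=4):
    """Coordinator: fan chunks out to workers, then merge the partial results."""
    chunks = split(records, chunk_size)
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sub_job, chunks))
    elapsed = time.perf_counter() - started
    return sum(partials), len(chunks), elapsed


data = list(range(1_000_000))
for size in (1_000, 50_000, 1_000_000):
    total, n_jobs, elapsed = run(data, size)
    print(f"chunk_size={size:>9,} jobs={n_jobs:>5} total={total} elapsed={elapsed:.4f}s")
```

The three runs produce the same total from 1,000 jobs, 20 jobs, and a single job respectively. Comparing the measured times for different chunk sizes on realistic data is one simple way to see where per-job overhead or uneven utilization starts to dominate.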

6. Performance Considerations for Visualization

Carefully designed, high-performing big data systems provide value by performing deep-dive analysis of the data and providing valuable insights based on this analysis. This is where visualization comes into play. Good visualization helps users take a detailed drill-down view of the data.

Note that traditional BI and reporting tools, or the steps used to build custom reporting systems, cannot be scaled up to meet the visualization demands of a big data system. Many commercial off-the-shelf (COTS) visualization tools are now available.

This article will not go into the details of how these individual tools can be tuned to get better performance, but will present generic guidelines which should be followed while designing a visualization layer.

- Make sure the visualization layer displays data from the final summarized output tables. These summarized tables could be aggregations based on time period, recommendations based on category, or any other use-case-based summaries. Avoid reading the whole raw data set directly from the visualization layer.

- This will not only keep data transfer to a minimum but also help avoid heavy processing while the user is viewing the reports.

- Maximize the use of caching in the visualization tool. Caching can have a very good impact on the overall performance of the visualization layer.

- Materialized views can be another important technique to improve performance.

- Most visualization tools allow configuration to increase the number of workers (threads) that handle reporting requests. If capacity is available and the system is receiving a high number of requests, this could be one of the options for better performance.

- Keep the pre-computed values in the summarized tables. If some calculations need to be done at runtime, make sure those are as minimal as possible and work on the highest level of data possible.

- Visualization tools also allow multiple ways to read the data which needs to be presented. Some of these are disconnected or extract mode, live connect mode, and so on. Each of these modes serves different requirements and can perform differently in a given scenario. Evaluate carefully.

- Similarly, some tools allow data to be retrieved incrementally. This minimizes the data transfer and can speed up the whole visualization.

- Keep the size of generated images like graphs, charts, etc. to a minimum.

- Most of the visualization frameworks and tools use Scalable Vector Graphics (SVG). Complex layouts using SVG can have serious performance impacts.

- Once all the practices and guidelines in all the sections of this article are followed, make sure to plan for sufficient resources like CPUs, memory, disk storage, network bandwidth etc.
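The guidelines on reading from summarized tables and on caching can be illustrated with a small sketch. It rolls hypothetical daily aggregates up to ISO weeks once, then serves report requests as cached keyed lookups instead of re-aggregating at view time. The `DAILY_SALES` data, the function names, and the use of an in-memory dict in place of a summary table are assumptions for the example.

```python
from collections import defaultdict
from datetime import date
from functools import lru_cache

# Hypothetical daily aggregates produced by the processing layer.
DAILY_SALES = [
    (date(2024, 1, 1), 120.0),
    (date(2024, 1, 2), 80.0),
    (date(2024, 1, 8), 200.0),
    (date(2024, 1, 9), 50.0),
]


def build_weekly_summary(daily_rows):
    """Roll daily totals up to ISO weeks once, at processing time."""
    weekly = defaultdict(float)
    for day, amount in daily_rows:
        iso_year, iso_week, _ = day.isocalendar()
        weekly[(iso_year, iso_week)] += amount
    return dict(weekly)


# Stands in for a pre-computed summary table.
WEEKLY_SUMMARY = build_weekly_summary(DAILY_SALES)


@lru_cache(maxsize=256)
def weekly_total(iso_year, iso_week):
    """Report query: a keyed read from the summary, cached for repeat requests."""
    return WEEKLY_SUMMARY.get((iso_year, iso_week), 0.0)


print(weekly_total(2024, 1))  # 200.0
print(weekly_total(2024, 2))  # 250.0
print(weekly_total(2024, 1))  # 200.0, served from the cache
```

A real deployment would also need a cache invalidation strategy for when the summary tables are refreshed; that is left out of this sketch.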

7. Big Data Security and Its Impact on Performance

As in any IT system, security requirements can have a serious impact on the performance of a big data system. This section discusses some high-level considerations for designing the security of a big data system without adversely affecting performance.

- Ensure that data coming from diverse sources is properly authenticated and authorized at the entry point of the big data system. Even if all the data is coming from trusted sources only, and there is no requirement for such authentication of source data, keep your design flexible enough to handle this.

- Once data is properly authenticated, try to avoid authenticating the same data again at later points of execution. If needed, tag the authenticated data with some kind of identifier or token that marks it as authenticated and can be used later. This saves the duplicate processing of authenticating data again and again at every step.

- You may need to support other mechanisms like PKI based solutions or Kerberos. Each of these has different performance characteristics, which should be considered before finalizing any solution.

- More often than not, data needs to be compressed before it is sent to big data systems. This decreases the size of the data to be transferred, making data transfer faster. However, the additional step required to decompress the data can slow down processing.

- Different algorithms and formats are available for this compression, and each provides a different level of compression. These algorithms also have different CPU requirements, so choose the algorithm carefully.

- Similarly, evaluate encryption logic and algorithms before selecting.

- It is advisable to keep encryption limited to the required fields or information that are sensitive or confidential.

- You may need to maintain the records or logs of different activities like access, updates, etc. in an audit-trail table or log. This may be needed for various regulatory or business policies.

- Note that this requirement not only increases the number of steps involved in processing, but may increase the amount of storage needed. Keep this in mind.

- Always use security options provided by infrastructure entities like OS, DB, etc. These will perform faster than custom solutions built to do the same tasks.
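The trade-off between compression ratio and CPU cost mentioned in the list above can be measured rather than guessed. The sketch below compares three compression levels of Python's standard `zlib` module on a hypothetical payload of similar JSON records. The payload shape is an assumption, and the numbers will differ for other data and other codecs, so treat it as a measurement template rather than a benchmark.

```python
import json
import time
import zlib

# Hypothetical payload: 20,000 similar JSON records, one per line.
payload = "\n".join(
    json.dumps({"sensor_id": i % 50, "status": "OK", "reading": i % 7})
    for i in range(20_000)
).encode("utf-8")


def measure(level):
    """Compress at the given zlib level; return compressed size and CPU seconds."""
    started = time.process_time()
    compressed = zlib.compress(payload, level)
    cpu_seconds = time.process_time() - started
    # Round-trip check: decompression must restore the original bytes.
    assert zlib.decompress(compressed) == payload
    return len(compressed), cpu_seconds


for level in (1, 6, 9):
    size, cpu = measure(level)
    ratio = len(payload) / size
    print(f"level={level} size={size:,} bytes ratio={ratio:.1f}x cpu={cpu:.4f}s")
```

Running the same measurement on a representative sample of real data, for each candidate algorithm, gives a concrete basis for choosing between faster transfer and lower CPU usage.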

8. Conclusion

This article presented various performance considerations that can act as guidelines for building high-performance big data and analytics systems. Big Data and analytics systems can be very complex for multiple reasons. To meet the performance requirements of such a system, it is necessary that the system is designed and built from the start to meet them.

This article presented guidelines that should be followed during the different stages of a big data system, including how security requirements can impact its performance.
