Building Your Own Apache Kafka Connectors

Building connectors for Apache Kafka following a complete code example.


One of the best ways to describe open source is to say that it is the art of democratizing knowledge. I like this description because it captures the essence of what open source really does: sharing knowledge that people can use to solve recurring problems. By recurring problem, I mean any problem that different people sharing the same context can run into. Building Apache Kafka connectors is a good example. Different people may need to build one, often because they need to integrate with external systems. In this blog post, I will share the details of a GitHub repository that shows how to build one.

Kafka Connectors: What, Why, and When

Apache Kafka by itself is just a dumb pipe. It allows you to durably and reliably store streams of events and share them with multiple systems interested in either getting a copy of the data or processing it. To bring data in and to send data out, Kafka relies on another layer of technology called Kafka Connect. This is an integration platform that allows builders to connect with different systems via specialized connectors. A great number of connectors have been developed for different systems, available as both commercial and free offerings. However, there are situations where you simply can't use them. A connector may not exist for the specific system you intend to connect to, you may not be able to pay for a commercial license, or the existing connectors may not offer the exact support you expect.

This is when you may need to develop your own Apache Kafka connector. If you know how to develop applications in Java, you can leverage the Java SDK provided by Kafka Connect to develop your own connectors, whether they are sources or sinks. Source connectors bring data into Kafka topics. They read from source systems and process their data schemas. Sinks do the opposite. They pick up the data available in Kafka topics and write it into an external system, using the schema that system expects. Developing a custom connector mainly boils down to following a good example that applies the right best practices.

From AWS re:Invent 2022 to the World

During the AWS re:Invent 2022 conference, the BOA (Build On AWS) track included a hands-on workshop to teach how to develop a custom Apache Kafka connector and how to deploy it in AWS. The primary aim of the workshop was not only to share the nuts and bolts of how to create one from scratch, but also to show how to create one following the right best practices. The workshop was titled BOA301: Building your own Apache Kafka Connectors, and it was a success. Many builders using Kafka with Amazon MSK share the same need, and learning the specifics of their use cases was very rewarding. This is the key driver of the BOA track: to build real-world solutions with the community and to dive deep into code and architecture.

If you missed this workshop, here is some good news for you: I transformed the workshop into reusable open-source code that anyone can use at any time. In this GitHub repository, you can find the code used during the workshop, along with the tools and best practices that I tried to enforce during it.

The code uses the latest versions of the Apache Kafka APIs, incorporates the fruitful conversations and feedback gathered during the workshop, and includes all the bells and whistles that a connector must have.

A Complete Source Connector

The GitHub repository contains the complete implementation of a source connector. The connector polls a fictitious source system every 5 seconds, accessing 3 different partitions from the source system. Each source system organizes its data using different logical entities, such as tables in a database. This is what a partition means: a logical entity that comprises a part of the dataset. The connector also shows how to handle schemas. For each record processed, it uses the schema that the source system added to it.
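The polling behavior described above can be sketched in plain Java. This is a minimal illustration only, not the repository's code: `PollingSketch`, `SketchRecord`, the partition names, the topic name, and the single-field schema are all hypothetical, and the sketch deliberately avoids the Kafka Connect classes so it runs with the JDK alone. In a real connector, this logic would live in the `poll()` method of a `SourceTask`, which returns `SourceRecord` objects carrying a source partition, a source offset, a schema, and a value.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Polls a fictitious source system that exposes a fixed set of partitions. */
class PollingSketch {

    /** Hypothetical stand-in for a Kafka Connect source record (not the real class). */
    static final class SketchRecord {
        final Map<String, String> sourcePartition; // which logical entity the record came from
        final Map<String, Long> sourceOffset;      // position of the record within that entity
        final String topic;                        // destination Kafka topic
        final Map<String, String> schema;          // field name -> type, as described by the source
        final Map<String, Object> value;           // the record payload

        SketchRecord(Map<String, String> sourcePartition, Map<String, Long> sourceOffset,
                     String topic, Map<String, String> schema, Map<String, Object> value) {
            this.sourcePartition = sourcePartition;
            this.sourceOffset = sourceOffset;
            this.topic = topic;
            this.schema = schema;
            this.value = value;
        }

        @Override
        public String toString() {
            return topic + " " + sourcePartition + " " + sourceOffset + " " + value;
        }
    }

    private final List<String> partitions;
    private final Map<String, Long> nextOffset = new LinkedHashMap<>();

    PollingSketch(List<String> partitions) {
        this.partitions = new ArrayList<>(partitions);
        for (String partition : this.partitions) {
            nextOffset.put(partition, 0L); // every partition starts at offset 0
        }
    }

    /** One poll cycle: reads one record per partition and advances that partition's offset. */
    List<SketchRecord> poll() {
        List<SketchRecord> records = new ArrayList<>();
        for (String partition : partitions) {
            long offset = nextOffset.get(partition);
            Map<String, String> schema = Map.of("message", "string");
            Map<String, Object> value = Map.of("message", "data from " + partition + " #" + offset);
            records.add(new SketchRecord(
                    Map.of("partition", partition),
                    Map.of("position", offset),
                    "sketch-topic", schema, value));
            nextOffset.put(partition, offset + 1);
        }
        return records;
    }

    public static void main(String[] args) throws InterruptedException {
        PollingSketch task = new PollingSketch(List.of("source-1", "source-2", "source-3"));
        for (int cycle = 0; cycle < 2; cycle++) {
            task.poll().forEach(System.out::println);
            Thread.sleep(5_000); // the 5-second poll interval mentioned in the text
        }
    }
}
```

Keeping the offset per partition is what would let a restarted task resume each partition where it left off instead of re-reading everything.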

The connector also shows how to implement dynamic re-partitioning. This is when the source connector must be able to detect that the number of partitions to be read has changed and decide the number of tasks needed to handle the new workload. A task is the connector's unit of execution. Creating different tasks allows the connector to process records concurrently. For instance, let's say that after the connector is deployed, the number of partitions in the source system doubles from 3 to 6. Since the connector periodically monitors the source system for situations like this, a request for a connector re-configuration is made on the fly. Thanks to this mechanism, no human intervention is needed.
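The re-partitioning idea can be sketched with the JDK alone. The class `RepartitionSketch`, its method names, and the partition names are hypothetical, and the round-robin assignment is just one reasonable strategy; the source does not say which one the repository uses. In Kafka Connect itself, the equivalent hooks are the connector's `taskConfigs(int maxTasks)` method, which decides how work is split across tasks, and `requestTaskReconfiguration()` on the `ConnectorContext`, which a monitoring thread can call when it notices a change.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;

/** Sketch of splitting source partitions across tasks and detecting when to re-split. */
class RepartitionSketch {

    /** Spreads partitions round-robin over at most maxTasks groups, one group per task. */
    static List<List<String>> assign(List<String> partitions, int maxTasks) {
        if (maxTasks < 1) {
            throw new IllegalArgumentException("maxTasks must be at least 1");
        }
        // Never create more tasks than there are partitions to read.
        int taskCount = Math.min(partitions.size(), maxTasks);
        List<List<String>> groups = new ArrayList<>();
        for (int i = 0; i < taskCount; i++) {
            groups.add(new ArrayList<>());
        }
        for (int i = 0; i < partitions.size(); i++) {
            groups.get(i % taskCount).add(partitions.get(i));
        }
        return groups;
    }

    /** True when the partitions seen now differ from the ones the tasks were configured with. */
    static boolean needsReconfiguration(List<String> known, List<String> current) {
        return !new HashSet<>(known).equals(new HashSet<>(current));
    }

    public static void main(String[] args) {
        List<String> before = List.of("p1", "p2", "p3");
        List<String> after = List.of("p1", "p2", "p3", "p4", "p5", "p6");

        System.out.println("initial assignment: " + assign(before, 4));
        if (needsReconfiguration(before, after)) {
            // A real connector would ask the framework for a task reconfiguration here.
            System.out.println("new assignment: " + assign(after, 4));
        }
    }
}
```

Comparing the partitions as sets means a mere reordering reported by the source system does not trigger an unnecessary reconfiguration.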

Along with the connector implementation, you will find a set of Java tests covering the most basic things a connector must do. This includes tests for the connector configuration, for the connector lifecycle and parameter validation, and for the thread that handles records. This should be enough to show you how to extend the tests to more elaborate scenarios. Speaking of tests, if you need to play with the connector locally, the connector implementation includes a Docker Compose file that creates a Kafka broker and a Kafka Connect server. Once both containers are up and running, you can use the example provided with the code (found in the /examples folder) to test the connector. The instructions for all of this can be found in the README of the GitHub repository.
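To give a feel for what parameter validation checks, here is a small JDK-only sketch. The class `ConfigValidationSketch` and the property names `sketch.topic` and `sketch.poll.interval.ms` are hypothetical and are not taken from the repository; a real connector would normally declare its settings with Kafka Connect's `ConfigDef` rather than validating a raw map by hand.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Sketch of validating connector properties before the connector starts. */
class ConfigValidationSketch {

    // Hypothetical property names, used only for illustration.
    static final String TOPIC = "sketch.topic";
    static final String POLL_INTERVAL_MS = "sketch.poll.interval.ms";

    /** Returns human-readable problems; an empty list means the configuration is valid. */
    static List<String> validate(Map<String, String> props) {
        List<String> errors = new ArrayList<>();

        String topic = props.get(TOPIC);
        if (topic == null || topic.trim().isEmpty()) {
            errors.add(TOPIC + " is required");
        }

        String interval = props.get(POLL_INTERVAL_MS);
        if (interval == null) {
            errors.add(POLL_INTERVAL_MS + " is required");
        } else {
            try {
                if (Long.parseLong(interval.trim()) <= 0) {
                    errors.add(POLL_INTERVAL_MS + " must be greater than zero");
                }
            } catch (NumberFormatException e) {
                errors.add(POLL_INTERVAL_MS + " must be a whole number");
            }
        }
        return errors;
    }

    public static void main(String[] args) {
        System.out.println(validate(Map.of(TOPIC, "events", POLL_INTERVAL_MS, "5000")));
        System.out.println(validate(Map.of(POLL_INTERVAL_MS, "soon")));
    }
}
```

Returning every problem at once, instead of failing on the first one, makes it easy for a test to assert on the exact set of errors a bad configuration should produce.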

Would you like to debug the connector code using your favorite Java IDE debugging tool? You are in for a treat there, too. The Docker Compose file was carefully configured to enable remote debugging via JDWP (the Java Debug Wire Protocol). Whenever you need to debug the code with breakpoints from your IDE, just start a remote debugging session pointing to localhost:8888. This is the endpoint where the Kafka Connect server has been configured to listen for JDWP connections. This is useful if you use the code as the basis for your own connector and want to make sure that everything works as expected.

To the Cloud and Beyond

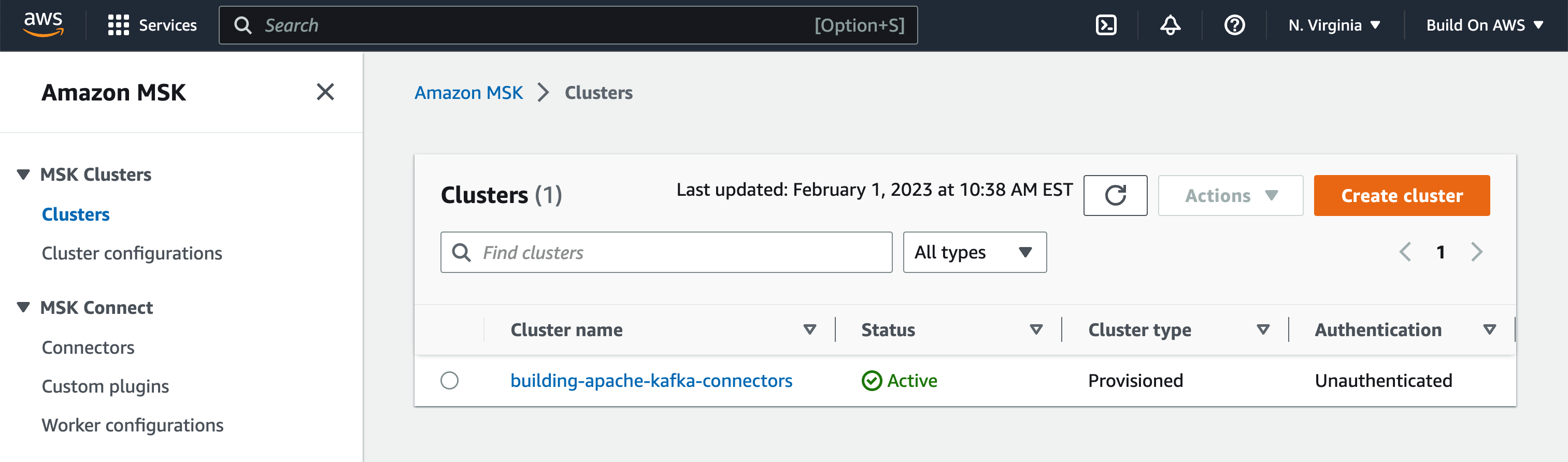

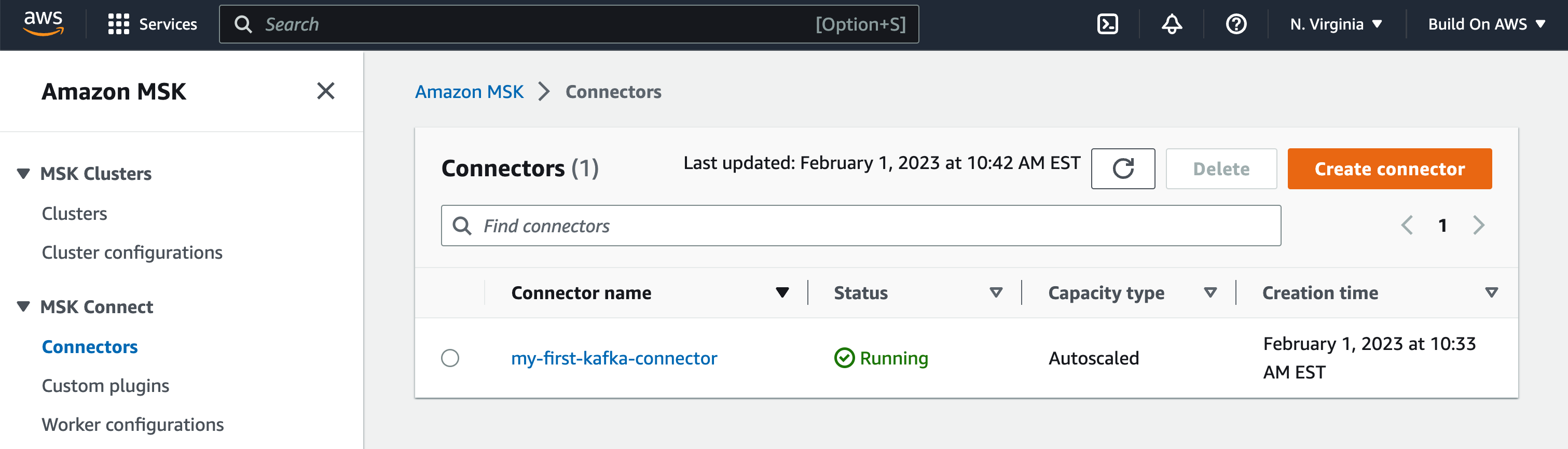

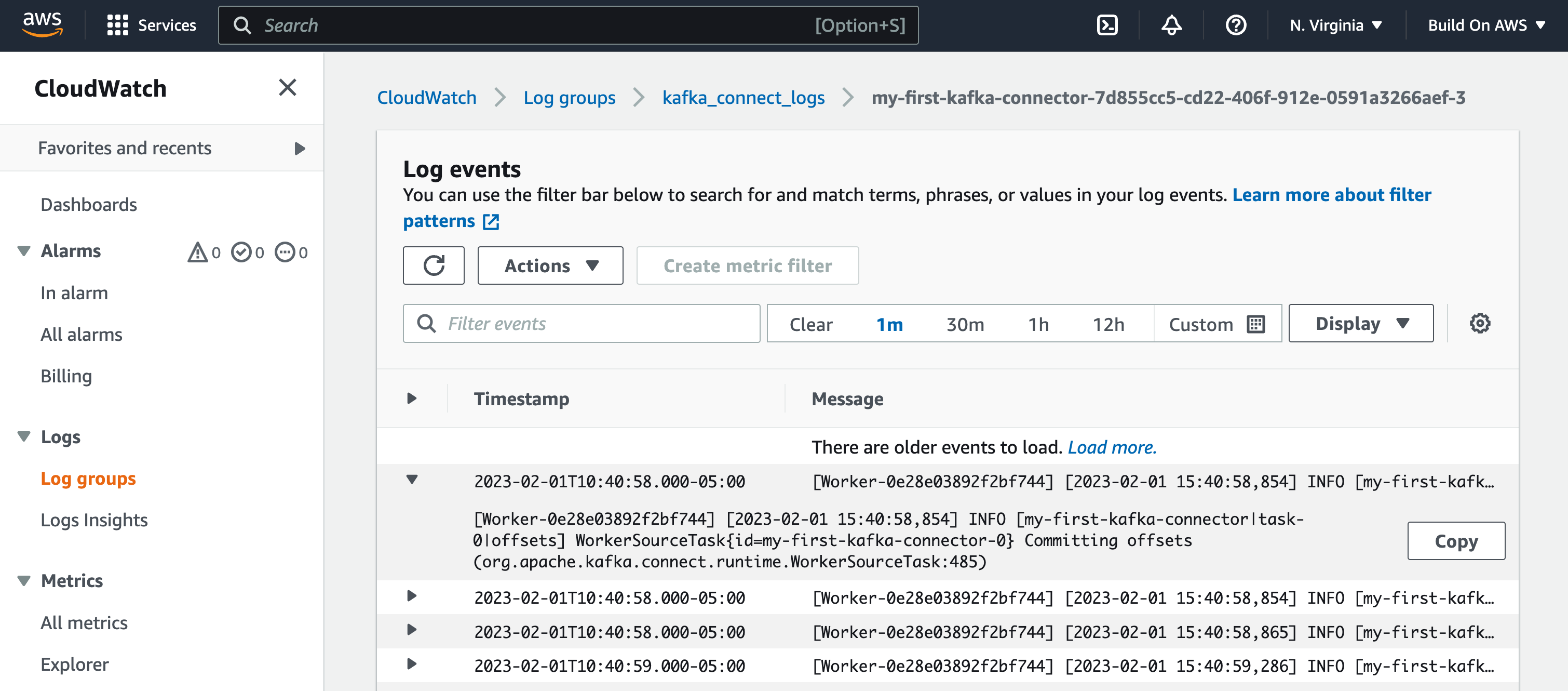

Another cool feature added to the connector implementation is a complete example of how to deploy the connector using Terraform. Within a few minutes, you can have the connector code up and running on AWS, with a Kafka cluster created for you on Amazon MSK and the connector properly deployed on Amazon MSK Connect. Once everything is fully deployed, the code also creates a bastion server that you can connect to from your machine using SSH to verify that everything works as it is supposed to.

Do You Like Video Tutorials?

If you are more of a visual person who likes to follow the steps to build something with a nicely recorded tutorial, then I would encourage you to subscribe to the Build On AWS YouTube channel. This is the place to go for deep dives into code and architecture, with people from the AWS community sharing their expertise in a visual and entertaining manner.

