Data-First IDP: Driving AI Innovation in Developer Platforms

A Data-First IDP integrates governance, traceability, and quality into workflows, transforming how data is managed and enabling scalable, AI-ready ecosystems.

Traditional internal developer platforms (IDPs) have transformed how organizations manage code and infrastructure. By standardizing workflows through tools like CI/CD pipelines and Infrastructure as Code (IaC), these platforms have enabled rapid deployments, reduced manual errors, and improved developer experience. However, their focus has primarily been on operational efficiency, often treating data as an afterthought.

This omission becomes critical in today's AI-driven landscape. While traditional IDPs excel at managing infrastructure, they fall short when it comes to the foundational elements required for scalable and compliant AI innovation:

- Governance: Ensuring data complies with policies and regulatory standards is often a manual or siloed effort.

- Traceability: Tracking data lineage and transformations across workflows is inconsistent, if not entirely missing.

- Quality: Validating data for reliability and AI readiness lacks automation and standardization.

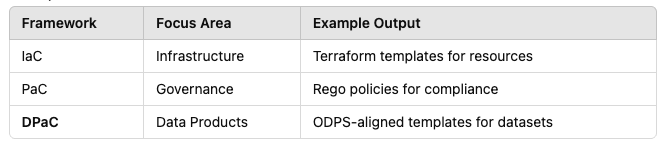

To meet these challenges, data must be elevated to a first-class citizen within the IDP. A data-first IDP goes beyond IaC, directly embedding governance, traceability, quality, and Policy as Code (PaC) into the platform's core. This approach transforms traditional automation into a comprehensive framework that operationalizes data workflows alongside infrastructure, enabling Data Products as Code (DPaC).

This architecture supports frameworks like the Open Data Product Specification (ODPS) and the Open Data Contract (ODC), which standardize how data products are defined and consumed.

While resource identifiers (RIDs) are critical in enabling traceability and interoperability, the heart of the data-first IDP lies in meta-metadata, which provides the structure, rules, and context necessary for scalable and compliant data ecosystems.

The Data-First Approach: Extending Automation

Templates and recipes are critical technologies that enable the IDP to achieve a high level of abstraction and componentize the system landscape.

A recipe is a parameterized configuration, expressed as IaC, that defines how specific resources or workloads are provisioned, deployed, or managed within the platform. Recipes are reusable and can be customized to fit particular contexts or environments, ensuring standardization while allowing flexibility for specific use cases.

A template is a group of recipes that forms a "Golden Path" for developers. It captures an architectural design pattern, such as a data ingestion pattern for streaming, API, or file sources, and creates a manifest that is built, validated, and executed in the delivery plane.

A data-first IDP adds the "Data Product" specification as a component, a resource, and therefore a recipe; this could be a parameterized version of the ODPS and ODC.
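As a minimal sketch of how recipes and a template might fit together, the Python below models a recipe as a parameterized resource definition and a template as a group of recipes that renders a manifest. The class names (`Recipe`, `GoldenPathTemplate`), the field names, and the example parameters are assumptions made for illustration; they are not taken from ODPS, ODC, or any particular IDP product.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class Recipe:
    """A parameterized definition of one resource (hypothetical shape)."""
    name: str
    resource_type: str
    body: dict  # string values may contain $placeholders

    def render(self, params: dict) -> dict:
        # Substitute parameters into every string value; other values pass through.
        rendered = {
            key: Template(value).substitute(params) if isinstance(value, str) else value
            for key, value in self.body.items()
        }
        return {"name": self.name, "type": self.resource_type, **rendered}


@dataclass
class GoldenPathTemplate:
    """A group of recipes that together describe one design pattern."""
    name: str
    recipes: list

    def build_manifest(self, params: dict) -> dict:
        # The manifest is what a delivery plane would build, validate, and execute.
        return {
            "template": self.name,
            "resources": [recipe.render(params) for recipe in self.recipes],
        }


# Hypothetical file-ingestion pattern with a storage recipe and a data product recipe.
file_ingestion = GoldenPathTemplate(
    name="file-ingestion",
    recipes=[
        Recipe("landing-bucket", "storage",
               {"bucket": "$domain-landing", "versioned": True}),
        Recipe("data-product", "data-product",
               {"owner": "$owner", "contract": "$domain/contract.yaml"}),
    ],
)

manifest = file_ingestion.build_manifest(
    {"domain": "customer-transactions", "owner": "erp-team"}
)
```

In this sketch a missing parameter raises `KeyError` at render time, which is one simple way to fail early before a manifest reaches the delivery plane.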

The lifecycle and management of software are far more mature than those of data. The concept of DPaC goes a long way toward changing this; it aligns the maturity of data management with the well-established principles of software engineering.

DPaC transforms data management by treating data as a programmable, enforceable asset, aligning its lifecycle with proven software development practices. By bridging the maturity gap between data and software, DPaC empowers organizations to scale data-driven operations with confidence, governance, and agility. As IaC revolutionized infrastructure, DPaC is poised to redefine how we manage and trust our data.

The Data Marketplace, discussed in the previous article, is likewise a component, a resource, and a recipe. It may rely on other services, such as observability, a data quality service, and a graph database, which are also components and part of the CI/CD pipeline.

Governance and Engineering Baseline

Governance and engineering baselines can be codified into policies that are managed, versioned, and enforced programmatically through PaC. By embedding governance rules and engineering standards into machine-readable formats (e.g., YAML, JSON, Rego), compliance is automated and consistency is maintained across resources.

- Governance policies: Governance rules define compliance requirements, access controls, data masking, retention policies, and more. These ensure that organizational and regulatory standards are consistently applied.

- Engineering baselines: Baselines establish the minimum technical standards for infrastructure, applications, and data workflows, such as resource configurations, pipeline validation steps, and security protocols.
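To make the idea of machine-readable policies concrete, here is a small sketch in which governance rules and an engineering baseline are stored as JSON and evaluated against a resource description. The policy schema (`applies_to`, `require`, `equals`, `max`), the policy IDs, and the resource fields are all hypothetical; a real platform would more likely use a dedicated policy engine and its own rule language.

```python
import json

# Hypothetical policies: two governance rules and one engineering baseline.
POLICIES_JSON = """
[
  {"id": "gov-001", "kind": "governance", "applies_to": "data-product",
   "require": {"field": "retention_days", "max": 365}},
  {"id": "gov-002", "kind": "governance", "applies_to": "data-product",
   "require": {"field": "pii_masked", "equals": true}},
  {"id": "eng-001", "kind": "engineering", "applies_to": "storage",
   "require": {"field": "encrypted", "equals": true}}
]
"""


def evaluate(resource: dict, policies: list) -> list:
    """Return the IDs of the policies that the resource violates."""
    violations = []
    for policy in policies:
        if policy["applies_to"] != resource.get("type"):
            continue  # policy targets a different resource type
        rule = policy["require"]
        value = resource.get(rule["field"])
        if "equals" in rule and value != rule["equals"]:
            violations.append(policy["id"])
        elif "max" in rule and (value is None or value > rule["max"]):
            violations.append(policy["id"])
    return violations


policies = json.loads(POLICIES_JSON)
product = {"type": "data-product", "retention_days": 730, "pii_masked": True}
print(evaluate(product, policies))  # ['gov-001']
```

Because the policies are plain data, they can be versioned and reviewed in the same repository as the recipes they govern.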

The Role of RIDs

While meta-metadata drives the data-first IDP, RIDs operationalize its principles by providing unique references for all data-related resources. RIDs ensure the architecture supports traceability, quality, and governance across the ecosystem.

- Facilitating lineage: RIDs are unique references for data products, storage, and compute resources, allowing external tools to trace dependencies and transformations.

- Simplifying observability: Because every object carries a unique reference, it can be tracked across the landscape.

Example RID Format

rid:<context>:<resource-type>:<resource-name>:<version>

- Data product RID: rid:customer-transactions:data-product:erp-a:v1.0

- Storage RID: rid:customer-transactions:storage:s3-bucket-a:v1.0
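The format above can be parsed and validated with very little code. The following is a sketch only, assuming exactly four colon-separated fields after the `rid` prefix and no colons inside any field; the `RID` class and its method names are illustrative, not part of any published specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RID:
    """rid:<context>:<resource-type>:<resource-name>:<version>"""
    context: str
    resource_type: str
    resource_name: str
    version: str

    @classmethod
    def parse(cls, text: str) -> "RID":
        parts = text.split(":")
        # Expect the literal prefix plus four non-empty fields.
        if len(parts) != 5 or parts[0] != "rid" or not all(parts[1:]):
            raise ValueError(f"not a valid RID: {text!r}")
        return cls(*parts[1:])

    def __str__(self) -> str:
        return (f"rid:{self.context}:{self.resource_type}:"
                f"{self.resource_name}:{self.version}")


product = RID.parse("rid:customer-transactions:data-product:erp-a:v1.0")
storage = RID.parse("rid:customer-transactions:storage:s3-bucket-a:v1.0")

# Frozen dataclasses are hashable, so RIDs can key a simple lineage map.
lineage = {product: [storage]}
```

Keeping the RID as a typed value rather than a raw string means malformed references are rejected at the point where they enter the platform.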

Centralized Management and Federated Responsibility With Community Collaboration

A data-first IDP balances centralized management, federated responsibility, and community collaboration to create a scalable, adaptable, and compliant platform. Centralized governance provides the foundation for consistency and control, while federated responsibility empowers domain teams to innovate and take ownership of their data products. Integrating a community-driven approach yields a framework that evolves dynamically to meet real-world needs, leveraging collective expertise to refine policies, templates, and recipes.

Centralized Management: A Foundation for Consistency

Centralized governance defines global standards, such as compliance, security, and quality rules, and manages critical infrastructure like unique RIDs and metadata catalogs. This layer provides the tools and frameworks that enable decentralized execution.

Standardized Policies

Global policies are codified using PaC and integrated into workflows for automated enforcement.

Federated Responsibility: Shift-Left Empowerment

Responsibility and accountability are delegated to domain teams, enabling them to customize templates, define recipes, and manage data products closer to their sources. This shift-left approach ensures compliance and quality are applied early in the lifecycle while maintaining flexibility:

- Self-service workflows: Domain teams use self-service tools to configure resources, with policies applied automatically in the background.

- Customization within guardrails: Teams can adapt central templates and policies to fit their context, such as extending governance rules for domain-specific requirements.

- Real-time validation: Automated feedback ensures non-compliance is flagged early, reducing errors and fostering accountability.

Community Collaboration: Dynamic and Adaptive Governance

The environment encourages collaboration to evolve policies, templates, and recipes based on real-world needs and insights. This decentralized innovation layer ensures the platform remains relevant and adaptable:

- Contributions and feedback: Domain teams contribute new recipes or propose policy improvements through version-controlled repositories or pull requests.

- Iterative improvement: Cross-domain communities review and refine contributions, ensuring alignment with organizational goals.

- Recognition and incentives: Teams are incentivized to share best practices and reusable artifacts, fostering a culture of collaboration.

Automation as the Enabler

Automation ensures that governance and standards are consistently applied across the platform, preventing deviation over time. Policies and RIDs are managed programmatically, enabling:

- Compliance at scale: New policies are integrated seamlessly, validated early, and enforced without manual intervention.

- Measurable outcomes

Extending the Orchestration and Adding the Governance Engine

A data-first IDP extends the orchestration engine to automate data-centric workflows and introduces a governance engine to enforce compliance and maintain standards dynamically.

Orchestration Enhancements

- Policy integration: Validates governance rules (PaC) during workflows, blocking non-compliant deployments.

- Resource awareness: Uses RIDs to trace and enforce lineage, quality, and compliance.

- Data automation: Automates schema validation, metadata enrichment, and lineage registration.
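One way to picture these enhancements is as ordered steps in a deployment workflow, where any step can block the run. The sketch below is an assumption-laden illustration: the step functions, the `DeploymentBlocked` exception, the resource fields, and the single hard-coded policy rule are all hypothetical stand-ins for what a real orchestration engine would provide.

```python
class DeploymentBlocked(Exception):
    """Raised when a workflow step rejects the deployment."""


def check_policies(resource: dict) -> None:
    # Stand-in for a PaC evaluation: one hard-coded rule for illustration.
    if not resource.get("owner"):
        raise DeploymentBlocked(f"{resource['rid']}: missing owner")


def validate_schema(resource: dict) -> None:
    missing = set(resource["required_columns"]) - set(resource["columns"])
    if missing:
        raise DeploymentBlocked(
            f"{resource['rid']}: missing columns {sorted(missing)}"
        )


def enrich_metadata(resource: dict) -> dict:
    # Add derived metadata without mutating the input.
    return {**resource, "column_count": len(resource["columns"])}


def register_lineage(resource: dict, lineage: dict) -> None:
    # Record which upstream RIDs this resource depends on.
    lineage[resource["rid"]] = list(resource.get("upstream", []))


def deploy(resource: dict, lineage: dict) -> dict:
    check_policies(resource)        # policy integration
    validate_schema(resource)       # data automation: schema validation
    enriched = enrich_metadata(resource)
    register_lineage(enriched, lineage)  # resource awareness via RIDs
    return enriched


lineage_registry = {}
deployed = deploy(
    {
        "rid": "rid:customer-transactions:data-product:erp-a:v1.0",
        "owner": "erp-team",
        "columns": ["id", "amount", "currency"],
        "required_columns": ["id", "amount"],
        "upstream": ["rid:customer-transactions:storage:s3-bucket-a:v1.0"],
    },
    lineage_registry,
)
```

Running the policy check first keeps non-compliant resources from ever reaching the lineage registry.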

Governance Engine

- Centralized policies: Defines compliance rules as PaC and applies them automatically.

- Dynamic enforcement: Monitors and remediates non-compliance, preventing drift from standards.

- Real-time feedback: Provides developers with actionable insights during deployment.
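Dynamic enforcement can be thought of as comparing the state a policy requires with the state actually observed, then correcting and reporting the difference. The sketch below assumes both states are flat dictionaries; the function names and the example settings are hypothetical, and a real governance engine would act on live resources rather than in-memory copies.

```python
def detect_drift(desired: dict, observed: dict) -> dict:
    """Return settings whose observed value differs from the desired one."""
    return {
        key: {"expected": expected, "actual": observed.get(key)}
        for key, expected in desired.items()
        if observed.get(key) != expected
    }


def remediate(desired: dict, observed: dict) -> tuple:
    """Return a corrected copy of the observed state plus feedback messages."""
    drift = detect_drift(desired, observed)
    corrected = {**observed, **{key: d["expected"] for key, d in drift.items()}}
    feedback = [
        f"{key}: expected {d['expected']!r}, found {d['actual']!r}"
        for key, d in drift.items()
    ]
    return corrected, feedback


desired_state = {"encrypted": True, "retention_days": 365}
observed_state = {"encrypted": False, "retention_days": 365, "region": "eu-west-1"}

corrected_state, feedback = remediate(desired_state, observed_state)
print(feedback)  # ['encrypted: expected True, found False']
```

Settings that the policy does not mention, such as `region` here, are left untouched, so remediation stays limited to what governance actually requires.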

Together, these engines ensure proactive compliance, scalability, and developer empowerment by embedding governance into workflows, automating traceability, and maintaining standards over time.

The Business Impact

- Governance at scale: Meta-metadata and ODC ensure compliance rules are embedded and enforced across all data products.

- Improved productivity: Golden paths reduce cognitive load, allowing developers to deliver faster without compromising quality or compliance.

- Trust and transparency: ODPS and RIDs ensure that data products are traceable and reliable, fostering stakeholder trust.

- AI-ready ecosystems: The framework enables reliable AI model training and operationalization by reducing data preparation effort and by commoditizing data together with the information that adds value and resilience to the solution.

The success of a data-first IDP hinges on meta-metadata, which provides the foundation for governance, quality, and traceability. Supported by frameworks like ODPS and ODC and operationalized through RIDs, this architecture reduces complexity for developers while meeting the business's needs for scalable, compliant data ecosystems. By embedding smart abstractions and modularity, the data-first IDP is ready to power the next generation of AI-driven innovation.

Opinions expressed by DZone contributors are their own.
