Determine Payload Size Using Nginx

We wanted to evaluate which protocol best suits our micro-service architecture, as our payload size was growing considerably.

Nowadays most applications are API-based or distributed: a single request is served not by one application but by a series of applications, working either in parallel or in sequence. Applications talk to each other using various protocols such as REST, RPC, and WebSocket, and the payload formats vary: JSON, XML, binary, or proprietary.

Recently we wanted to evaluate which protocol best suits our micro-service architecture, as our payload size was growing considerably. To decide, we needed to know the maximum and minimum payload sizes, since our payload size is directly proportional to the number of items selected.

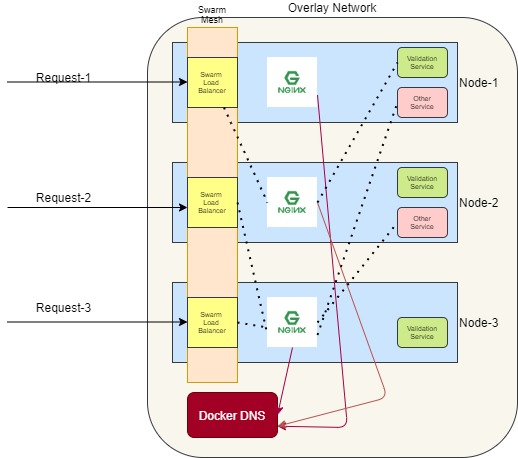

Our tech stack is open source, and our deployment is a containerized, polyglot micro-service architecture running on Docker Swarm. Some services use REST and some use gRPC (protobuf); the payloads are JSON, JSON+gzip, and binary. To determine the payload size, we needed a common proxy able to serve both HTTP and gRPC requests. After looking at the available tools, we decided to front our swarm services with Nginx so that we could capture the payload size and log each service's requests separately.
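To make the relationship between item count and payload size concrete, here is a minimal Python sketch. The payload shape (`items`, `id`, `name`, `quantity`) is purely hypothetical and is not our real schema; it only illustrates how a JSON payload grows with the number of items, and how JSON+gzip compares for the same data.

```python
import gzip
import json


def payload_sizes(item_count):
    """Return (raw_json_bytes, gzipped_json_bytes) for a hypothetical payload."""
    # Hypothetical payload shape: one entry per selected item.
    payload = {
        "items": [
            {"id": i, "name": f"item-{i}", "quantity": 1}
            for i in range(item_count)
        ]
    }
    raw = json.dumps(payload).encode("utf-8")
    return len(raw), len(gzip.compress(raw))


if __name__ == "__main__":
    for count in (1, 10, 100, 1000):
        raw_size, gz_size = payload_sizes(count)
        print(f"{count:>5} items: JSON {raw_size} bytes, JSON+gzip {gz_size} bytes")
```

Numbers from a synthetic payload like this are only indicative; the sizes that matter are the ones observed on real traffic, which is what the proxy setup is for.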

Let's start with how to configure Nginx for this. The first challenge is to serve all payload types, so we use the stream module rather than the HTTP module. Each service gets a dedicated incoming port and its own upstream. Each service also gets a separate log file, because Nginx can only log the upstream IP address. If we combined the logs of all services, it would be difficult to tell the services apart by IP, since these are container IPs, which can change when a service restarts or scales.

Nginx configuration:

    worker_processes 1;

    events {
        worker_connections 1024;
    }

    stream {
        # One upstream per swarm service
        upstream stream_backend_service1 {
            server swarm_service1:8080;
        }

        upstream stream_backend_service2 {
            server swarm_service2:9090;
        }

        # Bytes sent to and received from the upstream server
        log_format combined '$upstream_addr = Request Payload Size - $upstream_bytes_sent Response Payload Size - $upstream_bytes_received';

        # Dedicated port and log file for service 1
        server {
            listen 127.0.0.1:8090;
            proxy_pass stream_backend_service1;
            access_log logs/payloadsize.log combined;
        }

        # Dedicated port and log file for service 2
        server {
            listen 127.0.0.1:8091;
            proxy_pass stream_backend_service2;
            access_log logs/payloadsize_server2.log combined;
        }
    }
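With more than a couple of services, writing one `upstream` and one `server` block per service by hand gets repetitive. As a rough sketch, the same pattern could be generated from a mapping of service names to upstream addresses. The function name `render_stream_config`, the short service names, and the `payloadsize_<name>.log` file naming are all assumptions made for this illustration; they are not part of Nginx or of our actual setup.

```python
def render_stream_config(services, listen_host="127.0.0.1", first_port=8090):
    """Render an Nginx stream block with one listen port and log file per service.

    `services` maps a (hypothetical) short service name to its upstream "host:port".
    Ports are assigned sequentially starting at `first_port`.
    """
    lines = ["stream {"]
    for name, upstream in services.items():
        lines += [
            f"    upstream stream_backend_{name} {{",
            f"        server {upstream};",
            "    }",
        ]
    # Same log format as in the hand-written configuration.
    lines.append(
        "    log_format combined '$upstream_addr = Request Payload Size - "
        "$upstream_bytes_sent Response Payload Size - $upstream_bytes_received';"
    )
    for offset, name in enumerate(services):
        lines += [
            "    server {",
            f"        listen {listen_host}:{first_port + offset};",
            f"        proxy_pass stream_backend_{name};",
            f"        access_log logs/payloadsize_{name}.log combined;",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_stream_config({
        "service1": "swarm_service1:8080",
        "service2": "swarm_service2:9090",
    }))
```

The output still needs the `worker_processes` and `events` sections around it, and should be checked with `nginx -t` before use.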

With this configuration in place, each service's log file contains entries like the following.

payloadsize.log

    10.0.1.2:8080 = Request Payload Size - 377 Response Payload Size - 180

payloadsize_server2.log

    10.0.1.3:9090 = Request Payload Size - 837 Response Payload Size - 282
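Since the goal is the minimum and maximum payload size, the log lines need to be aggregated. Below is a minimal Python sketch that parses lines in the format shown above and reports the smallest and largest values. The names `LINE_RE` and `summarize` are made up for this example, and the second sample line is invented test data, not a real measurement. It assumes one upstream address per line (no retries against multiple servers). Keep in mind that in stream mode Nginx writes one log entry per TCP connection, so the byte counts cover everything sent on that connection, including protocol headers.

```python
import re

# Matches the log_format used in the configuration above.
# Accepts either a plain hyphen or an en dash as the separator.
LINE_RE = re.compile(
    r"^(?P<upstream>\S+) = Request Payload Size [-–] (?P<sent>\d+) "
    r"Response Payload Size [-–] (?P<received>\d+)$"
)


def summarize(lines):
    """Return min/max request and response sizes, or None if no line matches."""
    requests, responses = [], []
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skip blank or unrelated lines
        requests.append(int(match.group("sent")))
        responses.append(int(match.group("received")))
    if not requests:
        return None
    return {
        "request_min": min(requests),
        "request_max": max(requests),
        "response_min": min(responses),
        "response_max": max(responses),
    }


if __name__ == "__main__":
    sample = [
        "10.0.1.2:8080 = Request Payload Size - 377 Response Payload Size - 180",
        "10.0.1.2:8080 = Request Payload Size - 512 Response Payload Size - 96",  # invented line
    ]
    print(summarize(sample))
```

To run it against a real log, pass an open file object instead of the sample list, for example `summarize(open("logs/payloadsize.log"))`.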

Here swarm_service1 uses REST over HTTP and swarm_service2 uses gRPC.

We hope this helps others who want to discover the benefits of Nginx.

Happy coding.

Published at DZone with permission of Milind Deobhankar. See the original article here.
