Docker Compose + NATS: Microservices Development Made Easy

How to keep Docker environments lean and scalable using the open source NATS messaging protocol.


Buzzwords are everywhere in our industry. “Microservices”, “Serverless computing”, “Nanoservices”, “Containerized”: you could fill a whole blog post with these overused phrases alone. If you cut through the terminology flavor of the month, there are some very important common threads for application developers. One is simplicity. Whatever architectural approach you’re using, and whatever you may or may not call it, you want it to just work. You don’t want to spend days trying to get various pieces of your infrastructure up and running. Another important need is performance at scale, which requires both low latency and high throughput. Fast solutions are great, but they are not much good if they cannot scale up to meet the demands of users. Low resource utilization is nice, but what good is it without speed?

Simplicity and performance are even more important to keep in mind when your infrastructure runs Docker containers. The whole point of using containers in the first place is to have a decoupled, scalable, dependency-free set of services that just work. Why use a messaging layer that isn’t designed for cloud or containers? Enter NATS.

The NATS Docker image is incredibly simple and has a very lightweight footprint. Imagelayers.io is a great tool by CenturyLink that scans Docker images and reports back on their layers (the components defined in the Dockerfile) and their sizes. See here for a comparison of NATS to a few other messaging systems.

At just a few MB and a handful of layers, NATS keeps your Docker environment lean and scalable; you won’t even notice it running in your container. Because NATS has no external dependencies and uses a simple plain-text messaging protocol, it just works, regardless of what your current or future infrastructure may look like.
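To make “plain-text protocol” concrete, here is a small Go sketch that builds the `SUB` and `PUB` lines a client writes to the server (compare the `SUB tasks 1` line in the server trace later in this post). It only formats strings and opens no connection. The helper names `subCommand` and `pubCommand` and the `_INBOX.example` reply subject are illustrative assumptions; the layouts follow the NATS client protocol: `SUB <subject> [queue group] <sid>` and `PUB <subject> [reply-to] <#bytes>` followed by the payload, with every line ending in CRLF.

```go
package main

import (
	"fmt"
	"strings"
)

// crlf terminates every NATS protocol line.
const crlf = "\r\n"

// subCommand builds a SUB line: SUB <subject> [queue group] <sid>.
// (Hypothetical helper for illustration.)
func subCommand(subject, queue, sid string) string {
	if queue == "" {
		return fmt.Sprintf("SUB %s %s%s", subject, sid, crlf)
	}
	return fmt.Sprintf("SUB %s %s %s%s", subject, queue, sid, crlf)
}

// pubCommand builds a PUB line followed by its payload:
// PUB <subject> [reply-to] <#bytes>\r\n<payload>\r\n
// (Hypothetical helper for illustration.)
func pubCommand(subject, replyTo string, payload []byte) string {
	var b strings.Builder
	b.WriteString("PUB " + subject + " ")
	if replyTo != "" {
		b.WriteString(replyTo + " ")
	}
	fmt.Fprintf(&b, "%d%s", len(payload), crlf)
	b.Write(payload)
	b.WriteString(crlf)
	return b.String()
}

func main() {
	// The same kind of traffic the worker and API server exchange in this example.
	fmt.Printf("%q\n", subCommand("tasks", "", "1"))                                  // "SUB tasks 1\r\n"
	fmt.Printf("%q\n", pubCommand("tasks", "_INBOX.example", []byte("help please"))) // "PUB tasks _INBOX.example 11\r\nhelp please\r\n"
}
```

Because the payload is length-prefixed, the server never has to parse it, which is part of what keeps the protocol both simple and fast.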

The simplicity of containers is perfectly aligned with the simplicity of NATS, but what about scale? If you’re looking to have a bunch of decoupled services functioning in real time, NATS is a great option. Various benchmarks have shown a single NATS server sending more than 10 million messages per second.

Now, onto the fun stuff!

Docker Compose + NATS.io

Full example: https://gist.github.com/wallyqs/7f72efdc3fd6371364f8b28cbe32c5ee

In this basic example, we will have a simple NATS-based microservice setup consisting of:

- An HTTP API external users of the service can make requests against

- A worker which processes the tasks being dispatched by the API server

The frontend HTTP API exposes a `/createTask` endpoint to which we can send requests and receive a response. Internally, the server sends a NATS request to the “tasks” subject and waits for a worker subscribed to that subject to reply once it has finished processing the request, or times out if no response comes back within 5 seconds.

For this example, the API server (api-server.go) looks like this:

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
	"time"

	// Newer releases of the Go client use the import path github.com/nats-io/nats.go.
	"github.com/nats-io/nats"
)

type server struct {
	nc *nats.Conn
}

func (s server) baseRoot(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, "Basic NATS based microservice example v0.0.1")
}

func (s server) createTask(w http.ResponseWriter, r *http.Request) {
	requestAt := time.Now()
	// Publish on "tasks" and wait up to 5 seconds for a worker to reply.
	response, err := s.nc.Request("tasks", []byte("help please"), 5*time.Second)
	if err != nil {
		log.Println("Error making NATS request:", err)
		// response is nil on error, so report the failure instead of dereferencing it.
		http.Error(w, "Error making NATS request: "+err.Error(), http.StatusServiceUnavailable)
		return
	}
	duration := time.Since(requestAt)
	fmt.Fprintf(w, "Task scheduled in %+v\nResponse: %v\n", duration, string(response.Data))
}

func (s server) healthz(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, "OK")
}

func main() {
	var s server
	var err error
	uri := os.Getenv("NATS_URI")

	// NATS may still be starting when this container comes up, so retry a few times.
	for i := 0; i < 5; i++ {
		s.nc, err = nats.Connect(uri) // assign to the outer err instead of shadowing it
		if err == nil {
			break
		}
		fmt.Println("Waiting before connecting to NATS at:", uri)
		time.Sleep(1 * time.Second)
	}
	if err != nil {
		log.Fatal("Error establishing connection to NATS:", err)
	}
	fmt.Println("Connected to NATS at:", s.nc.ConnectedUrl())

	http.HandleFunc("/", s.baseRoot)
	http.HandleFunc("/createTask", s.createTask)
	http.HandleFunc("/healthz", s.healthz)

	fmt.Println("Server listening on port 8080...")
	if err := http.ListenAndServe(":8080", nil); err != nil {
		log.Fatal(err)
	}
}
```

And the worker (worker.go), which subscribes to NATS and processes the requests, looks like this:

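```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
	"time"

	// Newer releases of the Go client use the import path github.com/nats-io/nats.go.
	"github.com/nats-io/nats"
)

func healthz(w http.ResponseWriter, r *http.Request) {
	// Write to the HTTP response; fmt.Println would print to the container's stdout instead.
	fmt.Fprintln(w, "OK")
}

func main() {
	uri := os.Getenv("NATS_URI")
	var err error
	var nc *nats.Conn

	// NATS may still be starting when this container comes up, so retry a few times.
	for i := 0; i < 5; i++ {
		nc, err = nats.Connect(uri)
		if err == nil {
			break
		}
		fmt.Println("Waiting before connecting to NATS at:", uri)
		time.Sleep(1 * time.Second)
	}
	if err != nil {
		log.Fatal("Error establishing connection to NATS:", err)
	}
	fmt.Println("Connected to NATS at:", nc.ConnectedUrl())

	// Answer every request on "tasks" by publishing to the request's reply subject.
	if _, err := nc.Subscribe("tasks", func(m *nats.Msg) {
		fmt.Println("Got task request on:", m.Subject)
		nc.Publish(m.Reply, []byte("Done!"))
	}); err != nil {
		log.Fatal("Error subscribing to 'tasks':", err)
	}
	fmt.Println("Worker subscribed to 'tasks' for processing requests...")

	fmt.Println("Server listening on port 8181...")
	http.HandleFunc("/healthz", healthz)
	if err := http.ListenAndServe(":8181", nil); err != nil {
		log.Fatal(err)
	}
}
```

Let’s say that each of these workloads has its own set of dependencies, so each one gets its own directory (`api` and `worker`) with its own Dockerfile, matching the build contexts in the Compose file below. Each Dockerfile starts from `golang:1.6.2`, copies its directory into `/go`, builds the binary, and sets it as the entrypoint. The only dependency in either case is the NATS client itself.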
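Both programs expose a `/healthz` endpoint. A handler that prints to stdout instead of writing to the `http.ResponseWriter` still compiles, so a quick in-process check with the standard library’s `net/http/httptest` package is cheap insurance. A minimal sketch, where `checkHealthz` is a hypothetical helper name:

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// healthz writes its status to the ResponseWriter, not to stdout.
func healthz(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, "OK")
}

// checkHealthz calls the handler in-process and returns the body and status code.
func checkHealthz() (string, int) {
	req := httptest.NewRequest(http.MethodGet, "/healthz", nil)
	rec := httptest.NewRecorder()
	healthz(rec, req)
	return rec.Body.String(), rec.Code
}

func main() {
	body, code := checkHealthz()
	fmt.Printf("%d %q\n", code, body) // 200 "OK\n"
}
```

No container or NATS server is needed for this check, so it can run with a plain `go test` before the images are built.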

Code examples for api-server.go and worker.go are here: https://gist.github.com/wallyqs/7f72efdc3fd6371364f8b28cbe32c5ee

First, in our Docker Compose file (build.yml), we will declare how to build our dev setup and have it run as containers managed by the Docker engine:

```yaml
version: "2"

services:
  nats:
    image: 'nats:0.8.0'
    entrypoint: "/gnatsd -DV"
    expose:
      - "4222"
    ports:
      - "8222:8222"
    hostname: nats-server
  api:
    build:
      context: "./api"
    entrypoint: /go/api-server
    links:
      - nats
    environment:
      - "NATS_URI=nats://nats:4222"
    depends_on:
      - nats
    ports:
      - "8080:8080"
  worker:
    build:
      context: "./worker"
    entrypoint: /go/worker
    links:
      - nats
    environment:
      - "NATS_URI=nats://nats:4222"
    depends_on:
      - nats
    ports:
      - "8181:8181"
```

Note that `depends_on` only controls start order; it does not wait for NATS to accept connections, which is why both programs retry their connection a few times.

Next, we use `docker-compose -f build.yml build` to build the images first:

$ docker-compose -f build.yml up

nats uses an image, skipping

Building api

Step 1 : FROM golang:1.6.2

---> 5850add7ecc2

Step 2 : COPY . /go

---> 188fa03a7cf5

Removing intermediate container 37ee6fd35e0d

Step 3 : RUN go build api-server.go

---> Running in 048e4010e539

---> 21dbaf567bbb

Removing intermediate container 048e4010e539

Step 4 : EXPOSE 8080

---> Running in b07dde665ad2

---> 3a6a59102f81

Removing intermediate container b07dde665ad2

Step 5 : ENTRYPOINT /go/api-server

---> Running in e3c5de88d6d4

---> faf4d12797f3

Removing intermediate container e3c5de88d6d4

Successfully built faf4d12797f3

Building worker

Step 1 : FROM golang:1.6.2

---> 5850add7ecc2

Step 2 : COPY . /go

---> Using cache

---> 0376e8a54e9d

Step 3 : RUN go build worker.go

---> Using cache

---> 1a53e068ad91

Step 4 : EXPOSE 8080

---> Using cache

---> 77b8a0e0ab4f

Step 5 : ENTRYPOINT /go/worker

---> Using cache

---> 7d23b0d4e846

Successfully built 7d23b0d4e846$ docker-compose -f build.yml up

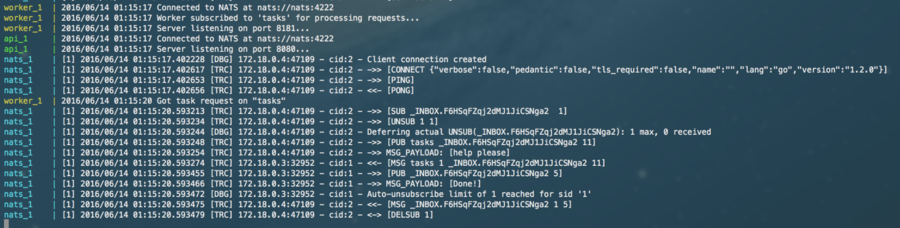

nats_1 | [1] 2016/06/14 01:30:25.563332 [INF] Starting nats-server version 0.8.0

nats_1 | [1] 2016/06/14 01:30:25.564371 [DBG] Go build version go1.6.2

nats_1 | [1] 2016/06/14 01:30:25.564392 [INF] Listening for client connections on 0.0.0.0:4222

nats_1 | [1] 2016/06/14 01:30:25.564444 [DBG] Server id is VYcRrgxx6c097Tq62NIIVp

nats_1 | [1] 2016/06/14 01:30:25.564451 [INF] Server is ready

nats_1 | [1] 2016/06/14 01:30:25.799723 [DBG] 172.18.0.3:32999 - cid:1 - Client connection created

nats_1 | [1] 2016/06/14 01:30:25.800213 [TRC] 172.18.0.3:32999 - cid:1 - ->> [CONNECT {"verbose":false,"pedantic":false,"tls_required":false,"name":"","lang":"go","version":"1.2.0"}]

nats_1 | [1] 2016/06/14 01:30:25.800308 [TRC] 172.18.0.3:32999 - cid:1 - ->> [PING]

nats_1 | [1] 2016/06/14 01:30:25.800311 [TRC] 172.18.0.3:32999 - cid:1 - <<- [PONG]

nats_1 | [1] 2016/06/14 01:30:25.800703 [TRC] 172.18.0.3:32999 - cid:1 - ->> [SUB tasks 1]

worker_1 | 2016/06/14 01:30:25 Connected to NATS at nats://nats:4222

worker_1 | 2016/06/14 01:30:25 Worker subscribed to 'tasks' for processing requests...

worker_1 | 2016/06/14 01:30:25 Server listening on port 8181...

nats_1 | [1] 2016/06/14 01:30:26.009136 [DBG] 172.18.0.4:47156 - cid:2 - Client connection created

nats_1 | [1] 2016/06/14 01:30:26.009536 [TRC] 172.18.0.4:47156 - cid:2 - ->> [CONNECT {"verbose":false,"pedantic":false,"tls_required":false,"name":"","lang":"go","version":"1.2.0"}]

nats_1 | [1] 2016/06/14 01:30:26.009562 [TRC] 172.18.0.4:47156 - cid:2 - ->> [PING]

nats_1 | [1] 2016/06/14 01:30:26.009566 [TRC] 172.18.0.4:47156 - cid:2 - <<- [PONG]

api_1 | 2016/06/14 01:30:26 Connected to NATS at nats://nats:4222

api_1 | 2016/06/14 01:30:26 Server listening on port 8080...In this example, we have the API server expose a `/createTask` route, and doing a quick smoke test by sending a request with curl to confirm that requests are flowing through NATS:
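The same smoke test can be scripted in Go, for example to run in CI. This sketch assumes the Compose setup is running with port 8080 published on localhost, as in `build.yml`; `smokeTest` and `checkResponse` are hypothetical helper names, and the check simply looks for the `Response: Done!` line the API server writes when the worker replies.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"strings"
)

// checkResponse accepts a 200 whose body contains the worker's reply.
func checkResponse(status int, body string) error {
	if status != http.StatusOK || !strings.Contains(body, "Response: Done!") {
		return fmt.Errorf("unexpected response (%d): %q", status, body)
	}
	return nil
}

// smokeTest sends GET <baseURL>/createTask and validates the reply.
func smokeTest(baseURL string) error {
	resp, err := http.Get(baseURL + "/createTask")
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return err
	}
	return checkResponse(resp.StatusCode, string(body))
}

func main() {
	// Assumes the Compose setup is up with port 8080 published on localhost.
	if err := smokeTest("http://localhost:8080"); err != nil {
		fmt.Println("smoke test failed:", err)
		return
	}
	fmt.Println("smoke test passed")
}
```

Keeping the response check in its own function lets it be tested without any containers running.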

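NOTE: We started the NATS server with debug and trace logging enabled (`-DV`), which is why the logs above show every protocol message. This is OK for a dev/test environment (and for the purposes of this example) but not recommended for production, as it impacts performance.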

So! There you have it. A quick example showing how simple NATS is. NATS — much like containers themselves — is all about simplicity and scalability.

Want to get involved in the NATS Community and learn more? We would be happy to hear from you, and answer any questions you may have!

Follow us on Twitter: @nats_io

Opinions expressed by DZone contributors are their own.
