Fast Deployments of Microservices Using Ansible and Kubernetes

This article demonstrates a faster way to develop Spring Boot microservices using a bare-metal Kubernetes cluster that runs on your own development machine.

Does the time your CI/CD pipeline takes to deploy hold you back during development testing?

Recipe for Success

This is the fourth article in a series on Ansible and Kubernetes. In the first post, I explained how to get Ansible up and running on a Linux virtual machine inside Windows. Subsequent posts demonstrated how to use Ansible to get a local Kubernetes cluster going on Ubuntu 20.04. The setup was tested on both native Linux and Linux virtual machines hosted on Windows. The latter approach works best when your devbox has a separate network adaptor that can be dedicated to the virtual machines.

This article builds on concepts from the previous article and was tested on a cluster consisting of one control plane and one worker. As such, a fronting proxy running HAProxy was not required and was commented out in the inventory.

The code is available on GitHub.

When to Docker and When Not to Docker

The secret to faster deployments to local infrastructure is to cut out what is not needed. For instance, does one really need to have Docker fully installed to bake images? Should one push the image produced by each build to a formal Docker repository? Is a CI/CD platform even needed?

Let us answer the last question first. Maven started life with both continuous integration and continuous deployment envisaged and should be able to replace a CI/CD platform such as Jenkins for local deployments. Now, it is widely known that all Maven problems can either be resolved by changing dependencies or by adding a plugin. We are not in jar-hell, so the answer must be a plugin. The Jib build plugin does just this for the sample Spring Boot microservice we will be deploying:

    <build>
      <plugins>
        <plugin>
          <groupId>com.google.cloud.tools</groupId>
          <artifactId>jib-maven-plugin</artifactId>
          <version>3.1.4</version>
          <configuration>
            <from>
              <image>openjdk:11-jdk-slim</image>
            </from>
            <to>
              <image>docker_repo:5000/rbuhrmann/hello-svc</image>
              <tags>
                <tag>latest10</tag>
              </tags>
            </to>
            <allowInsecureRegistries>false</allowInsecureRegistries>
          </configuration>
        </plugin>
      </plugins>
    </build>

Here we see how the Jib Maven plugin is configured to bake and push the image to a private Docker repo. However, the plugin can be steered from the command line as well. The following Ansible tasks loop over one or more Spring Boot microservices and do just that:

    - name: Git checkouts
      ansible.builtin.git:
        repo: "{{ item.git_url }}"
        dest: "~/{{ item.name }}"
        version: "{{ item.git_branch }}"
      loop: "{{ apps }}"

****************

    - name: Run JIB builds
      ansible.builtin.command: "mvn clean compile jib:buildTar -Dimage={{ item.name }}:{{ item.namespace }}"
      args:
        chdir: "~/{{ item.name }}/{{ item.jib_dir }}"
      loop: "{{ apps }}"

The first task clones the repositories, while the second builds the Docker image. However, it does not push the image to a Docker repo. Instead, it dumps it as a tarball. We are therefore halfway towards removing the Docker repo from the loop. Since our Kubernetes cluster uses containerd, a spin-off from Docker, as its container daemon, all we need is something to load the tarball directly into containerd. It turns out such an application exists. It is called ctr and can be steered from Ansible:

    - name: Load images into containerd
      ansible.builtin.command: ctr -n=k8s.io images import jib-image.tar
      args:
        chdir: "/home/ansible/{{ item.name }}/{{ item.jib_dir }}/target"
      register: ctr_out
      become: true
      loop: "{{ apps }}"

Up to this point, task execution has been on the worker node. It might seem odd to build the image on the worker node, but keep in mind that:

- This is about local testing, where there will seldom be a need for more than one K8s worker, so the build will not happen on more than one machine.

- The base image Jib builds from is smaller than the produced image that would normally be pulled from a Docker repo. This results in a faster download and a negligible upload time, since the image is loaded directly into the container daemon of the worker node.

- The time spent downloading Git and Maven is amortized over all deployments and therefore makes up a shrinking share of the total time as usage increases.

- Bypassing a CI/CD platform such as Jenkins or Git runners shared with other applications can save significantly on build and deployment time.
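To confirm that ctr actually loaded the images on the worker, one could list what containerd holds in the k8s.io namespace. The two tasks below are a minimal sketch and not part of the original playbook; the register name ctr_images is made up for illustration.

```yaml
# Sketch only: a read-only check that follows the import task.
- name: List images known to containerd in the k8s.io namespace
  ansible.builtin.command: ctr -n=k8s.io images ls -q
  register: ctr_images      # hypothetical variable name
  become: true
  changed_when: false       # listing images never changes the node

- name: Show the image references
  ansible.builtin.debug:
    var: ctr_images.stdout_lines
```

Each image built by Jib should show up in the printed list with the tag passed in through -Dimage.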

You Are Deployment, I Declare

Up to this point, I have only shown the Ansible tasks, not the variable declarations they ingest. This is an opportune time to list part of the input:

    apps:
      - name: hello1
        git_url: https://github.com/jrb-s2c-github/spinnaker_tryout.git
        jib_dir: hello_svc
        image: s2c/hello_svc
        namespace: env1
        git_branch: kustomize
        application_properties:
          application.properties: |
            my_name: LocalKubeletEnv1
      - name: hello2
        git_url: https://github.com/jrb-s2c-github/spinnaker_tryout.git
        jib_dir: hello_svc
        image: s2c/hello_svc
        namespace: env2
        config_map_path:
        git_branch: kustomize
        application_properties:
          application.properties: |
            my_name: LocalKubeletEnv2

The input lists, for each Spring Boot microservice, the DevOps characteristics that steer Ansible to clone, integrate, deploy, and orchestrate it. We already saw how Ansible handles the first three. All that remains are the Ansible tasks that create Kubernetes deployments, services, and application.properties ConfigMaps:

    - name: Create k8s namespaces
      remote_user: ansible
      kubernetes.core.k8s:
        kubeconfig: /home/ansible/.kube/config
        name: "{{ item.namespace }}"
        api_version: v1
        kind: Namespace
        state: present
      loop: "{{ apps }}"

    - name: Create application.property configmaps
      kubernetes.core.k8s:
        kubeconfig: /home/ansible/.kube/config
        namespace: "{{ item.namespace }}"
        state: present
        definition:
          apiVersion: v1
          kind: ConfigMap
          metadata:
            name: "{{ item.name }}-cm"
          data: "{{ item.application_properties }}"
      loop: "{{ apps }}"

    - name: Create deployments
      kubernetes.core.k8s:
        kubeconfig: /home/ansible/.kube/config
        namespace: "{{ item.namespace }}"
        state: present
        definition:
          apiVersion: apps/v1
          kind: Deployment
          metadata:
            creationTimestamp: null
            labels:
              app: "{{ item.name }}"
            name: "{{ item.name }}"
          spec:
            replicas: 1
            selector:
              matchLabels:
                app: "{{ item.name }}"
            strategy: { }
            template:
              metadata:
                creationTimestamp: null
                labels:
                  app: "{{ item.name }}"
              spec:
                containers:
                  - image: "{{ item.name }}:{{ item.namespace }}"
                    name: "{{ item.name }}"
                    resources: { }
                    imagePullPolicy: IfNotPresent
                    volumeMounts:
                      - mountPath: /config
                        name: config
                volumes:
                  - configMap:
                      items:
                        - key: application.properties
                          path: application.properties
                      name: "{{ item.name }}-cm"
                    name: config
          status: { }
      loop: "{{ apps }}"

    - name: Create services
      kubernetes.core.k8s:
        kubeconfig: /home/ansible/.kube/config
        namespace: "{{ item.namespace }}"
        state: present
        definition:
          apiVersion: v1
          kind: List
          items:
            - apiVersion: v1
              kind: Service
              metadata:
                creationTimestamp: null
                labels:
                  app: "{{ item.name }}"
                name: "{{ item.name }}"
              spec:
                ports:
                  - port: 80
                    protocol: TCP
                    targetPort: 8080
                selector:
                  app: "{{ item.name }}"
                type: ClusterIP
              status:
                loadBalancer: {}
      loop: "{{ apps }}"

These tasks run on the control plane and configure the orchestration of two microservices using the kubernetes.core.k8s Ansible module. To illustrate how different feature branches of the same application can be deployed simultaneously to different namespaces, the same image is used. However, each is deployed with different content in its application.properties. Different Git branches can also be specified.
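The split between work done on the worker and work done on the control plane could be expressed as two plays in a single playbook. What follows is a sketch under assumptions, not the layout of the actual repository: the group names workers and masters and the two task file names are hypothetical.

```yaml
# Sketch only: group names and task file names are assumptions.
- name: Build images and load them into containerd
  hosts: workers              # hypothetical inventory group
  remote_user: ansible
  tasks:
    - name: Clone, build with Jib, and import with ctr
      ansible.builtin.import_tasks: build_and_load.yaml   # hypothetical file

- name: Declare the Kubernetes resources
  hosts: masters              # hypothetical inventory group
  remote_user: ansible
  tasks:
    - name: Create namespaces, ConfigMaps, deployments, and services
      ansible.builtin.import_tasks: k8s_resources.yaml    # hypothetical file
```

Because the plays run in order, the images are already present in the container runtime of the worker by the time the deployments are created.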

It should be noted that nothing prevents us from deploying two or more microservices into a single namespace to provide the backend services for a modern JavaScript frontend.

The imagePullPolicy is set to "IfNotPresent". Since ctr already loaded the image directly into the container runtime, there is no need to pull the image from a Docker repo.
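Should an image be missing from the container runtime, the pod will not start, so it can help to let Ansible wait until each deployment reports that it is available. The task below is a minimal sketch using the kubernetes.core.k8s_info module. It is not part of the original playbook, and the timeout value is an arbitrary choice.

```yaml
# Sketch only: blocks until each Deployment has the Available condition.
- name: Wait for each deployment to become available
  kubernetes.core.k8s_info:
    kubeconfig: /home/ansible/.kube/config
    api_version: apps/v1
    kind: Deployment
    name: "{{ item.name }}"
    namespace: "{{ item.namespace }}"
    wait: true
    wait_condition:
      type: Available
      status: "True"
    wait_timeout: 120         # seconds; arbitrary value for illustration
  loop: "{{ apps }}"
```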

Ingress Routing

Ingress instances are used to expose microservices from multiple namespaces to clients outside of the cluster. The declaration of the Ingress and its routing rules is lower down in the input declaration partially listed above:

    ingress:
      host: www.demo.io
      rules:
        - service: hello1
          namespace: env1
          ingress_path: /env1/hello
          service_path: /
        - service: hello2
          namespace: env2
          ingress_path: /env2/hello
          service_path: /

Note that the DNS name should either be under your control or not be registered on any DNS server anywhere in the world. If it is registered to someone else, traffic might be sent out of the cluster to the IP address it resolves to.
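For local testing, one way to make sure the host name resolves to the cluster is an entry in /etc/hosts on the machine the clients run on. The task below is a sketch of that idea and not part of the original playbook. The variable ingress_ip is hypothetical; it would have to hold the external address of the Ingress controller.

```yaml
# Sketch only: ingress_ip is a hypothetical variable, not part of the input above.
- name: Map the Ingress host name to the address of the Ingress controller
  ansible.builtin.lineinfile:
    path: /etc/hosts
    line: "{{ ingress_ip }} {{ ingress.host }}"
    state: present
  become: true
```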

The service variable should match the name of the relevant microservice in the top half of the input declaration. The ingress path is what clients should use to access the service and the service path is the endpoint of the Spring controller that should be routed to.

The Ansible tasks that interpret and enforce the above declarations are:

    - name: Create ingress master
      kubernetes.core.k8s:
        kubeconfig: /home/ansible/.kube/config
        namespace: default
        state: present
        definition:
          apiVersion: networking.k8s.io/v1
          kind: Ingress
          metadata:
            name: ingress-master
            annotations:
              nginx.org/mergeable-ingress-type: "master"
          spec:
            ingressClassName: nginx
            rules:
              - host: "{{ ingress.host }}"

    - name: Create ingress minions
      kubernetes.core.k8s:
        kubeconfig: /home/ansible/.kube/config
        namespace: "{{ item.namespace }}"
        state: present
        definition:
          apiVersion: networking.k8s.io/v1
          kind: Ingress
          metadata:
            annotations:
              nginx.ingress.kubernetes.io/rewrite-target: " {{ item.service_path }} "
              nginx.org/mergeable-ingress-type: "minion"
            name: "ingress-{{ item.namespace }}"
          spec:
            ingressClassName: nginx
            rules:
              - host: "{{ ingress.host }}"
                http:
                  paths:
                    - path: "{{ item.ingress_path }}"
                      pathType: Prefix
                      backend:
                        service:
                          name: "{{ item.service }}"
                          port:
                            number: 80
      loop: "{{ ingress.rules }}"

We continue where we left off in my previous post and use Nginx Ingress Controller and MetalLB to establish Ingress routing. Once again, the Ansible loop construct is used to cater to multiple routing rules. In this case, routing will proceed from the /env1/hello route to the Hello K8s Service in the env1 namespace and from the /env2/hello route to the Hello K8s Service in the env2 namespace.

Routing into different namespaces is achieved using Nginx mergeable ingress types. More can be read here, but basically, one annotates each Ingress as being either the master or one of the minions. Multiple instances thus combine to allow for complex routing, as can be seen above.

The Ingress route can and probably will differ from the endpoint of the Spring controller(s). This certainly is the case here and a second annotation was required to change from the Ingress route to the endpoint the controller listens on:

    nginx.ingress.kubernetes.io/rewrite-target: " {{ item.service_path }} "

This is the sample controller:

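    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class HelloController {

        // Answers on the root path, which the rewrite-target annotation routes to
        @RequestMapping("/")
        public String index() {
            return "Greetings from " + name;
        }

        // Injected from the my_name entry in application.properties
        @Value(value = "${my_name}")
        private String name;
    }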

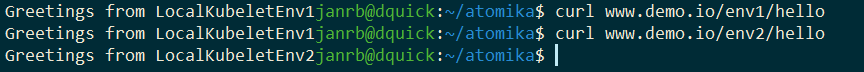

Since the value of the my_name field is replaced from what is defined in the application.properties and each instance of the microservice has a different value for it, we would expect a different welcome message from each of the K8S Services/Deployments. Hitting the different Ingress routes, we see this is indeed the case:
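The same check can be scripted. The tasks below are a minimal sketch that calls every route in ingress.rules with the ansible.builtin.uri module and prints the greeting that comes back. They are not part of the original playbook, the register name hello_responses is made up, and they assume the host running the task can resolve the Ingress host name to the Ingress controller.

```yaml
# Sketch only: assumes ingress.host resolves from the host running the task.
- name: Call each Ingress route
  ansible.builtin.uri:
    url: "http://{{ ingress.host }}{{ item.ingress_path }}"
    return_content: true
  register: hello_responses   # hypothetical variable name
  loop: "{{ ingress.rules }}"

- name: Show the greeting returned by each route
  ansible.builtin.debug:
    msg: "{{ item.item.ingress_path }} -> {{ item.content }}"
  loop: "{{ hello_responses.results }}"
  loop_control:
    label: "{{ item.item.ingress_path }}"
```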

On Secrets and Such

It can happen that your Git repository requires token authentication. For such cases, one should add the entire Git URL to the Ansible vault:

    apps:
      - name: mystery
        git_url: "{{ vault_git_url }}"
        jib_dir: harvester
        image: s2c/harvester
        namespace: env1
        git_branch: main
        application_properties:
          application.properties: |
            my_name: LocalKubeletEnv1

The content of variable vault_git_url is encrypted in all/vault.yaml and can be edited with:

ansible-vault edit jetpack/group_vars/all/vault.yaml

Enter the password of the vault and add/edit the URL to contain your authentication token:

vault_git_url: https://AUTH TOKEN@github.com/jrb-s2c-github/demo.git

Enough happens behind the scenes here to warrant an entire post. In short, group_vars are defined per inventory group, with the vars and vaults of each group kept in a sub-directory named after the group. The "all" sub-directory acts as the catch-all for managed servers that fall outside this construct. Consequently, only the "all" sub-directory is required for the master and workers groups of our inventory to use the same vault.

It follows that the same approach can be followed to encrypt any secrets that should be added to the application.properties of Spring Boot.
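As an illustration, a vaulted value could be referenced from within the application.properties content in the same way the Git URL was. This is a sketch under assumptions: the property db_password and the vault variable vault_db_password are hypothetical and do not exist in the sample project.

```yaml
# Sketch only: db_password and vault_db_password are hypothetical names.
apps:
  - name: hello1
    git_url: https://github.com/jrb-s2c-github/spinnaker_tryout.git
    jib_dir: hello_svc
    image: s2c/hello_svc
    namespace: env1
    git_branch: kustomize
    application_properties:
      application.properties: |
        my_name: LocalKubeletEnv1
        db_password: {{ vault_db_password }}
```

The value of vault_db_password would then live in the encrypted all/vault.yaml next to vault_git_url. Keep in mind that a ConfigMap stores its data unencrypted inside the cluster, so this keeps the secret out of Git rather than hiding it from cluster users.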

Conclusion

We have seen how to make deployments of Spring Boot microservices to local infrastructure faster by bypassing certain steps and technologies used during CI/CD to higher environments.

Multiple namespaces can be employed to allow the deployment of different versions of a microservice architecture. Some thought will have to be given to secrets when different environments are in play, though. The focus of this article is on a local environment, and a description of how to use group vars to have different secrets for different environments is out of scope. It might be the topic of a future article.

Please feel free to DM me on LinkedIn should you require assistance to get the rig up and running. Thank you for reading!
