Fresh Data for AI With Spring AI Function Calls

Learn to integrate AI function calls using Spring AI for APIs like OpenLibrary, with JSON and text responses, and display the results in an Angular Material Tree.


An LLM works with the knowledge it has from its training data. To extend that knowledge, retrieval-augmented generation (RAG) can be used: it retrieves relevant information from a vector database and adds it to the prompt context. To provide truly up-to-date information, function calls can be used to request the current information (flight arrival times, for example) from the responsible system. That enables the LLM to answer questions that require current information for an accurate response.

The AIDocumentLibraryChat project has been extended to show how to use the function call API of Spring AI to call the OpenLibrary API. The REST API provides book information for authors, titles, and subjects. The response can be a text answer or an LLM-generated JSON response. For the JSON response, the Structured Output feature of Spring AI is used to map the JSON into Java objects.

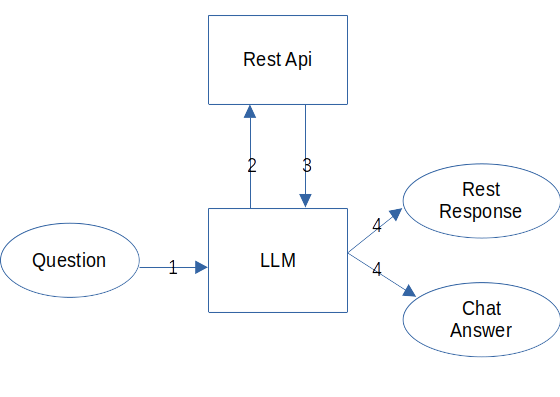

Architecture

The request flow looks like this:

- The LLM gets the prompt with the user question.

- The LLM decides, based on the function descriptions, whether to call a function. If it does, Spring AI executes the function and returns its result to the LLM.

- The LLM uses the function call response to generate the answer.

- Spring AI formats the answer as JSON or text according to the request parameter.
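The request flow above can be illustrated with a small, framework-free Java sketch. It is not Spring AI's implementation: the Model interface, the ToolCall and Answer records, and the MAX_ROUNDS limit are hypothetical stand-ins that only show how a function result is fed back to the model before it produces the final answer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, framework-free sketch of a tool-calling loop (not Spring AI code).
final class ToolCallingLoopSketch {

    // A model reply is either a request to call a tool or a final answer.
    sealed interface Reply permits ToolCall, Answer {}

    record ToolCall(String toolName, String argument) implements Reply {}

    record Answer(String text) implements Reply {}

    // Stand-in for the LLM: it sees the conversation and decides the next step.
    interface Model {
        Reply next(List<String> conversation);
    }

    // Assumed upper bound on tool rounds so a confused model cannot loop forever.
    static final int MAX_ROUNDS = 5;

    static String run(Model model, Map<String, Function<String, String>> tools, String question) {
        List<String> conversation = new ArrayList<>();
        conversation.add("user: " + question);
        for (int round = 0; round < MAX_ROUNDS; round++) {
            Reply reply = model.next(conversation);
            if (reply instanceof Answer answer) {
                return answer.text(); // final answer, possibly based on tool results
            }
            ToolCall call = (ToolCall) reply;
            Function<String, String> tool = tools.get(call.toolName());
            String toolResult = tool == null
                    ? "error: unknown tool " + call.toolName()
                    : tool.apply(call.argument());
            // The framework, not the model, executes the tool and appends the result.
            conversation.add("tool " + call.toolName() + ": " + toolResult);
        }
        throw new IllegalStateException("No answer after " + MAX_ROUNDS + " rounds");
    }
}
```

In Spring AI, the framework runs this execute-and-return step, so the application only registers the functions and reads the final answer.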

Implementation

Backend

To use the function calling feature, the LLM has to support it. The AIDocumentLibraryChat project uses the Llama 3.1 model, which supports function calling. The properties file:

```properties
# function calling
spring.ai.ollama.chat.model=llama3.1:8b
spring.ai.ollama.chat.options.num-ctx=65535
```

The Ollama model is set, and the context window is set to 64k tokens because large JSON responses need a lot of tokens.

The function is provided to Spring AI in the FunctionConfig class:

```java
import java.util.function.Function;

import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Description;

@Configuration
public class FunctionConfig {
  public static final String OPEN_LIBRARY_CLIENT = "openLibraryClient";

  private final OpenLibraryClient openLibraryClient;

  public FunctionConfig(OpenLibraryClient openLibraryClient) {
    this.openLibraryClient = openLibraryClient;
  }

  // The bean name is the function name the LLM sees;
  // @Description tells the LLM when to use the function.
  @Bean(OPEN_LIBRARY_CLIENT)
  @Description("Search for books by author, title or subject.")
  public Function<OpenLibraryClient.Request,
      OpenLibraryClient.Response> openLibraryClient() {
    return this.openLibraryClient::apply;
  }
}
```

First, the OpenLibraryClient gets injected. Then, a Spring bean is defined with the @Bean annotation, and the @Description annotation provides the context information the LLM uses to decide whether to call the function. Spring AI uses OpenLibraryClient.Request as the input of the function call and OpenLibraryClient.Response as its result. The bean name openLibraryClient, which is also the method name, is used as the function name by Spring AI.
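From this configuration, Spring AI takes three things: the function name, its description, and the function itself. The following plain-Java sketch illustrates name-based lookup with a hypothetical ToolRegistrySketch class. It is not part of Spring AI; it only shows why the name passed to the ChatClient has to match the bean name.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical registry illustrating name-based tool lookup (not Spring AI's API).
final class ToolRegistrySketch<I, O> {

    // What the LLM sees (name and description) plus the code that runs the call.
    record ToolEntry<T, R>(String name, String description, Function<T, R> function) {}

    private final Map<String, ToolEntry<I, O>> tools = new LinkedHashMap<>();

    void register(String name, String description, Function<I, O> function) {
        tools.put(name, new ToolEntry<>(name, description, function));
    }

    // Resolves every requested name and fails fast on names that were never registered.
    List<ToolEntry<I, O>> resolve(String... names) {
        return Arrays.stream(names)
                .map(name -> {
                    ToolEntry<I, O> entry = tools.get(name);
                    if (entry == null) {
                        throw new IllegalArgumentException("Unknown tool: " + name);
                    }
                    return entry;
                })
                .toList();
    }
}
```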

The request/response definitions for openLibraryClient() are in the OpenLibraryClient interface:

```java
import java.util.List;
import java.util.function.Function;

import com.fasterxml.jackson.annotation.JsonClassDescription;
import com.fasterxml.jackson.annotation.JsonIgnoreProperties;
import com.fasterxml.jackson.annotation.JsonInclude;
import com.fasterxml.jackson.annotation.JsonInclude.Include;
import com.fasterxml.jackson.annotation.JsonProperty;
import com.fasterxml.jackson.annotation.JsonPropertyDescription;
import org.springframework.ai.tool.annotation.ToolParam;

public interface OpenLibraryClient extends
    Function<OpenLibraryClient.Request, OpenLibraryClient.Response> {

  // One book entry ("doc") of the OpenLibrary search response
  @JsonIgnoreProperties(ignoreUnknown = true)
  record Book(@JsonProperty(value = "author_name", required = false)
      List<String> authorName,
      @JsonProperty(value = "language", required = false)
      List<String> languages,
      @JsonProperty(value = "publish_date", required = false)
      List<String> publishDates,
      @JsonProperty(value = "publisher", required = false)
      List<String> publishers, String title, String type,
      @JsonProperty(value = "subject", required = false) List<String> subjects,
      @JsonProperty(value = "place", required = false) List<String> places,
      @JsonProperty(value = "time", required = false) List<String> times,
      @JsonProperty(value = "person", required = false) List<String> persons,
      @JsonProperty(value = "ratings_average", required = false)
      Double ratingsAverage) {}

  // Function input: the LLM fills these optional parameters
  @JsonInclude(Include.NON_NULL)
  @JsonClassDescription("OpenLibrary API request")
  record Request(
      @ToolParam(description = "The book author's name", required = false)
      @JsonProperty(required = false, value = "author")
      @JsonPropertyDescription("The book author") String author,
      @ToolParam(description = "The book title", required = false)
      @JsonProperty(required = false, value = "title")
      @JsonPropertyDescription("The book title") String title,
      @ToolParam(description = "The book subject", required = false)
      @JsonProperty(required = false, value = "subject")
      @JsonPropertyDescription("The book subject") String subject) {}

  // Function output: the search result with the matching books
  @JsonIgnoreProperties(ignoreUnknown = true)
  record Response(Long numFound, Long start, Boolean numFoundExact,
      List<Book> docs) {}
}
```

The @ToolParam annotation is used by Spring AI to describe the function parameters for the LLM. It is placed on each parameter of the Request record so that the LLM can provide the right values for the function call; required = false keeps the parameters optional, matching the @JsonProperty settings. The response JSON is mapped into the Response record and does not need any description.
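The implementation of OpenLibraryClient is not shown here. Assuming it calls the public Open Library search endpoint (https://openlibrary.org/search.json), which accepts author, title, and subject query parameters, a request could be turned into a URL roughly as sketched below. OpenLibrarySearchUriSketch and its SearchRequest record (a mirror of OpenLibraryClient.Request) are hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.StringJoiner;

// Hypothetical sketch: building an Open Library search URL from the request fields.
final class OpenLibrarySearchUriSketch {

    // Mirrors OpenLibraryClient.Request; any field may be null.
    record SearchRequest(String author, String title, String subject) {}

    static final String BASE_URL = "https://openlibrary.org/search.json";

    static URI buildSearchUri(SearchRequest request) {
        StringJoiner query = new StringJoiner("&");
        addParam(query, "author", request.author());
        addParam(query, "title", request.title());
        addParam(query, "subject", request.subject());
        if (query.length() == 0) {
            // Matches the prompt rule: a function call needs at least one parameter.
            throw new IllegalArgumentException("At least one of author, title or subject is required");
        }
        return URI.create(BASE_URL + "?" + query);
    }

    // Skips null or blank values, similar to how Include.NON_NULL omits null fields.
    private static void addParam(StringJoiner query, String name, String value) {
        if (value != null && !value.isBlank()) {
            query.add(name + "=" + URLEncoder.encode(value, StandardCharsets.UTF_8));
        }
    }
}
```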

The FunctionService processes the user questions and provides the responses:

```java
import java.util.List;

import com.fasterxml.jackson.annotation.JsonPropertyOrder;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.ChatClient.Builder;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.core.ParameterizedTypeReference;
import org.springframework.stereotype.Service;

// FunctionResult and ResultFormat are project classes (not shown).
@Service
public class FunctionService {
  private static final Logger LOGGER = LoggerFactory
      .getLogger(FunctionService.class);
  private final ChatClient chatClient;

  @JsonPropertyOrder({ "title", "summary" })
  public record JsonBook(String title, String summary) { }

  @JsonPropertyOrder({ "author", "books" })
  public record JsonResult(String author, List<JsonBook> books) { }

  // %s is replaced with the user question via formatted(...)
  private final String promptStr = """
      Make sure to have a parameter when calling a function.
      If no parameter is provided ask the user for the parameter.
      Create a summary for each book based on the function response subject.
      User Query:
      %s
      """;

  @Value("${spring.profiles.active:}")
  private String activeProfile;

  public FunctionService(Builder builder) {
    this.chatClient = builder.build();
  }

  public FunctionResult functionCall(String question,
      ResultFormat resultFormat) {
    if (!this.activeProfile.contains("ollama")) {
      return new FunctionResult(" ", null);
    }
    var result = new FunctionResult(" ", null);
    int i = 0;
    // Retry up to 3 times while there is neither a JSON result
    // nor a non-blank text result (LLM output can be malformed).
    while (i < 3 && result.jsonResult() == null
        && (result.result() == null || result.result().isBlank())) {
      try {
        result = switch (resultFormat) {
          case ResultFormat.Text -> this.functionCallText(question);
          case ResultFormat.Json -> this.functionCallJson(question);
        };
      } catch (Exception e) {
        LOGGER.warn("AI Call failed.", e);
      }
      i++;
    }
    return result;
  }

  private FunctionResult functionCallText(String question) {
    var result = this.chatClient.prompt()
        .user(this.promptStr.formatted(question))
        .tools(FunctionConfig.OPEN_LIBRARY_CLIENT)
        .call().content();
    return new FunctionResult(result, null);
  }

  private FunctionResult functionCallJson(String question) {
    var result = this.chatClient.prompt()
        .user(this.promptStr.formatted(question))
        .tools(FunctionConfig.OPEN_LIBRARY_CLIENT)
        .call().entity(new ParameterizedTypeReference<List<JsonResult>>() {});
    return new FunctionResult(null, result);
  }
}
```

The FunctionService first defines the records for the JSON response. Then, the prompt string with a %s placeholder for the user query is created, and the active Spring profiles are injected into the activeProfile property. The constructor creates the chatClient property with its Builder.

The functionCall(...) method has the user question and the result format as parameters. It checks for the ollama profile and then selects the method for the result format. The function call methods use the chatClient property to call the LLM with the available functions (several are possible). The bean name of a function is its function name, and several names can be passed as comma-separated arguments. The LLM response can be retrieved either with .content() as an answer string or with .entity(...) as JSON mapped into the provided classes. Because the JSON response of the LLM can be malformed, the LLM call is retried up to 3 times. Finally, the FunctionResult record is returned.
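The retry logic of functionCall(...) can be factored into a small generic helper. The sketch below is an assumption, not code from the project: RetrySketch, its parameters, and the "usable result" predicate are hypothetical, and it only shows the pattern of retrying a flaky LLM call a bounded number of times before falling back.

```java
import java.util.function.Predicate;
import java.util.function.Supplier;
import java.util.logging.Logger;

// Hypothetical helper: retry a flaky call until it yields a usable result.
final class RetrySketch {

    private static final Logger LOGGER = Logger.getLogger(RetrySketch.class.getName());

    static <T> T retry(int maxAttempts, Supplier<T> call, Predicate<T> isUsable, T fallback) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                T result = call.get();
                if (isUsable.test(result)) {
                    return result; // stop as soon as the LLM produced something usable
                }
            } catch (RuntimeException e) {
                // e.g. a JSON mapping error caused by malformed LLM output
                LOGGER.warning("Attempt " + attempt + " failed: " + e.getMessage());
            }
        }
        return fallback; // every attempt failed or returned an unusable result
    }
}
```

Passing the "usable" check as a predicate keeps the loop independent of the result type, so the same helper could cover both the text and the JSON call.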

Conclusion

Spring AI provides an easy-to-use API for function calling that abstracts the hard parts of creating the function call and returning the response as JSON. Multiple functions can be provided to the ChatClient. The descriptions are provided with annotations on the function bean method and on the parameters of the request record. The JSON response can be created with just the .entity(...) method call, which enables the display of the result in a structured component like a tree. Spring AI is a very good framework for working with AI and makes working with LLMs easy.

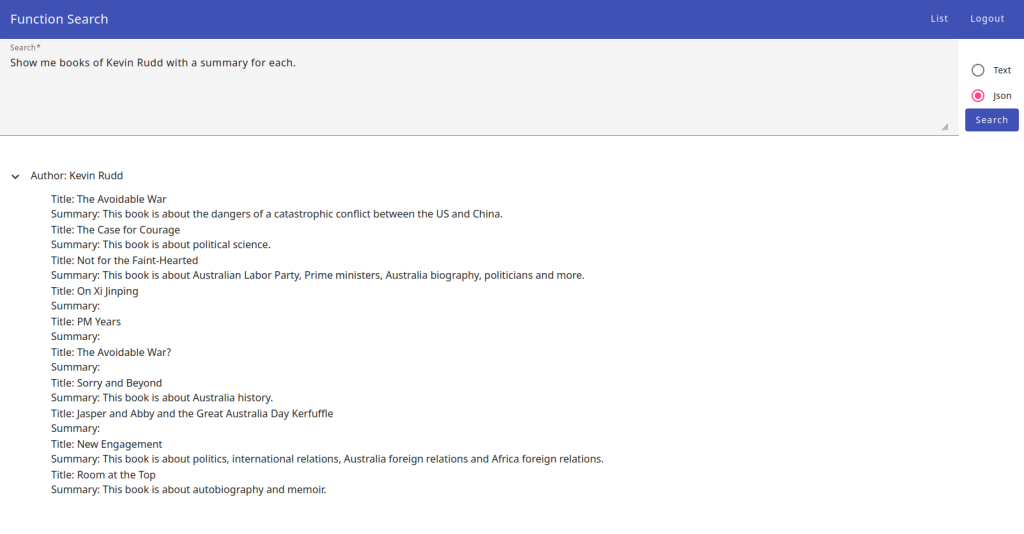

Frontend

The frontend can request either a text response or a JSON response. The text response is displayed directly; the JSON response is displayed in an Angular Material Tree component.

Response with a tree component:

The component template looks like this:

```html
<mat-tree
  [dataSource]="dataSource"
  [treeControl]="treeControl"
  class="example-tree">
  <!-- Leaf node: one book with its title and summary -->
  <mat-tree-node *matTreeNodeDef="let node" matTreeNodeToggle>
    <div class="tree-node">
      <div>
        <span i18n="@@functionSearchTitle">Title</span>: {{ node.value1 }}
      </div>
      <div>
        <span i18n="@@functionSearchSummary">Summary</span>: {{ node.value2 }}
      </div>
    </div>
  </mat-tree-node>
  <!-- Parent node: the author, with a button that toggles the books -->
  <mat-nested-tree-node *matTreeNodeDef="let node; when: hasChild">
    <div class="mat-tree-node">
      <button
        mat-icon-button
        matTreeNodeToggle>
        <mat-icon class="mat-icon-rtl-mirror">
          {{ treeControl.isExpanded(node) ?
            "expand_more" : "chevron_right" }}
        </mat-icon>
      </button>
      <span class="book-author" i18n="@@functionSearchAuthor">
        Author</span>
      <span class="book-author">: {{ node.value1 }}</span>
    </div>
    <div
      [class.example-tree-invisible]="!treeControl.isExpanded(node)"
      role="group">
      <ng-container matTreeNodeOutlet></ng-container>
    </div>
  </mat-nested-tree-node>
</mat-tree>
```

The Angular Material Tree needs the dataSource, hasChild, and treeControl to work. The dataSource contains a tree structure of objects with the values to be displayed. The hasChild function checks whether a tree node has children that can be expanded. The treeControl controls the expanding and collapsing of the tree nodes.

The <mat-tree-node> element renders the tree leaf that displays the title and summary of a book.

The <mat-nested-tree-node> element is the parent tree node that displays the author's name. Its button toggles the node via the treeControl, which switches the icon and shows or hides the tree leaves. The tree leaves are rendered in the <ng-container matTreeNodeOutlet> element.

The component class looks like this:

```typescript
export class FunctionSearchComponent {
  // ...
  // Expands and collapses nodes; children are read from node.children
  protected treeControl = new NestedTreeControl<TreeNode>(
    (node) => node.children
  );
  protected dataSource = new MatTreeNestedDataSource<TreeNode>();
  protected responseJson = [{ value1: "", value2: "" } as TreeNode];
  // ...
  // A node gets a toggle button only if it has children
  protected hasChild = (_: number, node: TreeNode) =>
    !!node.children && node.children.length > 0;
  // ...
  protected search(): void {
    this.searching = true;
    this.dataSource.data = [];
    const startDate = new Date();
    // Update the elapsed time every 100 ms while waiting for the LLM
    this.repeatSub?.unsubscribe();
    this.repeatSub = interval(100)
      .pipe(map(() => new Date()), takeUntilDestroyed(this.destroyRef))
      .subscribe((newDate) =>
        (this.msWorking = newDate.getTime() - startDate.getTime()));
    this.functionSearchService
      .postLibraryFunction({ question: this.searchValueControl.value,
        resultFormat: this.resultFormatControl.value } as FunctionSearch)
      .pipe(
        tap(() => this.repeatSub?.unsubscribe()),
        takeUntilDestroyed(this.destroyRef),
        tap(() => (this.searching = false)))
      .subscribe((value) => {
        if (this.resultFormatControl.value === this.resultFormats[0]) {
          // Text result
          this.responseText = value.result?.trim() || '';
        } else {
          // JSON result: map it to tree nodes and show them in the tree
          this.responseJson = this.addToDataSource(this.mapResult(
            value.jsonResult || ([{ author: "", books: [] }] as JsonResult[])));
        }
      });
  }
  // ...
  private addToDataSource(treeNodes: TreeNode[]): TreeNode[] {
    this.dataSource.data = treeNodes;
    return treeNodes;
  }
  // ...
  private mapResult(jsonResults: JsonResult[]): TreeNode[] {
    // Leaf nodes: one per book
    const createChildren = (books: JsonBook[]) => books.map((value) => ({
      value1: value.title, value2: value.summary } as TreeNode));
    // Root nodes: one per author, with the books as children
    const rootNodes = jsonResults.map((myValue) => ({ value1: myValue.author,
      value2: "", children: createChildren(myValue.books) } as TreeNode));
    return rootNodes;
  }
  // ...
}
```

The Angular FunctionSearchComponent defines the treeControl, dataSource, and hasChild for the tree component.

The search() method first starts a 100 ms interval that displays the time the LLM needs to respond. The interval is stopped when the response has been received. Then, the postLibraryFunction(...) method is used to request the response from the backend/AI. The callback passed to .subscribe(...) is called when the result is received: it either stores the text result or maps the JSON result with the methods mapResult(...) and addToDataSource(...) into the dataSource of the tree component.
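The nesting that mapResult(...) builds, with one root node per author and one leaf per book, can also be expressed with the backend records. This Java sketch is only an illustration: TreeMappingSketch, its TreeNode record, and hasChild(...) are hypothetical counterparts of the TypeScript TreeNode and hasChild predicate, and JsonResult/JsonBook are redeclared so the example compiles on its own.

```java
import java.util.List;

// Hypothetical Java counterpart of the component's mapResult(...) and hasChild.
final class TreeMappingSketch {

    // Redeclared from FunctionService so the sketch is self-contained.
    record JsonBook(String title, String summary) {}

    record JsonResult(String author, List<JsonBook> books) {}

    // value1/value2 mirror the TreeNode fields used in the template.
    record TreeNode(String value1, String value2, List<TreeNode> children) {}

    // Root nodes: one per author, with the books as children.
    static List<TreeNode> mapResult(List<JsonResult> results) {
        return results.stream()
                .map(result -> new TreeNode(result.author(), "", createChildren(result.books())))
                .toList();
    }

    // Leaf nodes: one per book, without children of their own.
    private static List<TreeNode> createChildren(List<JsonBook> books) {
        if (books == null) {
            return List.of(); // the LLM may omit the books array
        }
        return books.stream()
                .map(book -> new TreeNode(book.title(), book.summary(), List.of()))
                .toList();
    }

    // Same rule as the component's hasChild: only nodes with children get a toggle button.
    static boolean hasChild(TreeNode node) {
        return node.children() != null && !node.children().isEmpty();
    }
}
```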

Conclusion

The Angular Material Tree component is easy to use for the functionality it provides. The Spring AI Structured Output feature enables the display of the response in the tree component. That makes the AI results much more useful than plain text answers: larger results can be displayed in a structured way instead of as a lengthy text.

A Hint at the End

The Angular Material Tree component creates all leaves when the tree is created. In a large tree whose leaves contain costly components, such as Angular Material tables, rendering can take seconds. To avoid this, treeControl.isExpanded(node) can be used with an @if block so that the leaf content is rendered only when its node is expanded. The tree then renders quickly, and the leaves of a node are rendered quickly, too, when it is expanded.

Opinions expressed by DZone contributors are their own.
