How to Integrate Event-Driven Ansible With Kafka

Integrate Ansible with Kafka for real-time automation: trigger playbooks via Kafka events, enhance incident response, optimize workflows, and scale seamlessly.

Integrating event-driven Ansible with Kafka enables seamless real-time automation by continuously monitoring Kafka topics and triggering Ansible playbooks based on specific events. This integration is particularly beneficial in IT operations, where it streamlines automated incident response, reducing reliance on manual intervention.

Kafka’s distributed architecture lets this setup scale with event volume, process events quickly, and stay resilient when individual components fail. The result is proactive automation that can reduce downtime and make better use of resources.

In this article, I will guide you through a practical example of how Ansible can consume messages from a Kafka topic in real time. I will demonstrate how to use the ansible.eda.kafka event source plugin within Ansible event-driven automation (EDA) to listen for events from a Kafka topic and trigger an automated workflow.

About the Module

ansible.eda.kafka is an event source plugin within Ansible event-driven automation (EDA) that facilitates real-time event processing by allowing Ansible to subscribe to and consume messages from Apache Kafka topics.

This plugin integrates with Kafka, enabling Ansible EDA to monitor event streams continuously and respond dynamically to incoming data. When a relevant event is detected, Ansible evaluates predefined rules and conditions to determine whether an automation workflow should be executed.
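As a rough illustration of what subscribing to a topic looks like, the rulebook fragment below listens on a topic and prints whatever arrives. The topic name `example-events`, the broker address, and the consumer group `eda-demo-group` are placeholders. The `group_id` and `offset` options are written as I understand them from the plugin's documentation, so treat them as assumptions and check them against the version of the `ansible.eda` collection you have installed.

```yaml
# Sketch only: every value below is a placeholder, not part of the demo.
- name: Listen to a Kafka topic
  hosts: localhost
  sources:
    - ansible.eda.kafka:
        host: 127.0.0.1            # Kafka broker address (assumed local)
        port: 9092                 # broker listener port
        topic: example-events      # hypothetical topic name
        group_id: eda-demo-group   # assumed option: consumer group to join
        offset: latest             # assumed option: read only new messages
  rules:
    - name: Print every event that has a body
      condition: event.body is defined
      action:
        debug:
          msg: "Received: {{ event.body }}"
```

Each Kafka message reaches the rules as an event, and the message payload is available under `event.body`, which is the field the demo rulebook's condition inspects.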

Demo

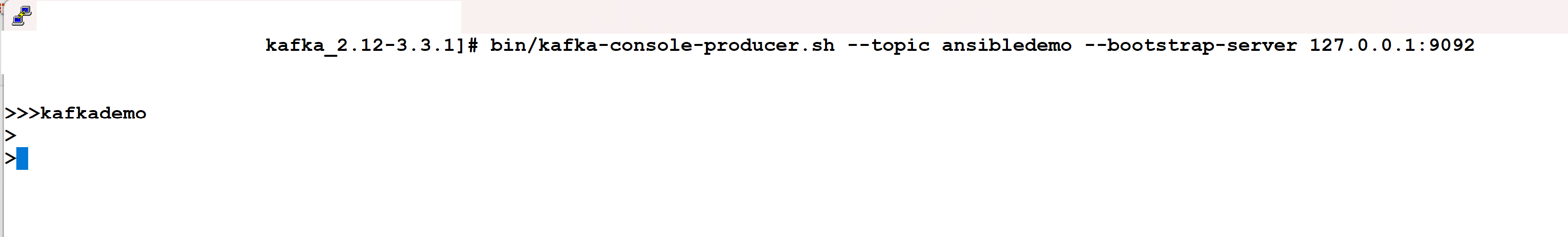

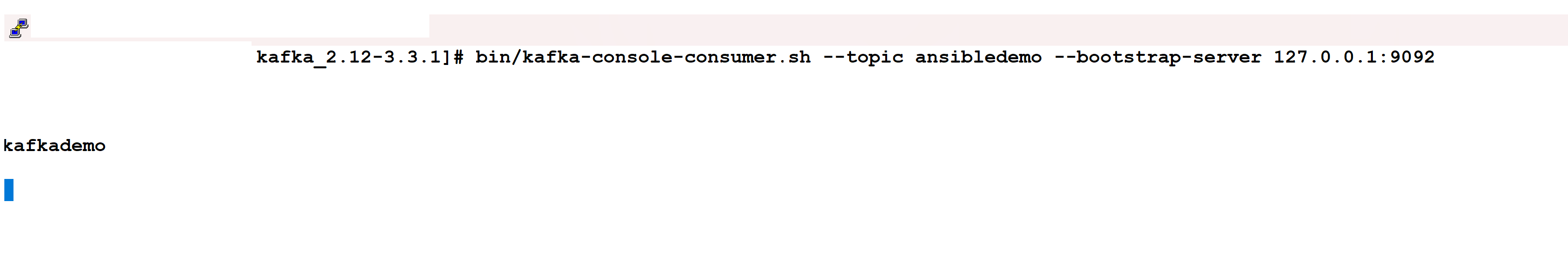

For this demo, I created a Kafka topic called ansibledemo, then ran a producer to send messages and a consumer to listen for them in separate terminal windows. I am also running an Ansible rulebook that checks each message on the Kafka topic. If the body of a message is kafkademo, the rulebook triggers an Ansible playbook that prints the event details.

We can enhance this setup to manage more advanced automation tasks by processing different types of messages from Kafka. Rather than just triggering a single playbook, we can define multiple rules to dynamically respond to various events.
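A minimal sketch of what such a multi-rule rulebook might look like is shown here. It assumes producers send JSON messages with a `type` field, such as `{"type": "disk_full", "host": "web01"}`. That message shape and the playbook names `cleanup_disk.yml` and `restart_service.yml` are hypothetical and not part of the demo.

```yaml
# Sketch only: assumes JSON messages with a "type" field (hypothetical schema).
- name: Ansible EDA Kafka multi-rule sketch
  hosts: localhost
  sources:
    - ansible.eda.kafka:
        topic: ansibledemo
        host: 127.0.0.1
        port: 9092
  rules:
    - name: Clean up when a disk fills
      condition: event.body.type == "disk_full"
      action:
        run_playbook:
          name: cleanup_disk.yml        # hypothetical playbook
    - name: Restart a failed service
      condition: event.body.type == "service_down"
      action:
        run_playbook:
          name: restart_service.yml     # hypothetical playbook
    - name: Print plain-text messages that mention kafkademo
      condition: event.body is search("kafkademo")
      action:
        print_event:
          pretty: true
```

The last rule uses the `is search` test to match a substring rather than the whole body, which is useful when a plain-text message only needs to contain a keyword. Mixing JSON and plain-text messages on one topic is done here purely for illustration; in practice a single message format per topic keeps the conditions easier to reason about.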

Demo Rulebook

    - name: Ansible EDA Kafka Demo
      hosts: localhost
      sources:
        - ansible.eda.kafka:
            topic: ansibledemo
            host: 127.0.0.1
            port: 9092
      rules:
        - name: Check whether the event body matches kafkademo
          condition: event.body == "kafkademo"
          action:
            run_playbook:
              name: kafkamessage.yml

Action Playbook

    ---
    - hosts: localhost
      gather_facts: false
      connection: local
      tasks:
        - name: printing event details
          debug:
            msg: "{{ ansible_eda.event }}"

Producer Screenshot

Consumer Screenshot

Ansible Rulebook
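Because the rulebook targets `hosts: localhost` and its action runs a playbook, `ansible-rulebook` typically needs an inventory to go with it. A minimal sketch, assuming the inventory is saved as `inventory.yml` and the rulebook as `kafka-rulebook.yml` (both file names are hypothetical) next to `kafkamessage.yml`:

```yaml
# inventory.yml (hypothetical file name): a single local host for the demo
all:
  hosts:
    localhost:
      ansible_connection: local
```

With those files in place, the rulebook can be started with something like `ansible-rulebook --rulebook kafka-rulebook.yml -i inventory.yml --verbose`. Sending the message kafkademo from the producer should then cause the playbook to run and print the event.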

Conclusion

In this demo, we explored how the ansible.eda.kafka event source plugin enables Ansible to consume messages from a Kafka topic and trigger automated workflows based on specific messages. By creating an Ansible rulebook and running a playbook in response to a matching event, we saw event-driven automation working end to end.

This integration allows IT teams to automate real-time incident response, infrastructure management, and operational tasks with minimal manual intervention. This approach can be expanded to more complex use cases, such as security monitoring, auto-scaling, and proactive system maintenance.

Note: The views expressed on this blog are my own and do not necessarily reflect the views of Oracle.
