How to Move IBM App Connect Enterprise to Containers

This article is part of a series and follows the previous article, Deploying a Queue Manager from the OpenShift Web Console.

IBM App Connect Enterprise was initially created at a time when much integration was performed using messaging, and specifically IBM MQ. Indeed, despite the popularity of more recent protocols such as RESTful APIs, there are still many integration challenges that are better suited to messaging. Today, IBM App Connect Enterprise is used to mediate various protocols, but nearly all existing customers will have some MQ-based integration in their landscape.

This article looks at the widespread scenario of an existing back-end system with only an MQ interface that needs to be exposed as an HTTP-based API. IBM App Connect Enterprise performs the protocol transformation from HTTP to MQ and back again.

The Move to Remote MQ Connections

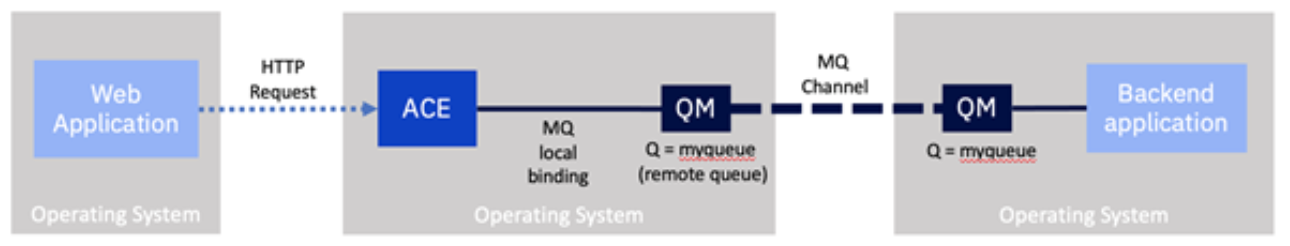

Existing interfaces based on older versions of IBM App Connect Enterprise will likely have a local IBM MQ server running on the same machine as IBM App Connect Enterprise. This was a mandatory part of the installation up to and including v9 (called IBM Integration Bus). As a result, integrations would primarily use local bindings to connect to the local MQ server, which would then be distributed onto the broader MQ network.

The starting point for this example will be an integration built using local bindings to a co-installed MQ server. Then, we will explore what changes we should consider when bringing that integration into containers, in this example on OpenShift.

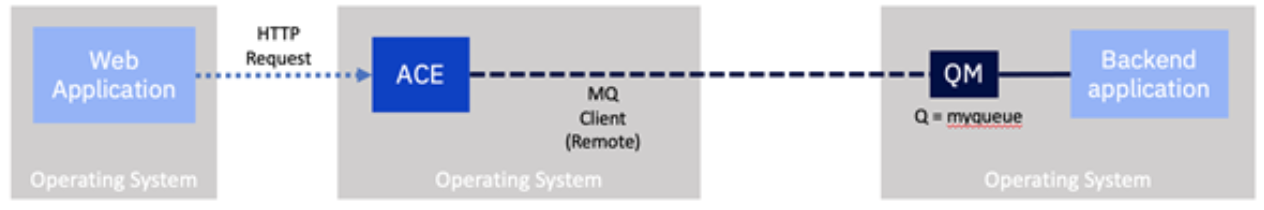

After v9, the local MQ server became optional. Networks had become more consistent and reliable, so 'remote bindings' to MQ became more prevalent. Today, IBM App Connect Enterprise (v12 at the time of writing) no longer mandates a local MQ server for any of the nodes on the toolkit palette. For more detailed information on this topic, look at the following article.

As we will see shortly, many integrations can be switched from local to remote bindings with minor configuration changes.

There are several advantages to switching to remote MQ communication.

- Simplicity: The architecture is more straightforward as it has fewer moving parts. The installation and operation of IBM App Connect Enterprise no longer require any knowledge of IBM MQ. Only the MQ Client software is needed.

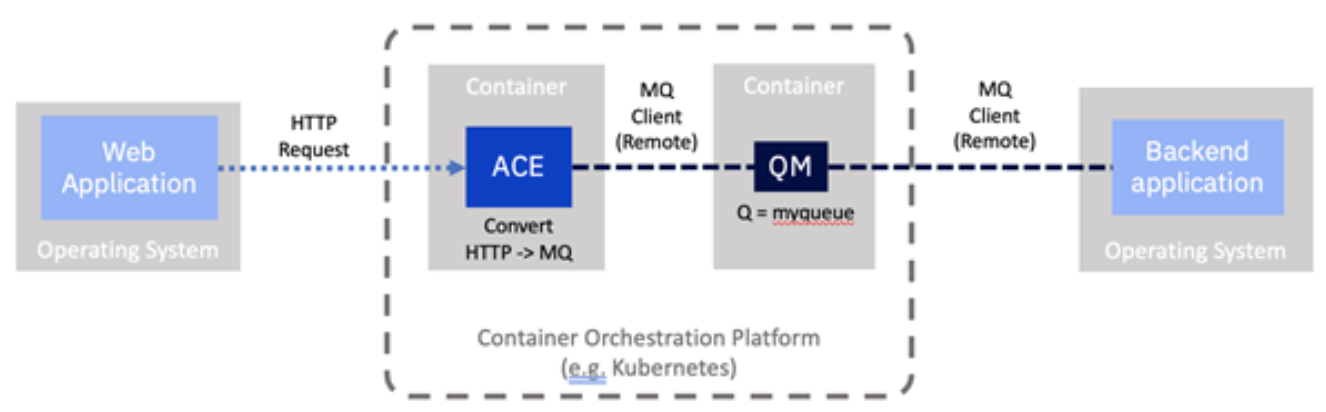

- Stateless: IBM App Connect Enterprise becomes 'stateless.' It no longer requires persistent storage. This significantly simplifies architecting for high availability and disaster recovery. Conveniently, it also makes it better suited for cloud-native deployment in containers. For example, it makes it possible for a container platform like Kubernetes to add or replace instances of IBM App Connect Enterprise wherever it likes for scalability or availability without worrying about whether they will have access to the same storage.

- Single-purpose per container: Containers should aim to perform a single activity. This creates clarity both architecturally and operationally. The platform (Kubernetes in our case) can look after them based on role-specific policies around availability, replication, security, persistence, and more. For example, IBM App Connect Enterprise is a stateless integration engine. IBM MQ is a persistent messaging layer. They have very different needs and should be looked after independently by the platform.

There would be the same benefits of simplification and statelessness for the back end if we changed that to use remote MQ connections. Indeed many applications have already moved to this pattern.

With both IBM App Connect Enterprise and the backend using remote connections, MQ is no longer a local dependency and becomes more like an independent remote persistence store. This means it could effectively be run on any platform as the MQ clients see it as a component available over the network. So, for example, we could move MQ to run on a separate virtual machine, use the IBM MQ Appliance, or even consider using the IBM MQ on Cloud managed service or run it in a separate container within our platform.

In line with our focus on containers in this series, for this example, we’re going to run MQ in a container since we have already explored how to achieve this in a previous article.

Do You Have to Use Remote MQ Connections When Moving to Containers?

It is worth noting that it is not mandatory to move to remote connections when moving to containers, but as we will see, it is strongly recommended.

It is technically possible to run your existing flow with no changes and have a local MQ server running alongside IBM App Connect Enterprise in a single container. However, it should not be considered the preferred path. It goes against cloud-native and container deployment principles on several levels, and you would not gain the benefits noted above.

For this reason, remote MQ connections are the recommended adoption path, and IBM has made many enhancements specifically to enable and simplify remote MQ connectivity.

- IBM App Connect no longer has a hard dependency on a local MQ server as it did in the past, and all of the nodes in the flow assembly can now work with remote MQ servers.

- The IBM App Connect Certified Container that we have been using in our examples does not have a local MQ server, but instead, it has the MQ Client.

- IBM MQ has been significantly enhanced to adopt cloud-native principles. Examples include native HA and uniform clusters.

Exploring Our HTTP to MQ Integration Flow

Let’s look at our existing example flow in a bit of detail.

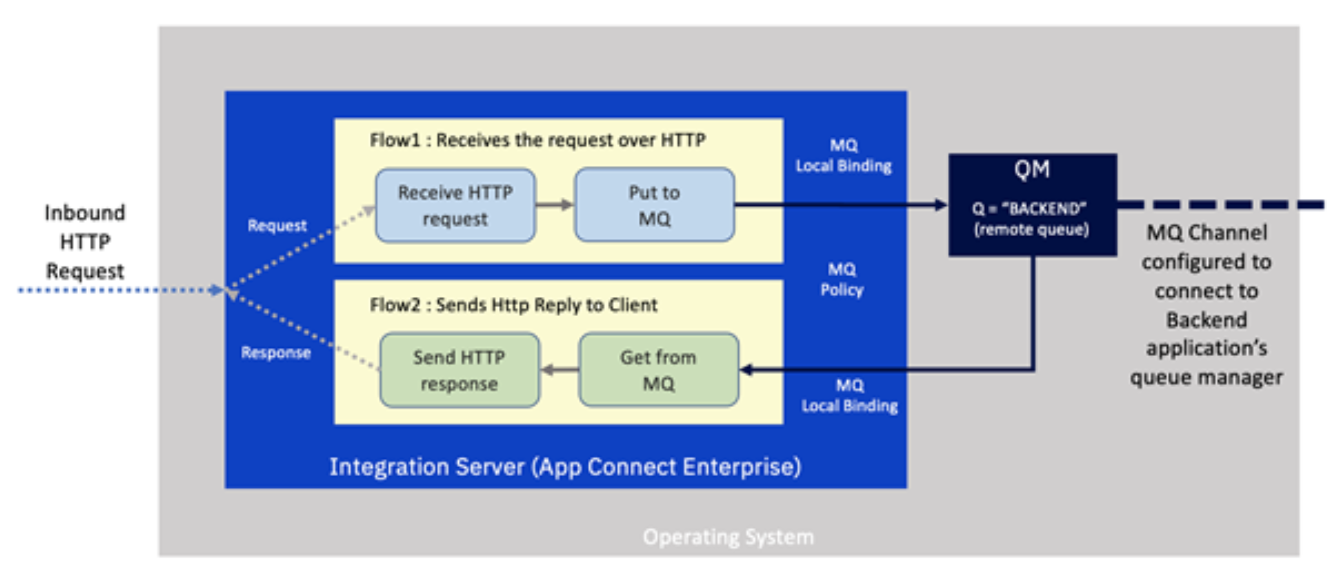

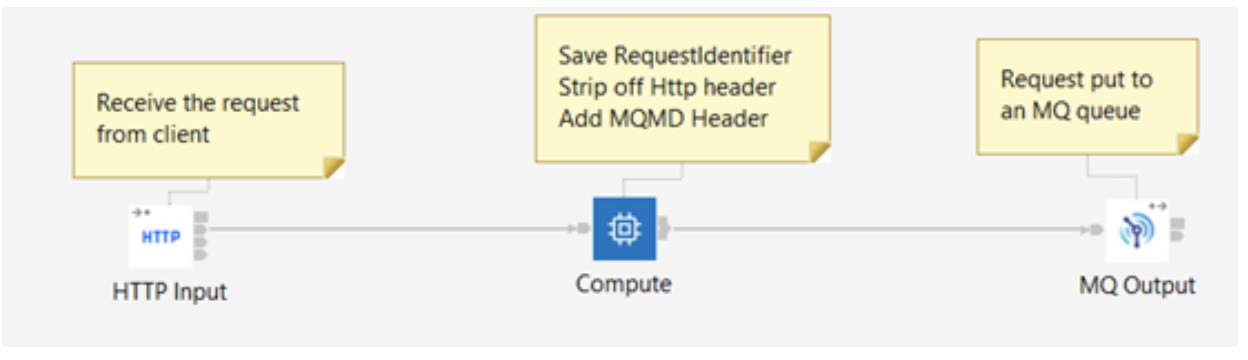

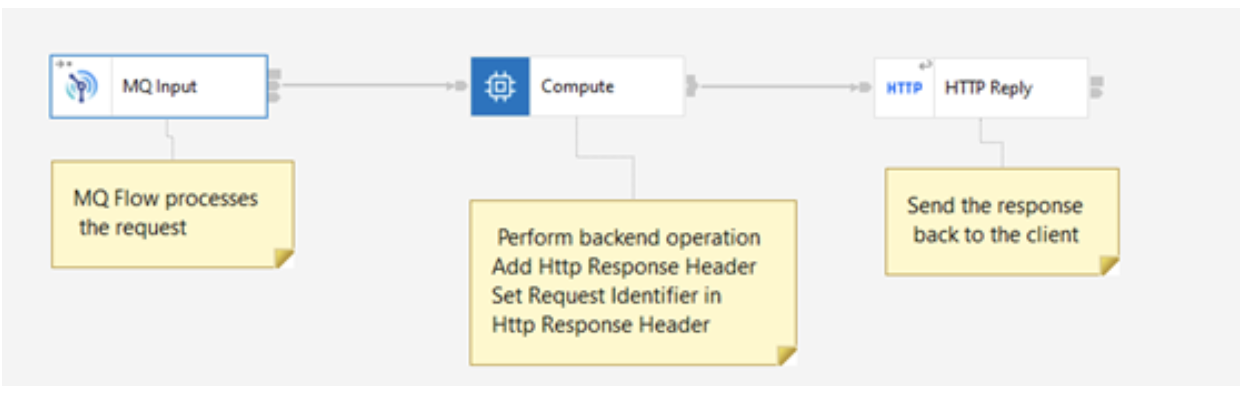

IBM App Connect Enterprise receives an incoming request over HTTP. This is handled by an integration that is composed of two separate flows.

The first receives the request over HTTP and converts it to an MQ message, embedding the correlation id required to enable us to match up the response. It then places it on a queue on the local MQ server, which will ultimately be propagated over an MQ channel to a queue manager on the backend system.

The second flow awaits a response message with the correct correlation from the backend system delivered onto the local MQ server via the MQ channel. It then turns this into an HTTP message. The correlation id matches it with the original HTTP request, and the response is sent back to the caller.

This is a common pattern since it is a highly efficient way of handling the switch from a synchronous protocol (HTTP) to an asynchronous one (IBM MQ). The separation of request and response flows enables the integration server to handle greater concurrency, because the integration flow's resources are not held up while awaiting a response from the backend server.
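The correlation mechanism described above can be illustrated outside the product. The following is a minimal Python sketch, not how App Connect Enterprise implements it: the class `PendingReplies` and its methods are hypothetical names, and an in-memory dictionary stands in for the reply state that the integration server keeps between the two flows.

```python
import uuid


class PendingReplies:
    """Tracks HTTP requests that are waiting for an asynchronous reply.

    Illustration only: a dict stands in for the reply state that the
    integration server keeps between the request and response flows.
    """

    def __init__(self):
        self._waiting = {}

    def register(self, http_request_id):
        """Request flow: create a correlation id for the outgoing message."""
        correlation_id = uuid.uuid4().hex
        self._waiting[correlation_id] = http_request_id
        return correlation_id

    def complete(self, correlation_id):
        """Response flow: find the HTTP request that a reply belongs to."""
        # pop() releases the entry, so nothing is held once the reply is sent.
        return self._waiting.pop(correlation_id, None)


pending = PendingReplies()
first = pending.register("http-request-1")
second = pending.register("http-request-2")

# Replies may arrive in any order; the correlation id restores the pairing.
print(pending.complete(second))  # -> http-request-2
print(pending.complete(first))   # -> http-request-1
```

Because the request side only records an entry and returns, no thread waits on the backend, which is the concurrency benefit the pattern is chosen for.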

Notice also that the local queue manager has a 'channel' configured to enable it to talk to the backend's queue manager. A remote queue is present to reflect the queue on the back end.

How Will Our Flow Change When It Moves to Containers?

Let's consider what this integration would look like if we moved it to a containerized environment.

There are only two changes.

- MQ is now running in a separate container rather than locally alongside IBM App Connect.

- ACE has been configured to use a remote connection to the separate MQ server.

This means our Integration Server will need to know where to find the MQ server on the network. This information could be different per deployment, such as per environment (e.g., development, test, production), so we would not want it to be baked into the container image. Instead, it will be loaded in from a 'configuration' stored by the container platform, in this case an MQ Endpoint policy. Configuration objects were described in one of our earlier examples.

Deploying an MQ Queue Manager in a Container

We will build on a previous example. Please follow the following instructions to deploy a queue manager on OpenShift using the user interface or command line.

Creating a queue manager in OpenShift

This should result in a queue manager running in a container with something like the following connection properties:

- Queue manager name: QUICKSTART

- Queues for applications to PUT and GET messages: BACKEND

- Channel for client communication with the integration server: ACECLIENT

- MQ hostname and listener port: quickstart-cp4i-mq-ibm-mq/1414

Note that we will have no actual back end in our example, so we will use a single queue ('BACKEND') to which we will put and get messages. In a real deployment, there would be separate send and receive queues, plus channels configured for communication with the backend application.

Exploring the Current Integration Flow

Let us first take a quick look at the actual message flows that we will be using for this scenario.

You will need an installation of the App Connect Toolkit to view the flow and create the MQ Endpoint policy. Since this series is targeted at existing users of the product, we will assume you have knowledge of the Toolkit. Note that although you will be able to view the flow in older versions of the toolkit, you will need to have a more recent version (v11/12) of the toolkit to create the MQ Endpoint policy.

Sample Project Interchange and MQ policy project exports are available on GitHub at https://github.com/amarIBM/hello-world/tree/master/Samples/Scenario-5

After importing the Project Interchange file into the Toolkit, the Application project with request and response flows will appear as below;

Request Flow:

- Receive the request over the HTTP protocol.

- Prepare an MQ message with the content of the HTTP request, and include the correlation information.

- Put the message in the 'BACKEND' MQ queue for the backend application to collect.

In an actual situation, the backend application would read the message from the queue, process it, then respond into a different queue. However, for our simple example, we do not have an existing backend system, so we will read the original message straight back from the same queue and pretend that it is a back-end response.

Response Flow:

- Reads the response message off the queue.

- Generates the HTTP response, including the correlation data.

- Sends the HTTP reply, using the correlation id to match it with the original request.

Re-configuring From Local to Remote Queue Managers

We will now re-configure our flow to use the new remote queue manager. First, we will create an MQ Endpoint policy that specifies all the details about the remote queue manager; then, we will embed this in a configuration object to apply it to the container platform. We will then change the flow to use this configuration object instead of its current local queue manager settings.

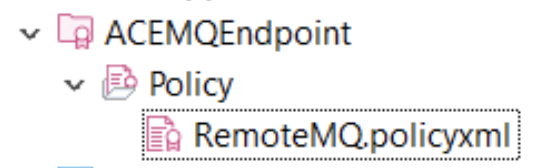

Create the MQ Endpoint Policy Containing the Remote MQ Connection Properties

In the App Connect Toolkit, create a Policy project called 'ACEMQEndpoint', and in it create a policy of type MQEndpoint called 'RemoteMQ'.

Fill in the Policy fields details as shown below using the MQ connection details from our earlier queue manager deployment.

One thing to note here is the Queue manager hostname. If the queue manager is running in a different namespace than the ACE Integration Server, you need to qualify the service name using the namespace. So the queue manager hostname would take the form:

<MQ service name>.<MQ namespace>.svc
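The naming rule above is small enough to express as a helper. This is a minimal Python sketch under the assumption that an unqualified service name is sufficient when both components share a namespace. The function name `mq_service_hostname` is hypothetical, while the service name and the `mq` namespace are the ones used in this example.

```python
def mq_service_hostname(service_name, namespace=None):
    """Return the hostname to use for the queue manager in the policy.

    Pass a namespace only when the queue manager runs in a different
    namespace from the integration server.
    """
    if namespace:
        return f"{service_name}.{namespace}.svc"
    return service_name


# Same namespace as the integration server: the service name is enough.
print(mq_service_hostname("quickstart-cp4i-mq-ibm-mq"))
# -> quickstart-cp4i-mq-ibm-mq

# Queue manager in the 'mq' namespace: qualify the service name.
print(mq_service_hostname("quickstart-cp4i-mq-ibm-mq", "mq"))
# -> quickstart-cp4i-mq-ibm-mq.mq.svc
```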

<?xml version="1.0" encoding="UTF-8"?>
<policies>
  <policy policyType="MQEndpoint" policyName="RemoteMQ" policyTemplate="MQEndpoint">
    <connection>CLIENT</connection>
    <destinationQueueManagerName>QUICKSTART</destinationQueueManagerName>
    <queueManagerHostname>quickstart-cp4i-mq-ibm-mq.mq.svc</queueManagerHostname>
    <listenerPortNumber>1414</listenerPortNumber>
    <channelName>ACECLIENT</channelName>
    <securityIdentity></securityIdentity>
    <useSSL>false</useSSL>
    <SSLPeerName></SSLPeerName>
    <SSLCipherSpec></SSLCipherSpec>
  </policy>
</policies>

Embed the MQ Endpoint Policy in a Configuration Object

All runtime settings for the App Connect Enterprise certified container are provided via 'configuration objects.' This is a specifically formatted file with the runtime settings embedded that can then be applied to the container platform in advance of the container being deployed.

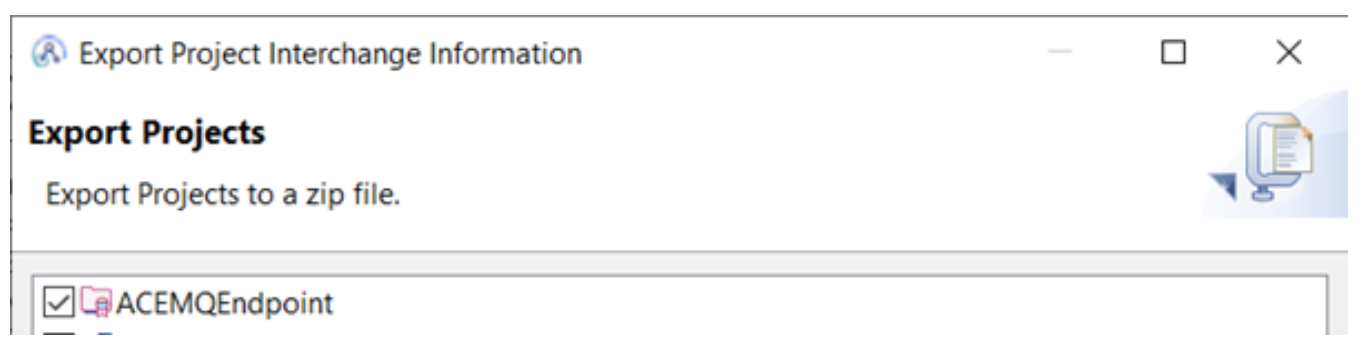

Export the policy project from the Toolkit as a 'Project Interchange' zip file.

Policy projects are embedded into the configuration object in base64 form. So let’s first create the base64 representation of the policy project zip file that we just exported.

# cat ACEMQPolicy.zip |base64

Copy the output of your encoded file and paste it under the spec.contents section of a configuration file as shown below.

apiVersion: appconnect.ibm.com/v1beta1
kind: Configuration
metadata:
  name: ace-mq-policy
  namespace: ace
spec:
  type: policyproject
  contents: >-
    UEsDBBQACAgIAM1delMAAAAAAAAAAAAAAAAWAAAAQUNFTVFFbmRwb2ludC8ucHJvamVjdK1Rywr
    CMBA8K/gP0ruJ3jzEis+boqgf0KZriTQPklT0703SqBTBg3jbmczODFkyvfGqfwVtmBSTZISGSR
    8ElQUT5SQ5HdeDcTJNe12itLwAtUswVDNlndqxHSIyDulssdrsV6JQkglLcOD8I5Wcg7Apwc/Js
    9HJBIBbKK9ZVRwUUI8iXLjVTBSBiXnODbGcI7BSVgbxs0VKVozeUc1QWAKNdoGZN+hdynlkuqx9
    GxMxbhMEf+T+1qSZ8n80iMzza1y4rTVEdQO+d9kGjS8RtMHybfI6Q/u8D1BLBwhcj8Ry2AAAAB0
    CAABQSwMEFAAICAgAzV16UwAAAAAAAAAAAAAAACAAAABBQ0VNUUVuZHBvaW50L1JlbW90ZU1RLn
    BvbGljeXhtbH1S227CMAx9n7R/qPpesko87CEtQlWnoQGitHxASD2I1lxIUjT+fmkbWNHQpEj2O
    T527MR49s2b4AzaMCmSMJ68hAEIKmsmDkm4q96i13CWPj9hJRtGGRjnB8GALsFgqouCJFwVuaiV
    ZMKGnl8T7vgtcGlhVVzZCrhqiL3P6Ku6ulQKAdS6XtJsucjXFUYjyotqMJYJ0lFFCy2siCAH0N1
    1abFbZB9lNd+6zP90vtRpxL9LY0UXO7WMfhlLtI2omrKInyK2585M3DFnitHDNF+yYcaCAL2R2q
    5bvgedxtN4itGDwHXqI3EzNn1j8yy/TT6ivdIAbTWzl0UNwjqbYvSH8tLWQFku00/SGMDIIx9z7
    gb8S2A0Rr+CjKkj6FIBHSQj3K8AGr6z2w10W44fUEsHCJY7gKguAQAATwIAAFBLAwQUAAgICADN
    XXpTAAAAAAAAAAAAAAAAHwAAAEFDRU1RRW5kcG9pbnQvcG9saWN5LmRlc2NyaXB0b3KNjkEKwjA
    QRfeCdwizN1FXUpp2I65d6AHadKqRJFMyg9jbGxEEN+L+v/df3T5iUHfM7ClZ2Og1KEyOBp8uFs
    6nw2oHiqVLQxcooYUZGdpmuagTb6uJgnfzMdMNneyRXfaTUFbFmdjCVWSqjHEUte+jRiEKrOMoe
    vhsdd8xwpuoivNP6usZSo9SdcYRc4lHNq9A86OweQJQSwcILsMb/JYAAAD4AAAAUEsBAhQAFAAI
    CAgAzV16U1yPxHLYAAAAHQIAABYAAAAAAAAAAAAAAAAAAAAAAEFDRU1RRW5kcG9pbnQvLnByb2p
    lY3RQSwECFAAUAAgICADNXXpTljuAqC4BAABPAgAAIAAAAAAAAAAAAAAAAAAcAQAAQUNFTVFFbm
    Rwb2ludC9SZW1vdGVNUS5wb2xpY3l4bWxQSwECFAAUAAgICADNXXpTLsMb/JYAAAD4AAAAHwAAA
    AAAAAAAAAAAAACYAgAAQUNFTVFFbmRwb2ludC9wb2xpY3kuZGVzY3JpcHRvclBLBQYAAAAAAwAD
    AN8AAAB7AwAAAAA=

Save this as a file named ace-mq-policy.yaml.
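The encode-and-paste step can also be scripted, which avoids copy errors with long base64 strings. The following is a minimal Python sketch, assuming the Configuration layout used in this article. The function name `render_policy_configuration` is hypothetical, and the placeholder bytes stand in for the exported zip file.

```python
import base64

# Layout copied from the Configuration object used in this article.
CONFIGURATION_TEMPLATE = """\
apiVersion: appconnect.ibm.com/v1beta1
kind: Configuration
metadata:
  name: {name}
  namespace: {namespace}
spec:
  type: policyproject
  contents: {contents}
"""


def render_policy_configuration(zip_bytes, name, namespace):
    """Return Configuration YAML with the policy project zip embedded as base64."""
    # b64encode returns one unbroken line, so no YAML line folding is needed.
    contents = base64.b64encode(zip_bytes).decode("ascii")
    return CONFIGURATION_TEMPLATE.format(
        name=name, namespace=namespace, contents=contents
    )


# Placeholder bytes for demonstration; a real run would pass the zip contents.
print(render_policy_configuration(b"placeholder zip bytes", "ace-mq-policy", "ace"))
```

In practice, the first argument would be the bytes of the exported Project Interchange file, for example `pathlib.Path("ACEMQPolicy.zip").read_bytes()`, and the returned text would be written to ace-mq-policy.yaml.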

Apply the Configuration Object to the Container Platform

Use the command shown below to create the configuration object in our OpenShift environment using the policy file that we just created in the previous step.

# oc apply -f ace-mq-policy.yaml

Re-Configure to Use the MQEndpoint

Now that we have created an MQ policy and applied it to the OpenShift environment as a configuration, we now need to update the flow to use it.

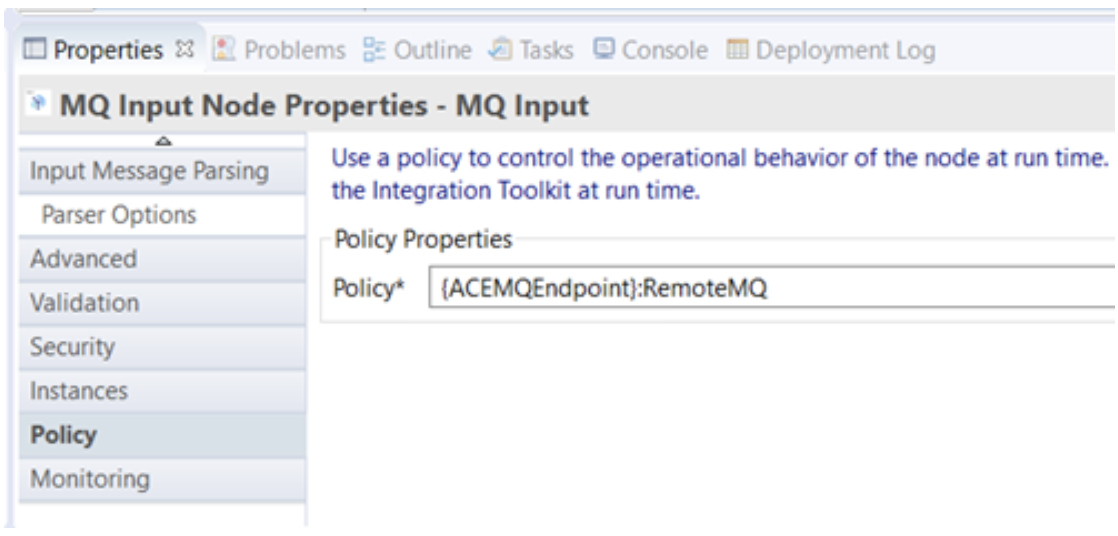

To do this, we simply need to update the 'Policy' property of the MQInput and MQOutput nodes with the policy name. At runtime, the MQ nodes will then resolve the connection parameters for the queue manager using the details in the policy.

Re-Configuring the Flow Using the App Connect Toolkit

As shown below, we could do this by opening the flow in the Toolkit and amending the Policy property (policyUrl) on each MQ node.

Alternative Way to Re-configure the Flow Using mqsiapplybaroverride

Using the Toolkit is straightforward enough for this single flow. It’s worth noting, however, that there is a more command-line-based approach using mqsiapplybaroverride should we have to make an amendment like this across many flows at once.

To know the exact names of properties on the node to override, you can run the mqsireadbar command on the BAR file, which will list all the overridable properties. An example is shown below:

# mqsireadbar -b SimpleHttpMQBackendApp.bar -r

BIP1052I: Reading Bar file using runtime mqsireadbar...

SimpleHttpMQBackendApp.bar:

HttpMQApp.appzip (3/12/21 7:50 PM):

RequestFlow.msgflow (3/12/21 7:50 PM):

SimpleMQBackendFlow_Compute2.esql (3/12/21 7:50 PM):

SimpleMQBackendFlow_inputMessage.xml (3/12/21 7:50 PM):

SimpleMQBackendFlow_Compute.esql (3/12/21 7:50 PM):

ResponseFlow.msgflow (3/12/21 7:50 PM):

SimpleMQBackendFlow_Compute3.esql (3/12/21 7:50 PM):

simple_inputMessage.xml (3/12/21 7:50 PM):

ResponseFlow_Compute.esql (3/12/21 7:50 PM):

application.descriptor (3/12/21 7:50 PM):

SimpleMQBackendFlow_Compute1.esql (3/12/21 7:50 PM):

Deployment descriptor:

RequestFlow#HTTP Input.URLSpecifier = /BackendService

RequestFlow#MQ Output.queueName = BACKEND

RequestFlow#MQ Output.connection

RequestFlow#MQ Output.policyUrl

ResponseFlow#notificationThresholdMsgsPerSec

ResponseFlow#MQ Input.queueName = BACKEND

ResponseFlow#MQ Input.connection

ResponseFlow#MQ Input.policyUrl

Use the mqsiapplybaroverride command to change the properties of the MQInput and MQOutput nodes.

In order to specify our new MQ Endpoint policy name on MQInput and MQOutput node properties, we can then use the mqsiapplybaroverride command and set the policyUrl parameter. The format to specify the policy URL is - {Policy Project Name}:policyName, as shown below:

mqsiapplybaroverride -b C:\HttpMQApp.bar -k HttpMQApp -m "RequestFlow#MQ Output.policyUrl"="{ACEMQEndpoint}:RemoteMQ"

mqsiapplybaroverride -b C:\HttpMQApp.bar -k HttpMQApp -m "ResponseFlow#MQ Input.policyUrl"="{ACEMQEndpoint}:RemoteMQ"
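When many flows need the same amendment, the override commands can be generated from the mqsireadbar listing rather than typed by hand. The following is a minimal Python sketch under stated assumptions: the helper names are hypothetical, the descriptor text is pasted from the listing shown earlier, and only the `-b`, `-k`, and `-m` options used in this article are relied on. It builds the argument lists without running anything.

```python
def policy_url_properties(readbar_output):
    """Return overridable property names that end in '.policyUrl'."""
    names = []
    for line in readbar_output.splitlines():
        # Properties that already have a value are listed as "name = value".
        name = line.split(" = ", 1)[0].strip()
        if name.endswith(".policyUrl"):
            names.append(name)
    return names


def override_command(bar_file, application, property_name, policy_project, policy_name):
    """Build the argument list for one mqsiapplybaroverride call."""
    # Policy URL format from this article: {Policy Project Name}:policyName
    value = f"{{{policy_project}}}:{policy_name}"
    return [
        "mqsiapplybaroverride",
        "-b", bar_file,
        "-k", application,
        "-m", f"{property_name}={value}",
    ]


# Deployment descriptor lines copied from the mqsireadbar output.
DESCRIPTOR = """\
RequestFlow#MQ Output.queueName = BACKEND
RequestFlow#MQ Output.policyUrl
ResponseFlow#MQ Input.queueName = BACKEND
ResponseFlow#MQ Input.policyUrl
"""

for prop in policy_url_properties(DESCRIPTOR):
    print(override_command("HttpMQApp.bar", "HttpMQApp", prop, "ACEMQEndpoint", "RemoteMQ"))
```

Each list can be passed to `subprocess.run` on a machine where the App Connect Enterprise commands are on the path. Passing a list rather than a single string sidesteps shell quoting of the `#`, space, and brace characters in the property names.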

Deploy the Integration to OpenShift

We will now deploy the integration onto the OpenShift platform just as we have in previous scenarios. This time, the only difference is that we will inform the deployment that a new MQ Endpoint policy is stored in a configuration.

Create Integration Server Definition File Pointing to the New MQ Endpoint Policy

The sample Integration server YAML definition points to the new configuration “ace-mq-policy” that we created in the previous section. For convenience, it pulls down a pre-prepared bar file from GitHub that already has the amendments to the MQ Input and MQ Output nodes to look for an MQ Endpoint, just like we described in the previous sections.

Copy the following contents to a YAML file as IS-mq-backend-service.yaml

apiVersion: appconnect.ibm.com/v1beta1
kind: IntegrationServer
metadata:
  name: http-mq-backend-service
  namespace: ace
  labels: {}
spec:
  adminServerSecure: false
  barURL: >-
    https://github.com/amarIBM/hello-world/raw/master/Samples/Scenario-5/SimpleHttpMQBackendApp.bar
  configurations:
    - github-barauth
    - ace-mq-policy
  createDashboardUsers: true
  designerFlowsOperationMode: disabled
  enableMetrics: true
  license:
    accept: true
    license: L-KSBM-C37J2R
    use: AppConnectEnterpriseProduction
  pod:
    containers:
      runtime:
        resources:
          limits:
            cpu: 300m
            memory: 350Mi
          requests:
            cpu: 300m
            memory: 300Mi
  replicas: 1
  router:
    timeout: 120s
  service:
    endpointType: http
  version: '12.0'

Deploy Integration Server

We now use the YAML definition file created above to deploy the Integration Server.

# oc apply -f IS-mq-backend-service.yaml

Verify the Deployment

You can check the logs of the Integration Server pod to verify that the deployment has been successful and that a listener has started listening for the incoming HTTP request on the service endpoint.

# oc logs <IS pod>

2021-11-26 11:50:59.255912: BIP2155I: About to 'Start' the deployed resource 'HttpMQApp' of type

'Application'.

An http endpoint was registered on port '7800', path '/BackendService'.

2021-11-26 11:50:59.332248: BIP3132I: The HTTP Listener has started listening on port '7800' for

'http' connections.

2021-11-26 11:50:59.332412: BIP1996I: Listening on HTTP URL '/BackendService'.

Started native listener for HTTP input node on port 7800 for URL /BackendService

2021-11-26 11:50:59.332780: BIP2269I: Deployed resource 'SimpleHttpMQBackendFlow'

(uuid='SimpleHttpMQBackendFlow',type='MessageFlow') started successfully.
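For repeated deployments, the same check can be automated by scanning the log text for the listener messages. The following is a minimal Python sketch, assuming that the BIP3132I and BIP1996I messages seen in the log above are a sufficient sign that the HTTP endpoint is up. The function name and the choice of codes are illustrative, not an official health check.

```python
# Message codes taken from the sample log above; treating them as the
# success criteria is an assumption made for this sketch.
REQUIRED_MESSAGES = ("BIP3132I", "BIP1996I")


def missing_startup_messages(log_text, required=REQUIRED_MESSAGES):
    """Return the required BIP message codes that do not appear in the log."""
    return [code for code in required if code not in log_text]


SAMPLE_LOG = """\
2021-11-26 11:50:59.332248: BIP3132I: The HTTP Listener has started listening on port '7800' for 'http' connections.
2021-11-26 11:50:59.332412: BIP1996I: Listening on HTTP URL '/BackendService'.
"""

# An empty list means every expected message was found.
print(missing_startup_messages(SAMPLE_LOG))  # -> []
```

The log text itself would come from the `oc logs` command shown above, for example by saving its output to a file first.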

Testing the Message Flow

Let us test the message flow by invoking the service URL using the curl command.

Request: We have already seen in previous articles how to get the public URL using the 'oc get route' command. Once you obtain the external URL, invoke the Request flow using the following command parameters.

# curl -X POST http://ace-mq-backend-service-http-ace.apps.cp4i-2021-demo.xxx.com/BackendService --data "<Request>Test Request</Request>"

Output:

You should see the response below from end-to-end processing by Request-Response flow.

<Response><message>This is a response from backend service</message></Response>
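The same test can be scripted when it needs to run repeatedly, for instance after each deployment. The following is a minimal Python sketch using only the standard library. The function names are hypothetical, and the host name is the masked placeholder from the curl command above, so `call_backend` will only succeed once it is replaced with your own route.

```python
import urllib.request


def build_backend_request(base_url, payload):
    """Create a POST request for the /BackendService endpoint."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/BackendService",
        data=payload.encode("utf-8"),
        method="POST",
    )


def call_backend(base_url, payload, timeout=30):
    """Send the request and return the response body as text."""
    request = build_backend_request(base_url, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Build (but do not send) the request, using the placeholder host from above.
request = build_backend_request(
    "http://ace-mq-backend-service-http-ace.apps.cp4i-2021-demo.xxx.com",
    "<Request>Test Request</Request>",
)
print(request.get_method(), request.full_url)
```

Calling `call_backend` with a real route URL should return the response document shown above if the request and response flows are both working.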

Common Error Scenarios:

If you encounter any errors related to remote MQ connectivity during runtime, they are usually the result of some missing configuration or incorrect configuration. A list of possible errors a user might encounter and possible resolutions are compiled in the following document.

https://github.com/tdolby-at-uk-ibm-com/ace-remoteQM-policy/blob/main/README-errors.md

Advanced Topics

So we have seen the most straightforward path for an integration involving MQ.

- We reconfigured our flow to use remote calls and provided it with the MQ remote connection properties using a 'configuration' pre-loaded onto OpenShift.

- We then deployed the reconfigured bar file to OpenShift using the IBM App Connect certified container, which already contains the MQ Client.

Let’s consider some slightly more advanced scenarios and what they might entail.

What if the MQ Queue Manager We Want to Talk to Isn’t Running in a Container in OpenShift?

The good news here is that since we’ve moved to a remote client connection, App Connect doesn’t care where the queue manager is running. In theory, it could connect directly to a remote queue manager that is, for example, running on a mainframe, as long as the integration server can reach the queue manager host and port over the network. As with any networking scenario, we should consider the latency and reliability of the connection. If we know the network between the two points has high latency or availability issues, it might be better to introduce a nearer queue manager in a container in OpenShift, then create a server-to-server MQ channel to the backend’s queue manager. MQ’s mature channel management will then handle network issues as it has always done.
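A rough first look at reachability and latency can be taken with a plain TCP connection to the listener port. The following is a minimal Python sketch with a hypothetical helper name. It only shows that something accepts connections on the port, and it says nothing about whether the MQ channel is defined or authorized. The demonstration uses a throwaway local listener so that it runs without a queue manager.

```python
import socket
import time


def connect_latency(host, port, timeout=3.0):
    """Return the seconds taken to open a TCP connection, or None on failure."""
    started = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return time.monotonic() - started
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return None


# Demonstration against a local listener instead of a real queue manager.
with socket.socket() as listener:
    listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]
    latency = connect_latency("127.0.0.1", port)
    print("reachable" if latency is not None else "unreachable")
```

Run from a pod in the integration server's namespace, a call such as `connect_latency("quickstart-cp4i-mq-ibm-mq.mq.svc", 1414)` would give a first indication of whether a nearer queue manager is worth considering.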

What if We Do Need a Local MQ Queue Manager?

It is still possible to run a local MQ server in the same container as the integration server. However, the circumstances where this is required are hopefully rare. If you do need a local queue manager, the integration server becomes more stateful, which may make it harder to scale. You would need to build a custom container image with the MQ server included (although you could use the certified container image as a starting point). However, the good news is that we can deploy in a more fine-grained style in containers. It’s likely that not all of your integrations require local MQ servers. So, you could use the standard certified container for those integrations and only use your custom container where necessary.

How Many Queue Managers Should I Have?

If originally you had 10 integrations, all using the same local queue manager, should you create one remote queue manager or 10? In all honesty, this probably deserves a dedicated article of its own, as there are many different possibilities to be explored here. There are a few simple statements we can make, however. It really comes down to ownership. Who is going to be looking after those queue managers operationally? Who is most affected by their availability? Who is likely to be making changes to them? The main benefits of splitting out queue managers would be so that they can be operated independently, changed independently, have independently configured availability characteristics, etc. If none of that independence is required, you could continue to have all the queues relating to these integrations on a single queue manager.

As mentioned, many of the advanced topics above may well make good candidates for a future post. If you’re especially interested in a particular topic (maybe even one we’ve not yet covered), please let us know in this post's comments.

Acknowledgment and thanks to Kim Clark for providing valuable technical input to this article.

Published at DZone with permission of Amar Shah. See the original article here.
