How to Test and Benchmark Database Clusters

In this post, we'll look at the best practices for NoSQL database benchmarking and a stress testing tool you can use to fail fast with your database projects.

Join the DZone community and get the full member experience.

Join For FreeBenchmarking will help you fail fast and recover fast before it’s too late.

Teams should always consider benchmarking as part of the acceptance procedure for any application. What is the overall user experience that you want to bake into the application itself? Ensure that you can support scaling your customer base without compromising user experience or the actual functionality of the app.

Users should also consider their disaster recovery targets. Ask yourself “How much infrastructure overhead am I willing to purchase in order to maintain the availability of my application in dire times?” And, of course, think about the database’s impacts on the day-to-day lives of your DBAs.

Here are some best practices that we developed at ScyllaDB while helping hundreds of teams get started with ScyllaDB, a monstrously fast and scalable open-source NoSQL database.

1. Set Tangible Targets

Here are some considerations for your team to set tangible targets:

- What is the use case? — This should determine what database technology you are benchmarking. You have to choose databases that have dominant features to address the needs of your use case.

- Timeline for testing and deployment — Your timeline should drive your plans. What risks and delays may you be facing?

- MVP, medium, and long-term application scale — Many times people just look at the MVP without considering the application lifecycle. You’re not going to need 1,000 nodes on day one, but not thinking ahead can lead to dead-end development. Have a good estimate on the scaling that you need to support.

- Budget for your database — Do this both for your POC and your production needs. Overall application costs, including servers, software licenses, and manpower. Consider how some technologies can start out cheap, but do not scale linearly.

2. Create a Testing Schedule

Your timeline needs to take into consideration getting consensus from the different stakeholders and bringing them into the process. Deploying a service or servers might be as easy as the click of a button. But that doesn’t take into account any bureaucratic procedures you may need to bake into your schedule.

Bake into your schedule the processes needed. Also, make sure you have the right resources to help you evaluate the system. Developers, DBAs, and systems administrators are limited resources in any organization. Make sure you schedule your testing with their availability in mind.

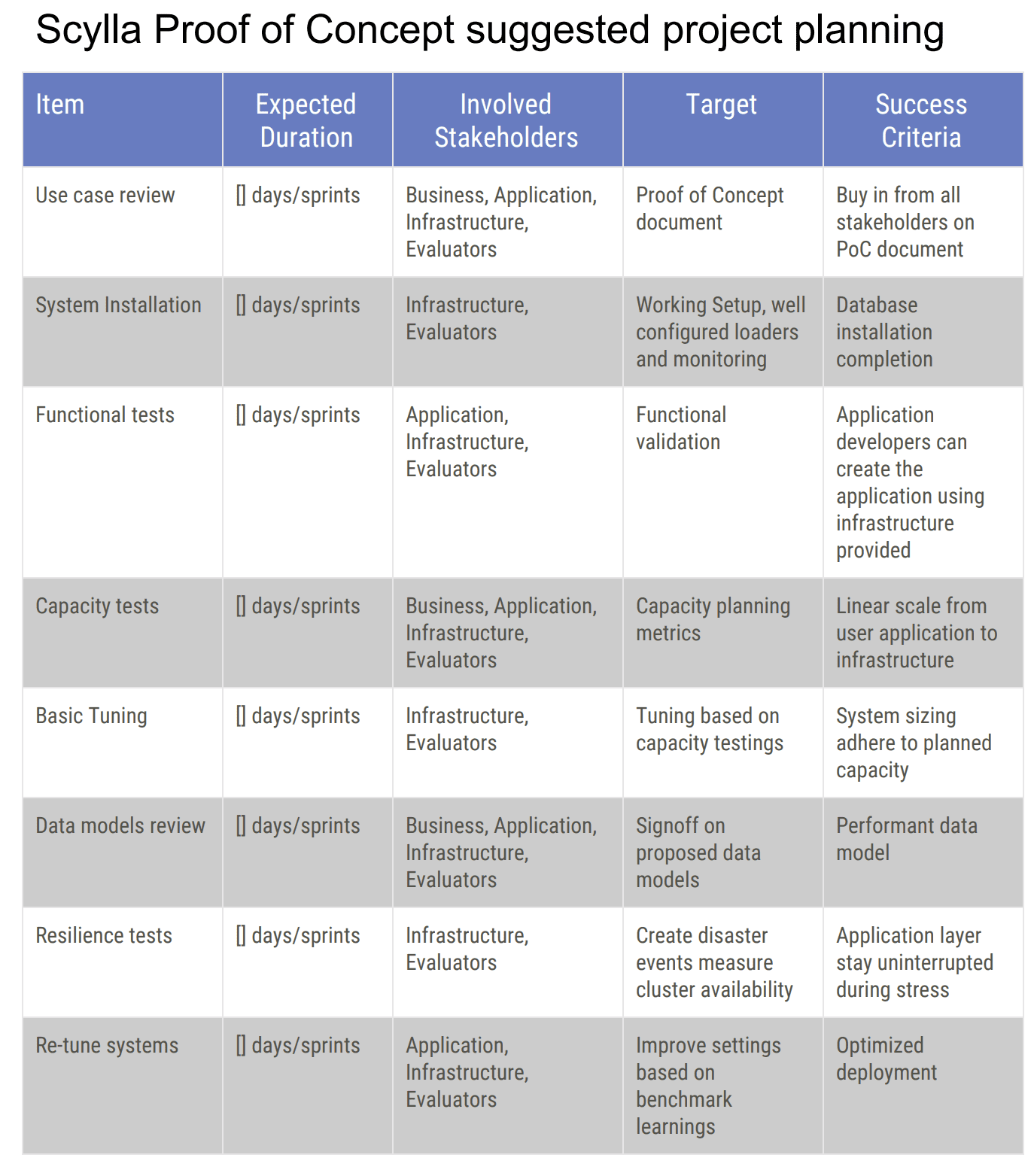

Here is an example of a project plan checklist that you can use for your own projects:

Download the proof of concept checklist

This is the checklist that we recommend in every proof of concept that we conduct. We’re taking into consideration many of the aspects that you sometimes do not think are part of the benchmarking but do take a lot of resources and a lot of time from your perspective and from your team.

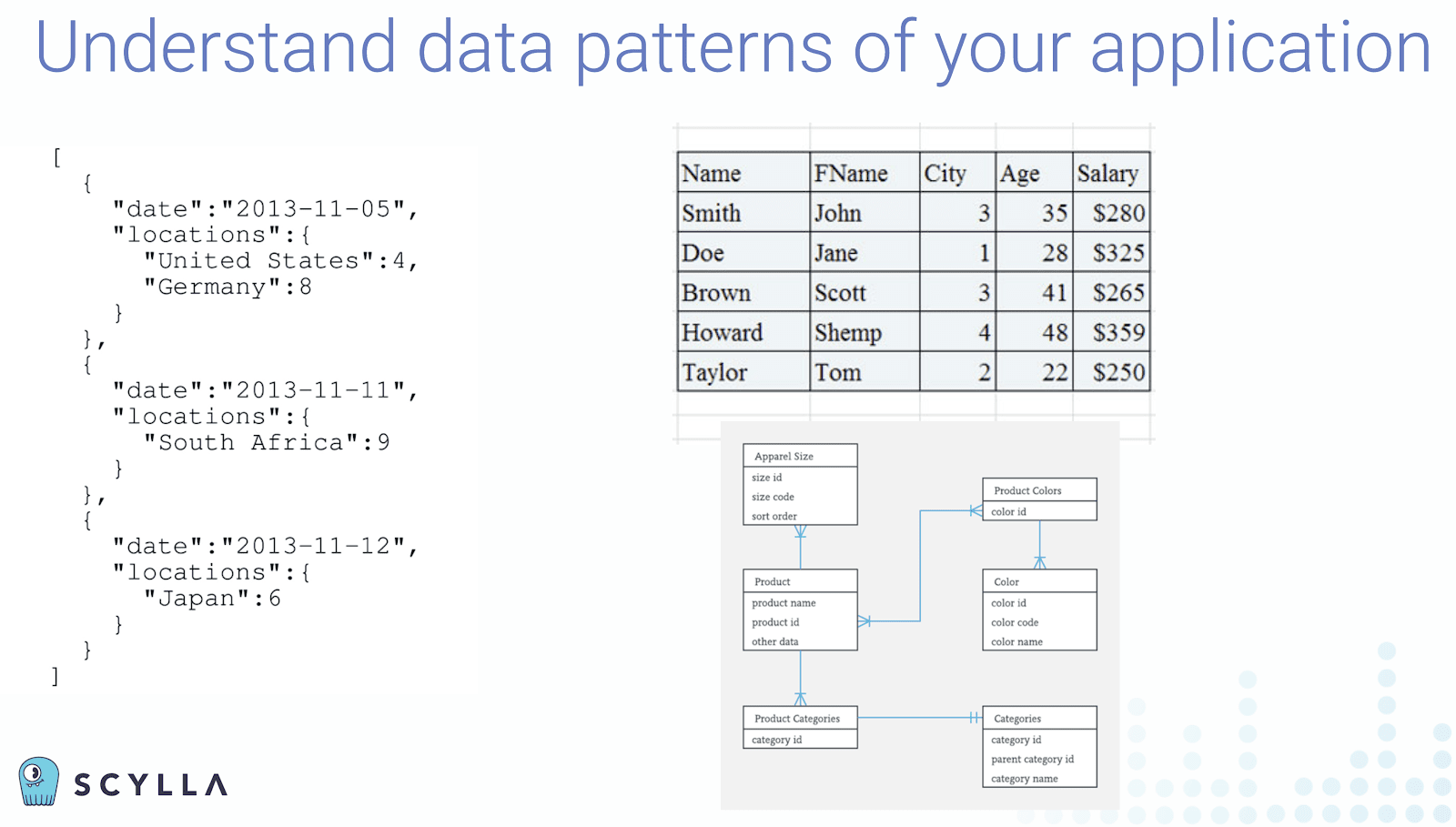

3. Understand Data Patterns of Your Application

Data modeling itself can lead to many horror stories with benchmarking. For example, one user tried to force a blob of one, two, or even five gigabytes into a single cell. Keep only the metadata in the database, and put the actual object in storage outside of the database. Not only will this make better sense operationally, but also financially.

Databases are optimized for certain data models and access patterns from your application. Ensure that the application data can be easily stored and accessed. Also, make sure that the developers you have for the POC are trained on data modeling for the target database.

Also, realize that not all benchmarking stressing tools have data modeling capabilities in them. Go beyond generic tests. If you have your own data model requirement and can benchmark your application with it, that’s the best case. Look at the synthetic tools to see if they were capable of data model modifications.

4. Benchmarking Hardware

While it is understandable, many benchmarks fail because of underpowered testing environments; using hardware systems too small to provide an adequate test experience--especially compared to what their production systems would be like. Too many times we see evaluators trying to squeeze the life out of a dime. These underpowered test systems can highly but unfairly bias your results. Think about the amount of CPUs you’re going to give to the system. To see a system’s benefits, and for a fair performance evaluation, don’t run it on a laptop.

5. Stress Test Tools and Infrastructure

Make sure you have dedicated servers as stressing nodes executing the stressing tools like Cassandra-stress and Yahoo Cloud Server Benchmark (YCSB). If you run the stress tools on the same server as the database, it can cause resource contentions and collisions. It also helps set up the test as realistically as possible.

In the same vein, use a realistic amount of data, to make sure you’re pushing and creating enough unique data. There’s a huge difference in performance if you have only 10 gigabytes of data in the server or 10 terabytes. This is not an apples-to-apples comparison. (We’ve been pointing out for many years how other databases do not scale linearly in performance.)

Look at the defaults that various stress tests use, and modify them for your use case where and if possible. Let’s say Cassandra-stress is doing a 300-byte partition key. That might not be representative of your actual workload. So you want to change that. You want to change how many columns are there, so on and so forth. Make sure that your stressing tool is representing your actual workload.

6. Disaster Recovery Testing

You need to test your ability to sustain regular life events. Nodes will crash. Disks will corrupt. And network cables will be disconnected. That will happen for sure, and it always happens during peak times. It’s going to be on your Black Friday. It’s going to be during the middle of your game. Things happen at exactly the wrong time. So you need to take disaster into account and test capacity planning with reduced nodes, a network partition, or other undesired events. This has the added benefit of teaching you about the true capabilities of the resiliency of a system.

The challenging task of coming alive back from a backup must be tested. That means you should also spend a little bit more time (and money) by testing a backup and restore process to see how fast and easy your restoration will be, and that restoration works properly.

7. Observability

Be sure to the capabilities of your observability. A powerful monitoring system is required for you to understand the database and system capabilities. This allows you to understand the performance of your data model and your application efficiency. You can also discover data patterns.

Moving to Production

Also, once you have finished with your POC you aren’t done yet! For the next steps of getting into production, you will need a checklist to make sure you are ready. Feel free to use the checklist that we prepared, or modify it to create your own.

Published at DZone with permission of Peter Corless. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments