Iceberg Catalogs: A Guide for Data Engineers

Learn about Apache Iceberg catalogs, their types, configurations, and the best-fit solutions for managing metadata and large datasets in different environments.

Join the DZone community and get the full member experience.

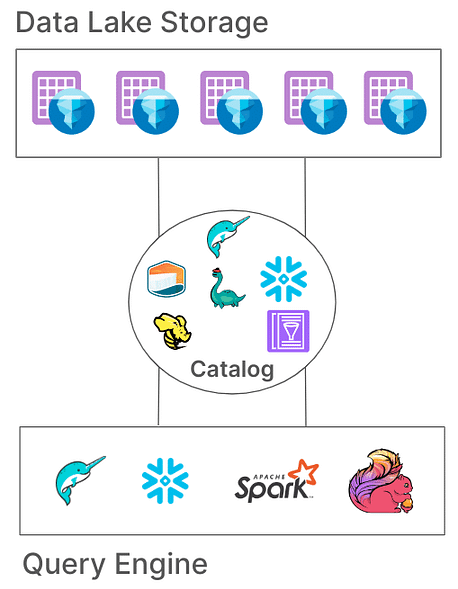

Join For FreeApache Iceberg has become a popular choice for managing large datasets with flexibility and scalability. Catalogs are central to Iceberg’s functionality, which is vital in table organization, consistency, and metadata management. This article will explore what Iceberg catalogs are, their various implementations, use cases, and configurations, providing an understanding of the best-fit catalog solutions for different use cases.

What Is an Iceberg Catalog?

In Iceberg, a catalog is responsible for managing table paths, pointing to the current metadata files that represent a table’s state. This architecture is essential because it enables atomicity, consistency, and efficient querying by ensuring that all readers and writers access the same state of the table. Different catalog implementations store this metadata in various ways, from file systems to specialized metastore services.

Core Responsibilities of an Iceberg Catalog

The fundamental responsibilities of an Iceberg catalog are:

- Mapping Table Paths: Linking a table path (e.g., “db.table”) to the corresponding metadata file.

- Atomic Operations Support: Ensuring consistent table state during concurrent reads/writes.

- Metadata Management: Storing and managing the metadata, ensuring accessibility and consistency.

Iceberg catalogs offer various implementations to accommodate diverse system architectures and storage requirements. Let’s examine these implementations and their suitability for different environments.

Types of Iceberg Catalogs

1. Hadoop Catalog

The Hadoop Catalog is typically the easiest to set up, requiring only a file system. This catalog manages metadata by looking up the most recent metadata file in a table’s directory based on file timestamps. However, due to its reliance on file-level atomic operations (which some storage systems like S3 lack), the Hadoop catalog may not be suitable for production environments where concurrent writes are common.

Configuration Example

To configure the Hadoop catalog with Apache Spark:

spark-sql --packages org.apache.iceberg:iceberg-spark-runtime-3.3_2.12:0.14.0 \

--conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions \

--conf spark.sql.catalog.my_catalog=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.my_catalog.type=hadoop \

--conf spark.sql.catalog.my_catalog.warehouse=file:///D:/sparksetup/iceberg/spark_warehouseA different way to set catalog in the spark job itself:

SparkConf sparkConf = new SparkConf()

.setAppName("Example Spark App")

.setMaster("local[*]")

.set("spark.sql.extensions","org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions")

.set("spark.sql.catalog.local","org.apache.iceberg.spark.SparkCatalog")

.set("spark.sql.catalog.local.type","hadoop")

.set("spark.sql.catalog.local.warehouse", "file:///D:/sparksetup/iceberg/spark_warehouse")In the above example, we set the catalog name to “local” as configured in spark “spark.sql.catalog.local". This can be a choice of your name.

Pros:

- Simple setup, no external metastore required.

- Ideal for development and testing environments.

Cons:

- Limited to single file systems (e.g., a single S3 bucket).

- Not recommended for production

2. Hive Catalog

The Hive Catalog leverages the Hive Metastore to manage metadata location, making it compatible with numerous big data tools. This catalog is widely used for production because of its integration with existing Hive-based infrastructure and compatibility with multiple query engines.

Configuration Example

To use the Hive catalog in Spark:

spark-sql --packages org.apache.iceberg:iceberg-spark-runtime-3.3_2.12:0.14.0 \

--conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions \

--conf spark.sql.catalog.my_catalog=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.my_catalog.type=hive \

--conf spark.sql.catalog.my_catalog.uri=thrift://<metastore-host>:<port>Pros:

- High compatibility with existing big data tools.

- Cloud-agnostic and flexible across on-premises and cloud setups.

Cons:

- Requires maintaining a Hive metastore, which may add operational complexity.

- Lacks multi-table transaction support, limiting atomicity for operations across tables

3. AWS Glue Catalog

The AWS Glue Catalog is a managed metadata catalog provided by AWS, making it ideal for organizations heavily invested in the AWS ecosystem. It handles Iceberg table metadata as table properties within AWS Glue, allowing seamless integration with other AWS services.

Configuration Example

To set up AWS Glue with Iceberg in Spark:

spark-sql --packages org.apache.iceberg:iceberg-spark-runtime-x.x_x.xx:x.x.x,software.amazon.awssdk:bundle:x.xx.xxx \

--conf spark.sql.catalog.my_catalog=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.my_catalog.catalog-impl=org.apache.iceberg.aws.glue.GlueCatalog \

--conf spark.sql.catalog.my_catalog.io-impl=org.apache.iceberg.aws.s3.S3FileIO \

--conf spark.hadoop.fs.s3a.access.key=$AWS_ACCESS_KEY \

--conf spark.hadoop.fs.s3a.secret.key=$AWS_SECRET_ACCESS_KEYPros:

- Managed service, reducing infrastructure and maintenance overhead.

- Strong integration with AWS services.

Cons:

- AWS-specific, which limits cross-cloud flexibility.

- No support for multi-table transactions

4. Project Nessie Catalog

Project Nessie offers a “data as code” approach, allowing data version control. With its Git-like branching and tagging capabilities, Nessie enables users to manage data branches in a way similar to source code. It provides a robust framework for multi-table and multi-statement transactions.

Configuration Example

To configure Nessie as the catalog:

spark-sql --packages "org.apache.iceberg:iceberg-spark-runtime-x.x_x.xx:x.x.x" \

--conf spark.sql.catalog.my_catalog=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.my_catalog.catalog-impl=org.apache.iceberg.nessie.NessieCatalog \

--conf spark.sql.catalog.my_catalog.uri=http://<host>:<port>Pros:

- Provides “data as code” functionalities with version control.

- Supports multi-table transactions.

Cons:

- Requires self-hosting, adding infrastructure complexity.

- Limited tool support compared to Hive or AWS Glue

5. JDBC Catalog

The JDBC Catalog allows you to store metadata in any JDBC-compliant database, like PostgreSQL or MySQL. This catalog is cloud-agnostic and ensures high availability by using reliable RDBMS systems.

Configuration Example

spark-sql --packages org.apache.iceberg:iceberg-spark-runtime-x.x_x.xx:x.x.x \

--conf spark.sql.catalog.my_catalog=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.my_catalog.catalog-impl=org.apache.iceberg.jdbc.JdbcCatalog \

--conf spark.sql.catalog.my_catalog.uri=jdbc:<protocol>://<host>:<port>/<database> \

--conf spark.sql.catalog.my_catalog.jdbc.user=<username> \

--conf spark.sql.catalog.my_catalog.jdbc.password=<password>Pros:

- Easy to set up with existing RDBMS infrastructure.

- High availability and cloud-agnostic.

Cons:

- No support for multi-table transactions.

- Increases dependencies on JDBC drivers for all accessing tools

6. Snowflake Catalog

Snowflake offers robust support for Apache Iceberg tables, allowing users to leverage Snowflake’s platform as the Iceberg catalog. This integration combines Snowflake’s performance and query semantics with the flexibility of Iceberg’s open table format, enabling efficient management of large datasets stored in external cloud storage. Refer to the snowflake documentation for further configuration it at the link

Pros:

- Seamless Integration: Combines Snowflake’s performance and query capabilities with Iceberg’s open table format, facilitating efficient data management.

- Full Platform Support: Provides comprehensive read and write access, along with features like ACID transactions, schema evolution, and time travel.

- Simplified Maintenance: Snowflake handles lifecycle tasks such as compaction and reducing operational overhead.

Cons:

- Cloud and Region Constraints: The external volume must be in the same cloud provider and region as the Snowflake account, limiting cross-cloud or cross-region configurations.

- Data Format Limitation: Supports only the Apache Parquet file format, which may not align with all organizational data format preferences.

- Third-Party Client Restrictions: Prevents third-party clients from modifying data in Snowflake-managed Iceberg tables, potentially impacting workflows that rely on external tools.

7. REST-Based catalogs

Iceberg supports REST-based catalogs to address several challenges associated with traditional catalog implementations.

Challenges With Traditional Catalogs

- Client-Side Complexity: Traditional catalogs often require client-side configurations and dependencies for each language (Java, Python, Rust, Go), leading to inconsistencies across different programming languages and processing engines. Read more about it here.

- Scalability Constraints: Managing metadata and table operations at the client level can introduce bottlenecks, affecting performance and scalability in large-scale data environments.

Benefits of Adopting the REST Catalog

- Simplified Client Integration: Clients can interact with the REST catalog using standard HTTP protocols, eliminating the need for complex configurations or dependencies.

- Scalability: The REST catalog's server-side architecture allows for scalable metadata management, accommodating growing datasets and concurrent access patterns.

- Flexibility: Organizations can implement custom catalog logic on the server side, tailoring the REST catalog to meet specific requirements without altering client applications.

Several implementations of the REST catalog have emerged, each catering to specific organizational needs:

- Gravitino: An open-source Iceberg REST catalog service that facilitates integration with Spark and other processing engines, offering a straightforward setup for managing Iceberg tables.

- Tabular: A managed service providing a REST catalog interface, enabling organizations to leverage Iceberg’s capabilities without the overhead of managing catalog infrastructure. Read more at Tabular.

- Apache Polaris: An open-source, fully-featured catalog for Apache Iceberg, implementing the REST API to ensure seamless multi-engine interoperability across platforms like Apache Doris, Apache Flink, Apache Spark, StarRocks, and Trino. Read more at GitHub.

One of my favorite and simple ways to try out the REST catalog with Iceberg tables is using plain Java REST implementation. Please check the GitHub link here.

Conclusion

Selecting the appropriate Apache Iceberg catalog is crucial for optimizing your data management strategy. Here’s a concise overview to guide your decision:

- Hadoop Catalog: Best suited for development and testing environments due to its simplicity. However, consistency issues may be encountered in production scenarios with concurrent writes.

- Hive Metastore Catalog: This is ideal for organizations with existing Hive infrastructure. It offers compatibility with a wide range of big data tools and supports complex data operations. However, maintaining a Hive Metastore service can add operational complexity.

- AWS Glue Catalog: This is optimal for those heavily invested in the AWS ecosystem. It provides seamless integration with AWS services and reduces the need for self-managed metadata services. However, it is AWS-specific, which may limit cross-cloud flexibility.

- JDBC Catalog: Suitable for environments preferring relational databases for metadata storage, allowing the use of any JDBC-compliant database. This offers flexibility and leverages existing RDBMS infrastructure but may introduce additional dependencies and require careful management of database connections.

- REST Catalog: This is ideal for scenarios requiring a standardized API for catalog operations, enhancing interoperability across diverse processing engines and languages. It decouples catalog implementation details from clients but requires setting up a REST service to handle catalog operations, which may add initial setup complexity.

- Project Nessie Catalog: This is perfect for organizations needing version control over their data, similar to Git. It supports branching, tagging, and multi-table transactions. It provides robust data management capabilities but requires deploying and managing the Nessie service, which may add operational overhead.

Understanding these catalog options and their configurations will enable you to make informed choices and optimize your data lake or lakehouse setup to meet your organization’s specific needs.

Opinions expressed by DZone contributors are their own.

Comments