Import Data From Hadoop Into CockroachDB

In this article, we are going to take a slight detour from docker compose and evaluate ingestion of data from Hadoop into CockroachDB.

Join the DZone community and get the full member experience.

Join For Free- Information on CockroachDB can be found here.

- Information on Hadoop Ozone can be found here.

- Information on Minio can be found here.

- Upload a file to HDFS.

I have a CSV file I created with my favorite data generator tool, Mockaroo.

curl "https://api.mockaroo.com/api/38ef0ea0?count=1000&key=a2efab40" > "part5.csv" hdfs dfs -mkdir /data hdfs dfs -chmod -R 777 /data hdfs dfs -put part5.csv /data

Data looks like this:

id,first_name,last_name fc2482b8-48e1-470d-976c-3b4134f44e65,Sandro,Bredee 719e2c11-e1fd-4129-b162-7557d20db728,Rock,Larkings b6101f8c-a22b-4c51-bbab-058cd27aec6c,Nicol,Commins 63fc4f80-2d82-4dea-a86f-fa6d2a7f10cf,Maye,Columbine 5be11b08-80fb-4a24-bb32-edf565126cde,Marnie,Batte 75280e86-0558-4a67-8900-431fa8c3f3b2,Malena,Delves cf8f5056-1cb0-4838-9afc-d1f58a56df0a,Shae,Betteson e337f5a1-10c6-4d97-9590-38d2d6502285,Averil,Twigge 553e83d6-ce93-43c2-a64e-f8361cd65820,Amanda,Brundle

- Enable webhdfs on your Hadoop cluster.

The main thing task here is to edit hdfs-site.xml file and make sure the following property is set to true.

<property> <name>dfs.webhdfs.enabled</name> <value>true</value> <final>true</final> </property>

A more in-depth overview of webhdfs can be found on the Hadoop website.

- Make sure the file is accessible via WebHDFS:

hdfs dfs -ls webhdfs://hadoop.example.com:50070/data/

Found 1 items -rw-r--r-- 1 vagrant hdfs 52061 2020-01-16 15:40 webhdfs://hadoop.example.com:50070/data/part5.csv

Unfortunately, webhdfs scheme is not supported and we need to rely on the http protocol for our test. If you're curious, CockroachDB will output the following message if we attempt to import using webhdfs scheme.

root@:26257/defaultdb> IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('webhdfs://hadoop.example.com:50070/data/part5.csv')

WITH skip = '1';

pq: unsupported storage scheme: "webhdfs"

Let's try accessing the file over http using curl

curl -i "http://hadoop.example.com:50070/webhdfs/v1/data/?op=LISTSTATUS"

HTTP/1.1 200 OK

Cache-Control: no-cache

Expires: Thu, 16 Jan 2020 15:52:18 GMT

Date: Thu, 16 Jan 2020 15:52:18 GMT

Pragma: no-cache

Expires: Thu, 16 Jan 2020 15:52:18 GMT

Date: Thu, 16 Jan 2020 15:52:18 GMT

Pragma: no-cache

Content-Type: application/json

X-FRAME-OPTIONS: SAMEORIGIN

Transfer-Encoding: chunked

{"FileStatuses":{"FileStatus":[

{"accessTime":1579189250309,"blockSize":134217728,"childrenNum":0,"fileId":16405,"group":"hdfs","length":52061,"modificationTime":1579189251025,"owner":"vagrant","pathSuffix":"part5.csv","permission":"644","replication":1,"storagePolicy":0,"type":"FILE"}

]}}

Again, depending on your situation, you will need to authenticate and configure Kerberos.

- Read the file using curl:

curl -i -L "http://hadoop.example.com:50070/webhdfs/v1/data/part5.csv?op=OPEN&user.name=vagrant"

- Import into CockroachDB:

root@:26257/defaultdb> IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('http://hadoop.example.com:50070/webhdfs/v1/data/part5.csv')

WITH skip = '1';

pq: error response from server: 400 Bad Request "{\"RemoteException\":{\"exception\":\"UnsupportedOperationException\",\"javaClassName\":\"java.lang.UnsupportedOperationException\",\"message\":\"op=NULL is not supported\"}}"

Let's try again adding ?op=OPEN to the path.

IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('http://hadoop.example.com:50070/webhdfs/v1/data/part5.csv?op=OPEN')

WITH skip = '1';

root@:26257/defaultdb> IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('http://hadoop.example.com:50070/webhdfs/v1/data/part5.csv?op=OPEN')

WITH skip = '1';

job_id | status | fraction_completed | rows | index_entries | system_records | bytes

+--------------------+-----------+--------------------+------+---------------+----------------+-------+

521406882554839041 | succeeded | 1 | 1000 | 1000 | 0 | 81215

(1 row)

Time: 153.8283ms

And here we are, we just loaded data into CockroachDB from Hadoop:

root@:26257/defaultdb> select * from importhdfs limit 10;

id | first_name | last_name

+--------------------------------------+------------+-----------+

00aca9b0-3d78-4664-9f5c-9a58ba481aff | Kerk | Viney

00d37d61-f78b-4755-9aef-9e3112999f2e | Zita | Brisker

01e8422f-8c07-4989-b740-67b6811c6cef | Sylas | Hatchard

020106fe-f6ca-4715-ba7e-548a883e0a33 | Sapphire | Good

031988cb-e760-458d-b6c6-902ebd52f470 | Rica | Winley

0330c19f-c0da-4b38-aba4-8e0fd264ed04 | Korie | Blazic

033c08b5-fd04-4dc1-a612-2b991e6da619 | Walsh | Kernes

0382a85b-7140-4041-87a0-96da852c5c4d | Fredia | Boliver

038a0ff4-4055-4dec-8762-658dc096b997 | Marven | Baddow

039ca369-113a-4d0b-9b15-24d2c49489fa | Yulma | Adamowicz

(10 rows)

- Use Minio as HDFS Gateway.

I was not aware of this but on a hunch, I searched whether Minio could serve as an HDFS Gateway, and alas it does! Though at the moment, this feature lives on Github and not in their docs so I question the supportability. Also, because we're testing with smaller volumes, using Minio may have additional benefits to load balance the data, how scalable and feasible it is, I leave it to you to test.

Setting it up is also a breeze.

- Set up Minio as an HDFS gateway.

wget https://dl.min.io/server/minio/release/linux-amd64/minio chmod +x minio export MINIO_ACCESS_KEY=minio export MINIO_SECRET_KEY=minio123 ./minio gateway hdfs hdfs://hadoop.example.com:8200

I used the hadoop user to start the gateway, security implications of which should be left to the reader's judgment. My hadoop fs.defaultFS property is mapped to port 9000. Since Minio gateway also uses port 9000 by default, I edited core-site.xml to reflect port 8200.

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop.example.com:8200</value>

</property>

With that, I was able to start the gateway with ./minio gateway hdfs hdfs://hadoop.example.com:8200

When the gateway is up, you should see:

*** Warning: Not Ready for Production *** Endpoint: http://10.0.2.15:9000 http://192.168.33.10:9000 http://127.0.0.1:9000 AccessKey: minio SecretKey: minio123 Browser Access: http://10.0.2.15:9000 http://192.168.33.10:9000 http://127.0.0.1:9000 Command-line Access: https://docs.min.io/docs/minio-client-quickstart-guide $ mc config host add myhdfs http://10.0.2.15:9000 minio minio123 Object API (Amazon S3 compatible): Go: https://docs.min.io/docs/golang-client-quickstart-guide Java: https://docs.min.io/docs/java-client-quickstart-guide Python: https://docs.min.io/docs/python-client-quickstart-guide JavaScript: https://docs.min.io/docs/javascript-client-quickstart-guide .NET: https://docs.min.io/docs/dotnet-client-quickstart-guide

Once you browse to the web UI and input the credentials, you should see the file at the root of the /data dir.

At this point, we should be able to execute import pointing to the file with the HTTP scheme.

- Use Minio to import HDFS data into CockroachDB.

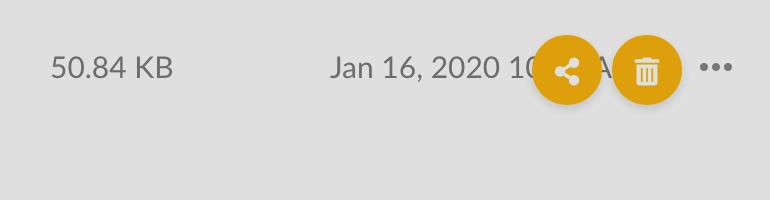

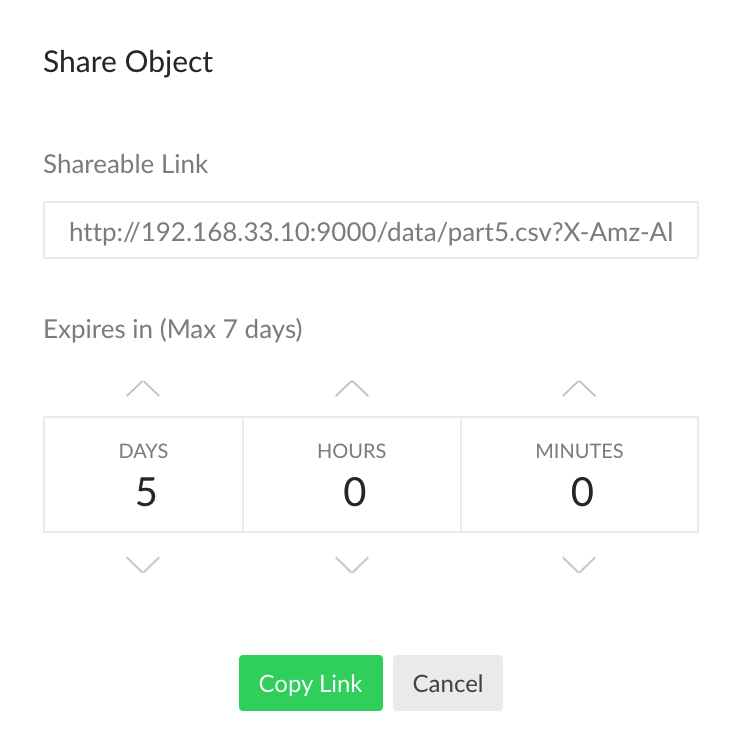

Still in the Minio UI, click on the three dots to the right of the file in the browser and hit share button

Click Copy Link

Then use the copied URL in your IMPORT statement.

IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('http://192.168.33.10:9000/data/part5.csv?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=minio%2F20200116%2F%2Fs3%2Faws4_request&X-Amz-Date=20200116T163901Z&X-Amz-Expires=432000&X-Amz-SignedHeaders=host&X-Amz-Signature=0a9ecd6731581c193746fd6113a5c094dfc061e2edf194b77554e80a770f68e4')

WITH skip = '1';

Voila!

root@:26257/defaultdb> IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('http://192.168.33.10:9000/data/part5.csv?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=minio%2F20200116%2F%2Fs3%2Faws4_request&X-Amz-Date=20200116T163901Z&X-Amz-Expires=432000&X-Amz-SignedHeaders=host&X-Amz-Signature=0a9ecd6731581c193746fd6113a5c094dfc061e2edf194b77554e80a770f68e4')

WITH skip = '1';

job_id | status | fraction_completed | rows | index_entries | system_records | bytes

+--------------------+-----------+--------------------+------+---------------+----------------+-------+

521413400760745985 | succeeded | 1 | 1000 | 1000 | 0 | 81215

(1 row)

Time: 169.0506ms

Here's the same example except we're using the S3 scheme for our HDFS data.

IMPORT TABLE importhdfs

(

id UUID NOT NULL PRIMARY KEY,

first_name STRING NOT NULL,

last_name STRING NOT NULL,

INDEX idx_last_name (last_name ASC)

) CSV DATA ('s3://data/part5.csv?AWS_ACCESS_KEY_ID=minio&AWS_SECRET_ACCESS_KEY=minio123&AWS_ENDPOINT=http://hadoop.example.com:9000')

WITH skip = '1';

AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_ENDPOINT are all required properties. AWS_ENDPOINT is pointing to the machine hosting my Hadoop instance as well as the minio gateway.

At this point you've seen two methods to access HDFS data for import into CockroachDB, I'm wondering if I could use the same methods for Change Data Capture and Backup/Restore in Hadoop! That's an idea for another day.

Please share your feedback in the comments.

Published at DZone with permission of Artem Ervits. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments