Lambda Architecture with Apache Spark


Goal

Many players on the market have built successful MapReduce workflows that process terabytes of historical data daily. But who wants to wait 24 hours for updated analytics? This blog post introduces the Lambda Architecture, which is designed to take advantage of both batch and stream processing methods. We will combine fast access to historical data with real-time streaming data using Apache Spark (Core, SQL, Streaming), Apache Parquet, Twitter Stream, etc. Clear code and an intuitive demo are also included!

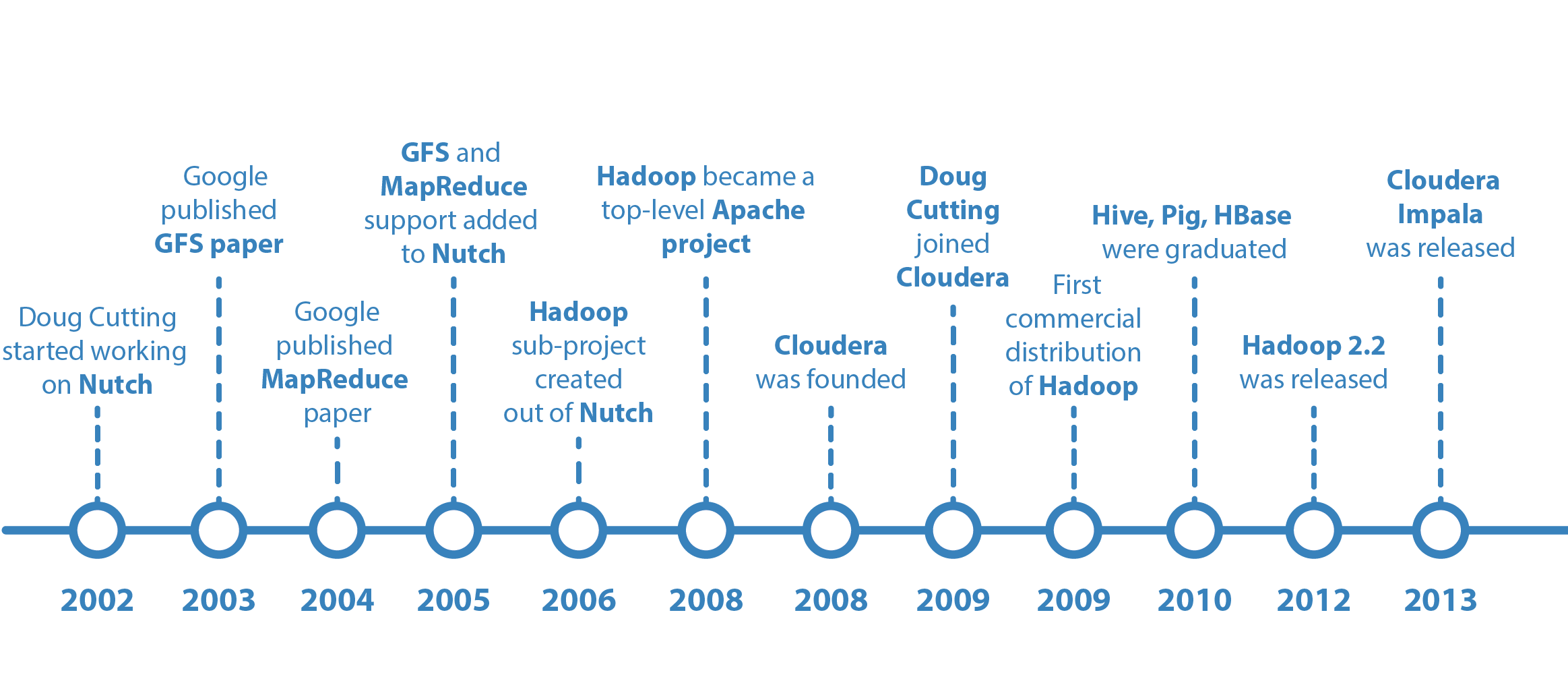

Apache Hadoop: Brief History

Apache Hadoop's rich history started in ~2002. Hadoop was created by Doug Cutting, the creator of Apache Lucene, a widely used text search library. Hadoop has its origins in Apache Nutch, an open source web search engine, itself a part of the Lucene project. It became an independent project ~10 years ago.

As a result, many customers implemented successful Hadoop-based M/R pipelines that are still operating today. I have at least a few great real-life examples:

- An Oozie-orchestrated workflow runs daily and processes up to 150 TB to generate analytics

- A bash-managed workflow runs daily and processes up to 8 TB to generate analytics

It is 2016 Now!

Business realities have changed, and making decisions faster is now more valuable. Technologies have evolved too. Kafka, Storm, Trident, Samza, Spark, Flink, Parquet, Avro, cloud providers, etc. are well-known buzzwords that are widely adopted by both engineers and businesses.

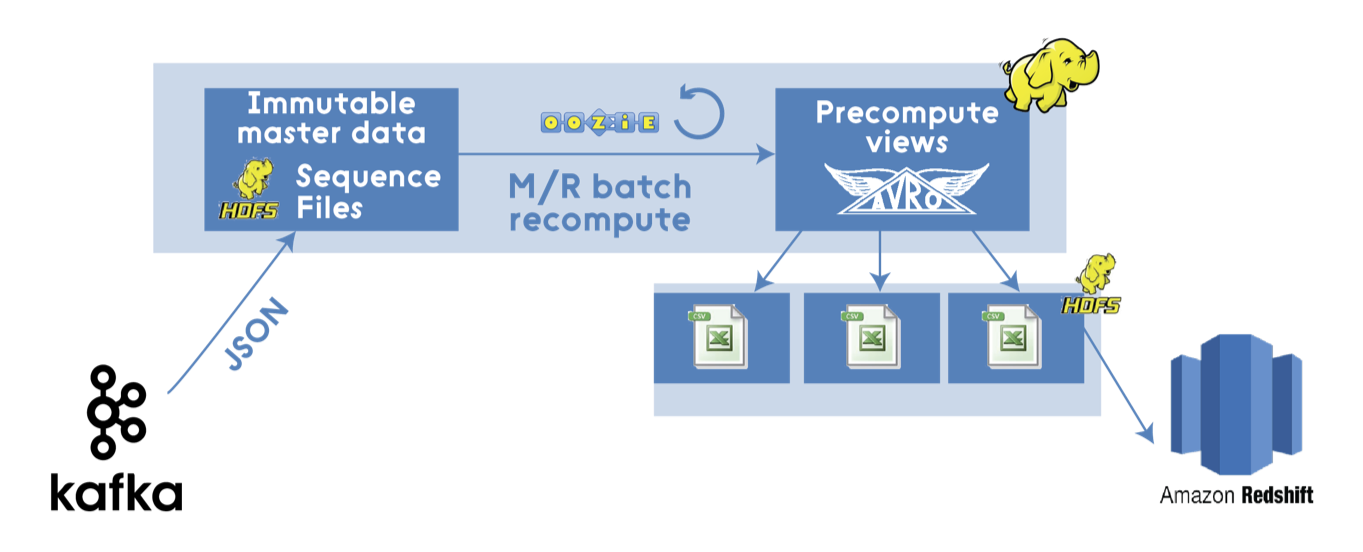

As a result, a modern Hadoop-based M/R pipeline (with Kafka, a modern binary format such as Avro, and a data warehouse, in this case Amazon Redshift, used for ad-hoc queries) might look as follows:

That looks quite OK, but it is still traditional batch processing with all its known drawbacks. The main one is stale data for end users: batch processing usually takes a long time to complete, while new data is constantly entering the system.

Lambda Architecture

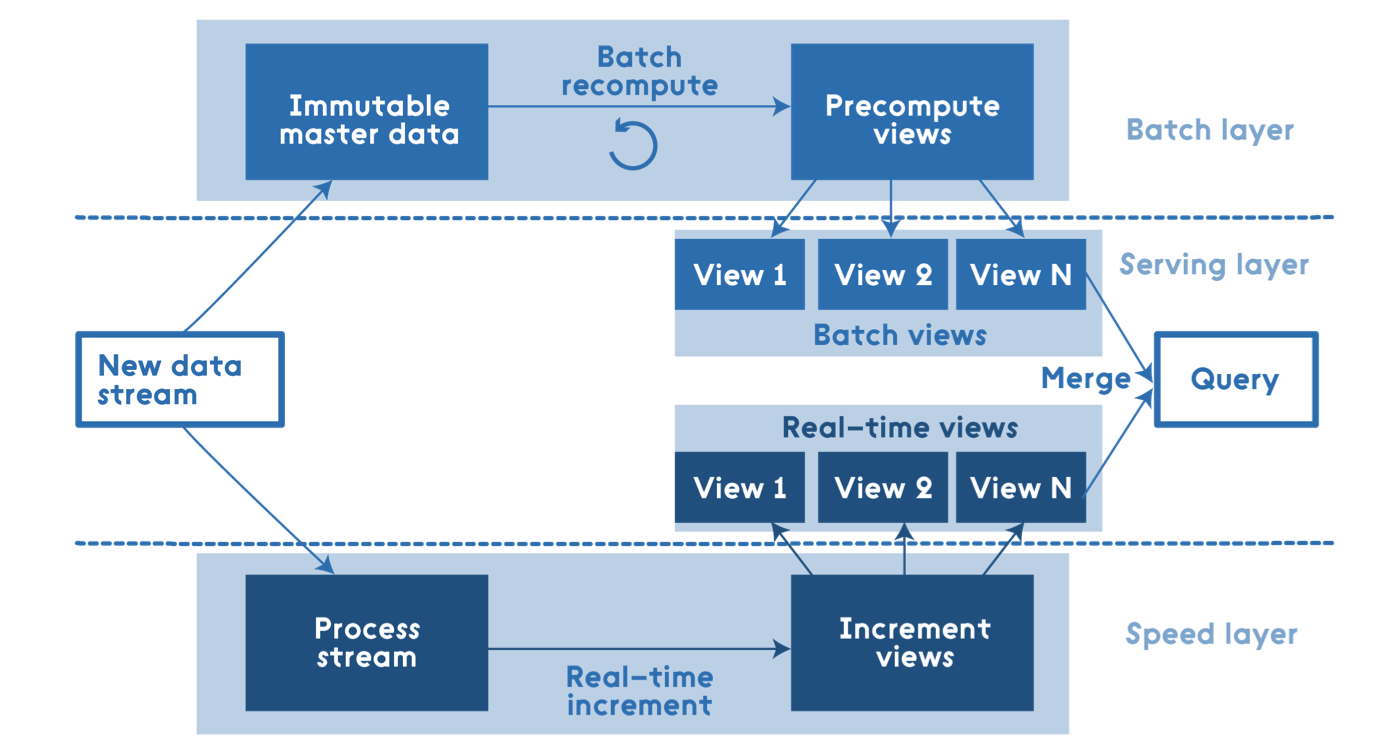

Nathan Marz came up with the term Lambda Architecture for a generic, scalable, and fault-tolerant data processing architecture. It is a data-processing architecture designed to handle massive quantities of data by taking advantage of both batch and stream processing methods.

I strongly recommend reading Nathan Marz's book, as it gives a complete picture of Lambda Architecture from the original source.

Layers

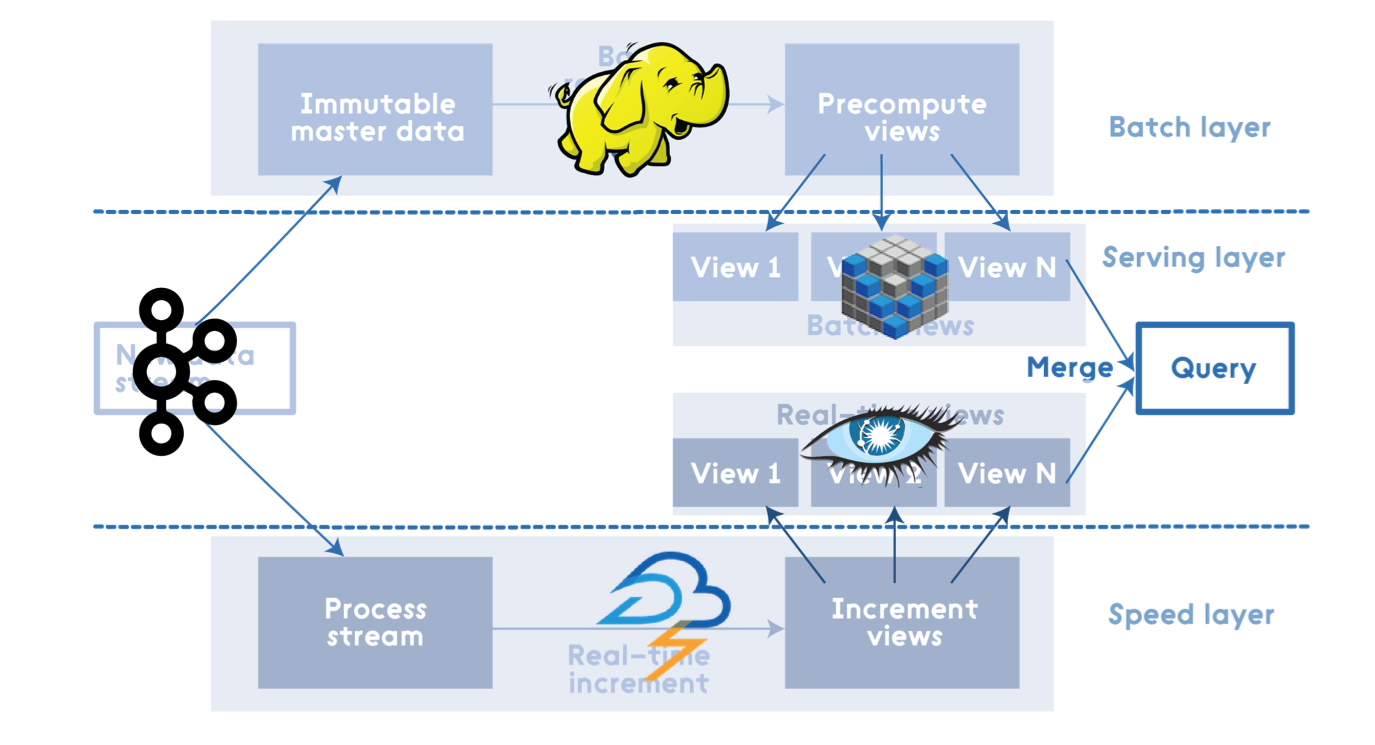

Here’s how it looks, from a high-level perspective:

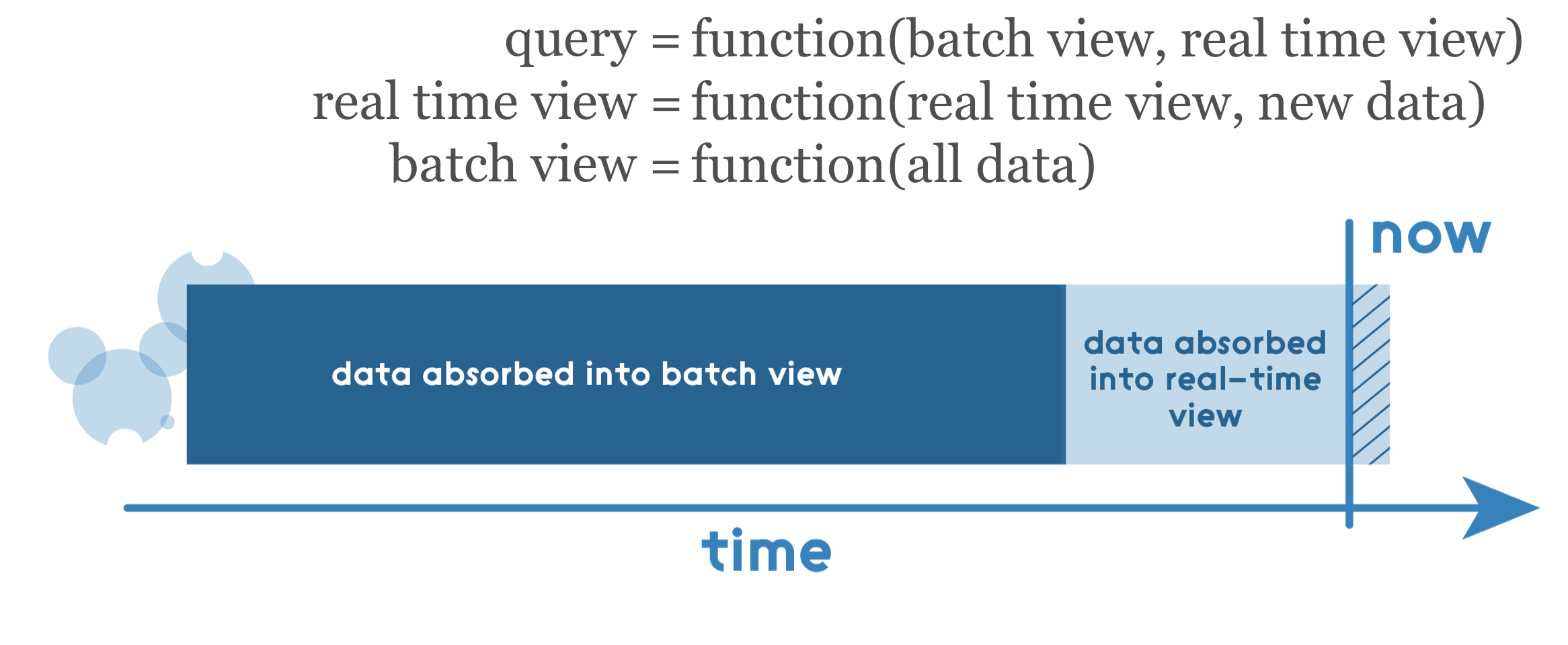

All data entering the system is dispatched to both the batch layer and the speed layer for processing. The batch layer manages the master dataset (an immutable, append-only set of raw data) and pre-computes the batch views. The serving layer indexes the batch views so that they can be queried ad hoc with low latency. The speed layer deals with recent data only. Any incoming query is answered by merging results from batch views and real-time views.
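The data flow described above can be sketched as a toy, in-memory model. This is a minimal illustration under simplifying assumptions, not code from the demo project: the class `LambdaLayersSketch` and its methods `ingest`, `runBatch`, and `query` are hypothetical names, the view is a plain per-key count, and a batch run is assumed to cover everything ingested so far, so the real-time view can simply be cleared afterwards.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy in-memory model of the layers; every name here is illustrative. */
class LambdaLayersSketch {
    // Batch layer: immutable, append-only master dataset of raw events.
    private final List<String> masterDataset = new ArrayList<>();
    // Serving layer: the most recent pre-computed batch view.
    private Map<String, Long> batchView = new HashMap<>();
    // Speed layer: counts only for data not yet covered by the batch view.
    private final Map<String, Long> realTimeView = new HashMap<>();

    /** Every incoming event is dispatched to both the batch and the speed layer. */
    void ingest(String key) {
        masterDataset.add(key);
        realTimeView.merge(key, 1L, Long::sum);
    }

    /** Batch run: recompute the view from all raw data, then drop the covered recent data. */
    void runBatch() {
        Map<String, Long> recomputed = new HashMap<>();
        for (String key : masterDataset) {
            recomputed.merge(key, 1L, Long::sum);
        }
        batchView = recomputed;
        realTimeView.clear();
    }

    /** Query: merge the batch view with the real-time view. */
    long query(String key) {
        return batchView.getOrDefault(key, 0L) + realTimeView.getOrDefault(key, 0L);
    }

    public static void main(String[] args) {
        LambdaLayersSketch system = new LambdaLayersSketch();
        system.ingest("spark");
        system.ingest("spark");
        system.runBatch();       // the batch view now covers both events
        system.ingest("spark");  // only the speed layer has seen this one
        System.out.println(system.query("spark")); // prints 3
    }
}
```

In a real system the hand-over between the two views is more subtle, because new data keeps arriving while a batch run is in progress.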

Focus

A lot of engineers think that Lambda Architecture is all about these layers and defined data flow, but Nathan Marz in his book puts a focus on other important aspects like:

- think distributed

- avoid incremental architecture

- force data immutability

- create recomputation algorithms

Relevance of Data

As mentioned earlier, any incoming query has to be answered by merging results from batch views and real-time views, so those views need to be mergeable. One point to note: a real-time view is a function of the previous real-time view and a delta of new data, so an incremental algorithm can be used there. A batch view is a function of all data, so a recomputation algorithm should be used there.
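The two kinds of functions can be put side by side in a short sketch. It assumes a hashtag-count view held in a plain `Map`; the class `ViewAlgorithms` and the methods `recompute` and `increment` are hypothetical names chosen for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ViewAlgorithms {
    /** Recomputation algorithm: the batch view is a function of all data. */
    static Map<String, Long> recompute(List<String> allHashtags) {
        Map<String, Long> view = new HashMap<>();
        for (String tag : allHashtags) {
            view.merge(tag, 1L, Long::sum);
        }
        return view;
    }

    /** Incremental algorithm: the new view is a function of the previous view and a delta. */
    static Map<String, Long> increment(Map<String, Long> previousView, List<String> delta) {
        Map<String, Long> view = new HashMap<>(previousView);
        for (String tag : delta) {
            view.merge(tag, 1L, Long::sum);
        }
        return view;
    }

    public static void main(String[] args) {
        List<String> history = Arrays.asList("spark", "lambda", "spark");
        List<String> delta = Arrays.asList("spark", "aws");
        List<String> all = new ArrayList<>(history);
        all.addAll(delta);
        // For an additive metric such as a count, both routes give the same view.
        Map<String, Long> incremental = increment(recompute(history), delta);
        System.out.println(incremental.equals(recompute(all))); // prints true
    }
}
```

The incremental version is cheaper per update but depends on the previous state being correct, whereas the recomputation version can always be rerun from the raw data.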

Trade-offs

Everything in life is a trade-off, and Lambda Architecture is no exception. Usually, there are a few main trade-offs we need to address:

- Full recomputation vs. partial recomputation

  - in some cases, it is worth using Bloom filters to avoid complete recomputation

- Recomputation algorithms vs. incremental algorithms

  - there is a big temptation to use incremental algorithms, but according to the guideline we have to use recomputation algorithms even if that makes it harder to achieve the same result

- Additive algorithms vs. approximation algorithms

  - Lambda Architecture works well with additive algorithms; for problems that are not additive we need to consider approximation algorithms, for instance HyperLogLog for the count-distinct problem
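To see why count-distinct is not additive, consider merging a batch view and a real-time view that each hold a distinct-user count. The sketch below is illustrative only (the class `CountDistinctSketch` and the user names are made up); it uses exact sets rather than HyperLogLog, purely to show the problem an approximation algorithm is meant to solve.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

class CountDistinctSketch {
    /** Naive merge: adds the two distinct counts and double-counts shared elements. */
    static long naiveMerge(Set<String> batch, Set<String> realTime) {
        return batch.size() + realTime.size();
    }

    /** Exact merge: correct, but both views must keep every element, not just a number. */
    static long exactMerge(Set<String> batch, Set<String> realTime) {
        Set<String> union = new HashSet<>(batch);
        union.addAll(realTime);
        return union.size();
    }

    public static void main(String[] args) {
        Set<String> batchUsers = new HashSet<>(Arrays.asList("alice", "bob", "carol"));
        Set<String> realTimeUsers = new HashSet<>(Arrays.asList("bob", "dave"));
        System.out.println(naiveMerge(batchUsers, realTimeUsers)); // prints 5 (bob counted twice)
        System.out.println(exactMerge(batchUsers, realTimeUsers)); // prints 4
    }
}
```

Storing full sets in every view does not scale, which is where a compact, mergeable approximation such as HyperLogLog becomes attractive.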

Implementation

There are many ways to implement Lambda Architecture, as it is quite agnostic about the underlying solution for each layer. Each layer requires specific features from its underlying implementation, and knowing them helps to make a better choice and avoid overkill decisions:

- Batch layer: write-once, bulk read many times

- Serving layer: random read, no random write; batch computation and batch write

- Speed layer: random read, random write; incremental computation

For instance, one of the implementations (using Kafka, Apache Hadoop, Voldemort, Twitter Storm, Cassandra) might look as follows:

Apache Spark

Apache Spark can be considered an integrated solution for processing on all Lambda Architecture layers. It contains Spark Core, which includes a high-level API and an optimized engine that supports general execution graphs; Spark SQL for SQL and structured data processing; and Spark Streaming, which enables scalable, high-throughput, fault-tolerant processing of live data streams. Admittedly, batch processing with Spark might be quite expensive and might not fit all scenarios and data volumes, but otherwise it is a decent match for a Lambda Architecture implementation.

Sample Application

Let's create a sample application, with some shortcuts, to demonstrate Lambda Architecture. The main goal is to provide statistics for the hashtags used in #morningatlohika tweets (these are the local tech talks I lead in Lviv, Ukraine): all time until today plus right now.

The source code is on GitHub, and more visual material on the topic is on SlideShare.

Batch View

For simplicity, imagine that our master dataset contains all the tweets since the beginning of time. In addition, we have implemented batch processing that created the batch view needed for our business goal, so we have one pre-calculated batch view that contains statistics for all hashtags used along with #morningatlohika:

apache – 6

architecture – 12

aws – 3

java – 4

jeeconf – 7

lambda – 6

morningatlohika – 15

simpleworkflow – 14

spark – 5

The numbers are easy to remember: for simplicity, I just used the number of letters in each hashtag.

Real-time View

Imagine that someone is tweeting right now, while the application is up and running:

“Cool blog post by @tmatyashovsky about #lambda #architecture using #apache #spark at #morningatlohika”

In this case, an appropriate real-time view should contain the following hashtags and their statistics (just 1 in our case as corresponding hashtags were used just once):

apache – 1

architecture – 1

lambda – 1

morningatlohika – 1

spark – 1

Query

When an end-user query comes in, we simply need to merge the batch view with the real-time view to give a real-time answer about the overall hashtag statistics. The output should look as follows (the corresponding hashtags have their statistics incremented by one):

apache – 7

architecture – 13

aws – 3

java – 4

jeeconf – 7

lambda – 7

morningatlohika – 16

simpleworkflow – 14

spark – 6

Scenario

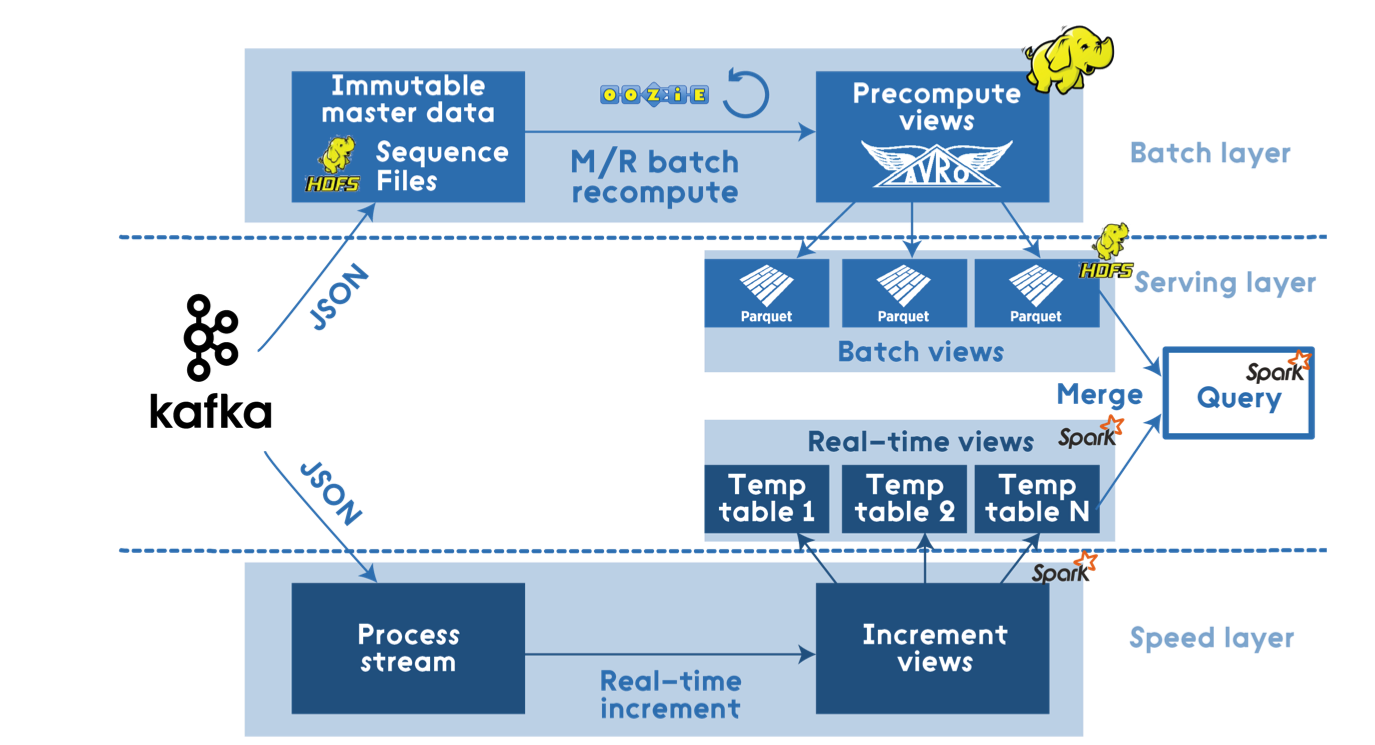

The simplified steps of the demo scenario are as follows:

- Create batch view (.parquet) via Apache Spark

- Cache batch view in Apache Spark

- Start streaming application connected to Twitter

- Focus on real-time #morningatlohika tweets

- Build incremental real-time views

- Query, i.e. merge batch and real-time views on the fly
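The merge in the last step can be sketched in plain Java, independent of Spark, using the example batch and real-time views from earlier in this post. The class `HashTagMerge` and its `merge` method are hypothetical names for illustration; the demo itself performs this step on DataFrames.

```java
import java.util.Map;
import java.util.TreeMap;

class HashTagMerge {
    /** Merges two hashtag-count views by summing the counts per hashtag, sorted by hashtag. */
    static Map<String, Long> merge(Map<String, Long> batchView, Map<String, Long> realTimeView) {
        Map<String, Long> merged = new TreeMap<>(batchView);
        realTimeView.forEach((tag, count) -> merged.merge(tag, count, Long::sum));
        return merged;
    }

    public static void main(String[] args) {
        // Batch view from the example: each count equals the length of the hashtag.
        Map<String, Long> batchView = new TreeMap<>();
        for (String tag : new String[]{"apache", "architecture", "aws", "java", "jeeconf",
                "lambda", "morningatlohika", "simpleworkflow", "spark"}) {
            batchView.put(tag, (long) tag.length());
        }
        // Real-time view built from the single example tweet.
        Map<String, Long> realTimeView = new TreeMap<>();
        for (String tag : new String[]{"apache", "architecture", "lambda", "morningatlohika", "spark"}) {
            realTimeView.put(tag, 1L);
        }
        // Prints the merged counts, e.g. apache=7, architecture=13, ..., spark=6.
        System.out.println(merge(batchView, realTimeView));
    }
}
```

Because hashtag counts are additive, the merge is just a per-key sum, which is what keeps the query step cheap.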

Technical Details

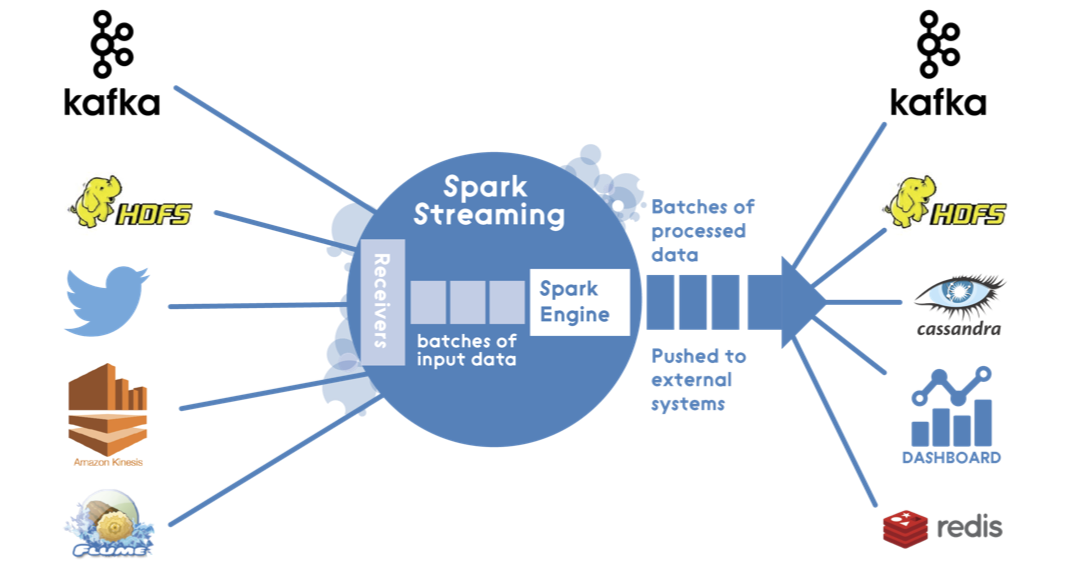

The source code was based on Apache Spark 1.6.x, i.e. before Structured Streaming was introduced. Spark Streaming architecture is pure micro-batch architecture:

So for the streaming application, I used a DStream connected to Twitter via TwitterUtils:

    // Receive tweets matching the filter text as a DStream of twitter4j Status objects.
    JavaDStream<Status> twitterStatuses = TwitterUtils.createStream(javaStreamingContext,
            createTwitterAuthorization(),
            new String[]{twitterFilterText});

On each micro-batch (using a configurable batch interval), I calculated hashtag statistics for the new tweets and updated the state of the real-time view using the updateStateByKey() stateful transformation. For simplicity, the real-time view is stored in memory using a temp table.
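The stateful update can be illustrated without Spark. The sketch below is an assumption-laden stand-in, not the code from the repository: `RealTimeViewSketch`, `updateCount`, and `applyMicroBatch` are hypothetical names, the state is a plain `Map`, and it uses `java.util.Optional` instead of the `Optional` type that Spark's Java API expects. What it keeps is the shape of an update function as used with updateStateByKey(): the new values for a key plus the previous state go in, and the new state comes out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

class RealTimeViewSketch {
    /** Update function: (new values for a key, previous state) -> new state. */
    static Optional<Long> updateCount(List<Long> newCounts, Optional<Long> previousCount) {
        long total = previousCount.orElse(0L);
        for (long count : newCounts) {
            total += count;
        }
        return Optional.of(total);
    }

    /** Applies one micro-batch of hashtags to the in-memory state, key by key. */
    static void applyMicroBatch(Map<String, Long> state, List<String> hashtags) {
        // Group the micro-batch by hashtag, emitting a 1 per occurrence.
        Map<String, List<Long>> grouped = new HashMap<>();
        for (String tag : hashtags) {
            grouped.computeIfAbsent(tag, k -> new ArrayList<>()).add(1L);
        }
        // Only keys present in this micro-batch are touched; for a count the result is the same.
        grouped.forEach((tag, ones) ->
                state.put(tag, updateCount(ones, Optional.ofNullable(state.get(tag))).get()));
    }

    public static void main(String[] args) {
        Map<String, Long> realTimeView = new HashMap<>();
        applyMicroBatch(realTimeView, Arrays.asList("spark", "lambda", "spark"));
        applyMicroBatch(realTimeView, Arrays.asList("spark"));
        System.out.println(realTimeView.get("spark")); // prints 3
    }
}
```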

The query service merges the batch and real-time views, both represented as DataFrames, explicitly in code:

    DataFrame realTimeView = streamingService.getRealTimeView();
    DataFrame batchView = servingService.getBatchView();

    // Union both views, then sum the counts per hashtag and sort by hashtag.
    DataFrame mergedView = realTimeView.unionAll(batchView)
            .groupBy(realTimeView.col(HASH_TAG.getValue()))
            .sum(COUNT.getValue())
            .orderBy(HASH_TAG.getValue());

    List<Row> merged = mergedView.collectAsList();

    // Convert each row (hashtag, summed count) into a HashTagCount.
    return merged.stream()
            .map(row -> new HashTagCount(row.getString(0), row.getLong(1)))
            .collect(Collectors.toList());

Outcome

Using this simplified approach, the real-life Hadoop-based M/R pipeline mentioned at the beginning might be enhanced with Apache Spark and look as follows:

Instead of Epilogue

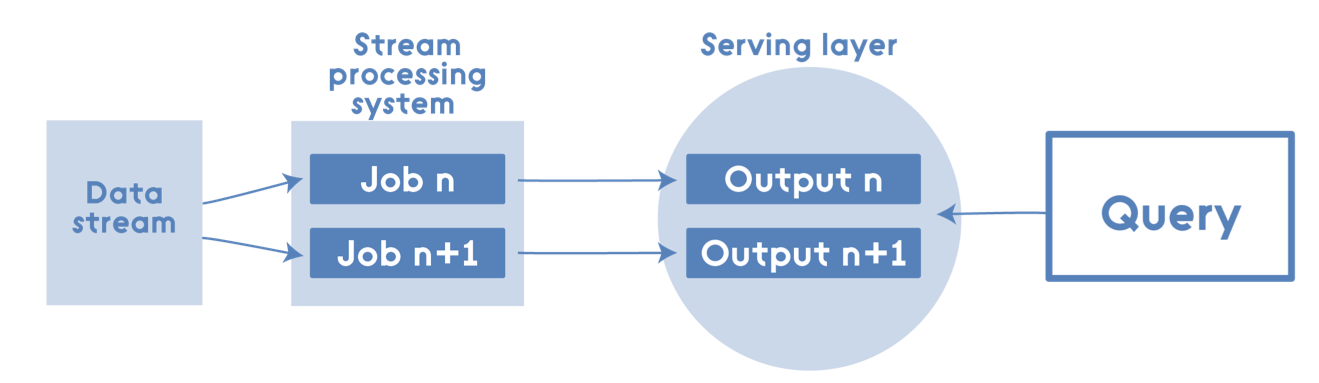

As mentioned earlier, Lambda Architecture has its pros and cons and, as a result, its supporters and opponents. Some say that the batch and real-time views involve a lot of duplicate logic because, eventually, both need to produce mergeable views from a query perspective. So they created Kappa Architecture, a simplification of Lambda Architecture. A Kappa Architecture system is a Lambda Architecture with the batch processing system removed. To replace batch processing, data is simply fed through the streaming system quickly:

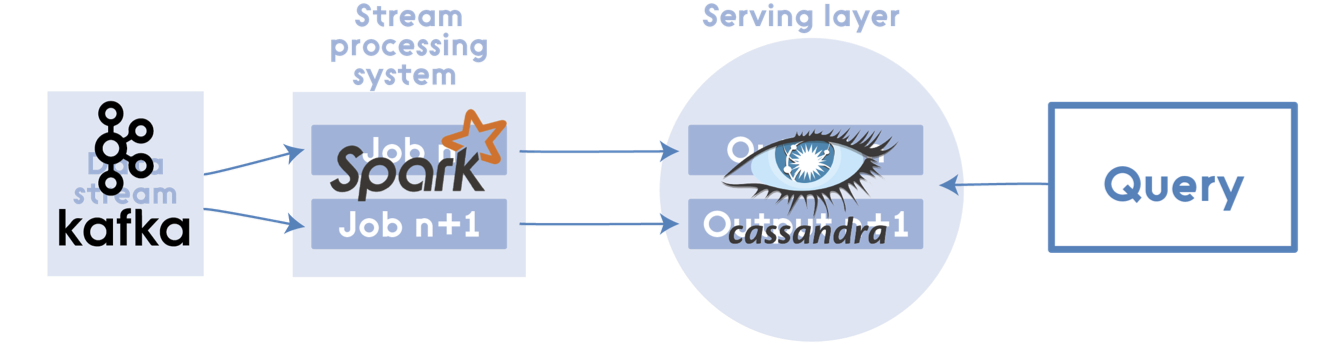

Even in this scenario there is a place for Apache Spark, for instance as the stream processing system:
