Live Loading to Deploy Kubernetes on Bare Metal

Here's how to run containerized workloads securely with live loading. In this article, I'll tell you how we deploy Kubernetes on bare metal.

Join the DZone community and get the full member experience.

Join For FreeIn a previous article, I said that basically there are 2 ways to deploy servers: automatic and live download over the network.

Today I want to talk about live loading over the network. This is when your nodes load some pre-prepared system images immediately into the RAM. For example, Core OS or LTSP can do this.

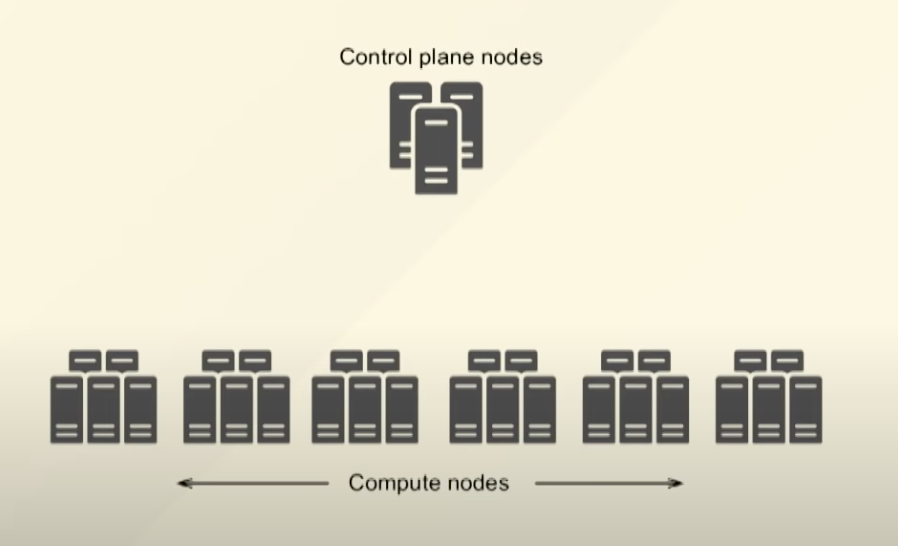

This is what an approximate diagram of each of our clusters looks like, but we will not dwell on it for now.

CoreOS

When building this system, we chose between CoreOS and LTSP. We settled on CoreOS — this is the standard system used in such cases. It is convenient in that you can download the finished image and immediately send it to the nodes without any installation — that is, downloaded and distributed.

You can also update it atomically. A new image came out, the image was updated on the NBD server, the node was rebooted, and it loaded the new image. Another plus is that the image takes up little space on RAM. Considering the "minuses," it is more difficult to add custom software and add additional kernel modules. We solve this problem with LTSP.

We build the NBD entirely through a dockerfile.

Storage

Let's talk about storage now. There are several types of storage in Kubernetes, but the first thing that comes to mind is external storage with access through NFC or iSCSI. It’s a stable solution, but it is inconvenient in that, as a rule, it does not support any downloadability. That is, if you have some kind of box that is provided with access via NFC and if it suddenly falls, you lose access to your data until you subdue the box.

There are also cluster file systems and other software-defined storage solutions. We have Ceph, ClusterFS, Linstor, and so on. In fact, there are a lot of different solutions, and you can choose any of them. It is convenient because by default Kubernetes has all the necessary components that allow you to automate communication with this very storage, that is, create volumes. The disadvantage of this solution is that clustered file systems are quite complex. You need to be aware of all aspects before using them, and many of them are quite slow — that is, there will be overhead.

There is also an option to use object storage. I would even say it is a subclass of clustered file systems. Here, it’s not Kubernetes that communicates with object storage, but your applications must be able to communicate. That is, the object storage represents the API and you must request or download any files through this API.

The last option is to use local volumes — a relatively recent opportunity in Kubernetes to store data locally. Unlike file systems, they do not represent any overhead. They work great if your application can replicate out of the box. For example, consider a database in which local volumes will work fine because all data will be stored entirely on the same node where they are executed.

What You Need to Know When Using Local Volumes

Local volumes are not a hostPath. HostPath was invented for forwarding directories and files or sockets directly from the host. Local volumes were invented just for storing data.

Do not use the root file system for local volumes because you can lose them very easily. There was a cool issue on GitHub: the developer connected the root system as local volumes, then somehow the check on mount-point did not work out, and it turned out that the root file system was lost on 20 nodes. But if you don't have the option to use local volumes — for example, if you're hosted on DigitalOcean and can't create a volume — I suggest using loop devices. They work well and are quite efficient.

Fencing and Kubernetes

Fencing is a mechanism that finishes off a node in case something goes wrong with it. There can be a situation when a node suddenly stops responding and is marked as “not ready.” On a certain node, we had some pods running as a stateful set or pods with attached volumes. In this case, all pods were marked as unknown and were not restarted on other nodes.

Why don't they restart? Because Kubernetes is worried about the safety of your data. Fencing is needed to avoid such situations, and it is guaranteed to extinguish the node. We made sure that the load was not running anywhere else.

There are a huge number of ready-made agents from ClusterLabs. You can just make it ready to use, prescribe the necessary parameters for it, and use it.

How Fencing Works

- Fencing-controller monitors nodes in Kubernetes

- As soon as the node turns into “not ready,” the script is launched

- The script reloads the node using the facing agent

- If the script succeeds, fencing-controller removes the node from the cluster (or recreates it)

- Kubernetes, making sure that the required resources are not used anywhere else, restarts the pods on the remaining nodes

Organization of Access to the Cluster from Outside

I recommend MetalLB for this. MetalLB is a load-balancer implementation for bare metal Kubernetes clusters that uses standard routing protocols. It already uses concepts native to Kubernetes and consists of two components.

The first component is the controller. The controller allows you to organize the issuance of IP addresses to your services. The second component, the speaker, just does that — it announces these IP addresses. The speaker can work in two modes — L2 and BGP.

Features of MetalLB

MetalLB, by default, listens to all interfaces that are on the node. That is, if a request comes to one of the interfaces, it will also respond to any of these interfaces in the same way.

MetalLB requires that on each node you have all the necessary interfaces and routes predefined. That is, if you have some kind of external IP address that is located in the vlan, you must accordingly create this very vlan interface.

Opinions expressed by DZone contributors are their own.

Comments