Maximizing Enterprise Data: Unleashing the Productive Power of AI With the Right Approach

Learn how organizations can unlock the full potential of their AI initiatives by considering critical elements such as infrastructure, cost, and more.

In today's digital landscape, data has become the lifeblood of organizations, much like oil was in the industrial era. Yet, the genuine hurdle is converting data into meaningful insights that drive business success. With AI and generative AI revolutionizing data platforms, the critical question is: Are we ready to harness the transformative power of data to propel growth and innovation?

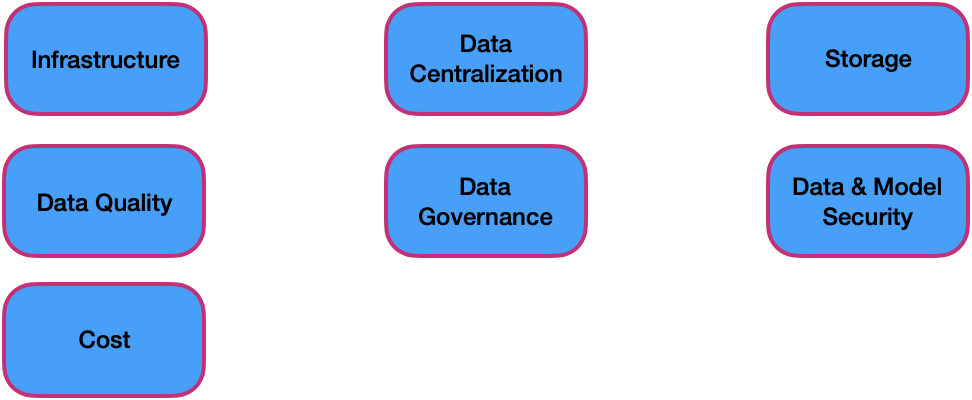

The answer is a mixed bag. While we can derive some benefits from our data, unlocking its full potential requires a purposeful and multi-faceted approach grounded in several essential elements:

Figure 1: Key elements for AI to maximize Enterprise data value

In the following sections, we'll take a closer look at each of these foundational elements, exploring how they can be leveraged to maximize the value of our data assets.

1. Infrastructure

As AI models evolve and demand more computational muscle, it's imperative to equip them with the necessary hardware firepower – including high-performance GPUs [1] – to unlock their full potential. Whether deployed on-premises or in the cloud, our infrastructure must handle these models' intense processing requirements. Consider a scenario where you're operating an intelligent factory, reliant on real-time monitoring and predictive maintenance to avoid costly downtime. Without robust hardware, your AI models will struggle to keep pace with the torrent of data generated by your machines, leading to unplanned outages and expensive repairs. Investing in scalable infrastructure ensures your AI models can operate efficiently and effectively.

A high-speed network is equally crucial for handling the large datasets that fuel AI insights. It is the backbone that moves data between storage and compute, so that your AI models are not left waiting on data transfers.

Practical Tip

Assess your current infrastructure to identify where computational power is lacking. Then explore hybrid solutions that blend on-premises and cloud resources to balance cost and performance, for example by using local servers for real-time data processing and cloud resources for scalable compute capacity.

2. Data Centralization

Many IT infrastructures struggle with data fragmentation, which hinders the speed and accuracy of data-driven decisions. To overcome this, it's crucial to establish a centralized data warehouse [2] that unifies critical company data in one location. Doing so can significantly shorten the time it takes to reach insights and decisions. Efficient data integration methods are vital for bringing all data into a central repository, enabling seamless analysis and reporting.

Real-World Example

Implement a data lake that consolidates structured and unstructured data from various sources, enabling comprehensive analysis and reporting. For instance, a retail company could leverage a data lake to combine sales data, customer feedback, and inventory levels, optimizing supply chain management and enhancing customer experience through data-driven insights.
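The retail scenario above can be sketched in a few lines of Python. This is a minimal illustration, not a data lake implementation: the three in-memory lists stand in for separate source systems, and the field names (`sku`, `units_sold`, `rating`, `on_hand`), the sample values, and the `restock` rule are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical records standing in for three separate source systems.
sales = [
    {"sku": "A100", "units_sold": 40},
    {"sku": "A100", "units_sold": 25},
    {"sku": "B200", "units_sold": 5},
]
feedback = [
    {"sku": "A100", "rating": 4},
    {"sku": "A100", "rating": 5},
    {"sku": "B200", "rating": 2},
]
inventory = [
    {"sku": "A100", "on_hand": 30},
    {"sku": "B200", "on_hand": 120},
]


def consolidate(sales, feedback, inventory):
    """Merge the three sources into one view keyed by SKU."""
    view = defaultdict(lambda: {"units_sold": 0, "ratings": [], "on_hand": 0})
    for row in sales:
        view[row["sku"]]["units_sold"] += row["units_sold"]
    for row in feedback:
        view[row["sku"]]["ratings"].append(row["rating"])
    for row in inventory:
        view[row["sku"]]["on_hand"] = row["on_hand"]

    result = {}
    for sku, data in view.items():
        ratings = data["ratings"]
        result[sku] = {
            "units_sold": data["units_sold"],
            "avg_rating": sum(ratings) / len(ratings) if ratings else None,
            "on_hand": data["on_hand"],
            # Illustrative rule: flag SKUs that sold more units than remain in stock.
            "restock": data["units_sold"] > data["on_hand"],
        }
    return result


unified = consolidate(sales, feedback, inventory)
```

Once the sources share a key, questions that span systems (for example, which well-rated products are about to run out) become a single lookup on `unified` rather than a manual reconciliation.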

3. Storage: The Foundation of AI Success

Storage infrastructure, the unsung hero of AI and analytics, plays a pivotal role in data centralization. It's not just about housing your organization's data, but about supporting the demands of AI and analytics. To do this effectively, you need storage solutions that offer scalability, cost-effectiveness, and analytics-friendly capabilities. In other words, your storage infrastructure should be able to grow with your data, provide affordable capacity, and facilitate fast data access and analysis.

Investing in high-quality hardware and storage [3] is essential for building a successful AI strategy. By laying a solid foundation for data storage, you can ensure that your AI initiatives are supported by a robust and reliable infrastructure. This infrastructure is not just a storage space, but a key that unlocks the full potential of your data, driving business success.

4. Fueling AI Success With High-Quality Data

High-quality data is the lifeblood of effective AI models. We need to employ the right tools and techniques to ensure that our data is accurate, clean, and reliable. Good data quality is essential for achieving trustworthy AI outcomes, as it directly impacts the insights and decisions generated by our models.

Just as a car engine requires high-quality fuel to run smoothly, AI models require high-quality data to produce reliable results. Our AI models will inevitably produce flawed insights if our data is contaminated with errors or inconsistencies. Regular data audits and cleansing are crucial to ensure our AI models work with the best possible information.

Best Practice

Implement automated data quality tools to regularly audit and cleanse your data, and establish data stewardship roles to maintain data integrity over time. For instance, organizations can run automated data quality checks against the data quality pillars [4], such as timeliness, consistency, and accuracy. By prioritizing data quality, organizations can unlock the full potential of their AI initiatives and drive business success.
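As a rough sketch of what an automated check might look like, the Python snippet below audits a batch of records against three of the pillars named above. The record layout (`id`, `amount`, `updated_at`), the one-day freshness threshold, and the specific rules are assumptions chosen for illustration; a real audit would use rules agreed with the data stewards.

```python
from datetime import datetime, timedelta, timezone


def audit_records(records, now, max_age=timedelta(days=1)):
    """Return a list of (record_id, issue) pairs for records that fail a check."""
    issues = []
    seen_ids = set()
    for rec in records:
        rec_id = rec.get("id")
        # Consistency: each id should appear only once.
        if rec_id in seen_ids:
            issues.append((rec_id, "duplicate id"))
        seen_ids.add(rec_id)
        # Accuracy: an amount should be a non-negative number.
        amount = rec.get("amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            issues.append((rec_id, "invalid amount"))
        # Timeliness: records older than max_age are considered stale.
        updated = rec.get("updated_at")
        if updated is None or now - updated > max_age:
            issues.append((rec_id, "stale or missing timestamp"))
    return issues


# Hypothetical sample batch with a fixed "now" so the result is reproducible.
now = datetime(2024, 1, 2, tzinfo=timezone.utc)
records = [
    {"id": 1, "amount": 19.99, "updated_at": now - timedelta(hours=2)},
    {"id": 2, "amount": -5, "updated_at": now - timedelta(hours=3)},
    {"id": 2, "amount": 12.0, "updated_at": now - timedelta(days=3)},
]
issues = audit_records(records, now)
```

Running a check like this on a schedule, and routing the resulting `issues` list to the responsible steward, is one simple way to turn the pillars into a repeatable audit.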

5. Data Governance: The Backbone of AI Implementation

Effective data governance [5] is crucial for successful AI implementation, as it ensures that data is appropriately managed, secured, and utilized. Organizations must establish robust Role-Based Access Control (RBAC) mechanisms to guarantee that only authorized personnel can access sensitive data, thereby preventing unauthorized exposure or breaches.

Furthermore, implementing a comprehensive audit logging system is essential for tracking data movement and changes. This enables organizations to maintain a transparent and tamper-evident record of all data interactions, ensuring accountability, data integrity, and compliance with regulatory requirements.

Key Considerations

Establish clear data ownership and stewardship roles to ensure data quality and integrity. Data stewards are responsible for data quality and for implementing data governance policies, so that data stays accurate, consistent, and secure throughout its lifecycle.

RBAC and comprehensive audit logging work together: RBAC ensures that only authorized personnel can access sensitive data, reducing the risk of breaches and unauthorized exposure, while detailed audit logs enable transparent monitoring and compliance. By prioritizing data governance, organizations can ensure their AI initiatives are built on trustworthy, secure, and compliant data. This is not just a best practice but a crucial step toward driving business success and mitigating risk.
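To make the combination of RBAC and tamper-evident audit logging concrete, here is a minimal Python sketch. It assumes a hypothetical role-to-permission table and uses a simple hash chain (each log entry stores the SHA-256 hash of the previous one) as one possible way to detect after-the-fact edits; production systems typically rely on their platform's access control and logging services rather than hand-rolled code.

```python
import hashlib
import json

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "steward": {"read", "write"},
}


def _digest(body):
    """Hash an entry body deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, user, action, resource, allowed):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"user": user, "action": action, "resource": resource,
                "allowed": allowed, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; return False if any entry was altered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


def access(log, user, role, action, resource):
    """Check the role's permissions and record the decision in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.append(user, action, resource, allowed)
    return allowed


log = AuditLog()
can_read = access(log, "dana", "analyst", "read", "customers")
can_write = access(log, "dana", "analyst", "write", "customers")
```

Because denied requests are logged alongside granted ones, the log doubles as a record of attempted access, and `log.verify()` fails if any stored entry is modified later.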

6. Data and LLM Security

Safeguarding the integrity and trustworthiness of AI initiatives requires a strong focus on data and model security. This includes crucial elements such as securing models [6], protecting Personally Identifiable Information (PII), complying with the Payment Card Industry Data Security Standard (PCI DSS), and ensuring adherence to HIPAA and GDPR regulations.
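One small, concrete piece of PII protection is redacting obvious identifiers before text reaches a model or a log. The Python sketch below is illustrative only: the two regular expressions are simplified assumptions that catch common email and card-number shapes, and they are not a substitute for a vetted PII detection tool or for the controls the regulations above actually require.

```python
import re

# Simplified patterns, assumed for illustration; real PII detection needs more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def redact(text):
    """Replace email addresses and card-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)
    return text


sample = "Contact jane.doe@example.com, card 4111 1111 1111 1111."
cleaned = redact(sample)
```

Applying a step like `redact` at the boundary, before prompts or training data leave a trusted system, keeps the sensitive values out of places where they are hard to delete later.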

7. Cost

Cost is a significant concern for organizations embarking on AI initiatives. Organizations can mitigate this by conducting a Proof of Concept (POC) locally using small machines and leveraging free, open-source models. By doing so, they can assess the feasibility of their AI project while keeping costs under control.

A Phased Approach to Cost Management

A structured, phased approach helps keep the costs of AI implementation under control:

- POC phase: Start with a small-scale POC using local infrastructure and open-source models. This lets you validate the AI concept and assess costs before committing further resources.

- Assessment phase: Evaluate the costs of scaling up the AI project, including infrastructure, talent, and resources.

- Production phase: Once the costs are assessed, move to production, incorporating all the essential elements, including security best practices, data governance, and data quality.

In-House Model

Consider developing an in-house model. This can significantly reduce reliance on external vendors and lower costs in the long run, giving you more control over your AI initiatives.

By adopting a strategic and phased approach to cost management, organizations can ensure a cost-effective AI implementation that delivers business value while minimizing financial risks such as unexpected infrastructure costs, talent shortages, and data quality issues.

Summary

In summary, organizations can unlock the full potential of their AI initiatives by considering the critical elements of infrastructure, data centralization, storage, data quality, data governance, data and model security, and cost. This comprehensive approach enables businesses to:

- Boost productivity and efficiency

- Gain deeper insights into customer needs and preferences

- Make data-driven decisions to drive business growth

- Enhance customer experiences and loyalty

- Ultimately, increase revenue and competitiveness

By harnessing the power of AI and enterprise data, organizations can overcome business challenges, improve customer satisfaction, and thrive in a rapidly changing market landscape. By prioritizing these essential elements, businesses can ensure a successful AI implementation that drives real business value and fuels long-term success.

References

[2] Data warehouse

[3] A Comprehensive Guide to Types of Data Storage

[4] 6 Pillars of Data Quality and How to Improve Your Data

Opinions expressed by DZone contributors are their own.
