Data Governance Essentials: Policies and Procedures (Part 6)

Learn how data quality, policies, and procedures strengthen data governance by ensuring accuracy, compliance, and security for better decision-making.

What Is Data Governance, and How Do Data Quality, Policies, and Procedures Strengthen It?

Data governance refers to the overall management of data availability, usability, integrity, and security in an organization. It encompasses people, processes, policies, standards, and roles that ensure the effective use of information.

Data quality is a foundational aspect of data governance, ensuring that data is reliable, accurate, and fit for purpose. High-quality data is accurate, complete, consistent, and timely, which is essential for informed decision-making.

Additionally, well-defined policies and procedures play a crucial role in data governance. They provide clear guidelines for data management, ensuring that data is handled properly and complies with relevant regulations.

Data Governance Pillars

What Is Data Quality?

Data quality is the extent to which data meets a company's standards for accuracy, validity, completeness, and consistency. It is a crucial element of data management, ensuring that the information used for analysis, reporting, and decision-making is reliable and trustworthy.

1. Why Is Data Quality Important?

Data quality matters for several reasons, including the following:

Improved Decision-Making

High-quality data supports more accurate and informed decision-making.

Enhanced Operational Efficiency

Clean and reliable data helps streamline processes and reduce errors.

Increased Customer Satisfaction

Quality data leads to better products and services, ultimately enhancing customer satisfaction.

Reduced Costs

Poor data quality can result in significant financial losses; preventing errors at the source reduces rework and correction costs.

Regulatory Compliance

Adhering to data quality standards is essential for meeting regulatory requirements.

2. What Are the Key Dimensions of Data Quality?

The essential dimensions of data quality are described as follows:

- Accuracy. Data must be correct and free from errors.

- Completeness. Data should contain all required records and fields, with no missing values.

- Consistency. Data must be uniform and adhere to established standards.

- Timeliness. Data should be current and up-to-date.

- Validity. Data must conform to defined business rules and constraints.

- Uniqueness. Data should be distinct and free from duplicates.
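Several of these dimensions can be expressed as simple ratios. The sketch below is illustrative only: the sample records, the field names, and the "contains @" validity rule are assumptions rather than part of any standard. Accuracy and consistency are left out because they generally require a trusted reference source or a second dataset to compare against.

```python
from datetime import date

# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "updated": date(2024, 5, 3)},
    {"id": 2, "email": "bo@example.com", "updated": date(2023, 1, 10)},
    {"id": 4, "email": "not-an-email", "updated": date(2024, 4, 28)},
]


def completeness(rows, field):
    """Share of rows where the field is present and not None."""
    return sum(r.get(field) is not None for r in rows) / len(rows)


def uniqueness(rows, field):
    """Ratio of distinct values to total rows (1.0 means no duplicates)."""
    return len({r[field] for r in rows}) / len(rows)


def validity(rows, field, rule):
    """Share of non-missing values that satisfy a business rule."""
    values = [r[field] for r in rows if r.get(field) is not None]
    return sum(rule(v) for v in values) / len(values)


def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within max_age_days of the as_of date."""
    return sum((as_of - r[field]).days <= max_age_days for r in rows) / len(rows)


scores = {
    "completeness(email)": completeness(records, "email"),
    "uniqueness(id)": uniqueness(records, "id"),
    "validity(email)": validity(records, "email", lambda v: "@" in v),
    "timeliness(updated)": timeliness(records, "updated", date(2024, 5, 10), 30),
}
```

Scores like these are usually tracked per field and per dataset, so that a drop in one dimension can be traced to a specific source.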

3. How to Implement Data Quality

The following steps help implement data quality in an organization:

- Data profiling. Analyze the data to identify inconsistencies, anomalies, and missing values.

- Data cleansing. Correct errors, fill in missing values, and standardize data formats.

- Data validation. Implement rules and checks to ensure the integrity of data.

- Data standardization. Enforce consistent definitions and formats for the data.

- Master data management (MDM). Centralize and manage critical data to ensure consistency across the organization.

- Data quality monitoring. Continuously monitor data quality metrics to identify and address any issues.

- Data governance. Establish policies, procedures, and roles to oversee data quality.
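As a rough illustration of the first two steps, the sketch below profiles a few rows for missing and distinct values and then cleanses them. The rows, the field names, the `UNKNOWN` default, and the rule of upper-casing country codes are all assumptions chosen for the example.

```python
from collections import Counter

# Hypothetical raw rows; the field names are illustrative.
raw = [
    {"name": " Alice ", "country": "us"},
    {"name": "Bob", "country": None},
    {"name": "", "country": "US"},
]


def profile(rows):
    """Data profiling: count missing values and distinct values per field."""
    missing, distinct = Counter(), {}
    for row in rows:
        for field, value in row.items():
            if value is None or str(value).strip() == "":
                missing[field] += 1
            else:
                distinct.setdefault(field, set()).add(value)
    return {
        "missing": dict(missing),
        "distinct": {f: len(v) for f, v in distinct.items()},
    }


def cleanse(row, defaults):
    """Data cleansing: trim whitespace, fill missing values, standardize case."""
    cleaned = {}
    for field, value in row.items():
        if isinstance(value, str):
            value = value.strip()
        if value in (None, ""):
            value = defaults.get(field)
        cleaned[field] = value
    # Assumed standard: country codes are stored in upper case.
    if isinstance(cleaned.get("country"), str):
        cleaned["country"] = cleaned["country"].upper()
    return cleaned


before = profile(raw)
clean = [cleanse(r, {"name": "UNKNOWN", "country": "UNKNOWN"}) for r in raw]
after = profile(clean)
```

Comparing `before` and `after` shows whether cleansing removed the missing values that profiling found.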

By prioritizing data quality, organizations can unlock the full potential of their data assets and drive innovation.

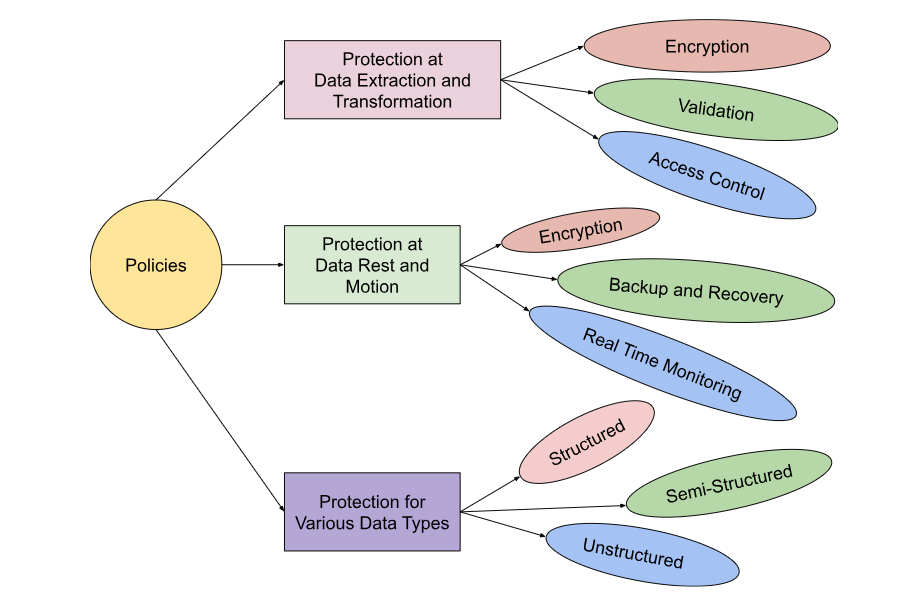

Policies

Data policies are the rules and guidelines that govern how data is managed and used across the organization. They align with legal and regulatory requirements such as CCPA and GDPR and serve as the foundation for safeguarding data throughout its life cycle.

Below are examples of key policies, including those specific to compliance frameworks like the California Consumer Privacy Act (CCPA) and General Data Protection Regulation (GDPR):

1. Protection During Data Extraction and Transformation

Data Validation Policies

Define rules to check the accuracy, completeness, and consistency of data during extraction and transformation. Require adherence to data standards such as format, naming conventions, and mandatory fields.

Source System Quality Assurance Policies

Mandate profiling and quality checks on source systems before data extraction to minimize errors.

Error Handling and Logging Policies

Define protocols for detecting, logging, and addressing data quality issues during ETL processes.

Data Access Policies

Define role-based access controls (RBAC) to restrict who can view or modify data during extraction and transformation processes.
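A minimal sketch of such a role-based check, assuming a hypothetical set of roles (`etl_engineer`, `analyst`, `auditor`) and two actions. In practice the mapping would usually live in an identity or access management system rather than in code.

```python
from enum import Enum


class Action(Enum):
    VIEW = "view"
    MODIFY = "modify"


# Hypothetical role-to-permission mapping for an ETL pipeline.
ROLE_PERMISSIONS = {
    "etl_engineer": {Action.VIEW, Action.MODIFY},
    "analyst": {Action.VIEW},
    "auditor": {Action.VIEW},
}


def is_allowed(role, action):
    """Deny by default: unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def transform(role, rows):
    """Apply a transformation only if the caller's role may modify data."""
    if not is_allowed(role, Action.MODIFY):
        raise PermissionError(f"role {role!r} may not modify data")
    return [{**row, "processed": True} for row in rows]
```

The deny-by-default lookup means a role that is missing from the mapping cannot view or modify anything.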

Audit and Logging Policies

Require logging of all extraction, transformation, and loading (ETL) activities to monitor and detect unauthorized changes.

Encryption Policies

Mandate encryption for data in transit and during transformation to protect sensitive information.

Data Minimization

Define policies to ensure only necessary data is extracted and used for specific purposes, aligning with GDPR principles.

2. Protection for Data at Rest and Data in Motion

Data Profiling Policies

Establish periodic profiling of data at rest to assess and maintain its quality.

Data Quality Metrics

Define specific metrics (e.g., accuracy rate, completeness percentage, duplication rate) that data at rest must meet.
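One way to make such metrics enforceable is to store the thresholds as data and check each measurement against them. The threshold values below are placeholders, not recommendations.

```python
# Hypothetical thresholds; actual values are set by the data quality policy.
THRESHOLDS = {
    "accuracy_rate": ("min", 0.98),
    "completeness_pct": ("min", 0.95),
    "duplication_rate": ("max", 0.01),
}


def find_violations(measured):
    """Return the metrics that are missing or outside their threshold."""
    violations = {}
    for metric, (kind, limit) in THRESHOLDS.items():
        value = measured.get(metric)
        if value is None:
            violations[metric] = "not measured"
        elif kind == "min" and value < limit:
            violations[metric] = f"{value} is below minimum {limit}"
        elif kind == "max" and value > limit:
            violations[metric] = f"{value} is above maximum {limit}"
    return violations


report = find_violations(
    {"accuracy_rate": 0.99, "completeness_pct": 0.91, "duplication_rate": 0.03}
)
```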

Real-Time Monitoring Policies

For data in motion, implement policies requiring real-time validation of data against predefined quality thresholds.

Encryption Policies

- Data at rest. Require AES-256 encryption for stored data across structured, semi-structured, and unstructured data formats.

- Data in motion. Enforce TLS (Transport Layer Security) encryption for data transmitted over networks.
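For the data-in-motion half, the sketch below configures a client-side TLS context with Python's standard `ssl` module. The TLS 1.2 minimum is an assumption about what a policy might require. AES-256 encryption at rest is not shown because it typically relies on a third-party library or on the storage platform's built-in encryption.

```python
import ssl


def make_tls_context():
    """Client-side TLS context that verifies certificates and hostnames."""
    context = ssl.create_default_context()  # loads the system CA certificates
    # Assumption: the policy sets TLS 1.2 as the minimum acceptable version.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls_context()
```

The context can then be passed to a client call, for example `urllib.request.urlopen(url, context=context)`, so that connections below the minimum version or with invalid certificates are refused.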

Data Classification Policies

Define levels of sensitivity (e.g., Public, Confidential, Restricted) and the required protections for each category.
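A classification policy can be sketched as a lookup from sensitivity level to required protections. The three levels come from the example above; the specific protections, roles, and field assignments are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Protection:
    encrypt_at_rest: bool
    mask_in_reports: bool
    allowed_roles: frozenset


# Hypothetical protections per level; real requirements come from the policy.
PROTECTIONS = {
    Sensitivity.PUBLIC: Protection(False, False, frozenset({"anyone"})),
    Sensitivity.CONFIDENTIAL: Protection(True, False, frozenset({"employee"})),
    Sensitivity.RESTRICTED: Protection(True, True, frozenset({"data_owner"})),
}

# Hypothetical field-to-level assignments.
FIELD_LEVELS = {
    "product_name": Sensitivity.PUBLIC,
    "salary": Sensitivity.CONFIDENTIAL,
    "national_id": Sensitivity.RESTRICTED,
}


def required_protection(field):
    """Unclassified fields default to the most restrictive level."""
    level = FIELD_LEVELS.get(field, Sensitivity.RESTRICTED)
    return PROTECTIONS[level]
```

Defaulting unknown fields to the strictest level is a design choice: a new column stays protected until someone classifies it.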

Backup and Recovery Policies

Ensure periodic backups and the use of secure storage locations with restricted access.

Key Management Policies

Establish secure processes for generating, distributing, and storing encryption keys.

3. Protection for Different Data Types

Structured Data

- Define rules for maintaining referential integrity in relational databases.

- Mandate the use of unique identifiers to prevent duplication.

- Implement database security policies, including fine-grained access controls, masking of sensitive fields, and regular integrity checks.

Semi-Structured Data

- Ensure compliance with schema definitions to validate data structure and consistency.

- Enforce policies requiring metadata tags to document the origin and context of the data.

- Enforce security measures like XML/JSON encryption, validation against schemas, and access rules specific to APIs.

Unstructured Data

- Mandate tools for text analysis, image recognition, or video tagging to assess data quality.

- Define procedures to detect and address file corruption or incomplete uploads.

- Define policies for protecting documents, emails, videos, and other formats using tools like digital rights management (DRM) and file integrity monitoring.

4. CCPA and GDPR Compliance Policies

Accuracy Policies

Align with GDPR Article 5(1)(d), which requires that personal data be accurate and up-to-date. Define periodic reviews and mechanisms to correct inaccuracies.

Consumer Data Quality Policies

Under CCPA, ensure that data provided to consumers upon request is accurate, complete, and up-to-date.

Retention Quality Checks

Require quality validation of data before deletion or anonymization to ensure compliance.

Data Subject Access Request (DSAR) Policies

Define procedures to allow users to access, correct, or delete their data upon request.

Third-Party Vendor Policies

Require vendors to comply with CCPA and GDPR standards when handling organizational data.

Retention and Disposal Policies

Align with legal requirements to retain data only as long as necessary and securely delete it after the retention period.
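A retention rule of this kind reduces to a date comparison. In the sketch below, the categories and retention periods are invented for illustration; actual periods depend on the applicable legal requirements.

```python
from datetime import date, timedelta

# Hypothetical retention periods per data category, in days.
RETENTION_DAYS = {"web_logs": 90, "invoices": 365 * 7}


def is_expired(category, created_on, today):
    """True when a record has outlived its retention period."""
    return today > created_on + timedelta(days=RETENTION_DAYS[category])


def select_for_disposal(records, today):
    """Return the records that are due for secure deletion."""
    return [r for r in records if is_expired(r["category"], r["created_on"], today)]


records = [
    {"id": "a", "category": "web_logs", "created_on": date(2024, 1, 1)},
    {"id": "b", "category": "web_logs", "created_on": date(2024, 5, 1)},
    {"id": "c", "category": "invoices", "created_on": date(2020, 1, 1)},
]
due = select_for_disposal(records, today=date(2024, 6, 1))
```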

Key aspects of data policies include:

- Access control. Defining who can access specific data sets.

- Data classification. Categorizing data based on sensitivity and usage.

- Retention policies. Outlining how long data should be stored.

- Compliance mandates. Ensuring alignment with legal and regulatory requirements.

Clear and enforceable policies provide the foundation for accountability and help mitigate risks associated with data breaches or misuse.

Procedures

Procedures bring policies to life: they are step-by-step instructions that ensure policies are effectively implemented and followed. Below are expanded examples of procedures for protecting data during extraction, transformation, storage, and transit, as well as for structured, semi-structured, and unstructured data:

1. Data Extraction and Transformation Procedures

Data Quality Checklists

Implement checklists to validate extracted data against quality metrics (e.g., no missing values, correct formats). Compare transformed data with expected outputs to identify errors.

Automated Data Cleansing

Use automated tools to detect and correct quality issues, such as missing or inconsistent data, during transformation.

Validation Testing

Perform unit and system tests on ETL workflows to ensure data quality is maintained.

ETL Workflow Monitoring

Regularly review ETL logs and audit trails to detect anomalies or unauthorized activities.

Validation Procedures

Use checksum or hash validation to ensure data integrity during extraction and transformation.
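The sketch below shows one possible form of hash validation using SHA-256 from the standard library. Serializing each record as JSON with sorted keys, and hashing the sorted record digests so that row order does not matter, are assumptions made for this example.

```python
import hashlib
import json


def record_digest(record):
    """SHA-256 of a record serialized with sorted keys for stable output."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def batch_digest(records):
    """Order-independent digest for a batch: hash the sorted record digests."""
    combined = "".join(sorted(record_digest(r) for r in records))
    return hashlib.sha256(combined.encode("utf-8")).hexdigest()


source = [{"id": 1, "amount": 10.5}, {"id": 2, "amount": 7.0}]
extracted = [{"amount": 7.0, "id": 2}, {"id": 1, "amount": 10.5}]  # reordered
tampered = [{"id": 1, "amount": 10.5}, {"id": 2, "amount": 70.0}]
```

Comparing `batch_digest(source)` with the digest of the extracted batch confirms that nothing changed in transit; the tampered batch produces a different digest.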

Access Authorization

Implement multi-factor authentication (MFA) for accessing ETL tools and systems.

2. Data at Rest and Data in Motion Procedures

Data Quality Dashboards

Create dashboards to visualize quality metrics for data at rest and in motion. Set alerts for anomalies such as sudden spikes in missing or duplicate records.

Real-Time Data Validation

Integrate validation rules into data streams to catch errors immediately during transmission.
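In-stream validation can be sketched as a generator that checks each record as it arrives. The order events and the three rules are hypothetical; a real pipeline would attach similar checks to its streaming framework.

```python
def validate_stream(records, rules):
    """Yield (record, errors) pairs as records arrive; errors is empty if valid."""
    for record in records:
        errors = [name for name, rule in rules.items() if not rule(record)]
        yield record, errors


# Hypothetical validation rules for an order event stream.
RULES = {
    "has_order_id": lambda r: bool(r.get("order_id")),
    "amount_positive": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] > 0,
    "known_currency": lambda r: r.get("currency") in {"USD", "EUR"},
}

events = iter([
    {"order_id": "A1", "amount": 25.0, "currency": "USD"},
    {"order_id": "", "amount": -3, "currency": "XYZ"},
])

valid, rejected = [], []
for event, errors in validate_stream(events, RULES):
    (rejected if errors else valid).append((event, errors))
```

Rejected records keep their list of failed rules, which can feed an error log or a dead-letter queue.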

Periodic Data Audits

Schedule regular audits to evaluate and improve the quality of data stored in systems.

Encryption Key Rotation

Schedule periodic rotation of encryption keys to reduce the risk of compromise.

Secure Transfer Protocols

Standardize the use of SFTP (SSH File Transfer Protocol) for moving files, and ensure APIs use OAuth 2.0 for authorization.

Data Storage Segmentation

Separate sensitive data from non-sensitive data in storage systems to enhance security.

3. Structured, Semi-Structured, and Unstructured Data Procedures

Structured Data

- Run data consistency checks on relational databases, such as ensuring referential integrity and no orphan records.

- Schedule regular updates of master data to maintain consistency.

- Conduct regular database vulnerability scans.

- Implement query logging to monitor access patterns and detect potential misuse.
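The orphan-record check mentioned above can be written as a `LEFT JOIN` that keeps child rows with no matching parent. The sketch uses an in-memory SQLite database with hypothetical `customers` and `orders` tables; no foreign key constraint is declared, so the orphan row can exist.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Bo');
    INSERT INTO orders VALUES (10, 1, 20.0), (11, 2, 5.0), (12, 99, 7.5);
""")

# Orphans: orders whose customer_id has no matching customers row.
orphans = conn.execute("""
    SELECT o.id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.id = o.customer_id
    WHERE c.id IS NULL
    ORDER BY o.id
""").fetchall()
```

A scheduled job could run this query per parent-child relationship and alert when the result is not empty.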

Semi-Structured Data

- Use tools like JSON or XML schema validators to ensure semi-structured data adheres to expected formats.

- Implement automated tagging and metadata extraction to enrich the data and improve its usability.

- Validate data against predefined schemas before ingestion into systems.

- Use API gateways with rate limiting to prevent abuse.
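To illustrate validating documents before ingestion, the sketch below checks a JSON document against a very small hand-written schema. It is a simplified stand-in for a full JSON Schema validator, and the `ORDER_SCHEMA` fields are hypothetical.

```python
import json

# Hypothetical schema: required field name -> expected Python type.
ORDER_SCHEMA = {"order_id": str, "quantity": int, "tags": list}


def validate_document(text, schema):
    """Return a list of problems; an empty list means the document conforms."""
    try:
        doc = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(doc, dict):
        return ["top-level value must be an object"]
    problems = []
    for field, expected in schema.items():
        if field not in doc:
            problems.append(f"missing field: {field}")
        elif type(doc[field]) is not expected:
            # Exact type match, so a JSON boolean does not pass as an integer.
            problems.append(f"wrong type for {field}: expected {expected.__name__}")
    return problems
```

Documents that return a non-empty list can be rejected or routed to a quarantine area instead of being ingested.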

Unstructured Data

- Deploy machine learning tools to assess and improve the quality of text, image, or video data.

- Regularly scan unstructured data repositories for incomplete or corrupt files.

- Use file scanning tools to detect and classify sensitive information in documents or media files.

- Apply automatic watermarking for files containing sensitive data.
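Scanning text for sensitive information can be approximated with regular expressions. The two patterns below are deliberately simplified and the labels are hypothetical; production scanners use much broader detection than this.

```python
import re

# Simplified, hypothetical patterns; real detectors need broader coverage.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_text(text):
    """Return the sensitive data types found and a suggested label."""
    found = sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
    label = "Restricted" if found else "Unflagged"
    return {"found": found, "label": label}


result = classify_text("Contact jo@example.com, SSN 123-45-6789.")
```

An "Unflagged" result only means that none of the listed patterns matched, not that the file is safe to share.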

4. CCPA and GDPR Compliance Procedures

Consumer Request Validation

Before responding to consumer requests under CCPA or GDPR, validate the quality of the data to ensure it is accurate and complete. Implement error-handling procedures to address any discrepancies in consumer data.

Data Update Procedures

Establish workflows for correcting inaccurate data identified during regular reviews or consumer requests.

Deletion and Retention Quality Validation

Before deleting data or retaining it for compliance, perform quality checks to confirm its integrity and relevance.

Right to Access/Deletion Requests

Establish a ticketing system for processing data subject requests and verifying user identity before fulfilling the request.

Breach Notification Procedures

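Define steps to notify regulators and affected individuals within the mandated time frames. GDPR requires notifying the supervisory authority within 72 hours of becoming aware of a breach; for CCPA-covered data, follow the notification timing required under California law.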
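The 72-hour GDPR window lends itself to a simple deadline calculation, sketched below. The function names are hypothetical, and the clock is assumed to start when the organization becomes aware of the breach.

```python
from datetime import datetime, timedelta, timezone

GDPR_REGULATOR_WINDOW = timedelta(hours=72)


def regulator_deadline(became_aware_at):
    """Latest time to notify the supervisory authority under the 72-hour window."""
    return became_aware_at + GDPR_REGULATOR_WINDOW


def hours_remaining(became_aware_at, now):
    """Hours left before the deadline; negative once it has passed."""
    return (regulator_deadline(became_aware_at) - now).total_seconds() / 3600


aware = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
deadline = regulator_deadline(aware)
```

Using timezone-aware timestamps avoids ambiguity when incident responders work across regions.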

Data Anonymization

Apply masking or tokenization techniques to de-identify personal data used in analytics.
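A minimal sketch of both techniques follows. Masking hides most of an email address, and a keyed hash (HMAC-SHA-256) stands in for a tokenization service. The key, field names, and 16-character token length are assumptions; a keyed hash of this kind is pseudonymization rather than irreversible anonymization.

```python
import hashlib
import hmac


def mask_email(email):
    """Masking: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def tokenize(value, secret_key):
    """Tokenization via keyed hash: same input and key give the same token."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


# Hypothetical key for illustration; a real key belongs in a key management system.
KEY = b"demo-key-not-for-production"

row = {"email": "alice@example.com", "customer_id": "C-1001", "spend": 120.0}
safe_row = {
    "email": mask_email(row["email"]),
    "customer_id": tokenize(row["customer_id"], KEY),
    "spend": row["spend"],
}
```

Because the token is deterministic for a given key, analysts can still join and count by customer without seeing the original identifier.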

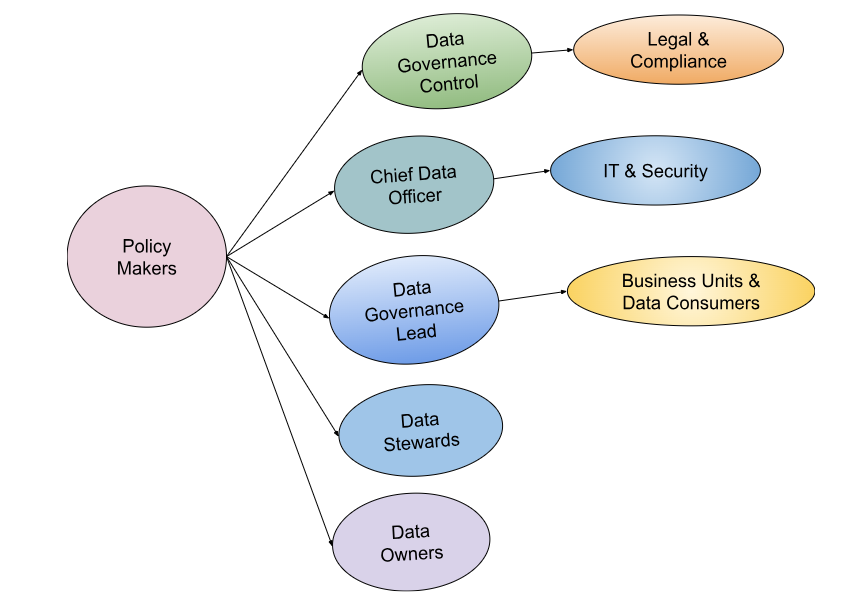

Roles and Responsibilities in Defining Policies and Procedures

The following generalized roles and responsibilities apply when defining policies and procedures; they may vary depending on the size and policies of the organization.

Data Governance Policy Makers

1. Data Governance Council (DGC)

Role

A strategic decision-making body comprising senior executives and stakeholders from across the organization.

Responsibilities

- Establish the overall data governance framework.

- Approve and prioritize data governance policies and procedures.

- Align policies with business objectives and regulatory compliance requirements (e.g., CCPA, GDPR).

- Monitor compliance and resolve escalated issues.

2. Chief Data Officer (CDO)

Role

Oversees the entire data governance initiative and ensures policies align with the organization’s strategic goals.

Responsibilities

- Lead the development of data governance policies and ensure buy-in from leadership.

- Define data governance metrics and success criteria.

- Ensure the integration of policies across structured, semi-structured, and unstructured data systems.

- Advocate for resource allocation to support governance initiatives.

3. Data Governance Lead/Manager

Role

Operationally manages the implementation of data governance policies and procedures.

Responsibilities

- Collaborate with data stewards and owners to draft policies.

- Ensure policies address data extraction, transformation, storage, and movement.

- Develop and document procedures based on approved policies.

- Facilitate training and communication to ensure stakeholders understand and adhere to policies.

4. Data Stewards

Role

Serve as subject matter experts for specific datasets, ensuring data quality, compliance, and governance.

Responsibilities

- Enforce policies for data accuracy, consistency, and protection.

- Monitor the quality of structured, semi-structured, and unstructured data.

- Implement specific procedures such as data masking, encryption, and validation during ETL processes.

- Ensure compliance with policies related to CCPA and GDPR (e.g., data classification and access controls).

5. Data Owners

Role

Typically business leaders or domain experts who are responsible for specific datasets within their area of expertise.

Responsibilities

- Define access levels and assign user permissions.

- Approve policies and procedures related to their datasets.

- Ensure data handling aligns with regulatory and internal standards.

- Resolve data-related disputes or issues escalated by stewards.

6. Legal and Compliance Teams

Role

Ensure policies meet regulatory and contractual obligations.

Responsibilities

- Advise on compliance requirements, such as GDPR, CCPA, and industry-specific mandates.

- Review and approve policies related to data privacy, retention, and breach response.

- Support the organization in audits and regulatory inspections.

7. IT and Security Teams

Role

Provide technical expertise to secure and implement policies at a systems level.

Responsibilities

- Implement encryption, data masking, and access control mechanisms.

- Define secure protocols for data in transit and at rest.

- Monitor and log activities to enforce data policies (e.g., audit trails).

- Respond to and mitigate data breaches, ensuring adherence to policies and procedures.

8. Business Units and Data Consumers

Role

Act as end users of the data governance framework.

Responsibilities

- Adhere to the defined policies and procedures in their day-to-day operations.

- Provide feedback to improve policies based on practical challenges.

- Participate in training sessions to understand data governance expectations.

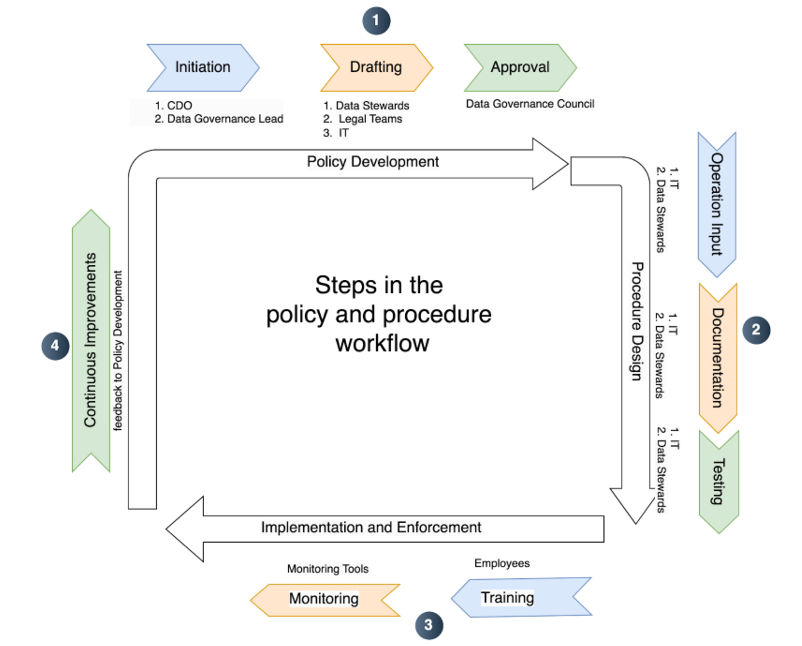

Workflow for Defining Policies and Procedures

1. Policy Development

- Initiation. The CDO and Data Governance Lead identify the need for specific policies based on organizational goals and regulatory requirements.

- Drafting. Data stewards, legal teams, and IT collaborate to draft comprehensive policies addressing technical, legal, and operational concerns.

- Approval. The Data Governance Council reviews and approves the policies.

2. Procedure Design

- Operational input. IT and data stewards define step-by-step procedures to enforce the approved policies.

- Documentation. Procedures are formalized and stored in a central repository for easy access.

- Testing. Procedures are tested to ensure feasibility and effectiveness.

3. Implementation and Enforcement

- Training programs are conducted for employees across roles.

- Monitoring tools are deployed to track adherence and flag deviations.

4. Continuous Improvement

- Policies and procedures are periodically reviewed to accommodate evolving regulations, technologies, and business needs.

By involving the right stakeholders and clearly defining roles and responsibilities, organizations can ensure their data governance policies and procedures are robust, enforceable, and adaptable to changing requirements.

Popular Tools

The following table lists 10 popular tools that support data governance, data quality, policies, and procedures:

| # | Tool | Best For | Key Features | Use Cases |
|---|---|---|---|---|
| 1 | Ataccama | Data Quality, MDM, Governance | Automated data profiling, cleansing, and enrichment; AI-driven data discovery and anomaly detection | Ensuring data accuracy during ETL processes; automating compliance checks (e.g., GDPR, CCPA) |
| 2 | Collibra | Enterprise Data Governance, Cataloging | Data catalog for structured, semi-structured, and unstructured data; workflow management; data lineage tracking | Cross-functional collaboration on governance; automating compliance documentation and audits |
| 3 | Oracle EDM | Comprehensive Data Management | Data security and lifecycle management; real-time quality checks; integration with Oracle Analytics | Managing policies in complex ecosystems; monitoring real-time data quality |
| 4 | IBM InfoSphere | Enterprise-Grade Governance, Quality | Automated data profiling; metadata management; AI-powered recommendations for data quality | Governing structured and semi-structured data; monitoring and enforcing real-time quality rules |
| 5 | OvalEdge | Unified Governance and Collaboration | Data catalog and glossary; automated lineage mapping; data masking capabilities | Developing and communicating governance policies; tracking and mitigating policy violations |
| 6 | Manta | Data Lineage and Impact Analysis | Visual data lineage; integration with quality and governance platforms | Enhancing policy enforcement for data in motion; strengthening data flow visibility |
| 7 | Talend Data Fabric | End-to-End Data Integration, Governance | Data cleansing and validation; real-time quality monitoring; compliance tools | Maintaining data quality in ETL processes; automating privacy policy enforcement |
| 8 | Informatica Axon | Enterprise Governance Frameworks | Integrated quality and governance; automated workflows; collaboration tools | Coordinating governance across global teams; establishing scalable data policies and procedures |
| 9 | Microsoft Purview | Cloud-First Governance and Compliance | Automated discovery for hybrid environments; policy-driven access controls; compliance reporting | Governing hybrid cloud data; monitoring data access and quality policies |
| 10 | DataRobot | AI-Driven Quality and Governance | Automated profiling and anomaly detection; governance for AI models; real-time quality monitoring | Governing data in AI workflows; ensuring compliance of AI-generated insights |

Conclusion

Together, data quality, policies, and procedures form a robust foundation for an effective data governance framework. They not only help organizations manage their data efficiently but also ensure that data remains a strategic asset driving growth and innovation.

By implementing these policies and procedures, organizations can ensure compliance with legal mandates, protect data integrity and privacy, and enable secure and effective data governance practices. This layered approach safeguards data assets while supporting the organization’s operational and strategic objectives.
