NGINX Plus as a Cloud Load Balancer for OpenStack

We discuss how NGINX Plus provides advanced load balancing for applications you deploy on OpenStack.

More and more organizations are embracing cloud computing. Moving applications into the cloud comes with several benefits, including cost reduction and agility. At the same time, quite often it can be a challenging task.

Many organizations choose to build their own private clouds, either on their premises or hosted at a cloud provider, rather than use public cloud offerings. Open source software that lets organizations build and manage private clouds effectively is essential to this approach, and OpenStack is such software. It is a joint development effort of many companies and individuals, with a huge community of users across the globe.

The performance and resilience of your applications largely depend on the cloud load balancer you use. In this blog post, we discuss how NGINX Plus provides advanced load balancing for applications you deploy on OpenStack. We compare NGINX Plus with OpenStack’s built‑in load balancer. At the end of this blog post, we show how to deploy and configure NGINX Plus to load balance a web application using the built‑in OpenStack orchestration service, Heat.

LBaaS, the Built‑In OpenStack Load Balancer

OpenStack’s built‑in cloud load balancer is Load Balancer as a Service (LBaaS) from the Neutron project. LBaaS defines an API for configuring load balancing. Vendors can integrate their cloud load balancer with LBaaS by creating a special driver that users install and configure in order to use that particular load balancer. OpenStack provides a default reference implementation of the driver for the HAProxy load balancer.

The LBaaS API has matured to Version 2 with the advanced HTTP load‑balancing features added earlier this year. The default implementation is now provided by the Octavia project, which added robustness and more flexibility to the load‑balancing deployment.

Why Choose NGINX Plus as Your OpenStack Load Balancer?

There are several advantages to using NGINX Plus as the cloud load balancer for your applications on OpenStack, including its advanced features, high performance, and flexible deployment and configuration options.

Feature Comparison for LBaaS API and NGINX Plus

The table compares the load‑balancing features of NGINX Plus and the LBaaS API.

Routing based on values of the HTTP request headers such as the Host header, a cookie, or the URI.

As the table shows, NGINX Plus includes several important load‑balancing features missing from LBaaS:

- Advanced application health checks: NGINX Plus constantly checks the health of your application backend servers (or backends) and immediately stops sending client requests to a backend that becomes unhealthy, ensuring that the client doesn’t receive any errors. NGINX Plus does health checking by sending synthetic requests to the backends and expecting a healthy response from each one. The synthetic request and the expected healthy response are configurable for both HTTP and TCP/UDP load balancing. Advanced health checking, such as the ability to inspect the health check response headers and the body for specific string values or to set the health check request headers (for HTTP load balancing), is not available with LBaaS.

- Advanced HTTP load balancing: NGINX Plus excels at HTTP load balancing. You can configure its load‑balancing decisions based on the value of any of the request parameters, such as headers, URI, source IP address, etc. Although the Layer 7 rules recently added to LBaaS allow you to configure content‑based routing, advanced features – such as URL rewriting and header manipulation – are not available.

- Advanced connection and request limiting: Connection and request limiting, available in NGINX Plus, help you to mitigate DDoS attacks on your applications.

- Authentication and access control: To add security to your applications, NGINX Plus provides several authentication mechanisms, including support for JSON Web Tokens (JWT). Additionally, NGINX Plus can forward authentication requests to external services. For example, you can integrate NGINX Plus with Microsoft Windows Server Active Directory. You can also control access to your applications based on the client IP address or any other values from the client request headers, for example, the user agent.

- Logging and monitoring: The NGINX Plus live activity monitoring API and the dashboard provide you with real‑time metrics that reflect how well your applications are performing. Recently we announced a public beta of a new product for monitoring NGINX Plus and open source NGINX – NGINX Amplify.

- Web application firewall: NGINX Plus R10 introduces a web application firewall (WAF), which helps to protect your applications from various attacks.

- A/B testing: NGINX Plus simplifies A/B testing of your applications.
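To make the health-check item above more concrete, here is a minimal Python sketch of the idea of matching a backend's response against a configurable definition of "healthy" (status, headers, and body). It is not NGINX Plus code or configuration; the `Match` class and `is_healthy` function are hypothetical names introduced only for illustration.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Match:
    """Hypothetical description of what a healthy response looks like."""
    min_status: int = 200
    max_status: int = 399
    headers: dict = field(default_factory=dict)  # header name -> required value
    body_pattern: str = ""                       # regex the body must contain


def is_healthy(status: int, headers: dict, body: str, match: Match) -> bool:
    """Return True only if status, headers, and body all satisfy `match`."""
    if not (match.min_status <= status <= match.max_status):
        return False
    # HTTP header names are case-insensitive, so normalize before comparing.
    lowered = {name.lower(): value for name, value in headers.items()}
    for name, expected in match.headers.items():
        if lowered.get(name.lower()) != expected:
            return False
    if match.body_pattern and not re.search(match.body_pattern, body):
        return False
    return True


if __name__ == "__main__":
    rule = Match(headers={"Content-Type": "text/html"}, body_pattern=r"Welcome")
    print(is_healthy(200, {"content-type": "text/html"}, "<h1>Welcome</h1>", rule))
    print(is_healthy(200, {"content-type": "text/html"}, "maintenance", rule))
```

A load balancer applying such a rule would stop routing to a backend as soon as a probe fails it, and resume once probes pass again.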

NGINX Plus is also a web server and a cache. You can significantly improve the performance of your applications by having NGINX Plus serve static files and by caching the responses from the backends.

Flexible Deployment Options

As a software load balancer, NGINX Plus is flexible in its deployment options. The fact that it handles a huge number of requests and at the same time has a low system footprint means you can run it even on commodity hardware or in a virtual machine or container. You can compare the price‑performance of NGINX Plus with Citrix NetScaler and F5 BIG‑IP on our blog.

NGINX Plus supports high‑availability deployments using VRRP with keepalived.

DevOps Friendly Configuration

You configure NGINX Plus by writing textual configuration files, which allows you to automate the configuration process with automation software such as Chef, Ansible, or Puppet. At the end of this blog post, we show how we can use Heat, the built‑in OpenStack orchestration service, to deploy and configure NGINX Plus.

Additionally, NGINX Plus comes with the on‑the‑fly reconfiguration API, which allows you to add or remove backend servers from an upstream group via a simple HTTP API.
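As a rough illustration, the sketch below builds the kind of HTTP requests such an API accepts. It assumes the query-string style of the `upstream_conf` interface that NGINX Plus offered around the time of this post; the base address, the upstream name `backend`, and the helper function names are assumptions made for the example, and the sketch only constructs URLs without sending them.

```python
from urllib.parse import urlencode

# Assumed location of the reconfiguration endpoint; yours depends on
# where the handler is exposed in the NGINX Plus configuration.
API_BASE = "http://127.0.0.1:8080/upstream_conf"


def add_server_url(upstream: str, server: str, base: str = API_BASE) -> str:
    """Build the URL that asks to add `server` (ip:port) to `upstream`."""
    query = urlencode({"add": "", "upstream": upstream, "server": server}, safe=":")
    return f"{base}?{query}"


def remove_server_url(upstream: str, server_id: int, base: str = API_BASE) -> str:
    """Build the URL that asks to remove the server with `server_id`."""
    query = urlencode({"remove": "", "upstream": upstream, "id": server_id})
    return f"{base}?{query}"


if __name__ == "__main__":
    print(add_server_url("backend", "10.0.0.5:80"))
    print(remove_server_url("backend", 3))
```

Sending either URL with any HTTP client (for example `urllib.request.urlopen`) would apply the change without reloading the configuration.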

Portability and Reusability

Using NGINX Plus doesn’t tie you to any platform‑specific load‑balancing APIs. In case you decide to switch from OpenStack to another cloud platform or you are using multiple platforms simultaneously, you can reuse your load‑balancing configuration across all platforms.

Service Discovery in Microservices Environments

Service discovery is critically important when building microservices applications. NGINX Plus fully supports DNS‑based service discovery. It can dynamically discover the IP addresses of backend servers via the DNS protocol. If the IP addresses later change, NGINX Plus will automatically pick up any updates. NGINX Plus Release 9 and later support DNS SRV records, and so can discover ports along with IP addresses. This comes in very handy if you use Consul or SkyDNS for service discovery.
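The general mechanism can be sketched in a few lines of Python: resolve a service name, remember the resulting addresses, and re-resolve later to detect changes. This is only an illustration of DNS-based discovery, not how NGINX Plus implements it; the function names are hypothetical, and the `resolver` parameter exists purely so the lookup can be replaced in tests.

```python
import socket


def resolve_backends(hostname, port, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IP addresses behind `hostname`."""
    infos = resolver(hostname, port, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


def refresh(current, hostname, port, resolver=socket.getaddrinfo):
    """Re-resolve `hostname` and report whether the address set changed."""
    latest = resolve_backends(hostname, port, resolver=resolver)
    return latest, latest != current


if __name__ == "__main__":
    backends = resolve_backends("localhost", 80)
    print(backends)
    # A real loop would sleep between refreshes, ideally honoring the DNS TTL.
    backends, changed = refresh(backends, "localhost", 80)
    print(changed)
```

Plain address lookups like this return only IP addresses; discovering ports as well requires SRV records, which `socket.getaddrinfo` does not query.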

To learn more about how NGINX Plus can help you build microservices applications, read our blog post Introducing the Microservices Reference Architecture from NGINX.

Example: Deploying NGINX Plus with OpenStack Heat

As an example of how NGINX Plus can be deployed and configured as a cloud load balancer for OpenStack via automation software, here we show how to deploy NGINX Plus using OpenStack Heat. We configure NGINX Plus to load balance a simple web application that we also deploy using Heat. You can find the Heat templates, as well as instructions for running the example, in our GitHub repository.

When you run the example, Heat performs the following steps:

- Creates two base images:

  - A base image with the web application installed.

  - A base image with NGINX Plus installed.

- Deploys the web application, creating three virtual machines (or instances) using the web application base image.

- Deploys and configures load balancing:

  - Creates an instance using the NGINX Plus base image.

  - Configures NGINX Plus to distribute incoming requests among the web application instances.

  - Attaches a public, floating IP address to the instance, making it accessible to external clients.
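To give a feel for what the deployment steps look like to Heat, the following Python sketch assembles a simplified template as a dictionary. It is not the template from the GitHub repository: the parameter names, resource names, and template version are assumptions, base-image creation and the NGINX Plus configuration step are left out, and only standard Heat resource types are used.

```python
import json


def build_template(web_count: int = 3) -> dict:
    """Return a simplified, hypothetical Heat template as a dict."""
    server_props = lambda image_param, networks: {
        "image": {"get_param": image_param},
        "flavor": {"get_param": "flavor"},
        "networks": networks,
    }
    return {
        "heat_template_version": "2015-04-30",
        "parameters": {
            name: {"type": "string"}
            for name in ("web_image", "lb_image", "flavor", "private_net", "public_net")
        },
        "resources": {
            # Group of identical web application instances.
            "web_servers": {
                "type": "OS::Heat::ResourceGroup",
                "properties": {
                    "count": web_count,
                    "resource_def": {
                        "type": "OS::Nova::Server",
                        "properties": server_props(
                            "web_image", [{"network": {"get_param": "private_net"}}]
                        ),
                    },
                },
            },
            # Port for the load balancer so a floating IP can be attached to it.
            "lb_port": {
                "type": "OS::Neutron::Port",
                "properties": {"network": {"get_param": "private_net"}},
            },
            "lb_server": {
                "type": "OS::Nova::Server",
                "properties": server_props(
                    "lb_image", [{"port": {"get_resource": "lb_port"}}]
                ),
            },
            "lb_floating_ip": {
                "type": "OS::Neutron::FloatingIP",
                "properties": {
                    "floating_network": {"get_param": "public_net"},
                    "port_id": {"get_resource": "lb_port"},
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Keeping the instance count as a single value in the template is what makes scaling a matter of updating the stack with a different number.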

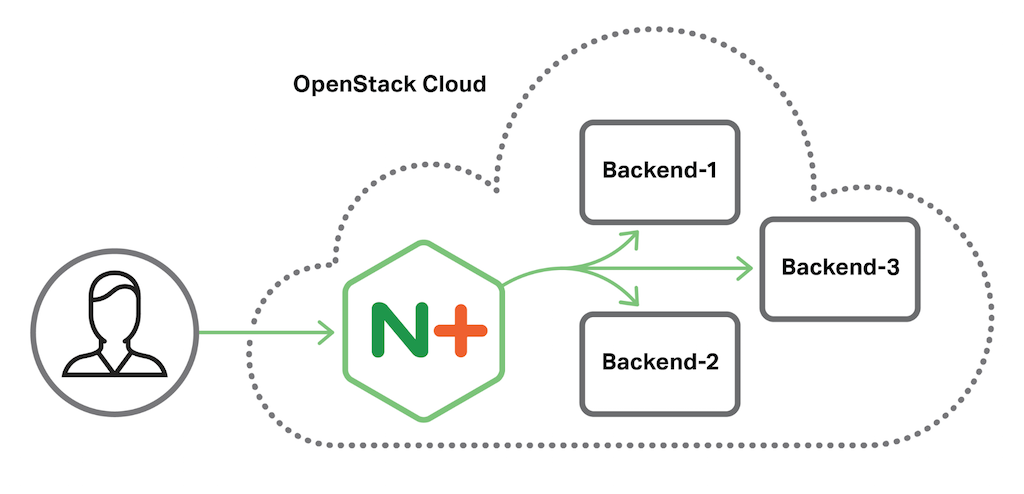

The diagram shows the deployment after Heat runs.

As you can see and test, NGINX Plus distributes the requests from clients among the backend instances.

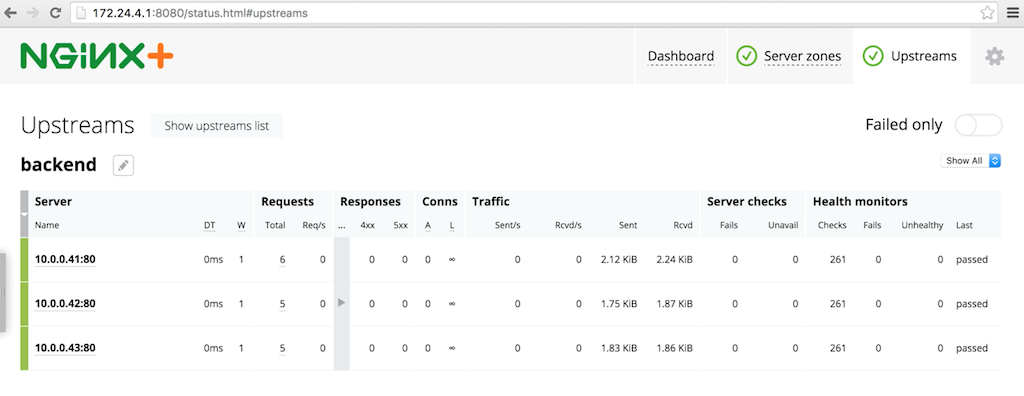

In the example, NGINX Plus is configured to provide the live activity monitoring dashboard, which allows us to see how each instance of our web application is performing. You can access the dashboard via the public IP address of the NGINX Plus instance on port 8080:

The critical part of the example is scaling the web application, which we also do with Heat. NGINX Plus is reconfigured as soon as the application scales. This is accomplished through the on‑the‑fly reconfiguration API by a simple Python script installed along with NGINX Plus. The script constantly monitors the number of web application instances and makes sure that every running instance is present in the NGINX Plus configuration.
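The core of such a script is a reconciliation step: compare the instances that are actually running with the servers currently configured in the upstream group, then add and remove the difference. The sketch below shows only that step under simple assumptions; it is not the script from the repository, and `plan_changes` and its argument shapes are hypothetical.

```python
def plan_changes(desired, configured):
    """Work out which servers to add to and remove from an upstream group.

    desired:    iterable of "ip:port" strings for running instances
    configured: dict mapping upstream server ID -> "ip:port" currently
                present in the load balancer configuration
    Returns (addresses_to_add, server_ids_to_remove), both sorted.
    """
    desired_set = set(desired)
    configured_addrs = set(configured.values())
    to_add = sorted(desired_set - configured_addrs)
    to_remove = sorted(
        server_id for server_id, addr in configured.items()
        if addr not in desired_set
    )
    return to_add, to_remove


if __name__ == "__main__":
    running = ["10.0.0.1:80", "10.0.0.2:80", "10.0.0.4:80"]
    in_nginx = {0: "10.0.0.1:80", 1: "10.0.0.3:80"}
    print(plan_changes(running, in_nginx))
```

A monitoring loop would call this periodically, issue one reconfiguration API request per entry in each list, and do nothing when both lists are empty.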

All sample files, including the Python script, are available in our GitHub repository. You can run the example locally using a DevStack environment.

Summary

OpenStack brings the power of cloud computing into your own data center. With its huge community of developers and users and a lot of companies offering commercial support, you can effectively build and manage your own private cloud. We are finding that a lot of our customers are moving their applications to the cloud. They choose NGINX Plus over other cloud load balancers, such as OpenStack LBaaS or AWS ELB, because of its advanced features, the flexibility of deployment and configuration, and its high performance.

Published at DZone with permission of Michael Pleshakov, DZone MVB. See the original article here.
