Using Salesforce Functions With Heroku Postgres

This article is the first of a three-part series on utilizing Heroku Managed Data products from within a Salesforce Function. In this article, we will focus on use cases for Heroku Postgres. In parts two and three, we’ll discuss creating Salesforce Functions that use Heroku Data for Redis and Apache Kafka on Heroku.

Introduction to Core Concepts

What Is a Salesforce Function?

A Salesforce Function is a custom piece of code used to extend your Salesforce apps or processes. The custom code can leverage the language and libraries you choose while running in the secure environment of your Salesforce instance.

For example, you could leverage a JavaScript library to update your Heroku Postgres Database based on a triggered process within Salesforce. If you are new to Salesforce Functions in general, “Get to Know Salesforce Functions” is a good place to learn more about what a Salesforce Function is and how it works.

What Is Heroku Postgres?

Heroku Postgres is a Postgres database that Heroku manages for you. That means that Heroku takes care of things like security, backups, and maintenance. All you have to do is use it. The best place to learn the details of Heroku Postgres is the Heroku Dev Center documentation.

Examples of Salesforce Function + Heroku Postgres

Now that we know more about Functions and Heroku Postgres, what can we do with them together? There are several ways to think of Functions, but one of the most useful is to think about them as aiding the integration or extension of your Org(s). For example, when something happens in Salesforce, do some other task.

At a high level, you would want to use Heroku Postgres with your Salesforce Function if you:

- Need to access a relational database as part of your function’s operation

- Need to integrate with a Heroku Postgres instance that is part of another system

- Need to offload a “heavy” task from your Salesforce Org and store relational data to achieve it

The above patterns are not the only ones, but they are perhaps the most common. The use cases below are examples of these patterns in action.

Use Case #1: De-dupe Account Records for Multiple Salesforce Orgs

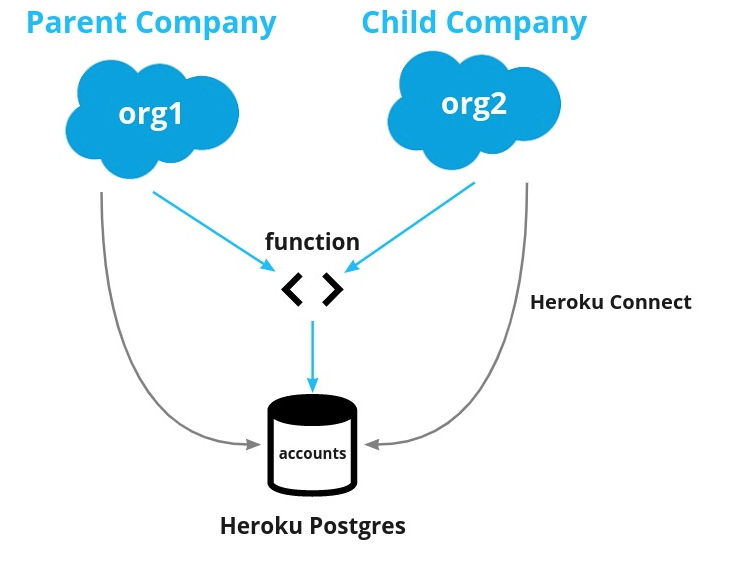

A common problem in a growing company is having multiple Salesforce Orgs with customers duplicated across them. Sometimes, this occurs because the company has acquired another company, and both companies share the customer.

In other cases, the company simply has different business lines, and the customer belongs to both. If we know that two customer records refer to the same customer, we want to treat them as one. Merging this Org data often takes a long time, if it happens at all, so what do we do?

One approach is to sync account information from both Orgs via Heroku Connect into Heroku Postgres and trigger a Salesforce Function that looks for duplicates. We may run this function on demand for certain activities or set it up to run after new accounts are created. For example, the function could run whenever the system encounters a message such as “Customer’s email already exists in [Parent Company]”. The diagram above illustrates this use case.
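As a rough illustration of the duplicate check, the sketch below groups account rows by a normalized email address and reports any email that appears in more than one Org. The row shape (`org`, `sfid`, `email`) and the function names are assumptions made for this example; in practice the rows would come from whatever tables Heroku Connect syncs into Postgres.

```javascript
// Hypothetical row shape: { org: "parent", sfid: "001...", email: "a@b.com" }
function normalizeEmail(email) {
  return String(email || "").trim().toLowerCase();
}

// Returns one entry per email address that appears in more than one Org.
function findCrossOrgDuplicates(rows) {
  const byEmail = new Map();
  for (const row of rows) {
    const key = normalizeEmail(row.email);
    if (!key) continue; // skip rows without an email
    if (!byEmail.has(key)) byEmail.set(key, []);
    byEmail.get(key).push(row);
  }

  const duplicates = [];
  for (const [email, matches] of byEmail) {
    const orgs = new Set(matches.map((m) => m.org));
    if (orgs.size > 1) {
      duplicates.push({ email, sfids: matches.map((m) => m.sfid) });
    }
  }
  return duplicates;
}
```

Matching on email alone is a deliberately simple rule; a real de-duplication job would likely combine several fields.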

Use Case #2: Update the Customer-Facing Website When New Inventory Is Added

Many companies use Salesforce as the “source of truth” for critical information, but they have a customer-facing website on Heroku. In this use case, when an item (such as an inventory number) is updated in Salesforce, a Salesforce Function is triggered to update Heroku Postgres, which backs the website that displays this information for customers.

This pattern could be extended to do other things, such as fetch additional information to store with the record in Heroku Postgres or purge the cache. This can be useful when the information is needed for the website, but you don’t need to store it in Salesforce.
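One way the inventory update could be expressed is as a parameterized upsert. The sketch below only builds the query; the `inventory` table, its `sku` and `quantity` columns, and the helper name `buildInventoryUpsert` are hypothetical. The returned object uses the `{ text, values }` query config shape that the `pg` client accepts.

```javascript
// Builds a parameterized upsert for a hypothetical `inventory` table
// that has a unique `sku` column. Nothing here talks to the database.
function buildInventoryUpsert(item) {
  if (!item || typeof item.sku !== "string" || item.sku === "") {
    throw new Error("item.sku is required");
  }
  if (!Number.isInteger(item.quantity) || item.quantity < 0) {
    throw new Error("item.quantity must be a non-negative integer");
  }
  return {
    text:
      "INSERT INTO inventory (sku, quantity) VALUES ($1, $2) " +
      "ON CONFLICT (sku) DO UPDATE SET quantity = EXCLUDED.quantity",
    values: [item.sku, item.quantity],
  };
}
```

Inside a Function, the result could be passed to `client.query(...)` on a connected `pg` client, so the website's table is updated whether or not the item already exists.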

Use Case #3: Generate Reports for Large Data Sets

In a large organization that uses Salesforce heavily, there can be overlapping tasks by many users. Often, end users are unaware of their impact on the shared system. Moving heavy operations or reports off the Org can allow other operations to perform better. An easy way to do this is to sync the required data to Heroku Postgres via Heroku Connect and perform the operation via a Salesforce Function. This opens up options for optimizing and leveraging open-source tools and libraries to process the data.

This pattern also works well if the result needs to be written back to Salesforce. An example of that would be if you needed to calculate sales territories but store the results back in the Salesforce Org.
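To make the reporting idea a little more concrete, here is a minimal sketch that rolls up rows by territory once they have been read from Postgres. The row shape (`territory`, `amount`) and the function name `summarizeByTerritory` are assumptions for illustration, not part of any Salesforce or Heroku API.

```javascript
// Aggregates hypothetical { territory, amount } rows into per-territory
// totals, sorted from the largest total to the smallest.
function summarizeByTerritory(rows) {
  const totals = new Map();
  for (const { territory, amount } of rows) {
    const current = totals.get(territory) || { territory, deals: 0, total: 0 };
    current.deals += 1;
    current.total += Number(amount) || 0; // treat missing amounts as 0
    totals.set(territory, current);
  }
  return [...totals.values()].sort((a, b) => b.total - a.total);
}
```

For very large data sets, the same rollup could instead be pushed into the database with a `GROUP BY` query, leaving the Function to write the summarized result back to the Org.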

Salesforce Functions are a powerful new feature in the Salesforce ecosystem. Heroku Postgres is a battle-tested market leader in managed Postgres. Together, they open up many use cases. In combination with additional services offered by Heroku, there are many options for getting the most out of your Salesforce data. In the next section, we’ll briefly examine how to get started with your own use cases.

How Do I Get Started?

There are some things you will need—some on the Salesforce Functions side and some on the Heroku side. These resources will point you in the right direction.

- Prerequisites

- Getting started with Salesforce Functions

Accessing Heroku Postgres From a Salesforce Function

Once you have covered the prerequisites and created your project, you can run the following commands to create a Function with Heroku Postgres access.

To create the new JavaScript Function, run the following command:

$ sf generate function -n yourfunction -l javascript

That will give you a /functions folder with a Node.js application template.

Connecting to Your Database

Your Salesforce Function code will need to include some specific pieces to ensure a proper connection to the database. The simplest approach is to include the dotenv package for specifying the database URL as an environment variable and the pg package as a Postgres client.

import "dotenv/config";
import pg from "pg";
const { Client } = pg;

Within your function code, you’ll need to connect to your Postgres database. Here is an example of a connection helper function:

async function pgConnect() {
  const client = new Client({
    connectionString: process.env.DATABASE_URL,
    ssl: {
      rejectUnauthorized: false
    }
  });
  await client.connect();
  return client;
}

This assumes that you have a .env file that specifies your DATABASE_URL.
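If it helps, the connection options can be built in a small helper that fails early when `DATABASE_URL` is missing. This is only a sketch: the helper name `buildPgConfig` is hypothetical, and turning SSL off for a local database is an assumption about a typical development setup rather than anything the article prescribes.

```javascript
// Builds the options object for `new Client(...)` from an env-like object
// such as process.env. Illustrative helper, not part of pg or dotenv.
function buildPgConfig(env) {
  const connectionString = env.DATABASE_URL;
  if (!connectionString) {
    throw new Error("DATABASE_URL is not set; check your .env file");
  }
  const { hostname } = new URL(connectionString);
  const isLocal = hostname === "localhost" || hostname === "127.0.0.1";
  return {
    connectionString,
    // Assumption: a local database is reached without SSL, while a remote
    // one uses the same relaxed SSL setting as the helper above.
    ssl: isLocal ? false : { rejectUnauthorized: false },
  };
}
```

With a `.env` line such as `DATABASE_URL=postgres://user:password@host:5432/dbname` (placeholder values), the connection helper could then call `new Client(buildPgConfig(process.env))`.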

Query and Store

In the following example function, we retrieve some data from our Salesforce Org, and then we store it in Postgres.

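export default async function (event, context, logger) {
  // Send a request to the Salesforce Data API to retrieve data
  const results = await context.org.dataApi.query(
    `SELECT Id FROM Account WHERE Name = 'Example Company'`
  );
  // Pull the Id out of the first record (assumes at least one match and
  // that each record exposes its values on a `fields` object)
  const sfid = results.records[0].fields.Id;
  // Connect to Postgres
  const client = await pgConnect();
  try {
    // Store the Id in Postgres
    await client.query(`INSERT INTO companies (sfid) VALUES ($1)`, [sfid]);
  } finally {
    // Close the connection, even if the insert fails
    await client.end();
  }
}

Test Your Salesforce Function Locally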

To test your Function locally, you first run the following command:

$ sf run function start

Then, you can invoke the Function with a payload from another terminal:

$ sf run function -l http://localhost:8080 -p '{"payloadID": "info"}'

For more information on running Functions locally, see this guide.
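The JSON passed with `-p` reaches the Function as `event.data`. A small guard like the one below can make a malformed local invocation easier to spot. The helper name `readPayloadId` is hypothetical, and `payloadID` is simply the key used in the example command above.

```javascript
// Extracts `payloadID` from an invocation event and fails fast when the
// payload is missing or has the wrong shape.
function readPayloadId(event) {
  const data = (event && event.data) || {};
  if (typeof data.payloadID !== "string" || data.payloadID === "") {
    throw new Error('Expected a payload like {"payloadID": "info"}');
  }
  return data.payloadID;
}
```

Calling this at the top of the Function means a bad payload produces a clear error before any database connection is opened.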

Associating Your Salesforce Function and Your Heroku Environment

Now that everything is working as expected, let's associate the Function with a compute environment. (For more information about how to create a compute environment and deploy a function, take a look at the documentation.)

You can associate your Salesforce Function and Heroku environments by adding your Heroku user as a collaborator to your function’s compute environment:

$ sf env compute collaborator add --heroku-user username@example.com

The environments can now share Heroku data.

Next, you will need the name of the compute environment so that you can attach the data store to it.

$ sf env list

Lastly, you can attach the data store.

$ heroku addons:attach <your-heroku-postgres-database> --app <your-compute-environment-name>
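Once the database is attached, its connection string is exposed to the compute environment as a config var. Depending on how the add-on was attached, that variable may not be named `DATABASE_URL`; Heroku can generate names of the form `HEROKU_POSTGRESQL_<COLOR>_URL`. The sketch below assumes that naming pattern and uses a hypothetical helper, `resolveDatabaseUrl`, to find whichever variable is present.

```javascript
// Finds a Postgres connection string in an env-like object such as
// process.env. Prefers DATABASE_URL and falls back to the first variable
// (alphabetically) matching the assumed HEROKU_POSTGRESQL_<COLOR>_URL pattern.
function resolveDatabaseUrl(env) {
  if (env.DATABASE_URL) return env.DATABASE_URL;
  const key = Object.keys(env)
    .sort()
    .find((name) => /^HEROKU_POSTGRESQL_[A-Z]+_URL$/.test(name));
  if (!key) {
    throw new Error("No Postgres connection string found in the environment");
  }
  return env[key];
}
```

Running `heroku config --app <your-compute-environment-name>` shows which variable the attachment actually created, which is the safest way to confirm the name.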

Here are some additional resources that may be helpful for you as you begin implementing your Salesforce Function and accessing Heroku Postgres:

Now, you’re off to the races!

Conclusion

Salesforce Functions open up many possibilities for working within your Salesforce app to access and manipulate Heroku data. In this first part of our series, we’ve touched on using Salesforce Functions to work with Heroku Postgres. In the next part of the series, we’ll integrate Salesforce Functions with Heroku Data for Redis. Then, in our final post for this series, we’ll integrate with Apache Kafka on Heroku. Stay tuned!

Published at DZone with permission of Michael Bogan. See the original article here.
