Smart Pipes and Smart Endpoints With Service Mesh

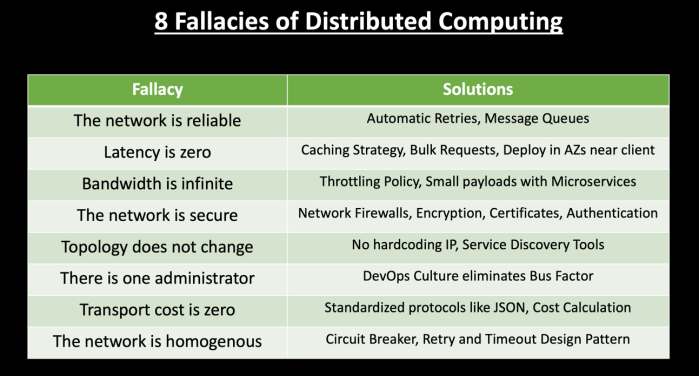

We look into some of the fallacies surrounding distributed computing and how service mesh helps address them.

Microservices communicate heavily over the network. As the number of services in your architecture grows, the risks posed by an unreliable network grow too. Handling service-to-service communication within a microservices architecture is challenging. Hence, the recommended solution has been to build services that have dumb pipes and smart endpoints.

The first fallacy from the comprehensive list of 'Eight Fallacies of Distributed Computing' is that 'The network is reliable.'

Service calls made over the network might fail. Network congestion or a power failure can impact your systems. The request might reach the destination service, but the response might never make it back to the calling service. Data might get corrupted or lost during transmission over the wire. While architecting distributed cloud applications, you should assume that these types of network failures will happen and design your applications for resiliency.

To handle this scenario, you should implement automatic retries in your code for when such a network error occurs. For example, if one of your services cannot establish a connection because of a network issue, retry logic can automatically re-establish it.
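The retry idea can be sketched roughly as follows. This is a minimal illustration, not a production client: `TransientNetworkError`, `call_with_retries`, and `make_flaky_operation` are hypothetical names invented for this example, and the exponential backoff with jitter is one common choice for spacing out attempts rather than something the approach requires.

```python
import random
import time


class TransientNetworkError(Exception):
    """Hypothetical stand-in for a timeout or dropped connection."""


def call_with_retries(operation, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call operation(), retrying on TransientNetworkError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientNetworkError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # Back off exponentially, with jitter so that many clients
            # do not all retry at the same instant.
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay))


def make_flaky_operation(failures_before_success):
    """Build a fake remote call that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    def operation():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise TransientNetworkError("connection reset")
        return "connected"

    return operation


if __name__ == "__main__":
    # Two simulated failures, then the third attempt succeeds.
    print(call_with_retries(make_flaky_operation(2)))
```

Retries like this are only safe when the operation is idempotent. As noted above, a request can reach the destination while its response is lost, so a retried call may be executed twice.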

Implementing message queues can also help in decoupling dependencies. Based on your business use case, you can implement queues to make your services more resilient. With a durable queue, messages are retained until they are processed. If your business use case allows 'Fire and Forget' requests rather than synchronous Request/Response, queues can be a good way to reduce tight coupling between components in your architecture and increase system reliability when there are network issues or systems are down.
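As a rough illustration of the 'Fire and Forget' pattern, the sketch below uses Python's in-process `queue.Queue` as a stand-in for a real message broker. The names `publish`, `consume`, and `run_demo` are made up for this example, and an in-memory queue is not durable, so this only demonstrates the decoupling, not the persistence a real broker would provide.

```python
import queue
import threading


def publish(q, message):
    """Fire and forget: enqueue the message and return without waiting."""
    q.put(message)


def consume(q, processed):
    """Worker loop: handle messages until a None sentinel arrives."""
    while True:
        message = q.get()
        try:
            if message is None:
                return
            # Placeholder for real business logic.
            processed.append(message.upper())
        finally:
            q.task_done()


def run_demo():
    q = queue.Queue()
    processed = []

    # The producer can publish while the consumer is still "down".
    publish(q, "order-1")
    publish(q, "order-2")

    # The consumer comes up later and drains the backlog.
    worker = threading.Thread(target=consume, args=(q, processed))
    worker.start()
    publish(q, None)  # sentinel: tell the worker to stop
    q.join()          # wait until every queued item has been handled
    worker.join()
    return processed


if __name__ == "__main__":
    print(run_demo())
```

The producer never blocks on the consumer being available, which is the decoupling the queue provides. The trade-off is that the producer gets no immediate response, so this fits only where the business flow tolerates asynchronous processing.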

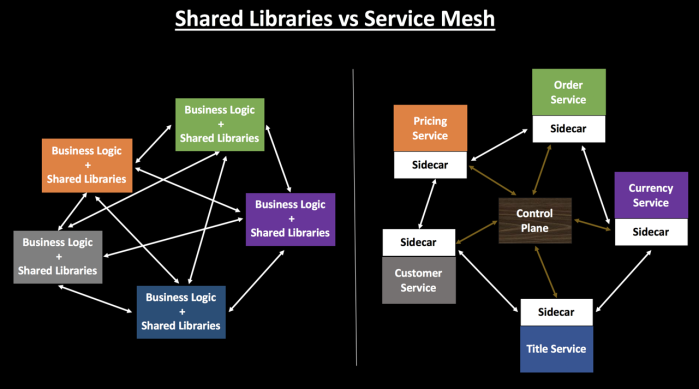

Smart endpoints and dumb pipes has been one of the design principles for microservices during the last decade.

The responsibility of the network is just to transfer messages between source and destination. The responsibility of microservices is to handle business logic, transformations, and validations, and to process the messages.

But with the rise of microservices architecture, containers, DevOps, and Kubernetes, how do we manage functionalities like traffic management, routing, telemetry, and policy enforcement?

A good answer to this question is to build smarter pipes. Service mesh rises to the challenge by making the network much smarter. Instead of building functionalities like load balancing, service discovery, circuit breaking, authentication, security, and routing inside each of your individual microservices, service mesh pushes these functionalities to the network/infrastructure layer.
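To make the trade-off concrete, here is a minimal sketch of one such functionality, circuit breaking, written the way each service would have to embed it without a mesh. The `CircuitBreaker` class, its parameters, and its thresholds are hypothetical and written for this example; this is not how any particular mesh or proxy implements the feature.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the downstream service."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: fail fast instead of piling load on a struggling service.
                raise CircuitOpenError("failing fast; downstream assumed unhealthy")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success: close the circuit and reset the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Every service, in every language used across the architecture, would need its own copy of logic like this. Moving it into the network layer means it is configured once, outside the application code.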

Conclusion

The evolution of service mesh architecture has been a game changer. It moves this complexity out of your application and into a service proxy, which handles it for you. These service proxies can provide you with a range of functionalities like traffic management, circuit breaking, service discovery, authentication, monitoring, security, and much more.

Istio is one of the best implementations of service mesh at this point. It enables you to deploy microservices without an in-depth knowledge of the underlying infrastructure. Istio solves complex requirements without requiring changes to the application code of your microservices, which reduces the complexity of running a distributed microservice architecture. It is platform independent and language agnostic, so it does not matter which language you use to write your services.

Istio leverages Envoy's many built-in features like service discovery, load balancing, circuit breakers, fault injection, observability, metrics, and more. Envoy is deployed as a sidecar to the relevant service in the same Kubernetes pod. The sidecar proxy model also allows you to add Istio capabilities to an existing deployment with no need to rearchitect or rewrite code.

Published at DZone with permission of Samir Behara, DZone MVB. See the original article here.
