Protecting Your API Ecosystem: The Role of Rate Limiting in Service Stability

Explore effective API rate limiting strategies to safeguard against overloading, promote fair usage, and improve performance.

Join the DZone community and get the full member experience.

Join For FreeIn modern web and mobile applications, APIs are the backbone of communication between different components, services, and users. However, as API usage grows, there is a risk of overloading the system, causing degraded performance or even service outages. One of the most effective ways to prevent such issues is through API rate limiting.

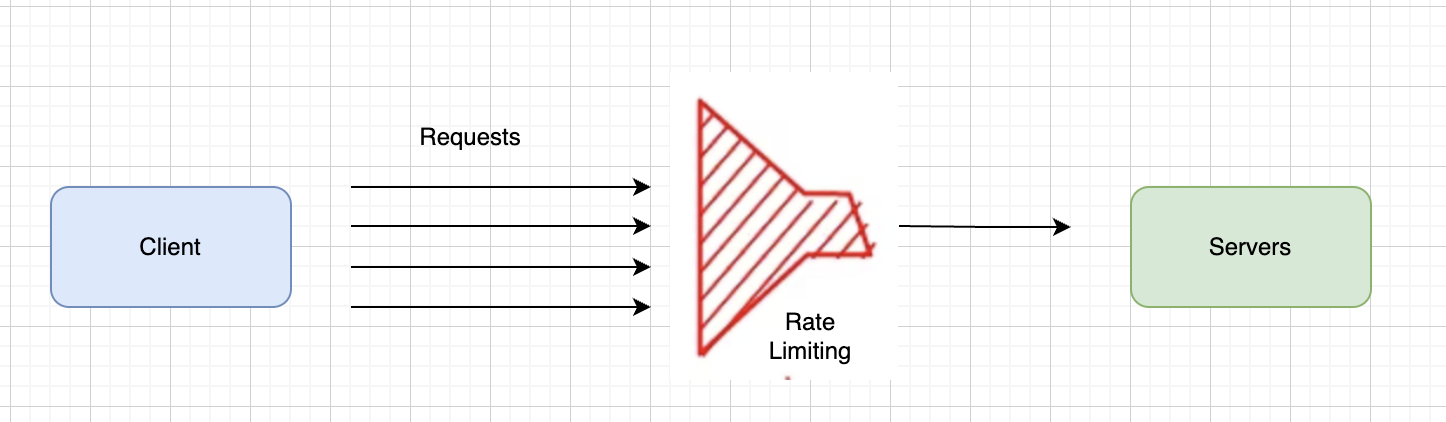

Rate limiting refers to the practice of restricting the number of requests a user or system can make to an API within a specific timeframe, which is measured in requests per second or per minute. This ensures that no single user or client overwhelms the API, allowing for fair usage and protecting the backend from being flooded with excessive traffic.

In this article, we'll explore the different rate-limiting strategies available, their use cases, and best practices for implementing them to safeguard the APIs from overload.

Why Is API Rate Limiting Important?

API rate limiting is essential to:

- Prevent malicious flooding and denial-of-service (DOS) attacks.

- Maintain API performance and reliability.

- Ensure fair usage among users.

- Prevent high costs from overused cloud services.

Common API Rate Limiting Strategies

There are several rate limiting strategies that can be implemented in API Gateways, Load balancers, etc.

1. Fixed Window Rate Limiting

This strategy involves setting a fixed limit on the number of requests allowed within a fixed time window, such as 100 requests per minute. The counter resets when the window ends. The major downside is the possibility of "thundering herd" problems. If several users hit their limit right before the window resets, the system could face a spike in traffic, potentially causing overload.

import time

class FixedWindowRateLimiter:

def __init__(self, limit, window_size):

self.limit = limit

self.window_size = window_size

self.requests = []

def is_allowed(self):

current_time = time.time()

self.requests = [req for req in self.requests if req > current_time - self.window_size]

# Check if the number of requests in the current window exceeds the limit

if len(self.requests) < self.limit:

self.requests.append(current_time)

return True

else:

return False

# Example usage

limiter = FixedWindowRateLimiter(limit=5, window_size=60) # 5 requests per minute

for _ in range(7):

if limiter.is_allowed():

print("Request allowed")

else:

print("Rate limit exceeded")

time.sleep(10) # Sleep for 10 seconds between requests

2. Sliding Window Rate Limiting

This strategy attempts to fix the problem of the "thundering herd" by shifting the window dynamically based on the request timestamp.

In this approach, the window continuously moves forward, and requests are counted based on the most recent period, enabling smoother traffic distribution and less likely to cause sudden bursts. A user is allowed to make 100 requests within any 60-second period. If they made a request 30 seconds ago, they can only make 99 more requests in the next 30 seconds. It is slightly more complex to implement and manage compared to the fixed window strategy.

import time

from collections import deque

class SlidingWindowRateLimiter:

def __init__(self, limit, window_size):

self.limit = limit

self.window_size = window_size

self.requests = deque()

def is_allowed(self):

current_time = time.time()

while self.requests and self.requests[0] < current_time - self.window_size:

self.requests.popleft()

if len(self.requests) < self.limit:

self.requests.append(current_time)

return True

else:

return False

# Example usage

limiter = SlidingWindowRateLimiter(limit=5, window_size=60)

for _ in range(7):

if limiter.is_allowed():

print("Request allowed")

else:

print("Rate limit exceeded")

time.sleep(10) # Sleep for 10 seconds between requests

3. Token Bucket Rate Limiting

Token bucket is one of the most widely used algorithms. In this approach, tokens are generated at a fixed rate and stored in a bucket. Each request removes one token from the bucket. If the bucket is empty, the request is denied until new tokens are generated.

This algorithm requires careful tracking of tokens and bucket state and may introduce some complexity in implementation. It's more flexible than fixed or sliding windows and allows bursts of requests while enforcing a maximum rate over time.

import time

class TokenBucketRateLimiter:

def __init__(self, rate, capacity):

self.rate = rate

self.capacity = capacity

self.tokens = capacity

self.last_checked = time.time()

def is_allowed(self):

current_time = time.time()

elapsed = current_time - self.last_checked

self.tokens += elapsed * self.rate

if self.tokens > self.capacity:

self.tokens = self.capacity

self.last_checked = current_time

if self.tokens >= 1:

self.tokens -= 1

return True

else:

return False

# Example usage

limiter = TokenBucketRateLimiter(rate=1, capacity=5)

for _ in range(7):

if limiter.is_allowed():

print("Request allowed")

else:

print("Rate limit exceeded")

time.sleep(1) # Sleep for 1 second between requests

4. Leaky Bucket Rate Limiting

Similar to the token bucket algorithm, the leaky bucket model enforces a maximum rate by controlling the flow of requests into the system.

In this model, requests are added to a "bucket" at varying rates, but the bucket leaks at a fixed rate. If the bucket overflows, further requests are rejected. This strategy helps to smooth out bursty traffic while ensuring that requests are handled at a constant rate. Similar to the token bucket, it can be complex to implement, especially for systems with high variability in request traffic.

import time

class LeakyBucketRateLimiter:

def __init__(self, rate, capacity):

self.rate = rate

self.capacity = capacity

self.water_level = 0

self.last_checked = time.time()

def is_allowed(self):

current_time = time.time()

elapsed = current_time - self.last_checked

self.water_level -= elapsed * self.rate

if self.water_level < 0:

self.water_level = 0

self.last_checked = current_time

if self.water_level < self.capacity:

self.water_level += 1

return True

else:

return False

# Example usage

limiter = LeakyBucketRateLimiter(rate=1, capacity=5)

for _ in range(7):

if limiter.is_allowed():

print("Request allowed")

else:

print("Rate limit exceeded")

time.sleep(1) # Sleep for 1 second between requests

5. IP-Based Rate Limiting

In this strategy, the rate limit is applied based on the user's IP address. This ensures that requests from a single IP address are limited to a specific threshold. This approach can be bypassed by users employing VPNs or proxies. Additionally, it might unfairly affect users sharing an IP address.

import time

class IpRateLimiter:

def __init__(self, limit, window_size):

self.limit = limit

self.window_size = window_size

self.ip_requests = {}

def is_allowed(self, ip):

current_time = time.time()

if ip not in self.ip_requests:

self.ip_requests[ip] = []

self.ip_requests[ip] = [req for req in self.ip_requests[ip] if req > current_time - self.window_size]

if len(self.ip_requests[ip]) < self.limit:

self.ip_requests[ip].append(current_time)

return True

else:

return False

# Example usage

limiter = IpRateLimiter(limit=5, window_size=60)

for ip in ['192.168.1.1', '192.168.1.2']:

for _ in range(7):

if limiter.is_allowed(ip):

print(f"Request from {ip} allowed")

else:

print(f"Rate limit exceeded for {ip}")

time.sleep(10) # Sleep for 10 seconds between requests

6. User-Based Rate Limiting

This is a more personalized rate-limiting strategy, where the limit is applied to each individual user or authenticated account rather than their IP address. For authenticated users, rate limiting can be done based on their account (e.g., via API keys or OAuth tokens).

import time

class UserRateLimiter:

def __init__(self, limit, window_size):

self.limit = limit

self.window_size = window_size

self.user_requests = {}

def is_allowed(self, user_id):

current_time = time.time()

if user_id not in self.user_requests:

self.user_requests[user_id] = []

self.user_requests[user_id] = [req for req in self.user_requests[user_id] if req > current_time - self.window_size]

if len(self.user_requests[user_id]) < self.limit:

self.user_requests[user_id].append(current_time)

return True

else:

return False

# Example usage

limiter = UserRateLimiter(limit=5, window_size=60)

for user_id in ['user1', 'user2']:

for _ in range(7):

if limiter.is_allowed(user_id):

print(f"Request from {user_id} allowed")

else:

print(f"Rate limit exceeded for {user_id}")

time.sleep(10) # Sleep for 10 seconds between requests

Best Practices for Implementing Rate Limiting

- Use clear error responses, typically '429 Too Many Requests'.

- Rate limit based on context and factors such as user roles, API endpoints, or subscription tiers.

- Granular limits at different levels (e.g., global, per-user, per-IP) depending on the needs of the API.

- Log and monitor rate limiting to identify potential abuse or misuse patterns.

- Use Redis or similar caching solutions for highly distributed systems.

- Use exponential backoff to retry with increasing delay intervals.

Conclusion

API Rate limiting is a critical aspect of API management that ensures performance, reliability and security. By choosing the appropriate strategy based on the system's needs and efficient monitoring of usage patterns, the health and performance of the APIs even under heavy traffic can be maintained. Rate limiting is not just a defensive measure; it's an integral part of building scalable and robust web services.

Opinions expressed by DZone contributors are their own.

Comments