Traffic Shadowing With Istio: Reducing the Risk of Code Release

Istio can control the routing of traffic between services, which makes it valuable for managing traffic in microservices applications.


We've been talking about Istio and service mesh recently (follow along @christianposta for the latest), but one aspect of Istio can get glossed over. One of its most important features is its ability to control the routing of traffic between services. With this fine-grained control of application-level traffic, we can do interesting resilience things, like routing around failures or routing to different availability zones when necessary. More importantly, in my opinion, we can also control the flow of traffic for our deployments, which lets us reduce the risk of changing the system.

With a services architecture, our goal is to increase our ability to go faster, so we adopt microservices, automated testing pipelines, CI/CD, and so on. But what good is any of this if we have bottlenecks getting our code changes into production? Production is where we learn whether our changes have any positive impact on our KPIs, so we should reduce the bottlenecks to getting code there.

At the typical enterprise customers I visit regularly (financial services, insurance, retail, energy, etc.), risk is a big part of the equation, and it is used as a reason to block changes to production. A big part of this risk is that a code "deployment" is all or nothing in these environments. What I mean is that there is no separation of deployment and release. This is a hugely important distinction.

Deployment vs. Release

A deployment brings new code to production, but it takes no production traffic. Once the code is in the production environment, service teams are free to run smoke tests, integration tests, and so on without impacting any users. A service team should feel free to deploy as frequently as it wishes.

A release brings live traffic to a deployment, but it may require sign-off from "the business stakeholders." Ideally, bringing traffic to a deployment can be done in a controlled manner to reduce risk. For example, we may want to bring internal-user traffic to the deployment first, or we may want to bring a small fraction of traffic, say 1%, to the deployment. If any of these release rollout strategies (internal users, non-paying users, 1% of traffic, etc.) exhibit undesirable behavior (hence the need for strong observability), we can roll back.

Please read the two-part series titled "Deploy != Release" from the good folks at Turbine Labs for a deeper treatment of this topic.
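To make the 1% rollout concrete, here is a minimal sketch, assuming the Istio 0.5.0 RouteRule format (config.istio.io/v1alpha2) that this article uses later. The function canary_rule, the rule name httpbin-canary-v2, and the precedence value 10 are hypothetical choices for illustration. The script only prints YAML and does not touch a cluster.

```sh
# Hypothetical helper: print a v1alpha2 RouteRule that sends PCT percent of
# httpbin traffic to v2 and the remaining traffic to v1.
canary_rule() {
  pct="$1"
  # Accept only non-empty strings made of digits.
  case "$pct" in
    ''|*[!0-9]*) echo "usage: canary_rule <percent 0-100>" >&2; return 1 ;;
  esac
  # Strip leading zeros so shell arithmetic does not treat "08" as octal.
  pct=$(printf '%s' "$pct" | sed 's/^0*//')
  pct=${pct:-0}
  if [ "$pct" -gt 100 ]; then
    echo "usage: canary_rule <percent 0-100>" >&2
    return 1
  fi
  # Unquoted EOF so $pct and the arithmetic below are expanded.
  cat <<EOF
apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: httpbin-canary-v2
spec:
  destination:
    name: httpbin
  precedence: 10
  route:
  - labels:
      version: v1
    weight: $((100 - pct))
  - labels:
      version: v2
    weight: $pct
EOF
}

# Example (hypothetical file name): release v2 to 1% of traffic.
#   canary_rule 1 > httpbin-canary-v2.yaml
#   istioctl create -f httpbin-canary-v2.yaml
```

Both weights are derived from a single number, so they always add up to 100 no matter what percentage you pick.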

Dark Traffic

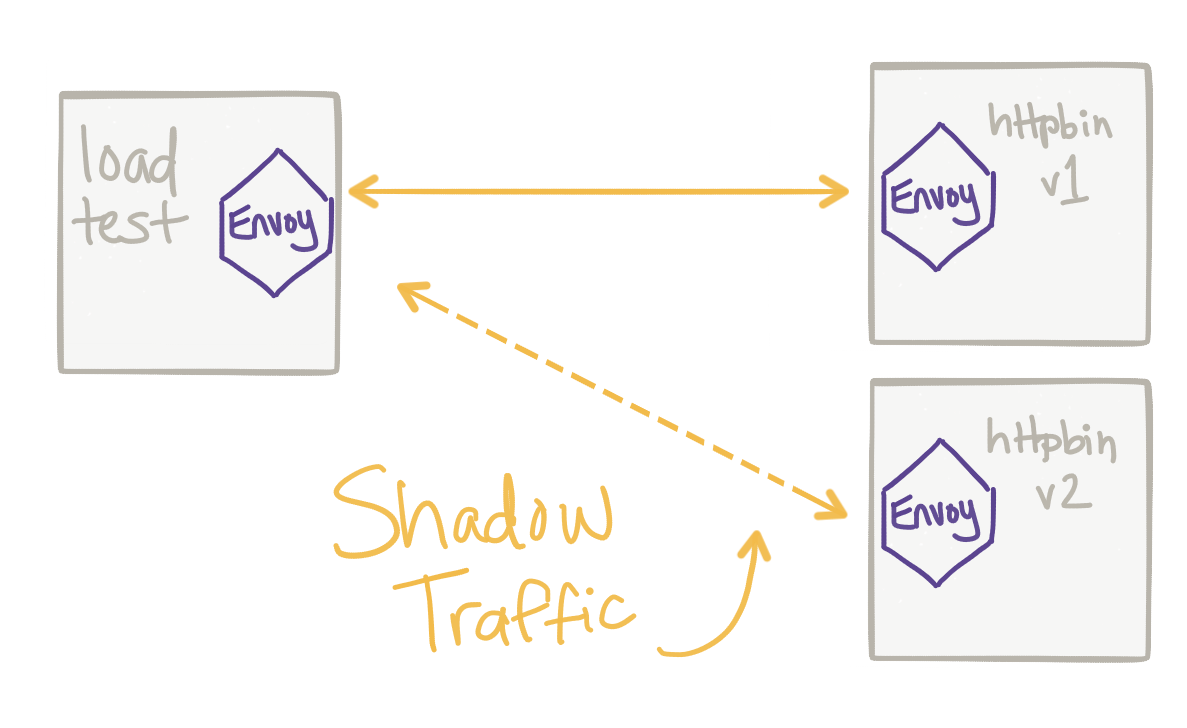

One strategy we can use to reduce the risk of our releases, before we expose a deployment to any type of user, is to shadow live traffic to it. With traffic shadowing, we take a fraction of traffic and route it to our new deployment to observe how it behaves. We can test for errors, exceptions, performance, and result parity. Projects such as Twitter's Diffy can be used to compare responses from released and unreleased versions.
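Diffy works as a proxy that multicasts requests to old and new instances and compares the responses. The sketch below is not Diffy's API. It is a much smaller illustration of the result-parity idea, assuming you have saved the pretty-printed JSON from an httpbin /headers call to each version, in the format of the output shown later in this article. It ignores the per-request tracing headers (X-B3-* and X-Ot-Span-Context), which differ on every call, and reports any other difference. The function names are hypothetical.

```sh
# Hypothetical helpers illustrating result parity: compare two saved
# httpbin /headers responses while ignoring per-request tracing headers.

# Print a response file without the tracing-header lines (case-insensitive).
strip_volatile() {
  grep -viE '"x-b3-|"x-ot-span-context"' "$1"
}

# Return 0 if the two responses match once tracing headers are removed;
# otherwise print the differing lines and return non-zero.
responses_match() {
  a=$(mktemp) || return 2
  b=$(mktemp) || { rm -f "$a"; return 2; }
  strip_volatile "$1" > "$a"
  strip_volatile "$2" > "$b"
  diff "$a" "$b"
  rc=$?
  rm -f "$a" "$b"
  return "$rc"
}

# Example (hypothetical file names):
#   responses_match v1-headers.json v2-headers.json && echo "parity OK"
```

A line-based comparison like this only works because httpbin prints one header per line. Real parity tools parse the response structure and learn which fields are noisy, rather than relying on a hard-coded list.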

With Istio, we can do this kind of traffic control by mirroring traffic from one service to another. Let's take a look at an example.

Traffic Mirroring With Istio

With the Istio 0.5.0 release, we have the ability to mirror traffic from one service to another, or from one version of a service to a newer version.

We'll start by creating two deployments, v1 and v2, of an httpbin service.

    $ cat httpbin-v1.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: httpbin
      labels:
        app: httpbin
    spec:
      ports:
      - name: http
        port: 8080
      selector:
        app: httpbin
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: httpbin-v1
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: httpbin
            version: v1
        spec:
          containers:
          - image: docker.io/kennethreitz/httpbin
            imagePullPolicy: IfNotPresent
            name: httpbin
            command: ["gunicorn", "--access-logfile", "-", "-b", "0.0.0.0:8080", "httpbin:app"]
            ports:
            - containerPort: 8080

We'll inject the Istio sidecar with kube-inject, like this:

    $ kubectl create -f <(istioctl kube-inject -f httpbin-v1.yaml)

Version 2 of the httpbin service is similar, except that its labels denote version 2:

    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: httpbin-v2
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: httpbin
            version: v2
        spec:
          containers:
          - image: docker.io/kennethreitz/httpbin
            imagePullPolicy: IfNotPresent
            name: httpbin
            command: ["gunicorn", "--access-logfile", "-", "-b", "0.0.0.0:8080", "httpbin:app"]
            ports:
            - containerPort: 8080

Let's deploy httpbin-v2 as well:

    $ kubectl create -f <(istioctl kube-inject -f httpbin-v2.yaml)

Lastly, let's deploy the sleep demo from the Istio samples so we can easily call into our httpbin service:

    $ kubectl create -f <(istioctl kube-inject -f sleep.yaml)

You should see three pods like this:

    $ kubectl get pod
    NAME                          READY     STATUS    RESTARTS   AGE
    httpbin-v1-2113278084-98whj   2/2       Running   0          1d
    httpbin-v2-2839546783-2dvhq   2/2       Running   0          1d
    sleep-1512692991-txrfn        2/2       Running   0          1d
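The READY column shows ready containers out of the total in each pod. Here, 2/2 reflects the application container (httpbin or sleep) plus the injected Istio sidecar. As a minimal sketch, assuming the default kubectl get pod column layout shown above, this hypothetical awk filter flags any pod whose containers are not all ready:

```sh
# Hypothetical check: read `kubectl get pod` output on stdin, print any pod
# whose READY column (ready/total) is not fully ready, and exit non-zero
# if at least one such pod is found.
all_pods_ready() {
  awk 'NR > 1 {
         split($2, r, "/")
         if (r[1] != r[2]) { print "not ready: " $1; bad = 1 }
       }
       END { exit bad + 0 }'
}

# Example:
#   kubectl get pod | all_pods_ready && echo "all pods ready"
```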

If we start sending traffic to the httpbin service, we'll see the default Kubernetes behavior: load balancing across both v1 and v2, because both pods match the selector for the httpbin Kubernetes service. Let's take a look at the default Istio route rule to route all traffic to v1 of our service:

    apiVersion: config.istio.io/v1alpha2
    kind: RouteRule
    metadata:
      name: httpbin-default-v1
    spec:
      destination:
        name: httpbin
      precedence: 5
      route:
      - labels:
          version: v1

Let's create this RouteRule:

    $ istioctl create -f routerules/all-httpbin-v1.yaml

If we start sending traffic into our httpbin service, we should only see traffic for the httpbin-v1 deployment:

    export SLEEP_POD=$(kubectl get pod -l app=sleep -o jsonpath={.items..metadata.name})
    kubectl exec -it $SLEEP_POD -c sleep -- sh -c 'curl http://httpbin:8080/headers'

    {
      "headers": {
        "Accept": "*/*",
        "Content-Length": "0",
        "Host": "httpbin:8080",
        "User-Agent": "curl/7.35.0",
        "X-B3-Sampled": "1",
        "X-B3-Spanid": "eca3d7ed8f2e6a0a",
        "X-B3-Traceid": "eca3d7ed8f2e6a0a",
        "X-Ot-Span-Context": "eca3d7ed8f2e6a0a;eca3d7ed8f2e6a0a;0000000000000000"
      }
    }

If we check the access logs for httpbin-v1, we should see a single access-log statement:

    $ kubectl logs -f httpbin-v1-2113278084-98whj -c httpbin
    127.0.0.1 - - [07/Feb/2018:00:07:39 +0000] "GET /headers HTTP/1.1" 200 349 "-" "curl/7.35.0"

If we check the logs for httpbin-v2, we should see no access-log statements.
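Instead of reading the logs by eye, you can count matching requests with a small filter. This is a minimal sketch, assuming the gunicorn access-log format shown above, where the request line appears in quotes as "GET <path> HTTP/1.1". The name count_requests is hypothetical.

```sh
# Hypothetical helper: count GET requests for the given path in access-log
# lines read from stdin. Prints 0 (and still succeeds) when nothing matches.
count_requests() {
  # -F treats the pattern as a fixed string, so characters such as "." or "?"
  # in the path are matched literally.
  grep -cF "\"GET $1 HTTP/" || true
}

# Example (pod names differ in every cluster; no -f, so the command returns):
#   kubectl logs httpbin-v1-2113278084-98whj -c httpbin | count_requests /headers
#   kubectl logs httpbin-v2-2839546783-2dvhq -c httpbin | count_requests /headers
```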

Let's mirror traffic from v1 to v2. Here's the Istio route rule we'll use:

    apiVersion: config.istio.io/v1alpha2
    kind: RouteRule
    metadata:
      name: mirror-traffic-to-httbin-v2
    spec:
      destination:
        name: httpbin
      precedence: 11
      route:
      - labels:
          version: v1
        weight: 100
      - labels:
          version: v2
        weight: 0
      mirror:
        name: httpbin
        labels:
          version: v2

A few things to note:

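- We are explicitly telling Istio to weight the traffic between v1 (100%) and v2 (0%).

- We are using labels to specify which version of the httpbin service we want to mirror to.

- This rule's precedence (11) is higher than the default rule's (5), so Istio applies it ahead of the default rule.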

Let's create this RouteRule:

    $ istioctl create -f routerules/mirror/mirror-traffic-to-httbin-v2.yaml

We should see RouteRules like this:

    $ istioctl get routerules
    NAME                 KIND                                 NAMESPACE
    httpbin-default-v1   RouteRule.v1alpha2.config.istio.io   tutorial
    httpbin-mirror-v2    RouteRule.v1alpha2.config.istio.io   tutorial

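Now, if we start sending traffic in, we should see requests go to v1 and copies of those requests shadowed to v2. Istio treats mirrored requests as fire-and-forget: responses from v2 are discarded, so callers only ever see v1's responses.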
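One way to check the mirroring numerically is to send a fixed number of requests and then count new entries in each pod's access log. With the mirror rule in place, both counts should grow by the same amount. The sketch below assumes the SLEEP_POD variable exported earlier. repeat_cmd is a hypothetical helper, and the count of 10 requests is arbitrary.

```sh
# Hypothetical helper: run a command N times, stopping at the first failure.
repeat_cmd() {
  n="$1"
  shift
  i=0
  while [ "$i" -lt "$n" ]; do
    "$@" || return 1
    i=$((i + 1))
  done
}

# Example: send 10 requests through the sleep pod, discarding the bodies,
# then count /headers entries in each version's access log (pod names differ
# per cluster). Both counts should grow by 10 once mirroring is active.
#   repeat_cmd 10 kubectl exec "$SLEEP_POD" -c sleep -- \
#     curl -s -o /dev/null http://httpbin:8080/headers
#   kubectl logs httpbin-v1-2113278084-98whj -c httpbin | grep -c '"GET /headers'
#   kubectl logs httpbin-v2-2839546783-2dvhq -c httpbin | grep -c '"GET /headers'
```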

Video Demo

Here's a video showing this:

Istio mirroring demo from Christian Posta on Vimeo.

Please see the official Istio docs for more details!

Published at DZone with permission of Christian Posta, DZone MVB. See the original article here.

