Use AWS Controllers for Kubernetes To Deploy a Serverless Data Processing Solution With SQS, Lambda, and DynamoDB

Discover how to use AWS Controllers for Kubernetes to create a Lambda function, an SQS queue, and a DynamoDB table, and wire them together to deploy a solution.


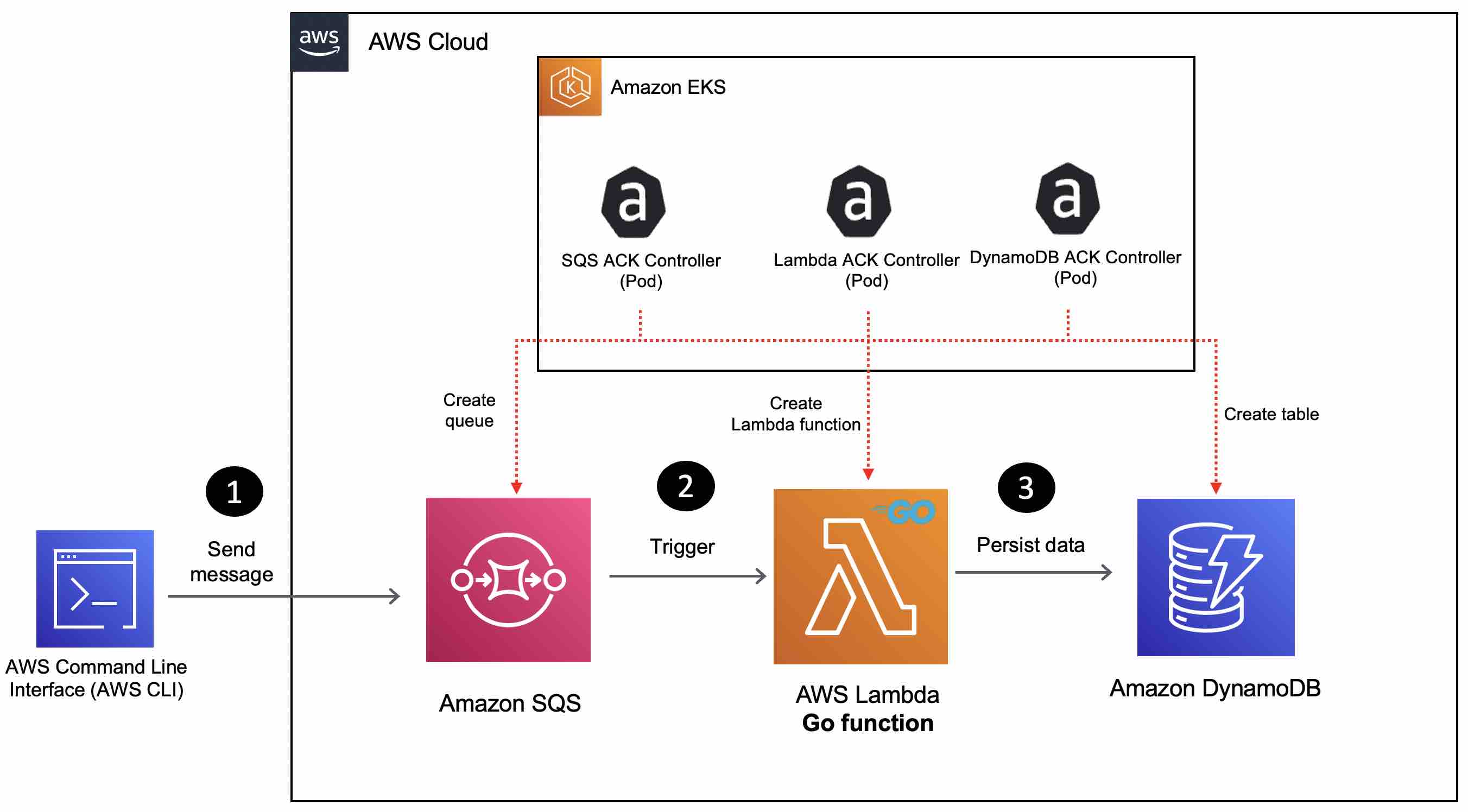

In this blog post, you will use AWS Controllers for Kubernetes on an Amazon EKS cluster to build a solution in which data from an Amazon SQS queue is processed by an AWS Lambda function and persisted to a DynamoDB table.

AWS Controllers for Kubernetes (also known as ACK) use Kubernetes Custom Resources and Custom Resource Definitions (CRDs) to let you manage and use AWS services directly from Kubernetes, without defining resources outside of the cluster. The idea behind ACK is to let Kubernetes users describe the desired state of AWS resources using the Kubernetes API and configuration language; ACK then provisions and manages the AWS resources to match that desired state. This is done by service controllers, each responsible for managing the lifecycle of the resources of a particular AWS service. Each ACK service controller is packaged into its own container image, which is published in a public repository dedicated to that controller.

There is no single ACK container image. Instead, there are container images for each individual ACK service controller that manages resources for a particular AWS API.

This blog post will walk you through how to use the SQS, DynamoDB, and Lambda service controllers for ACK.

Prerequisites

To follow along step by step, in addition to an AWS account, you will need the AWS CLI, kubectl, Helm, eksctl, Docker, and Go installed.

There are a variety of ways to create an Amazon EKS cluster. I prefer the eksctl CLI because of the convenience it offers. Creating an EKS cluster with eksctl can be as easy as this:

```shell
eksctl create cluster --name my-cluster --region region-code
```

For details, refer to Getting started with Amazon EKS – eksctl.

Clone this GitHub repository and change to the right directory:

```shell
git clone https://github.com/abhirockzz/k8s-ack-sqs-lambda
cd k8s-ack-sqs-lambda
```

Ok, let's get started!

Set Up the ACK Service Controllers for AWS Lambda, SQS, and DynamoDB

Install ACK Controllers

Log into the Helm registry that stores the ACK charts:

```shell
aws ecr-public get-login-password --region us-east-1 | helm registry login --username AWS --password-stdin public.ecr.aws
```

Deploy the ACK service controller for AWS Lambda using the lambda-chart Helm chart:

```shell
RELEASE_VERSION_LAMBDA_ACK=$(curl -sL "https://api.github.com/repos/aws-controllers-k8s/lambda-controller/releases/latest" | grep '"tag_name":' | cut -d'"' -f4)

helm install --create-namespace -n ack-system oci://public.ecr.aws/aws-controllers-k8s/lambda-chart "--version=${RELEASE_VERSION_LAMBDA_ACK}" --generate-name --set=aws.region=us-east-1
```

Deploy the ACK service controller for SQS using the sqs-chart Helm chart:

```shell
RELEASE_VERSION_SQS_ACK=$(curl -sL "https://api.github.com/repos/aws-controllers-k8s/sqs-controller/releases/latest" | grep '"tag_name":' | cut -d'"' -f4)

helm install --create-namespace -n ack-system oci://public.ecr.aws/aws-controllers-k8s/sqs-chart "--version=${RELEASE_VERSION_SQS_ACK}" --generate-name --set=aws.region=us-east-1
```

Deploy the ACK service controller for DynamoDB using the dynamodb-chart Helm chart:

```shell
RELEASE_VERSION_DYNAMODB_ACK=$(curl -sL "https://api.github.com/repos/aws-controllers-k8s/dynamodb-controller/releases/latest" | grep '"tag_name":' | cut -d'"' -f4)

helm install --create-namespace -n ack-system oci://public.ecr.aws/aws-controllers-k8s/dynamodb-chart "--version=${RELEASE_VERSION_DYNAMODB_ACK}" --generate-name --set=aws.region=us-east-1
```
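The three installs differ only in the service name: the release lookup uses `https://api.github.com/repos/aws-controllers-k8s/<service>-controller/releases/latest` and the chart is `oci://public.ecr.aws/aws-controllers-k8s/<service>-chart`. Below is a minimal bash sketch that factors this naming pattern into helper functions. The function names are hypothetical, and `HELM_CMD` is a hypothetical override (defaulting to `helm`) that lets you preview each command with `echo` before running it.

```shell
# Hypothetical helpers capturing the naming pattern of the three installs above.

# Extract the "tag_name" value from a GitHub "latest release" JSON payload on stdin,
# using the same grep/cut pipeline as the commands above.
ack_extract_tag() {
  grep '"tag_name":' | cut -d'"' -f4
}

# Print the OCI chart reference for an ACK service (lambda, sqs, dynamodb, ...).
ack_chart_ref() {
  printf 'oci://public.ecr.aws/aws-controllers-k8s/%s-chart\n' "$1"
}

# Print the GitHub API URL for the latest release of an ACK service controller.
ack_release_api_url() {
  printf 'https://api.github.com/repos/aws-controllers-k8s/%s-controller/releases/latest\n' "$1"
}

# Install one controller chart. HELM_CMD is a hypothetical override (defaults to helm);
# set HELM_CMD=echo to print the command instead of running it.
ack_install() {
  local service="$1" version="$2" region="${3:-us-east-1}"
  ${HELM_CMD:-helm} install --create-namespace -n ack-system \
    "$(ack_chart_ref "$service")" "--version=${version}" \
    --generate-name "--set=aws.region=${region}"
}

# Example usage (requires network access and cluster credentials):
# for svc in lambda sqs dynamodb; do
#   version=$(curl -sL "$(ack_release_api_url "$svc")" | ack_extract_tag)
#   ack_install "$svc" "$version" us-east-1
# done
```

Keeping the region as a parameter makes it easier to stay consistent with the `AWS_REGION` used in the IAM steps, instead of repeating `us-east-1` in each command.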

Now it's time to configure the IAM permissions that allow the controllers to manage Lambda, DynamoDB, and SQS resources.

Configure IAM Permissions

Create an OIDC Identity Provider for Your Cluster

For the steps below, set the EKS_CLUSTER_NAME and AWS_REGION variables to your cluster name and region.

```shell
export EKS_CLUSTER_NAME=demo-eks-cluster
export AWS_REGION=us-east-1

eksctl utils associate-iam-oidc-provider --cluster $EKS_CLUSTER_NAME --region $AWS_REGION --approve

# issuer URL without the leading https://
OIDC_PROVIDER=$(aws eks describe-cluster --name $EKS_CLUSTER_NAME --region $AWS_REGION --query "cluster.identity.oidc.issuer" --output text | cut -d '/' -f2- | cut -d '/' -f2-)
```
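The `describe-cluster` query returns the issuer as a full URL, and the two `cut` calls strip the leading `https://` so that only the provider host and path remain, which is the form used in the IAM OIDC provider ARN and in the trust policy condition key. A small sketch of the same transformation using bash parameter expansion, equivalent for an `https://` issuer; the helper name and the issuer ID are made up for illustration.

```shell
# Hypothetical helper: strip the scheme from an https:// OIDC issuer URL.
# Equivalent here to: cut -d '/' -f2- | cut -d '/' -f2-
oidc_provider_from_issuer() {
  local issuer="$1"
  printf '%s\n' "${issuer#https://}"
}

# Example issuer (made-up ID, for illustration only)
ISSUER="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
oidc_provider_from_issuer "$ISSUER"
```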

Create IAM Roles for Lambda, SQS, and DynamoDB ACK Service Controllers

ACK Lambda Controller

Set the following environment variables:

```shell
ACK_K8S_SERVICE_ACCOUNT_NAME=ack-lambda-controller
ACK_K8S_NAMESPACE=ack-system
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)
```

Create the trust policy for the IAM role:

```shell
read -r -d '' TRUST_RELATIONSHIP <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:${ACK_K8S_NAMESPACE}:${ACK_K8S_SERVICE_ACCOUNT_NAME}"
        }
      }
    }
  ]
}
EOF

echo "${TRUST_RELATIONSHIP}" > trust_lambda.json
```
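The trust policy is identical for all three controllers apart from the service account name. Below is a sketch of a hypothetical bash function that renders it from its four inputs, so the same code could produce `trust_lambda.json`, `trust_sqs.json`, and `trust_dynamodb.json`. It only prints JSON and makes no AWS calls.

```shell
# Hypothetical helper: print an IRSA trust policy for one ACK controller service account.
# Arguments: AWS account ID, OIDC provider (issuer without https://), namespace, service account.
make_trust_policy() {
  local account_id="$1" oidc_provider="$2" namespace="$3" service_account="$4"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${account_id}:oidc-provider/${oidc_provider}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${oidc_provider}:sub": "system:serviceaccount:${namespace}:${service_account}"
        }
      }
    }
  ]
}
EOF
}

# Example usage, with AWS_ACCOUNT_ID and OIDC_PROVIDER set in the previous steps:
# for svc in lambda sqs dynamodb; do
#   make_trust_policy "$AWS_ACCOUNT_ID" "$OIDC_PROVIDER" ack-system "ack-${svc}-controller" > "trust_${svc}.json"
# done
```

Passing the values as arguments, rather than relying on `ACK_K8S_SERVICE_ACCOUNT_NAME` being reassigned between sections, avoids accidentally writing one controller's service account into another controller's trust policy.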

Create the IAM role:

```shell
ACK_CONTROLLER_IAM_ROLE="ack-lambda-controller"
ACK_CONTROLLER_IAM_ROLE_DESCRIPTION="IRSA role for ACK lambda controller deployment on EKS cluster using Helm charts"

aws iam create-role --role-name "${ACK_CONTROLLER_IAM_ROLE}" --assume-role-policy-document file://trust_lambda.json --description "${ACK_CONTROLLER_IAM_ROLE_DESCRIPTION}"
```

Attach the IAM policy to the IAM role:

```shell
# fetch the recommended inline policy directly from the ACK repo
INLINE_POLICY="$(curl https://raw.githubusercontent.com/aws-controllers-k8s/lambda-controller/main/config/iam/recommended-inline-policy)"

aws iam put-role-policy \
  --role-name "${ACK_CONTROLLER_IAM_ROLE}" \
  --policy-name "ack-recommended-policy" \
  --policy-document "${INLINE_POLICY}"
```

Attach ECR permissions to the controller's IAM role. These are required because the Lambda function is deployed from a container image stored in Amazon ECR.

```shell
aws iam put-role-policy \
  --role-name "${ACK_CONTROLLER_IAM_ROLE}" \
  --policy-name "ecr-permissions" \
  --policy-document file://ecr-permissions.json
```

Associate the IAM role with a Kubernetes service account:

```shell
ACK_CONTROLLER_IAM_ROLE_ARN=$(aws iam get-role --role-name=$ACK_CONTROLLER_IAM_ROLE --query Role.Arn --output text)

export IRSA_ROLE_ARN=eks.amazonaws.com/role-arn=$ACK_CONTROLLER_IAM_ROLE_ARN

kubectl annotate serviceaccount -n $ACK_K8S_NAMESPACE $ACK_K8S_SERVICE_ACCOUNT_NAME $IRSA_ROLE_ARN
```
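The argument passed to `kubectl annotate` is a single `key=value` string: the key is `eks.amazonaws.com/role-arn` and the value is the role ARN. Because IAM role ARNs follow the `arn:aws:iam::<account-id>:role/<role-name>` format, the annotation can also be assembled locally. A minimal sketch with a hypothetical helper, assuming the standard `aws` partition and a role created without a custom IAM path (as the roles in this walkthrough are):

```shell
# Hypothetical helper: build the IRSA annotation for a role in the "aws" partition
# that was created without a custom IAM path.
irsa_annotation() {
  local account_id="$1" role_name="$2"
  printf 'eks.amazonaws.com/role-arn=arn:aws:iam::%s:role/%s\n' "$account_id" "$role_name"
}

# Example usage (requires cluster access):
# kubectl annotate serviceaccount -n ack-system ack-lambda-controller \
#   "$(irsa_annotation "$AWS_ACCOUNT_ID" ack-lambda-controller)"
```

If you re-run these steps and the annotation already exists, `kubectl annotate` refuses to change it unless you add `--overwrite`.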

Repeat the steps for the SQS controller.

ACK SQS Controller

Set the following environment variables:

```shell
ACK_K8S_SERVICE_ACCOUNT_NAME=ack-sqs-controller
ACK_K8S_NAMESPACE=ack-system
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)
```

Create the trust policy for the IAM role:

```shell
read -r -d '' TRUST_RELATIONSHIP <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:${ACK_K8S_NAMESPACE}:${ACK_K8S_SERVICE_ACCOUNT_NAME}"
        }
      }
    }
  ]
}
EOF

echo "${TRUST_RELATIONSHIP}" > trust_sqs.json
```

Create the IAM role:

```shell
ACK_CONTROLLER_IAM_ROLE="ack-sqs-controller"
ACK_CONTROLLER_IAM_ROLE_DESCRIPTION="IRSA role for ACK sqs controller deployment on EKS cluster using Helm charts"

aws iam create-role --role-name "${ACK_CONTROLLER_IAM_ROLE}" --assume-role-policy-document file://trust_sqs.json --description "${ACK_CONTROLLER_IAM_ROLE_DESCRIPTION}"
```

Attach the IAM policy to the IAM role:

```shell
# for the SQS controller, we use the managed policy ARN instead of an inline policy (unlike the Lambda controller)
POLICY_ARN="$(curl https://raw.githubusercontent.com/aws-controllers-k8s/sqs-controller/main/config/iam/recommended-policy-arn)"

aws iam attach-role-policy --role-name "${ACK_CONTROLLER_IAM_ROLE}" --policy-arn "${POLICY_ARN}"
```

Associate the IAM role with a Kubernetes service account:

```shell
ACK_CONTROLLER_IAM_ROLE_ARN=$(aws iam get-role --role-name=$ACK_CONTROLLER_IAM_ROLE --query Role.Arn --output text)

export IRSA_ROLE_ARN=eks.amazonaws.com/role-arn=$ACK_CONTROLLER_IAM_ROLE_ARN

kubectl annotate serviceaccount -n $ACK_K8S_NAMESPACE $ACK_K8S_SERVICE_ACCOUNT_NAME $IRSA_ROLE_ARN
```

Repeat the steps for the DynamoDB controller.

ACK DynamoDB Controller

Set the following environment variables:

```shell
ACK_K8S_SERVICE_ACCOUNT_NAME=ack-dynamodb-controller
ACK_K8S_NAMESPACE=ack-system
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)
```

Create the trust policy for the IAM role:

```shell
read -r -d '' TRUST_RELATIONSHIP <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:${ACK_K8S_NAMESPACE}:${ACK_K8S_SERVICE_ACCOUNT_NAME}"
        }
      }
    }
  ]
}
EOF

echo "${TRUST_RELATIONSHIP}" > trust_dynamodb.json
```

Create the IAM role:

```shell
ACK_CONTROLLER_IAM_ROLE="ack-dynamodb-controller"
ACK_CONTROLLER_IAM_ROLE_DESCRIPTION="IRSA role for ACK dynamodb controller deployment on EKS cluster using Helm charts"

aws iam create-role --role-name "${ACK_CONTROLLER_IAM_ROLE}" --assume-role-policy-document file://trust_dynamodb.json --description "${ACK_CONTROLLER_IAM_ROLE_DESCRIPTION}"
```

Attach the IAM policy to the IAM role:

```shell
# for the DynamoDB controller, we use the managed policy ARN instead of an inline policy (unlike the Lambda controller)
POLICY_ARN="$(curl https://raw.githubusercontent.com/aws-controllers-k8s/dynamodb-controller/main/config/iam/recommended-policy-arn)"

aws iam attach-role-policy --role-name "${ACK_CONTROLLER_IAM_ROLE}" --policy-arn "${POLICY_ARN}"
```

Associate the IAM role with a Kubernetes service account:

```shell
ACK_CONTROLLER_IAM_ROLE_ARN=$(aws iam get-role --role-name=$ACK_CONTROLLER_IAM_ROLE --query Role.Arn --output text)

export IRSA_ROLE_ARN=eks.amazonaws.com/role-arn=$ACK_CONTROLLER_IAM_ROLE_ARN

kubectl annotate serviceaccount -n $ACK_K8S_NAMESPACE $ACK_K8S_SERVICE_ACCOUNT_NAME $IRSA_ROLE_ARN
```

Restart ACK Controller Deployments and Verify the Setup

Restart the ACK service controller Deployments so that the controller Pods pick up the IRSA environment variables.

Get the list of ACK service controller Deployments:

```shell
export ACK_K8S_NAMESPACE=ack-system

kubectl get deployments -n $ACK_K8S_NAMESPACE
```

Restart the Lambda, SQS, and DynamoDB controller Deployments:

```shell
DEPLOYMENT_NAME_LAMBDA=<enter deployment name for lambda controller>
kubectl -n $ACK_K8S_NAMESPACE rollout restart deployment $DEPLOYMENT_NAME_LAMBDA

DEPLOYMENT_NAME_SQS=<enter deployment name for sqs controller>
kubectl -n $ACK_K8S_NAMESPACE rollout restart deployment $DEPLOYMENT_NAME_SQS

DEPLOYMENT_NAME_DYNAMODB=<enter deployment name for dynamodb controller>
kubectl -n $ACK_K8S_NAMESPACE rollout restart deployment $DEPLOYMENT_NAME_DYNAMODB
```
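Instead of copying the Deployment names by hand, they can be picked out of `kubectl get deployments -o name` output, which prints one `deployment.apps/<name>` line per Deployment. A sketch assuming each controller's Deployment name contains its service name (`lambda`, `sqs`, or `dynamodb`); the chart names suggest this, but confirm it against your `kubectl get deployments` output. The helper name is hypothetical.

```shell
# Hypothetical helper: read "deployment.apps/<name>" lines on stdin and print the
# name of the first Deployment whose name contains the given service keyword.
pick_deployment() {
  local service="$1"
  sed -n 's|^deployment\.apps/||p' | grep -- "$service" | head -n 1
}

# Example usage (requires cluster access):
# for svc in lambda sqs dynamodb; do
#   name=$(kubectl get deployments -n "$ACK_K8S_NAMESPACE" -o name | pick_deployment "$svc")
#   kubectl -n "$ACK_K8S_NAMESPACE" rollout restart deployment "$name"
# done
```

Since the ack-system namespace holds only these three controllers, `kubectl -n ack-system rollout restart deployment` (with no name) is a simpler alternative that restarts every Deployment in the namespace.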

List the Pods for these Deployments, then verify that the AWS_WEB_IDENTITY_TOKEN_FILE and AWS_ROLE_ARN environment variables exist in each Pod using the following commands:

```shell
kubectl get pods -n $ACK_K8S_NAMESPACE

LAMBDA_POD_NAME=<enter Pod name for lambda controller>
kubectl describe pod -n $ACK_K8S_NAMESPACE $LAMBDA_POD_NAME | grep "^\s*AWS_"

SQS_POD_NAME=<enter Pod name for sqs controller>
kubectl describe pod -n $ACK_K8S_NAMESPACE $SQS_POD_NAME | grep "^\s*AWS_"

DYNAMODB_POD_NAME=<enter Pod name for dynamodb controller>
kubectl describe pod -n $ACK_K8S_NAMESPACE $DYNAMODB_POD_NAME | grep "^\s*AWS_"
```
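To turn the visual check into a pass/fail check, here is a sketch of a hypothetical helper that reads `kubectl describe pod` output on stdin and succeeds only if both `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE` appear in it:

```shell
# Hypothetical helper: succeed only if `kubectl describe pod` output on stdin
# lists both IRSA environment variables.
has_irsa_env() {
  local desc
  desc="$(cat)"
  printf '%s\n' "$desc" | grep -q '^[[:space:]]*AWS_ROLE_ARN:' &&
    printf '%s\n' "$desc" | grep -q '^[[:space:]]*AWS_WEB_IDENTITY_TOKEN_FILE:'
}

# Example usage (requires cluster access):
# kubectl describe pod -n "$ACK_K8S_NAMESPACE" "$LAMBDA_POD_NAME" | has_irsa_env \
#   && echo "IRSA OK" || echo "IRSA variables missing"
```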

Now that the ACK service controllers have been set up and configured, you can create AWS resources!

Create SQS Queue, DynamoDB Table, and Deploy the Lambda Function

Create SQS Queue

In the file sqs-queue.yaml, replace us-east-1 with your preferred region and AWS_ACCOUNT_ID with your AWS account ID. This is what the ACK manifest for the SQS queue looks like:

```yaml
apiVersion: sqs.services.k8s.aws/v1alpha1
kind: Queue
metadata:
  name: sqs-queue-demo-ack
  annotations:
    services.k8s.aws/region: us-east-1
spec:
  queueName: sqs-queue-demo-ack
  policy: |
    {
      "Statement": [{
        "Sid": "__owner_statement",
        "Effect": "Allow",
        "Principal": {
          "AWS": "AWS_ACCOUNT_ID"
        },
        "Action": "sqs:SendMessage",
        "Resource": "arn:aws:sqs:us-east-1:AWS_ACCOUNT_ID:sqs-queue-demo-ack"
      }]
    }
```
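Both sqs-queue.yaml and function.yaml contain the literal placeholder `AWS_ACCOUNT_ID` and the region `us-east-1`. Rather than editing them by hand, one option is a `sed` substitution. A minimal sketch with a hypothetical helper that prints a rendered copy to stdout and leaves the original file untouched; it replaces every `us-east-1`, so it assumes all resources, including the ECR repository, live in the target region.

```shell
# Hypothetical helper: substitute the AWS_ACCOUNT_ID placeholder and the us-east-1
# region in a manifest, printing the result to stdout.
# Assumes all resources (including the ECR repository) live in the target region.
render_manifest() {
  local file="$1" account_id="$2" region="${3:-us-east-1}"
  sed -e "s/AWS_ACCOUNT_ID/${account_id}/g" -e "s/us-east-1/${region}/g" "$file"
}

# Example usage (kubectl reads the rendered manifest from stdin):
# render_manifest sqs-queue.yaml "$AWS_ACCOUNT_ID" us-west-2 | kubectl apply -f -
```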

Create the queue using the following command:

```shell
kubectl apply -f sqs-queue.yaml

# list the queue
kubectl get queue
```

Create DynamoDB Table

This is what the ACK manifest for the DynamoDB table looks like:

```yaml
apiVersion: dynamodb.services.k8s.aws/v1alpha1
kind: Table
metadata:
  name: customer
  annotations:
    services.k8s.aws/region: us-east-1
spec:
  attributeDefinitions:
    - attributeName: email
      attributeType: S
  billingMode: PAY_PER_REQUEST
  keySchema:
    - attributeName: email
      keyType: HASH
  tableName: customer
```

You can replace the us-east-1 region with your preferred region.

Create a table (named customer) using the following command:

```shell
kubectl apply -f dynamodb-table.yaml

# list the tables
kubectl get tables
```

Build Function Binary and Create Docker Image

```shell
GOARCH=amd64 GOOS=linux go build -o main main.go

aws ecr-public get-login-password --region us-east-1 | docker login --username AWS --password-stdin public.ecr.aws

docker build -t demo-sqs-dynamodb-func-ack .
```

Create a private ECR repository, then tag the Docker image and push it to ECR:

```shell
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin $AWS_ACCOUNT_ID.dkr.ecr.us-east-1.amazonaws.com

aws ecr create-repository --repository-name demo-sqs-dynamodb-func-ack --region us-east-1

docker tag demo-sqs-dynamodb-func-ack:latest $AWS_ACCOUNT_ID.dkr.ecr.us-east-1.amazonaws.com/demo-sqs-dynamodb-func-ack:latest

docker push $AWS_ACCOUNT_ID.dkr.ecr.us-east-1.amazonaws.com/demo-sqs-dynamodb-func-ack:latest
```
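The private ECR registry host follows the pattern `<account-id>.dkr.ecr.<region>.amazonaws.com` in the standard AWS partition, and the same image URI is needed again for `imageURI` in function.yaml. A minimal sketch with hypothetical helpers that derive it once from the account ID, region, and repository name:

```shell
# Hypothetical helpers: build the private ECR registry host and a full image URI.
ecr_registry() {
  local account_id="$1" region="$2"
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$account_id" "$region"
}

ecr_image_uri() {
  local account_id="$1" region="$2" repo="$3" tag="${4:-latest}"
  printf '%s/%s:%s\n' "$(ecr_registry "$account_id" "$region")" "$repo" "$tag"
}

# Example usage (requires Docker and AWS credentials):
# IMAGE_URI=$(ecr_image_uri "$AWS_ACCOUNT_ID" us-east-1 demo-sqs-dynamodb-func-ack)
# docker tag demo-sqs-dynamodb-func-ack:latest "$IMAGE_URI"
# docker push "$IMAGE_URI"
```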

Create an IAM execution role for the Lambda function and attach the required policies:

```shell
export ROLE_NAME=demo-sqs-dynamodb-func-ack-role

ROLE_ARN=$(aws iam create-role \
  --role-name $ROLE_NAME \
  --assume-role-policy-document '{"Version": "2012-10-17","Statement": [{ "Effect": "Allow", "Principal": {"Service": "lambda.amazonaws.com"}, "Action": "sts:AssumeRole"}]}' \
  --query 'Role.[Arn]' --output text)

aws iam attach-role-policy --role-name $ROLE_NAME --policy-arn arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
```

Since the Lambda function needs to write data to DynamoDB and consume messages from SQS, add the following policies to the IAM role:

```shell
aws iam put-role-policy \
  --role-name "${ROLE_NAME}" \
  --policy-name "dynamodb-put" \
  --policy-document file://dynamodb-put.json

aws iam put-role-policy \
  --role-name "${ROLE_NAME}" \
  --policy-name "sqs-permissions" \
  --policy-document file://sqs-permissions.json
```

Create the Lambda Function

Update the function.yaml file with the following info:

- `imageURI`: The URI of the Docker image that you pushed to ECR, e.g., `<AWS_ACCOUNT_ID>.dkr.ecr.us-east-1.amazonaws.com/demo-sqs-dynamodb-func-ack:latest`
- `role`: The ARN of the IAM role that you created for the Lambda function, e.g., `arn:aws:iam::<AWS_ACCOUNT_ID>:role/demo-sqs-dynamodb-func-ack-role`

This is what the ACK manifest for the Lambda function looks like:

```yaml
apiVersion: lambda.services.k8s.aws/v1alpha1
kind: Function
metadata:
  name: demo-sqs-dynamodb-func-ack
  annotations:
    services.k8s.aws/region: us-east-1
spec:
  architectures:
    - x86_64
  name: demo-sqs-dynamodb-func-ack
  packageType: Image
  code:
    imageURI: AWS_ACCOUNT_ID.dkr.ecr.us-east-1.amazonaws.com/demo-sqs-dynamodb-func-ack:latest
  environment:
    variables:
      TABLE_NAME: customer
  role: arn:aws:iam::AWS_ACCOUNT_ID:role/demo-sqs-dynamodb-func-ack-role
  description: A function created by ACK lambda-controller
```

To create the Lambda function, run the following command:

```shell
kubectl create -f function.yaml

# list the function
kubectl get functions
```

Add SQS Trigger Configuration

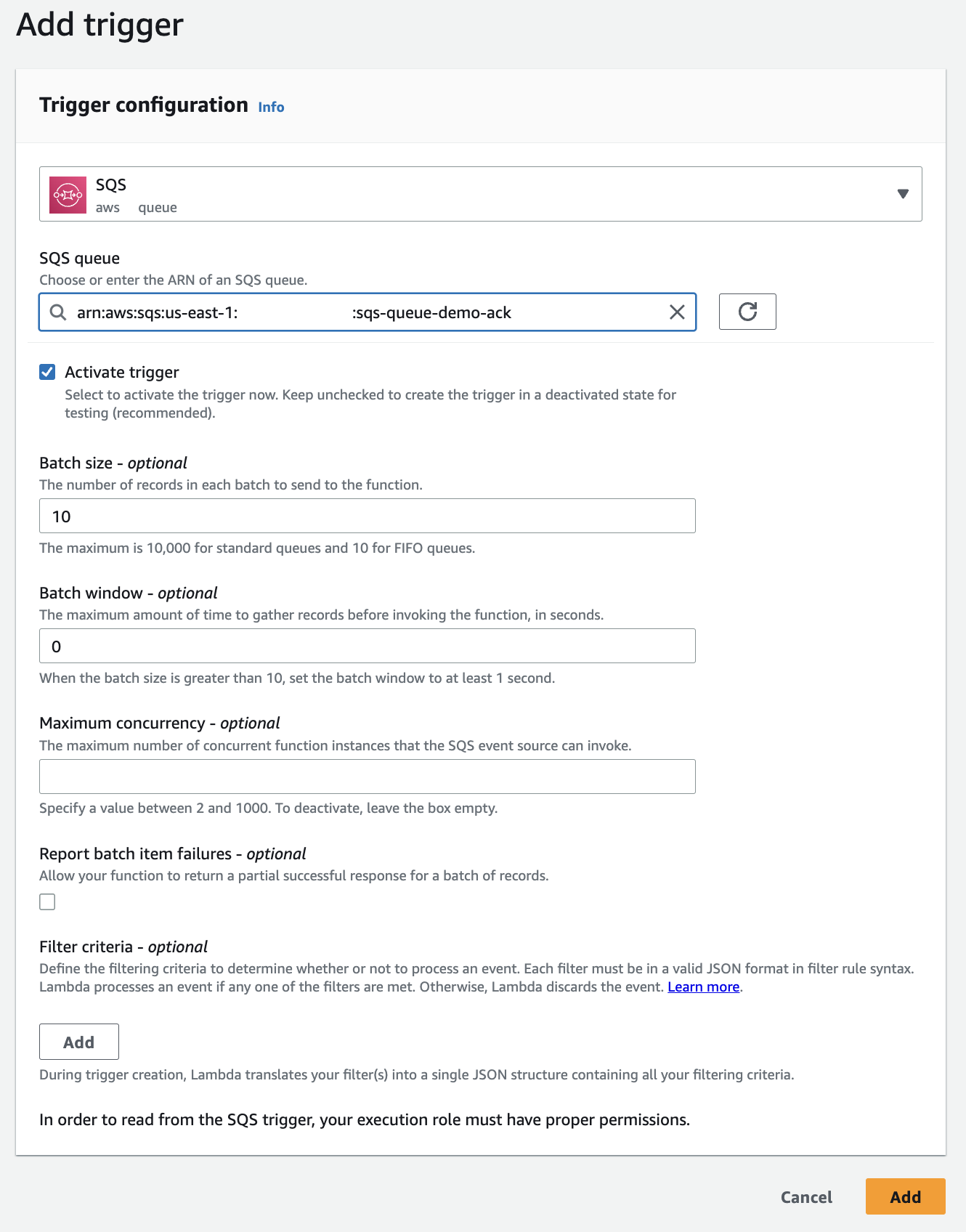

Add an SQS trigger, which invokes the Lambda function whenever a message is sent to the SQS queue.

Here is how to do it using the AWS Console:

Open the Lambda function in the AWS Console and click the Add trigger button. Select SQS as the trigger source, select the SQS queue, and click the Add button.
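If you prefer to stay on the command line, the same trigger can be created with the AWS CLI's `aws lambda create-event-source-mapping` command. Below is a sketch under these assumptions: the function and queue names from this walkthrough, a batch size of 10 (the SQS default), and a hypothetical `AWS_CMD` override (defaulting to `aws`) so you can preview the command with `echo`. For the mapping to work, the Lambda execution role must allow `sqs:ReceiveMessage`, `sqs:DeleteMessage`, and `sqs:GetQueueAttributes` on the queue.

```shell
# Hypothetical helper: build an SQS queue ARN (same format as the Resource in sqs-queue.yaml).
sqs_queue_arn() {
  local region="$1" account_id="$2" queue_name="$3"
  printf 'arn:aws:sqs:%s:%s:%s\n' "$region" "$account_id" "$queue_name"
}

# Hypothetical helper: create an SQS event source mapping (trigger) for a Lambda function.
# AWS_CMD is a hypothetical override; set AWS_CMD=echo to print the command only.
add_sqs_trigger() {
  local function_name="$1" queue_arn="$2" batch_size="${3:-10}"
  ${AWS_CMD:-aws} lambda create-event-source-mapping \
    --function-name "$function_name" \
    --event-source-arn "$queue_arn" \
    --batch-size "$batch_size"
}

# Example usage (requires AWS credentials):
# QUEUE_ARN=$(sqs_queue_arn us-east-1 "$AWS_ACCOUNT_ID" sqs-queue-demo-ack)
# add_sqs_trigger demo-sqs-dynamodb-func-ack "$QUEUE_ARN"
```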

Now you are ready to try out the end-to-end solution!

Test the Application

Send a few messages to the SQS queue. For the purposes of this demo, you can use the AWS CLI:

```shell
export SQS_QUEUE_URL=$(kubectl get queues/sqs-queue-demo-ack -o jsonpath='{.status.queueURL}')

aws sqs send-message --queue-url $SQS_QUEUE_URL --message-body user1@foo.com --message-attributes 'name={DataType=String, StringValue="user1"}, city={DataType=String,StringValue="seattle"}'

aws sqs send-message --queue-url $SQS_QUEUE_URL --message-body user2@foo.com --message-attributes 'name={DataType=String, StringValue="user2"}, city={DataType=String,StringValue="tel aviv"}'

aws sqs send-message --queue-url $SQS_QUEUE_URL --message-body user3@foo.com --message-attributes 'name={DataType=String, StringValue="user3"}, city={DataType=String,StringValue="new delhi"}'

aws sqs send-message --queue-url $SQS_QUEUE_URL --message-body user4@foo.com --message-attributes 'name={DataType=String, StringValue="user4"}, city={DataType=String,StringValue="new york"}'
```
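The four commands differ only in the user name and city, so they can be wrapped in a helper. A sketch with a hypothetical `send_user` function that keeps the same message body (`<name>@foo.com`) and attribute shorthand as the commands above; `AWS_CMD` is a hypothetical override (defaulting to `aws`) that you can set to `echo` to preview the command.

```shell
# Hypothetical helper: send one message whose body is the user's email and whose
# attributes carry the name and city, mirroring the commands above.
# AWS_CMD is a hypothetical override; set AWS_CMD=echo to print instead of send.
send_user() {
  local queue_url="$1" name="$2" city="$3"
  ${AWS_CMD:-aws} sqs send-message --queue-url "$queue_url" \
    --message-body "${name}@foo.com" \
    --message-attributes "name={DataType=String,StringValue=\"${name}\"},city={DataType=String,StringValue=\"${city}\"}"
}

# Example usage (requires AWS credentials and SQS_QUEUE_URL from above):
# send_user "$SQS_QUEUE_URL" user1 seattle
# send_user "$SQS_QUEUE_URL" user2 "tel aviv"
```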

The Lambda function should be invoked and the data should be written to the DynamoDB table. Check the DynamoDB table using the CLI (or AWS console):

```shell
aws dynamodb scan --table-name customer
```

Clean Up

After you have explored the solution, you can clean up the resources by running the following commands:

Delete the SQS queue, DynamoDB table, and Lambda function:

```shell
kubectl delete -f sqs-queue.yaml
kubectl delete -f function.yaml
kubectl delete -f dynamodb-table.yaml
```

To uninstall the ACK service controllers, run the following commands:

```shell
export ACK_SYSTEM_NAMESPACE=ack-system

helm ls -n $ACK_SYSTEM_NAMESPACE

helm uninstall -n $ACK_SYSTEM_NAMESPACE <enter name of the sqs chart>
helm uninstall -n $ACK_SYSTEM_NAMESPACE <enter name of the lambda chart>
helm uninstall -n $ACK_SYSTEM_NAMESPACE <enter name of the dynamodb chart>
```
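Because the charts were installed with `--generate-name`, their release names have to be looked up first. A sketch of a hypothetical helper that reads release names on stdin (for example from `helm ls -n ack-system -q`, which prints only the names) and uninstalls each one. It assumes nothing else is installed in ack-system, and `HELM_CMD` is a hypothetical override (defaulting to `helm`) for previewing the commands with `echo`.

```shell
# Hypothetical helper: uninstall every Helm release whose name is read from stdin.
# HELM_CMD is a hypothetical override; set HELM_CMD=echo to print the commands only.
uninstall_releases() {
  local namespace="$1" release
  while IFS= read -r release; do
    [ -n "$release" ] || continue   # skip blank lines
    # redirect stdin so the command cannot consume the remaining release names
    ${HELM_CMD:-helm} uninstall -n "$namespace" "$release" < /dev/null
  done
}

# Example usage (assumes ack-system contains only the three ACK charts):
# helm ls -n ack-system -q | uninstall_releases ack-system
```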

Conclusion and Next Steps

In this post, we have seen how to use AWS Controllers for Kubernetes to create a Lambda function, an SQS queue, and a DynamoDB table, and wire them together to deploy a solution. Almost all of this was done using Kubernetes! I encourage you to try out other AWS services supported by ACK. Here is a complete list.

Happy building!

Published at DZone with permission of Abhishek Gupta, DZone MVB. See the original article here.
