What Developers Need to Know About Table Geo-Partitioning

We’ll experiment with the table geo-partitioning feature of PostgreSQL that automatically distributes application data across multiple locations.

Join the DZone community and get the full member experience.

Join For FreeIn the first two articles of the table partitioning series, we reviewed how the partition pruning and maintenance capabilities of PostgreSQL can speed up and facilitate the design of our applications. In this final post in the series, we’ll experiment with the table geo-partitioning feature that automatically distributes application data across multiple locations.

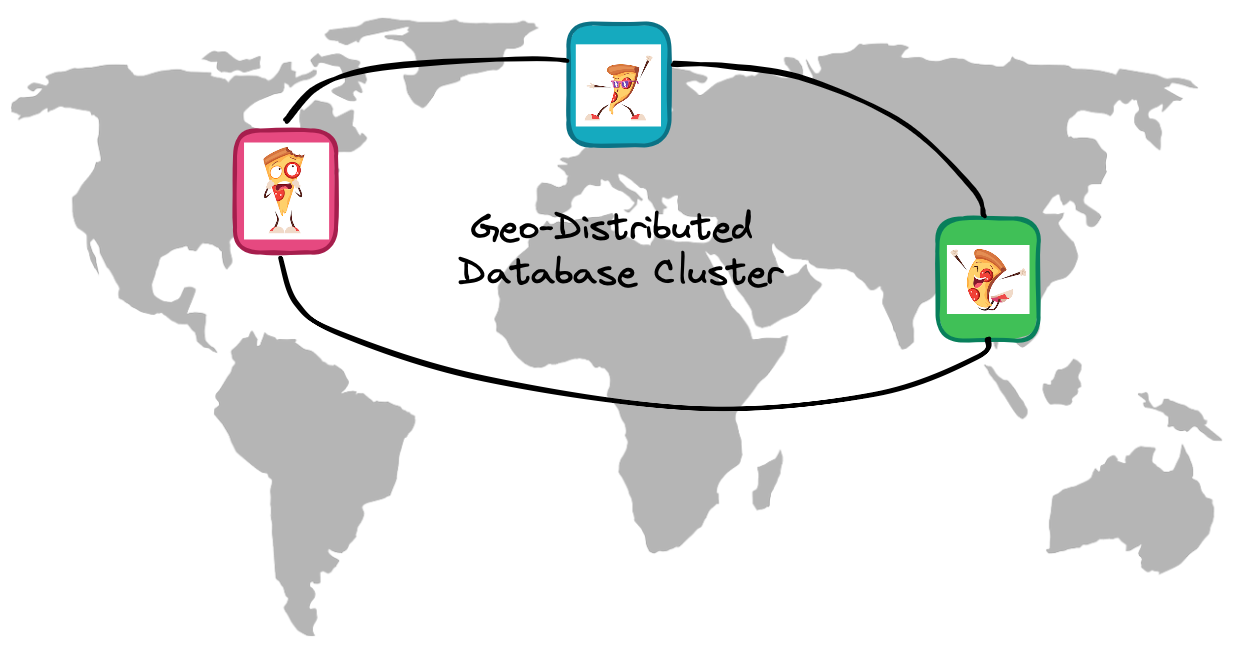

We’ll continue using a large pizza chain as an example. This pizza chain has branches in New York, London, and Hong Kong. It also uses a single centralized database cluster to track the orders of its delighted customers.

This time, the geo-distributed database cluster runs on YugabyteDB. For those who are not familiar with this database yet, it’s a Postgres-compliant distributed SQL database that distributes data evenly across a cluster of nodes. Additionally, it scales both read and write operations by utilizing all the cluster resources. YugabyteDB supports several multi-region deployments; however, in this article, we’ll focus on the geo-partitioned option.

Geo-Partitioned Table

Let’s take the PizzaOrders table again but now partition it by the Region column. The table tracks the order’s progress (introduced in the first article), and the newly added Region column defines a location of an order:

Orders_US: this partitioned table is for the orders placed in New York and other cities in the US region.Orders_EU: the branch in London will keep all its orders in this partition. Once the pizza chain opens new locations in Europe, orders from those branches will go to theOrders_EUas well.Orders_APAC: when Hong Kong customers order a pizza, the order goes to this partition.

As you can see, it’s straightforward to split the PizzaOrders into partitions that will be distributed by the database across distant geographical locations. But, before we get to the step-by-step instructions, let’s provide some rationale for this type of partitioning:

It’s good for performance and user experience: The speed and responsiveness of your pizza application will be similar for all the customers, regardless of their whereabouts. For instance, orders from Hong Kong will go through the

Orders_APACtable, where data is stored on the database nodes in APAC.It’s good for data regulation: All the orders and personal data of European customers won’t leave the boundaries of the EU. The data that belongs to the

Orders_EUpartition will be located on database nodes in Europe which satisfies the GDPR requirements.It’s good from a business perspective: The pizza chain headquarters has full control of a single database cluster that can be scaled in specific geographies once the load increases. Also, the app can easily query and join geo-distributed data through a single database endpoint.

Starting the Geo-Partitioned Cluster

Now, let’s experiment with geo-partitioned tables. First, deploy a geo-partitioned instance of YugabyteDB. Here you have two options.

Option #1: You can deploy and configure a geo-partitioned cluster through the YugabyteDB Managed interface:

Option #2: You can simulate a geo-partitioned cluster on your local machine with YugabyteDB open source and Docker.

mkdir ~/yb_docker_data

docker network create yugabytedb_network

# Starting a node in the US

docker run -d --name yugabytedb_node_us --net yugabytedb_network -p 7001:7000 -p 9000:9000 -p 5433:5433 \

-v ~/yb_docker_data/node_us:/home/yugabyte/yb_data --restart unless-stopped \

yugabytedb/yugabyte:latest bin/yugabyted start --listen=yugabytedb_node_us \

--base_dir=/home/yugabyte/yb_data --daemon=false \

--master_flags="placement_zone=A,placement_region=US,placement_cloud=CLOUD" \

--tserver_flags="placement_zone=A,placement_region=US,placement_cloud=CLOUD"

# Starting a node in Europe

docker run -d --name yugabytedb_node_eu --net yugabytedb_network \

-v ~/yb_docker_data/node_eu:/home/yugabyte/yb_data --restart unless-stopped \

yugabytedb/yugabyte:latest bin/yugabyted start --join=yugabytedb_node_us --listen=yugabytedb_node_eu \

--base_dir=/home/yugabyte/yb_data --daemon=false \

--master_flags="placement_zone=A,placement_region=EU,placement_cloud=CLOUD" \

--tserver_flags="placement_zone=A,placement_region=EU,placement_cloud=CLOUD"

# Starting a node in APAC

docker run -d --name yugabytedb_node_apac --net yugabytedb_network \

-v ~/yb_docker_data/node_apac:/home/yugabyte/yb_data --restart unless-stopped \

yugabytedb/yugabyte:latest bin/yugabyted start --join=yugabytedb_node_us --listen=yugabytedb_node_apac \

--base_dir=/home/yugabyte/yb_data --daemon=false \

--master_flags="placement_zone=A,placement_region=APAC,placement_cloud=CLOUD" \

--tserver_flags="placement_zone=A,placement_region=APAC,placement_cloud=CLOUD"

# Updating the nodes’ placement

docker exec -i yugabytedb_node_us \

yb-admin -master_addresses yugabytedb_node_us:7100,yugabytedb_node_eu:7100,yugabytedb_node_apac:7100 \

modify_placement_info CLOUD.US.A,CLOUD.EU.A,CLOUD.APAC.A 3We’ll use the latter option that starts a three-node cluster with one node in each geographic location:

yugabytedb_node_us: the node is placed in this locationplacement_region=USand will keep data of theOrders_USpartition.yugabytedb_node_eu: the node is located in Europe (placement_region=EU) and will store orders from theOrders_EUpartition.yugabytedb_node_apac: as you can guess, this node is for the orders of the APAC customers. Thus, it’s placed in theplacement_region=APACregion.

Once started, you can connect to the database instance using the following psql command:

psql -h 127.0.0.1 -p 5433 yugabyte -U yugabyte -wCreating Tablespaces

Tablespaces is a handy PostgreSQL feature. It allows you to define locations on the filesystem where the files representing a database object are stored. As a Postgres-compliant database, YugabyteDB supports this feature and lets you use the tablespaces for geo-partitioning needs.

So, your next step is to create tablespaces for the geo-partitioned PizzaOrders table:

CREATE TABLESPACE us_tablespace WITH (

replica_placement='{"num_replicas": 1, "placement_blocks":

[{"cloud":"CLOUD","region":"US","zone":"A","min_num_replicas":1}]}'

);

CREATE TABLESPACE eu_tablespace WITH (

replica_placement='{"num_replicas": 1, "placement_blocks":

[{"cloud":"CLOUD","region":"EU","zone":"A","min_num_replicas":1}]}'

);

CREATE TABLESPACE apac_tablespace WITH (

replica_placement='{"num_replicas": 1, "placement_blocks":

[{"cloud":"CLOUD","region":"APAC","zone":"A","min_num_replicas":1}]}'

);These commands create a tablespace for each location: the US, EU, and APAC. Each tablespace gets assigned to a node with similar placement information. For instance, the us_tablespace will be assigned to the yugabytedb_node_us node that you started earlier, as long as the placement info of that node (placement_zone=A,placement_region=US,placement_cloud=CLOUD) corresponds to the placement of us_tablespace.

Creating Partitions

The final configuration step is to get the PizzaOrders table split into three previously discussed partitions: Orders_US, Orders_EU, and Orders_APAC. You can use the commands below to do this:

CREATE TYPE status_t AS ENUM('ordered', 'baking', 'delivering', 'yummy-in-my-tummy');

CREATE TABLE PizzaOrders

(

order_id int,

order_status status_t,

order_time timestamp,

region text,

PRIMARY KEY (order_id, region)

) PARTITION BY LIST (region);

CREATE TABLE Orders_US

PARTITION OF PizzaOrders

FOR VALUES IN ('US') TABLESPACE us_tablespace;

CREATE TABLE Orders_EU

PARTITION OF PizzaOrders

FOR VALUES IN ('EU') TABLESPACE eu_tablespace;

CREATE TABLE Orders_APAC

PARTITION OF PizzaOrders

FOR VALUES IN ('APAC') TABLESPACE apac_tablespace;In fact, a combination of several capabilities enables geo-partitioning in YugabyteDB:

- First, the original table (

PizzaOrders) is partitioned using the LIST Partitioning method (PARTITION BY LIST (region)). - Second, each partition is assigned to one of the tablespaces. For instance, in the command above, the

Orders_APACpartition is assigned to the TABLESPACEapac_tablespace. - Lastly, each tablespace with its partitions is automatically mapped to (i.e., placed on) YugabyteDB nodes from the corresponding geography.

Alright, now let’s run this command just to make sure that the PizzaOrders table was, in fact, partitioned properly:

\d+ PizzaOrders;

Partitioned table "public.pizzaorders"

Column | Type | Collation | Nullable | Default | Storage | Stats target | Description

--------------+-----------------------------+-----------+----------+---------+----------+--------------+-------------

order_id | integer | | not null | | plain | |

order_status | status_t | | | | plain | |

order_time | timestamp without time zone | | | | plain | |

region | text | | not null | | extended | |

Partition key: LIST (region)

Indexes:

"pizzaorders_pkey" PRIMARY KEY, lsm (order_id HASH, region ASC)

Partitions: orders_apac FOR VALUES IN ('APAC'),

orders_eu FOR VALUES IN ('EU'),

orders_us FOR VALUES IN ('US')Testing Table Geo-Partitioning

You are now ready to do the final test. Go ahead and add a few orders into the database. As of now, put some data in the US-based pizza chain:

INSERT INTO PizzaOrders VALUES

(1, 'yummy-in-my-tummy', '2021-12-27 22:00:00', 'US'),

(2, 'yummy-in-my-tummy', '2022-05-15 13:00:00', 'US'),

(6, 'baking', '2022-06-24 8:45:00', 'US'),

(7, 'baking', '2022-06-24 9:00:00', 'US'); Make sure the data got placed in the Orders_US partition (refer to the tableoid column in the result):

SELECT tableoid::regclass,* from PizzaOrders

ORDER BY order_id;

tableoid | order_id | order_status | order_time | region

-----------+----------+-------------------+---------------------+--------

orders_us | 1 | yummy-in-my-tummy | 2021-12-27 22:00:00 | US

orders_us | 2 | yummy-in-my-tummy | 2022-05-15 13:00:00 | US

orders_us | 6 | baking | 2022-06-24 08:45:00 | US

orders_us | 7 | baking | 2022-06-24 09:00:00 | USNext, attempt to put pizza orders in but for customers from London (EU region) and Hong Kong (APAC region):

INSERT INTO PizzaOrders VALUES

(3, 'yummy-in-my-tummy', '2022-05-23 10:00:00', 'EU'),

(4, 'yummy-in-my-tummy', '2022-06-23 19:00:00', 'APAC'),

(5, 'delivering', '2022-06-24 8:30:00', 'APAC'),

(8, 'ordered', '2022-06-24 10:00:00', 'EU');

ERROR: Illegal state: Nonlocal tablet accessed in local transaction: tablet 49fb2ab17298424096d215f5f1f32515If you’ve been following these instructions, you will receive the above error message. This happens because our psql session is opened through a YugabyteDB node deployed in the US (yugabytedb_node_us). And, by default, YugabyteDB doesn’t let you perform transactions that span across several geographies. So what are your options? You can connect to the nodes in EU and APAC and insert data from there. Or, you can toggle the force_global_transaction on and insert the data from the US-based node:

SET force_global_transaction = TRUE;

INSERT INTO PizzaOrders VALUES

(3, 'yummy-in-my-tummy', '2022-05-23 10:00:00', 'EU'),

(4, 'yummy-in-my-tummy', '2022-06-23 19:00:00', 'APAC'),

(5, 'delivering', '2022-06-24 8:30:00', 'APAC'),

(8, 'ordered', '2022-06-24 10:00:00', 'EU'); Once the command succeeds, confirm that the orders are placed properly across the partitions and respective geographies (again refer to the tableoid column in the output):

SELECT tableoid::regclass,* from PizzaOrders

ORDER BY order_id;

tableoid | order_id | order_status | order_time | region

-------------+----------+-------------------+---------------------+--------

orders_us | 1 | yummy-in-my-tummy | 2021-12-27 22:00:00 | US

orders_us | 2 | yummy-in-my-tummy | 2022-05-15 13:00:00 | US

orders_eu | 3 | yummy-in-my-tummy | 2022-05-23 10:00:00 | EU

orders_apac | 4 | yummy-in-my-tummy | 2022-06-23 19:00:00 | APAC

orders_apac | 5 | delivering | 2022-06-24 08:30:00 | APAC

orders_us | 6 | baking | 2022-06-24 08:45:00 | US

orders_us | 7 | baking | 2022-06-24 09:00:00 | US

orders_eu | 8 | ordered | 2022-06-24 10:00:00 | EU

Wrapping Up

Alright, this is more than enough for starters when it comes to table geo-partitioning. Check out this article if you’d like to learn how to add new regions to a pre-existing geo-partitioned cluster or how to withstand region-level outages.

This article wraps up our series dedicated to the topic of table partitioning for application developers. As linked at the beginning of the article, make sure to check out the first two posts on partition pruning and partition maintenance.

Have fun building these apps, and stay tuned for some new content related to databases, distributed systems, and Java!

Opinions expressed by DZone contributors are their own.

Comments