What Is “Real-Time Debugging”?

For advanced debugging, consider the advantages and challenges of real-time debugging, which aims to minimize the debugger's impact on the system being debugged.


Questions from students or readers of my articles are a great source for all kinds of articles. Here is the ‘question of this week’: “What is real-time debugging?”

It’s a good question, because both ‘real-time’ and ‘debugging’ were topics in the lectures this week. So this question gives me the opportunity to combine the two, and I love it!

Debugging is probably the part of development where the most time is spent, for many reasons, and it is usually the part of the development process that can be planned the least. If you are looking for an overview of different debugging techniques, or if you are wondering what ‘real-time debugging’ means, then I hope this article is useful for you.

‘Real-time debugging’ has two parts:

- Debugging

- Real-time

Both terms deserve a deep-dive.

What Is ‘Debugging’?

I’m still amazed at how many students are not familiar with the concept and benefits of ‘debugging’. Wikipedia defines debugging as:

“Debugging is the process of finding and resolving of defects that prevent correct operation of computer software or a system.”

And indeed it can be something like finding and removing a moth from a relay:

The first actual case of a bug being found

If the system is correct and operates correctly, then no debugging is needed. But how many systems are known to be correct in all cases? The software I write is rarely correct the first time, so I use a debugger to inspect the system, find ‘bugs’, and correct them.

There are many ways to ‘debug’ and inspect the system:

- LED blinking: I can use an LED in my code to show the status of the system and, by watching it blink, gain knowledge about what is going on. That certainly works for very simple and slow computer systems.

- Pin toggling: For faster systems, I can record the LED or microcontroller pin with an oscilloscope or logic analyzer. That allows me to inspect faster signals, and I can pack more information into the signal by, say, sending variable content in a binary format or using multiple pins/signals.

- Printf debugging: I can get more information out of the system by using a communication channel, say sending and receiving text over a UART or USB connection. This is what I refer to as ‘printf debugging’: by sending and receiving text, I can inspect the target system and get visibility into its behavior.

- Stop-mode debugging: The above approaches inspect a system that is constantly running. Another approach is to halt the target so I can inspect it. That way, I can inspect multiple things, and when I’m finished, I let the system run again. This is what I refer to as ‘stop-mode debugging’. For this, the microcontroller needs special built-in hardware that allows halting the system for inspection. A commonly used technology for this is JTAG (or SWD on ARM cores, which is similar but needs fewer pins). This usually requires a special hardware ‘debug probe’ connected to the JTAG/SWD pins of the microcontroller; typical debug probes in the ARM Cortex world are the P&E Multilink or the Segger J-Link. Another approach without JTAG/SWD is ‘monitor-based debugging’: a small ‘monitor’ program runs on the target, and I can send commands to it. That ‘monitor’ program then allows me to stop/step/etc. the program. For both the ‘monitor’ and the ‘JTAG’ approach, I need some kind of ‘controller’ program on the host. With that special software on the host computer (a ‘debugger’), I have a tool to start or stop execution, step through my code, and inspect the variables and code running on the target system. That program can be a command-line tool (like command-line gdb, see Command Line Programming and Debugging with GDB), or I can use a graphical program like Eclipse or the SEGGER Ozone debugger (see First Steps with Ozone and the Segger J-Link Trace Pro).

- Software trace: Sometimes ‘stop-mode’ debugging is not enough, because stopping the system might change its behavior, or stopping might not be possible at all (e.g. I cannot stop the engine of a car while driving on the highway). For this, techniques like ‘tracing’ are used. Tracing means that the device under test constantly sends (‘streams’) debugging information to the attached host system without being stopped. Streaming can be purely software-based (see Tutorial: FreeMASTER Visualization and Run-Time Debugging) or use features of the running operating system (see FreeRTOS Continuous Trace Streaming). There is also the SEGGER J-Scope for this purpose.

- Hardware trace: Because ‘software’ streaming might affect the behavior of the running software, a ‘hardware trace’ solution uses dedicated (and sometimes expensive) hardware and requires a more expert understanding of the system (see Tutorial: Getting ETM Instruction Trace with NXP Kinetis ARM Cortex-M4F).

- Live variable debugging: ‘Stop-mode debugging’ allows me to step or stop the target, but many times I want to see how things change over time. With ‘live variables’, the normal stop-mode debugger is extended with the ability to send and receive data while the target is running. An example of this is described in P&E ARM Cortex-M Debugging with FreeRTOS Thread Awareness and Real Time Expressions for GDB and Eclipse.
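To make the pin-toggling idea more concrete, here is a minimal C sketch that sends a variable's value MSB-first over two debug pins (data and clock), so a logic analyzer can decode it like a synchronous serial signal. The names `pin_write()`, `DBG_DATA_PIN`, `DBG_CLK_PIN` and `analyzer_decode_byte()` are hypothetical: on a real microcontroller, `pin_write()` would be a GPIO register write, while here it models a logic analyzer that samples the data pin on each rising clock edge, so the sketch can run on a host.

```c
#include <stdint.h>
#include <stddef.h>

enum { DBG_DATA_PIN = 0, DBG_CLK_PIN = 1 };

/* Host-side model of two GPIO pins plus a logic analyzer that samples
   the data pin on every rising edge of the clock pin (hypothetical). */
static int pin_level[2];
static uint8_t captured[64];
static size_t captured_bits = 0;

static void pin_write(int pin, int level) {
    int rising = (pin == DBG_CLK_PIN) && !pin_level[pin] && level;
    pin_level[pin] = level ? 1 : 0;
    if (rising && captured_bits < sizeof captured) {
        captured[captured_bits++] = (uint8_t)pin_level[DBG_DATA_PIN];
    }
}

/* Target side: put each bit on the data pin, then pulse the clock pin. */
void debug_pins_send_byte(uint8_t value) {
    for (int bit = 7; bit >= 0; bit--) {
        pin_write(DBG_DATA_PIN, (value >> bit) & 1u);
        pin_write(DBG_CLK_PIN, 1);
        pin_write(DBG_CLK_PIN, 0);
    }
}

/* Analyzer side: rebuild a byte from 8 captured samples, MSB first. */
uint8_t analyzer_decode_byte(size_t first_bit) {
    uint8_t v = 0;
    for (size_t i = 0; i < 8; i++) {
        v = (uint8_t)((v << 1) | captured[first_bit + i]);
    }
    return v;
}
```

Calling `debug_pins_send_byte(0xA5)` produces eight clock pulses, and `analyzer_decode_byte(0)` recovers `0xA5` from the captured samples. Each byte costs only a few pin writes, which is far less intrusive than formatting and sending text, but the amount of data that fits through two pins stays small.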

All the above debugging techniques have an impact on the device under debug, which is commonly referred to as ‘intrusiveness’. Debugging might be more or less intrusive or disruptive for the device under test. For example, stop-mode debugging brings the system to a full stop, which might affect other systems around the system under debug (think about halting the engine of a car while driving). Printf()-style debugging, on the other hand, affects the resource usage and timing of the system, because the device under test needs time to output all the data. Because debugging might impact the timing of the system, we have to take a closer look at the ‘time’ aspect.

What Is ‘Real-Time’?

The term ‘real-time’ is used in many ways (see Wikipedia). ‘Real-time’ is an attribute of a system. In the context of embedded or computer systems, a ‘real-time system’ can be defined by four conditions:

1. Correctness: The correct result.

2. Timeliness: At the correct time.

3. Independent of current system load.

4. In a deterministic and foreseeable way.

Points 1 and 2 are the most important ones; points 3 and 4 are logical consequences of the first two. The definition comes from system theory, but for our question of ‘real-time debugging’, the debugger, or the device under test with the debugger attached to it, is ‘the system’.

Condition 1 (the correct result) is simple: if the system does not provide the correct result, the system is not correct and fails. What is ‘correct’ needs to be defined for the particular system. For example, the clock in “Making-Of Sea Shell Sand Clock” has to write the correct time.

Condition 2 (at the correct time) means that the correct result from condition 1 needs to be delivered at the specified time or within the specified time frame. ‘Real-time’ does not mean ‘as fast as possible’; it means ‘at the correct time’, which includes ‘within the correct timing boundaries’, either relative or absolute.

There are two ‘flavors’ of real-time: hard real-time and soft real-time. For hard real-time, the system has to meet condition 2 100% of the time; otherwise, the system is not considered correct. For example, a hard real-time airbag system has to deploy the airbag correctly in 100% of all cases: it is *not* ok that the airbag sometimes deploys too early, too late, or not at all (you certainly would not use such a system, right?). In a soft real-time system, it is acceptable that the system sometimes violates the ‘correct time’ condition, such as a video streaming system delaying some frames or dropping a few of them altogether. The system is still considered ‘correct’ (you would still use it to watch a video), but it would be considered ‘degraded’ or ‘not so good’. For the sand clock project mentioned above, the time needs to be written every minute. It is a soft real-time system, as it would be ok (but not good) if the time were written several seconds too late.
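The difference between the two flavors can be expressed as a check of a measured response time against a timing window. The following C sketch is illustrative only: the `rt_window_t` type, the `rt_check()` function and any microsecond values used with it are assumptions, not taken from a real system. A response outside the window (too early or too late) makes a hard real-time system incorrect, while it only degrades a soft real-time system.

```c
#include <stdint.h>
#include <stdbool.h>

typedef enum { RT_OK, RT_DEGRADED, RT_FAILED } rt_status_t;

/* Hypothetical timing requirement: the result must arrive inside
   [earliest_us, latest_us], measured relative to the triggering event. */
typedef struct {
    bool hard;             /* true: any miss makes the system incorrect */
    uint32_t earliest_us;  /* earlier than this is 'too early' */
    uint32_t latest_us;    /* later than this is 'too late' */
} rt_window_t;

/* Classify one measured response time against its window. */
rt_status_t rt_check(rt_window_t w, uint32_t response_us) {
    bool on_time = response_us >= w.earliest_us && response_us <= w.latest_us;
    if (on_time) {
        return RT_OK;
    }
    /* Hard real-time: the system fails. Soft real-time: it is degraded. */
    return w.hard ? RT_FAILED : RT_DEGRADED;
}
```

For example, with a window of 10 to 20 µs, a response after 25 µs is `RT_FAILED` for a hard requirement but only `RT_DEGRADED` for a soft one; a response after 5 µs is also a miss, matching the ‘too early’ case of the airbag.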

Condition 3 (independent of current system load) is a logical result of the previous two points for computer systems. A (single-core) computer or microcontroller can only do one thing at a time. However, the computer system is attached to the ‘real world’ in which we live, and in this real world, things happen in parallel. For a computer system, dealing with that ‘real’ world means dividing its processing time among multiple things (‘multi-tasking’).

This condition basically means that regardless of how many things the computer system is doing, it needs to meet the timing and correctness conditions. Condition 3 serves as a ‘helper’ condition to qualify and quantify the scenarios under which the correct result has to be delivered at the correct time. Take the sand clock as an example: it runs an operating system on a tinyK20 and can communicate over USB with the outside world while it constantly checks the internal real-time clock. Regardless of what the system is doing, it has to meet the ‘correct result at the correct time’ condition.

Condition 4 (in a deterministic and foreseeable way) is yet another supporting condition to meet conditions 1-3. It basically means that for every given state of the system, the next state is determined: it is foreseeable and defined what comes next. In other words, the system does not behave randomly. This supporting condition ensures that the system can be fully described and proven, in a ‘mathematical’ way, to be correct and to meet the timing conditions.

‘Real-Time’ + ‘Debugging’ = ‘Real-Time Debugging’?

Combining ‘real-time’ with ‘debugging’ means that the debugger enables me to inspect and debug a device's real-time capabilities under ‘real-time conditions’:

- Correctness: I can use the debugger to verify the correctness of the device, such as inspecting the device internal status, inspecting its internal and external signals and states, etc.

- Timeliness: Debugging gives me visibility into the concurrent or ‘quasi-concurrent’ events on the system. I can verify the timing and timing boundaries of the firmware and the device under test, and inspect the absolute and relative timing of the system. This tells me how the device timing relates to real-world time and timing.

- Independent of current system load: Debugging allows me to verify and see the system load of the device under debug. Using the debugger does not impact the timeliness or behavior of the device, or the effect is very small.

- In a deterministic and foreseeable way: The debugger can be used to verify the determinism of the device and does not affect that determinism. For example, the debugger does not read registers where a read could cause a side effect. The impact of the debugger on the target is well-defined: I have control over what the debugger does and how it affects the target.

Or in other words, aside from target correctness verification, the debugger needs to be able to correlate the system/device timing and behavior with the ‘real world’ timing or the ‘real-time’.
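One common way to observe system load with little intrusion is idle-loop counting: the idle task increments a counter, and the count reached in a fixed time window is compared with the count reached in the same window when the system is otherwise unloaded. A debugger with live view can read such a counter while the target keeps running. The C sketch below only shows the arithmetic; `cpu_load_percent()` and the calibration value `idle_max` are hypothetical names, and this is not necessarily how any tool mentioned in this article computes load.

```c
#include <stdint.h>

/* Estimate CPU load in percent from idle-loop counts in one time window.
   idle_count: increments counted by the idle loop in this window.
   idle_max:   increments counted in an equal window with no other load. */
uint32_t cpu_load_percent(uint32_t idle_count, uint32_t idle_max) {
    if (idle_max == 0) {
        return 0;  /* not calibrated yet: no meaningful estimate */
    }
    if (idle_count >= idle_max) {
        return 0;  /* fully idle (or measurement noise above calibration) */
    }
    /* Busy share = 100% minus the idle share; 64-bit avoids overflow. */
    return 100u - (uint32_t)(((uint64_t)idle_count * 100u) / idle_max);
}
```

If the idle loop reaches 750 counts in a window where it reaches 1000 counts without load, `cpu_load_percent(750, 1000)` returns 25.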

Most real-time systems have timing boundaries in the millisecond or microsecond range. Such timing cannot be handled with purely software-based or ‘printf()’-style debugging, as that method is simply too slow and cannot keep up with high-resolution timing. So I do not consider ‘printf()’-style debugging suitable for real-time debugging. ‘Pin toggling’ and ‘LED blinking’ can be used to some extent with an external hardware probe (oscilloscope/logic analyzer) capturing and measuring the signal, but the amount of data is very limited.
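A quick back-of-the-envelope calculation shows why text output is too slow for microsecond timing. Assuming a UART with 8N1 framing (1 start bit, 8 data bits, 1 stop bit, so 10 bits on the wire per character) and no gaps between characters, the time on the wire is chars × 10 / baud. This C helper is a sketch of that formula; the function name is hypothetical.

```c
#include <stdint.h>

/* Time in microseconds to shift `chars` characters out of a UART.
   Assumes 8N1 framing (10 bits per character) and back-to-back characters. */
uint32_t uart_tx_time_us(uint32_t chars, uint32_t baud) {
    const uint64_t bits = (uint64_t)chars * 10u;
    /* Round up: a partially transmitted character still occupies the line. */
    return (uint32_t)((bits * 1000000u + baud - 1u) / baud);
}
```

A 40-character log line at 115200 baud needs `uart_tx_time_us(40, 115200)` = 3473 µs, roughly 3.5 ms, which is more than three orders of magnitude longer than a microsecond timing boundary. Buffered or DMA-driven output reduces the CPU time spent waiting, but the information still reaches the host that late, and formatting the text costs extra cycles on top.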

Usually, systems are much more complex. At a high level, I can use software tracing tools like Segger SystemView, which gives visibility into the system and interrupt timing. With this, I can correlate the system timing and behavior with ‘real time’.

Another tool for this is Percepio FreeRTOS+Trace, which records RTOS and application events: I can see how things work quasi-parallel, with timing down to microseconds.
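The core idea behind such recorders is to store compact binary events with timestamps instead of formatted text. The following C sketch is a hypothetical minimal recorder, not the API of Segger SystemView or Percepio FreeRTOS+Trace: `trace_record()` writes a timestamp, an event id and an argument into a RAM ring buffer, overwriting the oldest entries when it is full, and `trace_get()` reads the entries back oldest-first, as a debug probe or host tool would. It is not interrupt-safe as written; a real recorder would protect `trace_record()` with a critical section.

```c
#include <stdint.h>
#include <stddef.h>

#define TRACE_CAPACITY 8u  /* power of two, so indices wrap with a mask */

typedef struct {
    uint32_t timestamp;    /* e.g. a hardware cycle counter value */
    uint16_t event_id;     /* what happened (task switch, ISR entry, ...) */
    uint16_t arg;          /* small event-specific payload */
} trace_record_t;

typedef struct {
    trace_record_t buf[TRACE_CAPACITY];
    uint32_t head;         /* total number of records ever written */
} trace_t;

void trace_init(trace_t *t) {
    t->head = 0;
}

/* Store one event; when the buffer is full, the oldest record is overwritten. */
void trace_record(trace_t *t, uint32_t timestamp, uint16_t event_id, uint16_t arg) {
    trace_record_t *r = &t->buf[t->head & (TRACE_CAPACITY - 1u)];
    r->timestamp = timestamp;
    r->event_id = event_id;
    r->arg = arg;
    t->head++;
}

/* Return the i-th oldest record still held (0 = oldest), or NULL if none. */
const trace_record_t *trace_get(const trace_t *t, uint32_t i) {
    uint32_t held = t->head < TRACE_CAPACITY ? t->head : TRACE_CAPACITY;
    if (i >= held) {
        return NULL;
    }
    uint32_t first = t->head - held;
    return &t->buf[(first + i) & (TRACE_CAPACITY - 1u)];
}
```

Recording an event costs a handful of memory writes, so the target spends far less time per event than with text output; the price is that a tool on the host has to decode the binary records afterwards.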

To get such exact timing from the system, the device hardware itself usually provides some kind of timestamping (e.g. using a cycle count register, see Cycle Counting on ARM Cortex-M with DWT).
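The cycle counter mentioned above is a free-running 32-bit register, so two details matter when turning its values into time: the subtraction has to survive a counter wrap-around, and cycles have to be scaled by the core clock. The C sketch below does only that arithmetic, so it runs anywhere. The register names in the comment are the usual ones from the classic CMSIS-Core headers for Cortex-M3/M4/M7 (Cortex-M0/M0+ cores do not have this counter, and some devices need extra unlock steps); the function names are hypothetical.

```c
#include <stdint.h>

/* On the target, with classic CMSIS-Core headers, the counter is typically
   enabled and read like this (not compiled here):
     CoreDebug->DEMCR |= CoreDebug_DEMCR_TRCENA_Msk;
     DWT->CYCCNT = 0;
     DWT->CTRL |= DWT_CTRL_CYCCNTENA_Msk;
     uint32_t now = DWT->CYCCNT;                                        */

/* Cycles between two counter reads. Unsigned 32-bit subtraction is
   correct across at most one wrap-around of the counter. */
uint32_t cycles_elapsed(uint32_t start, uint32_t end) {
    return end - start;
}

/* Convert a cycle count to nanoseconds for a core clock in Hz (truncates). */
uint64_t cycles_to_ns(uint32_t cycles, uint32_t core_hz) {
    return ((uint64_t)cycles * 1000000000u) / core_hz;
}
```

At 120 MHz, `cycles_to_ns(120, 120000000u)` gives 1000 ns. The counter wraps after 2^32 cycles (about 35.8 seconds at 120 MHz), so longer intervals cannot be measured with a single pair of reads.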

The other challenge is to get the data off the system in a timely manner. For this, typically, dedicated hardware and probes using JTAG/SWD are necessary.

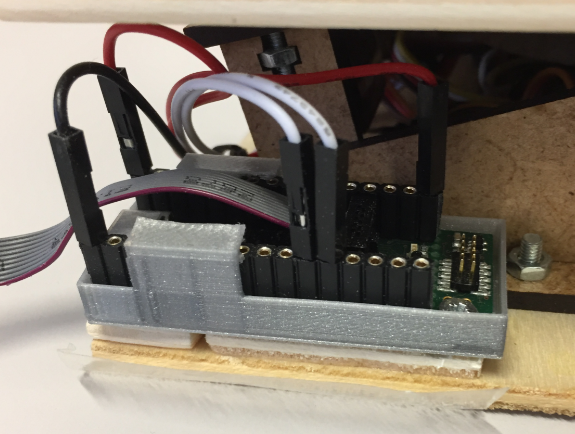

With a hardware JTAG/SWD debug probe, the debugger can take over the device under debug with no, or minimal and well-defined, impact on the timeliness of the target.

Using such a JTAG/SWD debug probe, I can use the debugger to control the device under debug in ‘stop-mode’.

If I need to record and inspect the target while it is running, then I need things like ‘live view’, which allows me to see variables and expressions changing:

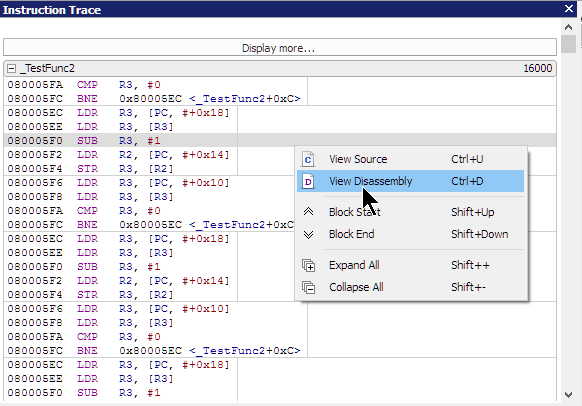

And to solve the hard problems and get the most visibility, I need full instruction and data access tracing using dedicated trace hardware and tools.

In essence, ‘real-time debugging’ means being able to debug and inspect a device that is supposed to be real-time. For this, I need to closely map the device behavior and execution to ‘real-world time’. For most systems, ‘printf()’-style debugging will not be enough, as it is too slow and too intrusive: it affects the system behavior too much. If I have to map device timing to micro- and nanoseconds, I need something different.

For most debugging, I need deep control over the device under debug, which usually requires the JTAG/SWD/SWO pins for stop-mode debugging. The next level is instruction and data tracing: this gives the most and best visibility into the system and its timing, but it requires dedicated tracing hardware and tools.

I use a combination of different debugging technologies: a mix of SWD/JTAG stop-mode debugging and software/hardware tracing.

What is your experience, and what works best for you?

Happy real-timing!

Published at DZone with permission of Erich Styger, DZone MVB. See the original article here.

