Accelerating Connection Handshakes in Trusted Network Environments

Follow an overview of methods like TCP FastOpen, TLSv1.3, 0-RTT, and HTTP/3 to reduce handshake delays and improve server response times in secure environments.

In this article, I aim to discuss modern approaches used to reduce the time required to establish a data transmission channel between two nodes. I will be examining both plain TCP and TLS-over-TCP.

What Is a Handshake?

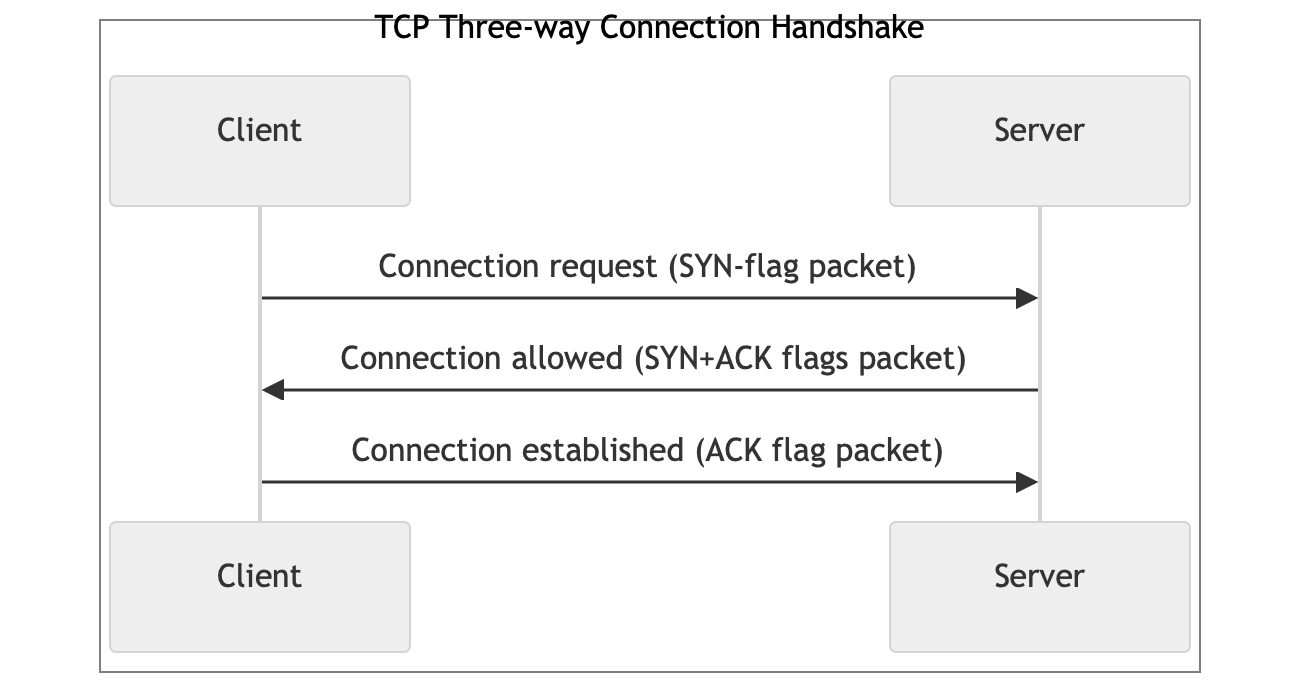

First, let’s define what a handshake is, and for that, an illustration of the TCP handshake serves very well:

The handshake is the process of exchanging messages between two nodes (such as a client and a server) to establish a connection and negotiate communication parameters before data transmission begins. The purpose of a handshake is to:

- Confirm the readiness of both nodes to communicate.

- Agree on connection parameters: protocol versions, encryption methods, authentication, and other technical details.

- Ensure the security and reliability of the connection before transmitting sensitive or important data.

The handshake is a critically important step in network interaction because it sets the foundation for subsequent communication, guaranteeing that both nodes "understand" each other and can securely exchange information.

Thus, no data can be exchanged between the communicating parties until the handshake is done.

Why Is Optimizing the Handshake Time Important?

Even though a handshake looks like a short and simple procedure, it can take a long time over long distances. The maximum distance between any two points on the Earth's surface is about 20,000 km, which is half the Earth's circumference. Given the speed of light (~300,000 km/s), the RTT (round-trip time) between those two points should be no more than about 134 ms. Unfortunately, that is not how global networks work. Imagine we have a client in the Netherlands and a server in Australia. In practice, the RTT between them will be around 250 ms because the network will send your packets first to the US, then to Singapore, and only after that deliver them to Australia. The channels are also usually quite loaded, which can delay the packets even more. It means that a basic TCP handshake could easily take, let's say, 300 ms. And this is probably more than the server will spend generating an answer.
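The arithmetic behind that bound can be sketched in a few lines of Python. The fiber speed used here is my own assumption (light travels at roughly two thirds of its vacuum speed in optical fiber); it is not a figure from the measurements in this article.

```python
# Back-of-the-envelope RTT bounds for the two farthest points on Earth.
MAX_DISTANCE_KM = 20_000        # half of the Earth's circumference
SPEED_OF_LIGHT_KM_S = 300_000   # in a vacuum, rounded
FIBER_SPEED_KM_S = 200_000      # assumption: roughly 2/3 of c in optical fiber


def rtt_ms(distance_km: float, speed_km_s: float) -> float:
    """Round-trip time in milliseconds over a straight path of the given length."""
    return 2 * distance_km / speed_km_s * 1000


print(f"vacuum: {rtt_ms(MAX_DISTANCE_KM, SPEED_OF_LIGHT_KM_S):.1f} ms")
print(f"fiber:  {rtt_ms(MAX_DISTANCE_KM, FIBER_SPEED_KM_S):.1f} ms")
```

Even this idealized estimate ignores routing detours, queuing, and processing delays, which is why real-world numbers end up noticeably higher.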

To illustrate all that, let me start a simple HTTP server somewhere in Sydney. The client will be an Apache Benchmark utility to generate a lot of sequential requests and to see the resulting timings.

$ ab -n 1000 -c 1 http://localhost/

…

Concurrency Level: 1

Time taken for tests: 0.087 seconds

Complete requests: 1000

Failed requests: 0

Total transferred: 205000 bytes

Requests per second: 11506.16 [#/sec] (mean)

Time per request: 0.087 [ms] (mean)

As we can see, the service is blazingly fast and serves 11.5K RPS (requests per second). Now let’s move the client to Amsterdam and recheck the same service.

$ ab -n 1000 -c 1 http://service-in-sydney/

…

Concurrency Level: 1

Time taken for tests: 559.284 seconds

Complete requests: 1000

Failed requests: 0

Total transferred: 205000 bytes

Requests per second: 1.79 [#/sec] (mean)

Time per request: 559.284 [ms] (mean)

The service is roughly 6.4K times slower! And this is all due to the latency between the nodes.
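One rough way to read these numbers: ab was run without keep-alive, so every request opens a new connection and pays one round trip for the TCP handshake plus one for the HTTP exchange. The sketch below assumes an RTT of about 280 ms, which is my estimate from the measurement rather than a value ab reports.

```python
def requests_per_second(rtt_s: float, round_trips: int) -> float:
    """Throughput of strictly sequential requests that each cost `round_trips` RTTs."""
    return 1.0 / (rtt_s * round_trips)


ASSUMED_RTT_S = 0.280  # assumption: approximate Amsterdam <-> Sydney RTT
TCP_HANDSHAKE = 1      # SYN / SYN-ACK
HTTP_EXCHANGE = 1      # request / response

rps = requests_per_second(ASSUMED_RTT_S, TCP_HANDSHAKE + HTTP_EXCHANGE)
print(f"{rps:.2f} requests per second")

# Ratio of the two throughputs measured by ab (local vs. remote client).
slowdown = 11506.16 / 1.79
print(f"slowdown: about {slowdown:.0f}x")
```

Under these assumptions the model lands very close to the measured throughput, which suggests that network latency, not the server, dominates the result.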

Of course, if your servers communicate a lot, they’ll probably have a pool of established connections and the problem won’t be that awful, but even in this situation, you have to reconnect from time to time. Also, no one keeps connections open to every partner service it uses, just the major ones. So, it can show severe delays from time to time.

Another approach is to multiplex multiple requests over a single HTTP connection. That can also work well, but in HTTP/2, for instance, all streams share one TCP connection, so a single lost packet can stall all the other streams at once. So, it is not always the best way to go.

Trusted Environments

We’ll talk about trusted environments only, and there is a reason for this. For each of the methods we’ll discuss, I’ll mention its security or privacy issues, if any. To mitigate them, additional measures should be taken, which could be very complicated. That is why I’ll stick to an environment where no one will send any malicious packets to the services’ endpoints. Consider a VPN or at least a network with good firewall protection. Local area networks are also fine, but handshake time optimizations are usually not that beneficial there.

TCP Fast Open

First, we will discuss the base-level optimization, suitable for every TCP-based higher-level protocol: TCP Fast Open.

History

In 2011, a group of engineers at Google, as part of efforts to improve network performance, published a project proposing an extension to the TCP protocol that allowed clients to add data to the SYN packet of the three-way handshake. In other words, for small requests, clients could include them directly in the very first TCP packet sent to the server and start receiving data one RTT faster. To understand how important this is, remember that companies like Google have numerous data centers on most continents, and the software services operating there need to communicate actively with each other. It is not always possible to stay within a single data center, and network packets often have to cross oceans.

The technology was first used within Google itself, and in the same year (2011), the first patches were submitted to the Linux kernel code. After checks and refinements, TCP Fast Open was included in the 3.6 release of the kernel in 2012. Subsequently, over the next few years, the extension was actively tested under real conditions and improved in terms of security and compatibility with devices based on other operating systems. Finally, in December 2014, TFO was standardized and published as RFC 7413.

Today, almost all the biggest IT companies use TFO in their data centers. You can find articles about it from Google, Meta, Cloudflare, Netflix, Amazon, Microsoft, etc. It means the technology works, is useful to have, and is quite reliable.

How It Works

There are a lot of good articles describing how TFO works. My favorite is this one, which clarifies in much detail all the possible scenarios. I will describe it very briefly.

First Connection: Explanation of Steps

- Client sends SYN (TFO cookie request):

  - The client initiates a TCP connection by sending a SYN packet with a TFO option indicating a cookie request.
  - No application data is sent at this stage because the client does not have the TFO cookie yet.

- Server responds with SYN-ACK (including TFO cookie):

  - The server replies with a SYN-ACK packet, including a freshly generated TFO cookie in the TFO option.
  - The cookie is a small piece of data used to validate future TFO connections from this client.

- Client sends ACK:

  - The client acknowledges the receipt of the SYN-ACK by sending an ACK packet, completing the TCP three-way handshake.
  - At this point, the TCP connection is established.

- Now that the connection is established, the client sends the HTTP request over the TCP connection.

- The server processes the HTTP request and sends back the HTTP response to the client.

Later Connections: Explanation of Steps

- The client initiates a new TCP connection by sending a SYN packet. The SYN packet includes:

  - The TFO cookie obtained from the previous connection
  - HTTP request data (e.g., a GET request) attached to the SYN packet; the payload size is limited by the MSS and usually ranges from 500 bytes to 1 KB

- Upon receiving the SYN packet, the server:

  - Validates the TFO cookie to ensure it is legitimate
  - Accepts the early data (HTTP request) included in the SYN packet

- The server sends a SYN-ACK packet back to the client.

  - The server may send early HTTP response data if it processes the request quickly enough. Alternatively, the server may send the HTTP response after the handshake is complete.

- The client sends an ACK, completing the handshake.
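At the socket level, the steps above can be sketched roughly as follows. This is a minimal illustration rather than production code: it assumes a Linux-like platform where Python exposes `socket.TCP_FASTOPEN` and `socket.MSG_FASTOPEN`, and it falls back to an ordinary connection where they are missing or refused. The helper names (`open_tfo_listener`, `send_request_tfo`, `loopback_demo`) are mine and purely illustrative.

```python
import socket
import threading


def open_tfo_listener(host: str, port: int, queue_len: int = 16) -> socket.socket:
    """Listening socket that asks the kernel to accept data carried in a SYN."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))
    srv.listen()
    if hasattr(socket, "TCP_FASTOPEN"):
        try:
            # The value is the maximum number of pending Fast Open requests.
            srv.setsockopt(socket.IPPROTO_TCP, socket.TCP_FASTOPEN, queue_len)
        except OSError:
            pass  # no TFO here: keep working as an ordinary listener
    return srv


def send_request_tfo(host: str, port: int, request: bytes) -> socket.socket:
    """Connect and send `request`; with a cached cookie the data rides in the SYN."""
    fastopen_flag = getattr(socket, "MSG_FASTOPEN", None)
    if fastopen_flag is not None:
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        try:
            # On an unconnected TCP socket, sendto() connects and sends in one call.
            # The socket is left blocking so the call returns only once connected.
            sent = sock.sendto(request, fastopen_flag, (host, port))
            if sent < len(request):
                sock.sendall(request[sent:])
            return sock
        except OSError:
            sock.close()  # TFO refused by the OS: use the classic path below
    sock = socket.create_connection((host, port))
    sock.sendall(request)
    return sock


def loopback_demo() -> bytes:
    """Send one request to a throwaway echo server on 127.0.0.1."""
    srv = open_tfo_listener("127.0.0.1", 0)  # port 0: let the OS pick a free port
    port = srv.getsockname()[1]

    def serve_once() -> None:
        conn, _ = srv.accept()
        with conn:
            conn.sendall(b"echo:" + conn.recv(1024))

    worker = threading.Thread(target=serve_once, daemon=True)
    worker.start()
    client = send_request_tfo("127.0.0.1", port, b"ping")
    client.settimeout(5)
    try:
        return client.recv(1024)
    finally:
        client.close()
        worker.join(5)
        srv.close()
```

Note that the application code looks the same for the first and the later connections: the kernel decides whether a cookie is available and whether the payload can travel in the SYN.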

The Experiment

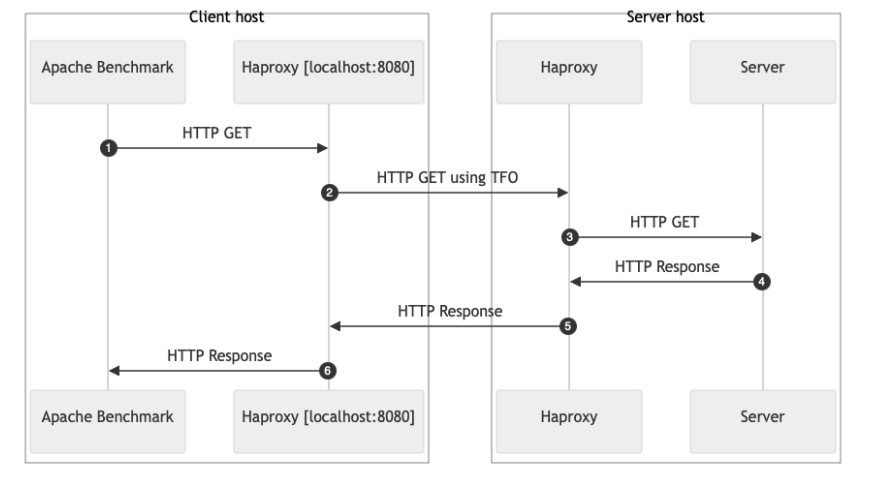

As you can see, all the later connections can save 1 RTT on getting the data. Let’s test it with our Amsterdam/Sydney client/server lab. So as not to modify either the client or the server, I’ll put a reverse proxy in front of each of them:

System-Wide Configuration

Before doing anything, you need to ensure TFO is enabled on your server and the client. To do that on Linux, take a look at the net.ipv4.tcp_fastopen bitmask-parameter. Usually, it will be set by default to one or three.

# enabling client and server support

$ sudo sysctl -w net.ipv4.tcp_fastopen=3

net.ipv4.tcp_fastopen = 3

According to the kernel documentation, the bits of this parameter are as follows:

| Bit | Side | Effect |
|---|---|---|
| 0x1 | client | Enables sending data in the opening SYN on the client. |
| 0x2 | server | Enables the server support, i.e., allowing data in a SYN packet to be accepted and passed to the application before the 3-way handshake finishes. |
| 0x4 | client | Send data in the opening SYN regardless of cookie availability and without a cookie option. |
| 0x200 | server | Accept data-in-SYN w/o any cookie option present. |
| 0x400 | server | Enable all listeners to support Fast Open by default without explicit TCP_FASTOPEN socket option. |

Note the bits 0x4 and 0x200. They allow bypassing the need for the client to have a cookie altogether. This enables accelerating even the first TCP handshake with a previously unknown client, which can be acceptable and useful in a trusted environment.

Option 0x400 allows forcing the server code, even if it knows nothing about TFO, to accept data received in the SYN packet. This is generally not safe but may be necessary if modifying the server code is not feasible.
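To see which of these bits are active on a given host, the value can be decoded programmatically. The following is a small sketch: the descriptions paraphrase the table above, and the helper names are my own.

```python
from pathlib import Path
from typing import List, Optional

# Bit descriptions paraphrased from the kernel documentation table above.
TFO_BITS = {
    0x1: "client: send data in the opening SYN",
    0x2: "server: accept data in SYN before the handshake finishes",
    0x4: "client: send data in SYN even without a cookie",
    0x200: "server: accept data in SYN without a cookie",
    0x400: "server: enable Fast Open on all listeners by default",
}


def decode_tcp_fastopen(value: int) -> List[str]:
    """Return the descriptions of all bits set in a tcp_fastopen value."""
    return [desc for bit, desc in TFO_BITS.items() if value & bit]


def read_tcp_fastopen(path: str = "/proc/sys/net/ipv4/tcp_fastopen") -> Optional[int]:
    """Read the current setting on Linux; returns None where it is unavailable."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None


current = read_tcp_fastopen()
if current is None:
    print("tcp_fastopen is not available on this system")
else:
    print(f"net.ipv4.tcp_fastopen = {current}")
    for line in decode_tcp_fastopen(current):
        print(" -", line)
```

For example, the value 3 used in this article decodes to the first two entries: basic client and server support, both still requiring a cookie.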

Reverse Proxy Configuration

After configuring the system, let’s install and configure a reverse proxy. I will use HAProxy for that, but you can choose any.

HAProxy configuration on the server host:

frontend fe_main

bind :8080 tfo # TFO enabled on listening socket

default_backend be_main

backend be_main

mode http

server server1 127.0.0.1:8081 # No TFO towards the server

HAProxy configuration on the client’s host:

frontend fe_main

bind 127.0.0.1:8080 # No TFO on listening socket

default_backend be_main

backend be_main

mode http

retry-on all-retryable-errors

http-request disable-l7-retry if METH_POST

server server1 server-in-sydney:8080 tfo # TFO enabled towards the proxy on the server

And let’s test again:

$ ab -n 1000 -c 1 http://localhost:8080/

…

Concurrency Level: 1

Time taken for tests: 280.452 seconds

Complete requests: 1000

Failed requests: 0

Total transferred: 205000 bytes

Requests per second: 3.57 [#/sec] (mean)

Time per request: 280.452 [ms] (mean)

As you can see, we got a result twice as good as before. And except for the URL, we had nothing to change in the client or the server themselves. The reverse proxy handled all the TFO-related work, and that is a great way to go.

Downsides of TFO

Now the sad part about TFO:

The first and biggest issue with TFO is that it is vulnerable to so-called “replay” attacks. Imagine an intruder who sniffed a client's handshake and then started sending malicious requests on behalf of that client. Yes, the attacker won’t receive the responses, but they can break something, mount an amplification attack against the client, or just exhaust the server's resources.

Another big issue is privacy. On the web, clients get cookies, and we all know how those cookies can be used to track clients. The same applies to TFO, unfortunately. As soon as your device gets a cookie from the server, it will use it for many hours for all outgoing connections to that server, even if there is no early data to send. The cookie thus becomes a unique ID of your machine. A good paper with the investigation of these security flaws can be found here.

Also, even though the technology is quite mature, a lot of network devices can still block or drop SYN packets with a payload, which causes issues.

That said, TFO is dangerous to use on the open Internet and is more suitable for safer internal networks.

TLS

TLS (Transport Layer Security) is a cryptographic protocol designed to provide end-to-end security for data transmitted over a network. It achieves this by encrypting the data between two communicating applications so eavesdroppers can't intercept or tamper with the information. During the TLS handshake, the client and server agree on encryption algorithms and exchange keys, setting up a secure channel for data transfer.

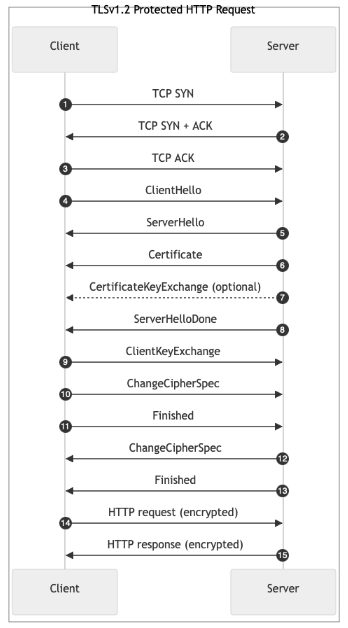

And, yes, there's the "handshake" word again. First, let’s take a look at the sequence diagram of a TLS 1.2 connection:

Protected HTTP Request: Explanation of Steps

- TCP SYN: The client initiates a TCP connection by sending a SYN packet to the server.

- TCP SYN-ACK: The server responds to the client's SYN with a SYN-ACK packet to acknowledge the client's SYN and indicate readiness to establish a connection.

- TCP ACK: The client acknowledges the server's SYN-ACK by sending an ACK packet to complete the TCP three-way handshake, establishing the TCP connection.

- ClientHello: The client initiates the TLS handshake by sending a ClientHello message over the established TCP connection. It contains the following:

  - Protocol version: Highest TLS version supported (e.g., TLS 1.2)
  - Client random: A random value used in key generation
  - Session ID: For session resumption (may be empty)
  - Cipher suites: List of supported encryption algorithms
  - Compression methods: Usually null (no compression)
  - Extensions: Optional features (e.g., Server Name Indication)

- ServerHello: The server responds with a ServerHello message which contains:

  - Protocol version: Selected TLS version (must be ≤ client's version)
  - Server random: Another random value for key generation
  - Session ID: Chosen session ID (matches client's if resuming a session)
  - Cipher suite: Selected encryption algorithm from the client's list
  - Compression method: Selected compression method
  - Extensions: Optional features agreed upon

- Certificate: The server sends its X.509 certificate, which contains the server's public key and identity information, to authenticate itself to the client. This could be a chain of certificates.

- ServerKeyExchange (optional): Sent if additional key exchange parameters are needed (e.g., for Diffie-Hellman key exchange).

- ServerHelloDone: Indicates that the server has finished sending its initial handshake messages and awaits the client's response.

- ClientKeyExchange: The client sends key exchange information to the server to allow both client and server to compute the shared premaster secret.

- ChangeCipherSpec: The client signals that it will start using the newly negotiated encryption and keys.

- Finished: The client sends a Finished message encrypted with the new cipher suite. It contains a hash of all previous handshake messages. It allows the server to verify that the handshake integrity is intact and that the client has the correct keys.

- ChangeCipherSpec: The server signals that it will start using the negotiated encryption and keys.

- Finished: The server sends a Finished message encrypted with the new cipher suite. It contains a hash of all previous handshake messages and allows the client to verify that the server has successfully completed the handshake and that the keys match.

- HTTP Request: Now that the connection is established, the client sends the HTTP request over the encrypted TLS connection.

- HTTP Response: The server processes the HTTP request and sends back the encrypted HTTP response to the client.

A big one, isn’t it? If you analyze the diagram, you will find that at least 4 RTTs are required to establish a connection and get a response to the client's request. Let’s check it in our test lab with the well-known curl utility.

The Experiment

To get various useful timings from curl, I usually create the following file somewhere in the filesystem of a server:

$ cat /var/tmp/curl-format.txt

\n

time_namelookup: %{time_namelookup}\n

time_connect: %{time_connect}\n

time_appconnect: %{time_appconnect}\n

time_pretransfer: %{time_pretransfer}\n

time_redirect: %{time_redirect}\n

time_starttransfer: %{time_starttransfer}\n

----------\n

time_total: %{time_total}\n

\n

size_request: %{size_request}\n

size_upload: %{size_upload}\n

size_header: %{size_header}\n

size_download: %{size_download}\n

speed_upload: %{speed_upload}\n

speed_download: %{speed_download}\n

\n

Now let’s use it (the -w parameter) and make a call using TLS 1.2 from Amsterdam to Sydney:

$ curl -w "@/var/tmp/curl-format.txt" https://server-in-sydney/ --http1.1 --tlsv1.2 --tls-max 1.2 -o /dev/null

time_namelookup: 0.001645

time_connect: 0.287356

time_appconnect: 0.867459

time_pretransfer: 0.867599

time_redirect: 0.000000

time_starttransfer: 1.154564

----------

time_total: 1.154633

size_request: 86

size_upload: 0

size_header: 181

size_download: 33

speed_upload: 0

speed_download: 28

More than a second for a single request! Remember that the service is capable of serving 11.5K requests per second. Let’s try to do something with it.
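To see where that second goes, the curl timers can be split into phases. The sketch below is my own reading of the output above: it treats the TCP connect time as an estimate of one RTT and expresses the other phases in those units.

```python
from typing import Dict


def handshake_breakdown(t: Dict[str, float]) -> Dict[str, float]:
    """Split cumulative curl timers (seconds) into per-phase durations."""
    return {
        "tcp_handshake": t["time_connect"] - t["time_namelookup"],
        "tls_handshake": t["time_appconnect"] - t["time_connect"],
        "request_response": t["time_starttransfer"] - t["time_pretransfer"],
    }


# Values copied from the TLS 1.2 run above.
tls12 = {
    "time_namelookup": 0.001645,
    "time_connect": 0.287356,
    "time_appconnect": 0.867459,
    "time_pretransfer": 0.867599,
    "time_starttransfer": 1.154564,
}

phases = handshake_breakdown(tls12)
rtt = phases["tcp_handshake"]  # assumption: TCP connect time is about one RTT
for name, seconds in phases.items():
    print(f"{name}: {seconds:.3f} s (~{seconds / rtt:.1f} RTT)")
```

Read this way, the TLS 1.2 handshake alone accounts for about two of the four round trips.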

TLSv1.3

This is the newest stable version of the TLS protocol, defined in “RFC 8446 - The Transport Layer Security (TLS) Protocol Version 1.3.” And you know what? It is great and safe to use everywhere you can set it up. The only downside is that not every client supports it yet, but servers can be configured to support both TLS 1.2 and 1.3, so it is not a big deal.

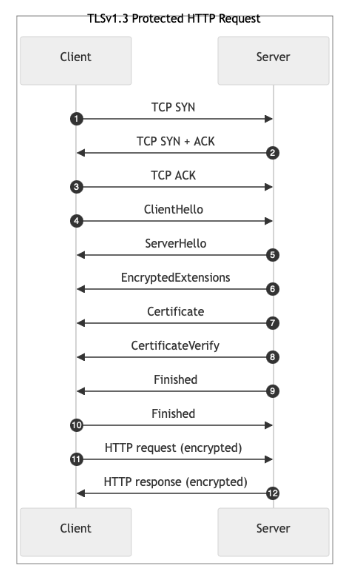

Now, let’s take a look at what is so great in TLSv1.3 for our handshake optimization. For that, here's a new sequence diagram.

Protected HTTP Request: Explanation of Steps

- TCP SYN: The client initiates a TCP connection by sending a SYN packet to the server.

- TCP SYN-ACK: The server responds to the client's SYN with a SYN-ACK packet to acknowledge the client's SYN and indicate readiness to establish a connection.

- TCP ACK: The client acknowledges the server's SYN-ACK by sending an ACK packet to complete the TCP three-way handshake, establishing the TCP connection.

- ClientHello: The client initiates the TLS handshake by sending a ClientHello message. It proposes security parameters and provides key material for key exchange. The message contains:

  - Protocol version: Indicates TLS 1.3 (signaled through the supported versions extension; the legacy version field itself stays at TLS 1.2 for compatibility)
  - Random value: A random number for key generation
  - Cipher suites: List of supported cipher suites
  - Key share extension: Contains the client's ephemeral public key for key exchange (e.g., ECDHE)
  - Supported versions extension: Indicates support for TLS 1.3
  - Other extensions: May include Server Name Indication (SNI), signature algorithms, etc.

- ServerHello: The server responds with a ServerHello message to agree on security parameters and complete the key exchange. Its contents include:

  - Protocol version: Confirms TLS 1.3 (again through the supported versions extension)
  - Random value: Another random number for key generation
  - Cipher suite: Selected cipher suite from the client's list
  - Key share extension: Contains the server's ephemeral public key
  - Supported versions extension: Confirms use of TLS 1.3

- EncryptedExtensions: The server sends EncryptedExtensions containing extensions that require confidentiality, like server parameters.

- Certificate: The server provides its certificate and any intermediate certificates to authenticate itself to the client.

- CertificateVerify: The server proves possession of the private key corresponding to its certificate by signing all the previous handshake messages.

- Finished: The server signals the completion of its handshake messages with an HMAC over the handshake messages, ensuring integrity.

- Finished: The client responds with its own Finished message with an HMAC over the handshake messages, using keys derived from the shared secret.

- HTTP Request: Now that the connection is established, the client sends the HTTP request over the encrypted TLS connection.

- HTTP Response: The server processes the HTTP request and sends back the encrypted HTTP response to the client.

As you can see, TLSv1.3 reduces the handshake to one round trip (1-RTT), compared with two in the previous version of the protocol. It also allows only ephemeral key exchanges and simplified cipher suites. While this is very good for security, it is not our main concern here. For us, it means less data has to be sent between the parties.
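If your client or server is written in Python, the protocol version can be pinned with the standard `ssl` module. This is a minimal sketch, assuming a Python build linked against an OpenSSL version with TLS 1.3 support; the function names are mine, and certificate loading for the server side is left out.

```python
import socket
import ssl
from typing import Optional


def tls13_client_context() -> ssl.SSLContext:
    """Client context that refuses anything older than TLS 1.3."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def dual_version_server_context() -> ssl.SSLContext:
    """Server context that accepts both TLS 1.2 and TLS 1.3 clients.

    A real server would also call ctx.load_cert_chain(...) with its own files.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def negotiated_version(host: str, port: int = 443, timeout: float = 10.0) -> Optional[str]:
    """Connect to `host` and report the negotiated protocol, e.g. 'TLSv1.3'."""
    ctx = tls13_client_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version()
```

Calling `negotiated_version()` against a server that only speaks TLS 1.2 raises an `ssl.SSLError`, which makes a missing upgrade easy to spot.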

The Experiment

Let’s try it out with our curl command:

$ curl -w "@/var/tmp/curl-format.txt" https://server-in-sydney/ --http1.1 --tlsv1.3 -o /dev/null

time_namelookup: 0.003245

time_connect: 0.265230

time_appconnect: 0.533588

time_pretransfer: 0.533673

time_redirect: 0.000000

time_starttransfer: 0.795738

----------

time_total: 0.795832

size_request: 86

size_upload: 0

size_header: 181

size_download: 33

speed_upload: 0

speed_download: 41

And it is all true: we cut one RTT from the response. Great!

Mixing the Things

We already cut one RTT by just upgrading the TLS protocol version to 1.3. Let’s remember we have TCP Fast Open available. With that, we can send a ClientHello message directly inside a SYN packet. Will it work? Let’s find out. Curl supports an option to enable TCP Fast Open towards the target. The target still has to support TFO.

$ curl -w "@/var/tmp/curl-format.txt" https://server-in-sydney/ --http1.1 --tlsv1.3 -o /dev/null --tcp-fastopen

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 33 0 33 0 0 57 0 --:--:-- --:--:-- --:--:-- 57

time_namelookup: 0.002215

time_connect: 0.002272

time_appconnect: 0.292444

time_pretransfer: 0.292519

time_redirect: 0.000000

time_starttransfer: 0.574951

----------

time_total: 0.575200

size_request: 86

size_upload: 0

size_header: 181

size_download: 33

speed_upload: 0

speed_download: 57

And it does! We have now halved the original TLSv1.2 timings. But let’s look at one more thing to consider.

Zero Round-Trip Time (0-RTT)

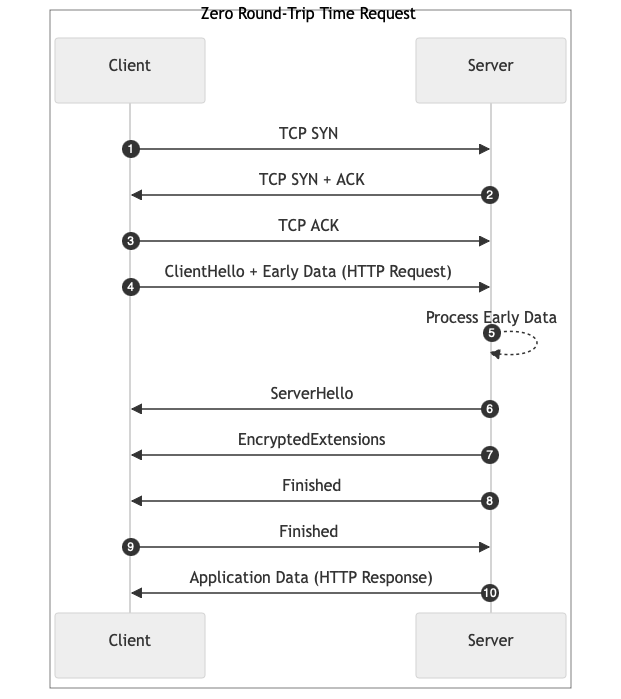

TLS 1.3 does more than streamline the handshake, enhancing both security and performance. It also provides a new feature called Zero Round-Trip Time (or 0-RTT). 0-RTT allows a client to start transmitting data to a server immediately, without waiting for the full TLS handshake to complete. For that, a previous TLS connection to the same server must have existed, and key material from it (a pre-shared key) is reused. Let’s take a look at the sequence diagram:

Zero Round-Trip Time Request: Explanation of Steps

- TCP SYN: The client initiates a TCP connection by sending a SYN packet to the server.

- TCP SYN-ACK: The server responds to the client's SYN with a SYN-ACK packet to acknowledge the client's SYN and indicate readiness to establish a connection.

- TCP ACK: The client acknowledges the server's SYN-ACK by sending an ACK packet to complete the TCP three-way handshake, establishing the TCP connection.

- ClientHello with early data (0-RTT data): The client initiates the handshake and sends application data (which could be an HTTP request) as early data. It contains the following:

  - Protocol version: Indicates support for TLS 1.3
  - Cipher suites: List of supported cipher suites
  - Key share extension: Contains the client's ephemeral public key for key exchange (e.g., ECDHE)
  - Pre-shared key (PSK): Includes a session ticket or PSK obtained from a previous connection
  - Early data indication: Signals the intention to send 0-RTT data
  - Early data (0-RTT data): Application data (e.g., HTTP request) sent immediately, encrypted using keys derived from the PSK

- The server analyzes the early data and can pass it to a backend for processing.

- ServerHello: The server responds with its ServerHello, agreeing on protocol parameters. The response contains the following:

  - Protocol version: Confirms TLS 1.3
  - Cipher suite: Selected cipher suite from the client's list
  - Key share extension: Server's ephemeral public key
  - Pre-shared key extension: Indicates acceptance of the PSK
  - Early data indication (optional): Confirms acceptance or rejection of 0-RTT data

- EncryptedExtensions: The server sends additional handshake parameters securely:

  - ALPN, supported groups, etc.
  - Early data indication (optional): Officially accepts or rejects the early data.

- Finished: The server signals the completion of its handshake messages with an HMAC over the handshake messages to ensure integrity.

- Finished: The client completes the handshake with an HMAC over the handshake messages.

- HTTP Response: The server responds to the request received as early data.

No complete RTT saving here, but the request is being transferred as soon as possible. It gives more time to the server to process it and there are more chances that the response will be sent out right after the handshake completes. That is also a great thing.

Unfortunately, such an approach reduces security: early data is encrypted with keys derived from the reused pre-shared key rather than from a fresh key exchange, so it does not get the forward secrecy that the rest of a TLS 1.3 session has. It also opens a surface for replay attacks. I’d suggest not using this technology in a non-secure network environment unless you carefully implement security measures to compensate for those risks.
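One such measure can be sketched on the application side. The example below assumes that a TLS-terminating proxy in front of the service marks requests that arrived as early data with an `Early-Data: 1` header, as described in RFC 8470, and that the service answers with status 425 (Too Early) when such a request is not safe to replay. The function name and the choice of replay-tolerant methods are my own illustration.

```python
from typing import Dict, Optional

# Assumption: only these methods are considered harmless if replayed.
REPLAY_TOLERANT_METHODS = frozenset({"GET", "HEAD", "OPTIONS"})
TOO_EARLY = 425  # HTTP status defined in RFC 8470


def early_data_status(method: str, headers: Dict[str, str]) -> Optional[int]:
    """Return 425 if the request came as early data and must be retried
    after the handshake completes; return None if it can be processed."""
    lowered = {name.lower(): value.strip() for name, value in headers.items()}
    arrived_early = lowered.get("early-data") == "1"
    if arrived_early and method.upper() not in REPLAY_TOLERANT_METHODS:
        return TOO_EARLY
    return None


print(early_data_status("GET", {"Early-Data": "1"}))
print(early_data_status("POST", {"Early-Data": "1"}))
```

A client that receives 425 is expected to retry the request once the handshake has finished, so the replay-sensitive operation never runs on early data.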

The Experiment That Didn’t Happen

I wasn’t able to run an experiment, as my service responds very quickly and doesn’t benefit from 0-RTT. But if you want to test it yourself, mind that at the time of writing this article, curl didn’t support early data. You can, however, do the testing with the “openssl s_client” utility. This is how it can be done:

- Save the TLS session to disk with the following command:

openssl s_client -connect example.com:443 -tls1_3 -sess_out /tmp/session.pem < /dev/null

- Create a file with the request:

echo -e "GET / HTTP/1.1\r\nHost: example.com\r\n\r\n" > /tmp/request.txt

- Use the saved session to query the server:

openssl s_client -connect example.com:443 -tls1_3 -sess_in /tmp/session.pem -early_data /tmp/request.txt -trace

HTTP/3

The last thing I want to talk about is HTTP/3. First, it doesn’t use TCP and thus requires no TCP handshake. Second, it supports the same early data approach we’ve just seen in 0-RTT. Lastly, all the congestion and retransmission control now lives outside of the kernel and depends entirely on how the server’s and the client’s developers implemented it.

It means that, as far as handshake latency is concerned, you still get 2 RTTs, the same as with TLSv1.3 + TFO, but with a new high-level protocol encapsulated in UDP. But give it a try; maybe it can help.

I will give a brief sequence diagram for HTTP/3, but without deep details, as they are very close to what we’ve seen for TLS1.3 and 0-RTT:

![HTTP/3 request sequence diagram](https://dz2cdn1.dzone.com/storage/temp/17994520-1729539122857.png)

HTTP/3 Request: Explanation of Steps

- Client to server: Initial packet (ClientHello, 0-RTT HTTP request)

- Server: Processes the ClientHello and buffers the 0-RTT HTTP request

- Server to client: Initial packet (ServerHello)

- Server to client: Handshake packets (EncryptedExtensions, Certificate, CertificateVerify, Finished)

- Client to server: Handshake packet (Finished)

- Server: Processes the 0-RTT HTTP request

- Server to client: HTTP response

Conclusion

We took a dive into four available techniques intended to decrease the time spent on handshaking between a client and a server. The handshakes appear at TCP and TLS levels. The common idea for them is to send the data to the server as early as possible. Let’s recap:

- TCP Fast Open saves one RTT by putting the request into the SYN packet. It is not very safe on the open Internet because it is prone to replay attacks, but it is stable and good to use in protected network environments. Be cautious with non-idempotent services; it is better to combine it with TLS.

- TLSv1.3 is a newer version of the TLS protocol. It saves one RTT by reducing the number of messages exchanged during the handshake, is very safe, and is great to use both inside and outside of trusted networks. It can be combined with TFO to save two RTTs instead of one.

- Zero Round-Trip Time (0-RTT) is an extension for TLSv1.3. It allows the client’s request to be sent very early, thus giving the server more time to process it. It comes with some security concerns but is mostly safe to use inside a trusted network perimeter for idempotent services.

- HTTP/3 is the newest version of the HTTP protocol. It uses UDP and thus has no need for a TCP handshake. It can give you a response after two RTTs, similar to TLSv1.3 + TFO.
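The recap can also be expressed as a simple round-trip count. The sketch below compares that naive model with the timings measured in the experiments above; the 280 ms RTT is my assumption for the Amsterdam/Sydney link, and the model ignores server processing time and bandwidth.

```python
ASSUMED_RTT_S = 0.28  # assumption: approximate Amsterdam <-> Sydney RTT

# name: (round trips until the first response byte, measured seconds from above)
SCENARIOS = {
    "HTTP over TCP": (2, 0.559),
    "HTTP over TCP + TFO": (1, 0.280),
    "TLS 1.2": (4, 1.155),
    "TLS 1.3": (3, 0.796),
    "TLS 1.3 + TFO": (2, 0.575),
}


def predicted_seconds(round_trips: int, rtt_s: float = ASSUMED_RTT_S) -> float:
    """Time to first response byte if only round trips mattered."""
    return round_trips * rtt_s


for name, (round_trips, measured) in SCENARIOS.items():
    predicted = predicted_seconds(round_trips)
    print(f"{name:<20} {round_trips} RTT  predicted {predicted:.2f} s  measured {measured:.3f} s")
```

The predictions stay within a few tens of milliseconds of the measurements, which supports the main point: on long-distance links, the number of round trips is what determines the response time.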

I hope this article will be useful to make your service slightly faster. Thanks for reading!
