Big O Notation - Why? When? Where?

Why? Big O notation gives abstraction and the opportunity to generalize. When? Whenever you want to measure how one parameter changes as the volume of another grows. Where? It works best on fairly atomic sections of code that implement one specific piece of business logic.

What Is Big O?

This is a mathematical term that originated in number theory around the turn of the 20th century and was later adopted by computer science as questions of resource optimization arose. Wikipedia defines Big O notation as a mathematical notation that describes the limiting behaviour of a function when the argument tends towards a particular value or infinity. Let's rephrase this definition to make it a little simpler and closer to software development. Any task can be solved with one approach or another, one algorithm or another. To compare the efficiency of heterogeneous solutions, written in different programming languages using different approaches, you can use this notation to analyze how their execution cost grows with the size of the input. Next, let's look at the most frequently encountered classes of time complexity.

O(1) - Constant

Nothing depends on the volume of input data: the operation is not performed on the entire input, only on a fixed part of it that is selected directly, so the running time stays the same however large the input is.

Code example

```javascript
const array = [1, 2, 5, 8, 100]

console.log(array[0]) // 1
```

Direct access to an array element by index is a typical example. Unfortunately, good examples are hard to find among full-fledged algorithms: as you can see, this is a very simple action, and it is debatable whether it can be called an algorithm at all.
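Beyond indexing, a commonly cited near-constant operation is looking up a key in a hash-based collection. The sketch below uses JavaScript's built-in `Map`. Note the hedge: the ECMAScript specification only requires `Map` access to be sublinear on average, so constant time is typical engine behavior rather than a guarantee. The `prices` table and `getPrice` function are hypothetical names used only for illustration.

```javascript
// Hypothetical lookup table; keys and values are illustrative only.
const prices = new Map([
  ['apple', 3],
  ['banana', 1],
  ['cherry', 7],
])

// In typical hash-based engines the cost of get() does not grow with
// the number of entries (average case, not worst case).
// Returns undefined for keys that are not present.
function getPrice(name) {
  return prices.get(name)
}

console.log(getPrice('banana')) // 1
console.log(getPrice('durian')) // undefined
```

Whether the map holds three entries or three million, a single lookup does roughly the same amount of work on average.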

O(n) - Linear

The running time of the algorithm grows predictably and linearly with the amount of input: doubling the input roughly doubles the work.

Code example

```javascript
const array = [1, 2, 3, 4, 5]
let sum = 0

// Visit each element exactly once: n iterations for n elements
for (let i = 0; i < array.length; i++) {
  sum += array[i]
}

console.log(sum) // 15
```

Linear search is a typical algorithm of this class. It is a brute-force search that goes through the elements one by one from one end to the other.
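The linear search described above can be sketched as follows. The function name `linearSearch` and its return convention (index of the first match, or `-1` when absent, mirroring `Array.prototype.indexOf`) are our own choices for this illustration.

```javascript
// Linear search: check each element in turn until the value is found.
// In the worst case (value absent or in the last slot) it touches all n elements.
function linearSearch(array, value) {
  for (let i = 0; i < array.length; i++) {
    if (array[i] === value) return i // index of the first match
  }
  return -1 // not found after scanning the whole array
}

const numbers = [4, 2, 7, 1, 9]
console.log(linearSearch(numbers, 7)) // 2
console.log(linearSearch(numbers, 5)) // -1
```

Unlike binary search, this works on unsorted data, which is the price paid for the O(n) cost.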

O(n^2) - Quadratic

For each element of the input, the algorithm passes through the data again, typically as two nested loops, so the amount of work grows with the square of the input size.

Code example

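```javascript
const matrix = [
  [1, 2, 3],
  [1, 2, 3],
  [1, 2, 3],
]
let sum = 0

// Outer loop over n rows, inner loop over n columns: n * n iterations
for (let i = 0; i < matrix.length; i++) {
  for (let j = 0; j < matrix[i].length; j++) {
    sum += matrix[i][j]
  }
}

console.log(sum) // 18
```

Here n is the side length of the square matrix, so the innermost statement runs n * n = n^2 times. Among algorithms, many simple sorts have this complexity, for example, Bubble sort and Insertion sort.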
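As an illustration of one of the quadratic sorts mentioned above, here is a minimal Insertion sort sketch. It sorts a copy so the caller's array is left untouched; that choice and the name `insertionSort` are ours, not part of any library.

```javascript
// Insertion sort: grow a sorted prefix one element at a time.
// For each element, shift larger elements of the prefix one slot right.
// Worst case (reverse-sorted input) does about n * (n - 1) / 2 shifts: O(n^2).
function insertionSort(input) {
  const array = [...input] // sort a copy, leave the caller's array intact
  for (let i = 1; i < array.length; i++) {
    const current = array[i]
    let j = i - 1
    // Inner loop walks back through the sorted prefix
    while (j >= 0 && array[j] > current) {
      array[j + 1] = array[j]
      j--
    }
    array[j + 1] = current
  }
  return array
}

console.log(insertionSort([5, 2, 4, 6, 1, 3]).join(', ')) // 1, 2, 3, 4, 5, 6
```

The outer loop and the inner `while` loop together form the nested-loop shape described above; on already-sorted input the inner loop exits immediately, so the best case is linear.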

O(log n) - Logarithmic

The main feature by which this complexity can be identified is that after each iteration, the remaining sample is reduced by about half.

Code example:

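```javascript
// Binary search: returns true if value occurs in the sorted array
function isContains(array, value) {
  let left = 0
  let right = array.length - 1

  while (left <= right) {
    const mid = Math.floor((left + right) / 2)
    // Compare the middle element, then discard the half that cannot contain the value
    if (array[mid] === value) return true
    else if (array[mid] < value) left = mid + 1
    else right = mid - 1
  }

  return false
}

const array = [1, 2, 5, 8, 100] // must be sorted in ascending order
console.log(isContains(array, 5)) // true
console.log(isContains(array, 9)) // false
```

This class includes more advanced search algorithms such as Binary search, which is exactly what the function above implements. Note that it only works on sorted input.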
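To see the halving in action, the sketch below instruments the same binary search with an iteration counter. `countIterations` and `range` are hypothetical helpers introduced only for this illustration. Searching for a value larger than every element is a worst case, because the search keeps halving the range until it is empty.

```javascript
// Same binary search as above, but returns how many loop iterations ran.
function countIterations(array, value) {
  let left = 0
  let right = array.length - 1
  let iterations = 0
  while (left <= right) {
    iterations++
    const mid = Math.floor((left + right) / 2)
    if (array[mid] === value) break
    else if (array[mid] < value) left = mid + 1
    else right = mid - 1
  }
  return iterations
}

// Builds the sorted array [0, 1, ..., n - 1]
const range = (n) => Array.from({ length: n }, (_, i) => i)

// Search for n, which is larger than every element (worst case)
console.log(countIterations(range(1000), 1000)) // 10
console.log(countIterations(range(1000000), 1000000)) // 20
```

A thousandfold increase in input size only doubles the number of iterations, which is the signature of O(log n).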

O(n log n) - Linearithmic

This complexity is often compound: it can often, but not always, be decomposed into two nested loops, one with O(n) and the other with O(log n) complexity. Some algorithms of this type, such as Quick sort, can degrade to O(n^2) in the worst case.
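To illustrate the degradation mentioned above, here is a minimal Quick sort sketch that always picks the first element as the pivot (a deliberately naive choice, and an assumption on our part) and counts comparisons. On already-sorted input every partition is maximally unbalanced, so the count becomes n(n - 1) / 2. The name `quickSortCounting` is hypothetical.

```javascript
// Naive Quick sort with the first element as pivot (illustrative choice).
// Returns the sorted array and the number of element-to-pivot comparisons.
function quickSortCounting(input) {
  let comparisons = 0

  function sort(array) {
    if (array.length <= 1) return array
    const [pivot, ...rest] = array
    const smaller = []
    const larger = []
    for (const x of rest) {
      comparisons++ // one comparison against the pivot per element
      if (x < pivot) smaller.push(x)
      else larger.push(x)
    }
    return [...sort(smaller), pivot, ...sort(larger)]
  }

  return { sorted: sort(input), comparisons }
}

const size = 1000
const sortedInput = Array.from({ length: size }, (_, i) => i)
// Sorted input: each call peels off a single element, giving
// (n - 1) + (n - 2) + ... + 1 = n * (n - 1) / 2 comparisons.
console.log(quickSortCounting(sortedInput).comparisons) // 499500
```

With a random pivot, or on randomly ordered input, the expected number of comparisons is O(n log n); the quadratic behavior comes from an unlucky pivot choice meeting unlucky input.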

Code example:

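```javascript
const matrix = [
  [1, 2, 3],
  [1, 2, 3],
  [1, 2, 3],
]
let sum = 0

// Outer loop: n rows, O(n)
for (let i = 0; i < matrix.length; i++) {
  // Inner loop: j doubles each time (1, 2, 4, ...), so about log2(n) steps.
  // It starts at 1 because 0 * 2 would never advance.
  for (let j = 1; j < matrix[i].length; j *= 2) {
    sum += matrix[i][j]
  }
}

console.log(sum) // 15
```

This category includes advanced sorting algorithms such as Quick sort and Merge sort.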
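Merge sort, one of the algorithms named above, keeps O(n log n) even in the worst case. The following is a minimal, non-optimized sketch; the helper names `mergeSort` and `merge` are our own.

```javascript
// Merge sort: split the array in half, sort each half recursively,
// then merge the two sorted halves. There are about log2(n) levels of
// splitting, and each level does O(n) merging work: O(n log n) overall.
function mergeSort(array) {
  if (array.length <= 1) return array
  const mid = Math.floor(array.length / 2)
  const left = mergeSort(array.slice(0, mid))
  const right = mergeSort(array.slice(mid))
  return merge(left, right)
}

// Merges two already-sorted arrays into one sorted array in linear time.
function merge(left, right) {
  const result = []
  let i = 0
  let j = 0
  while (i < left.length && j < right.length) {
    // <= keeps equal elements in their original order (stable sort)
    if (left[i] <= right[j]) result.push(left[i++])
    else result.push(right[j++])
  }
  // One side is exhausted; append whatever remains of the other
  return result.concat(left.slice(i), right.slice(j))
}

console.log(mergeSort([38, 27, 43, 3, 9, 82, 10]).join(', ')) // 3, 9, 10, 27, 38, 43, 82
```

The structure matches the decomposition described earlier: a logarithmic number of levels, each doing linear work.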

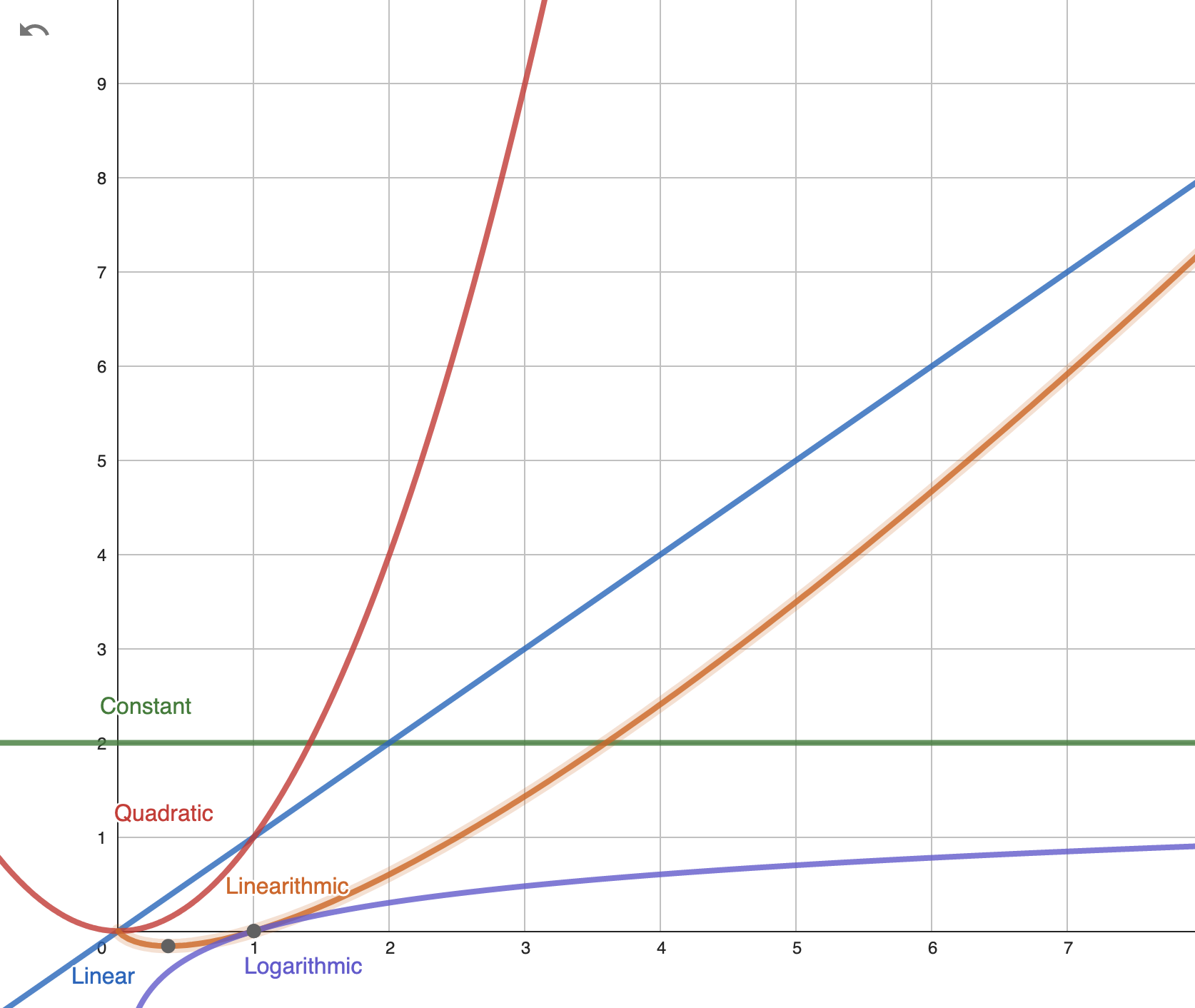

Conclusion

Let's summarize everything above and put all these complexities on one chart.

For really large amounts of data (say, billions of elements), the scale from worst to best looks like this: O(n^2) -> O(n log n) -> O(n) -> O(log n) -> O(1).
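To make this ordering concrete, the sketch below evaluates each growth function for a few values of n. Like Big O itself, it ignores constant factors, so it shows trends rather than real running times. The `growth` object is a name chosen for this illustration.

```javascript
// Abstract step counts for each complexity class (constants ignored).
const growth = {
  'O(1)': () => 1,
  'O(log n)': (n) => Math.log2(n),
  'O(n)': (n) => n,
  'O(n log n)': (n) => n * Math.log2(n),
  'O(n^2)': (n) => n * n,
}

for (const n of [10, 1000, 1000000]) {
  const row = Object.entries(growth).map(
    ([name, f]) => `${name}=${Math.round(f(n))}`
  )
  console.log(`n=${n}: ${row.join(', ')}`)
}
```

For n = 1,000,000 the O(n^2) column already reaches 10^12, while O(n log n) stays around 2 * 10^7 and O(log n) around 20.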

I want to emphasize once again that these are the complexities most often encountered in programming; the full set of complexity classes is larger (for example, O(2^n) and O(n!)). Keep in mind that the same task usually has many possible solutions, and this notation is one tool for evaluating them and choosing the best one, but far from the only one. If you write super-optimal code and reach constant complexity, but the code is impossible or hard to maintain, then it's still bad code.

P.S. A useful cheat sheet for remembering time and space complexity: BigoCheatSheet.

Opinions expressed by DZone contributors are their own.
