Building a Distributed Rate Limiter That Scales Horizontally

Rate limiting is important in distributed systems. Learn how Ably Realtime built scalable distributed rate limiting for their platform-as-a-service.

Join the DZone community and get the full member experience.

Join For FreeAbly Realtime is a distributed pub/sub messaging platform. As a platform-as-a-service (PaaS), we have various limits on our customers’ usage, to allow us to provision service capacity efficiently, to ensure one customer’s unexpected usage doesn’t affect any other customer, or just to enforce the hard limits of a given subscription plan. Some of these are applied on a monthly basis, some hourly, and some are instantaneous rate limits.

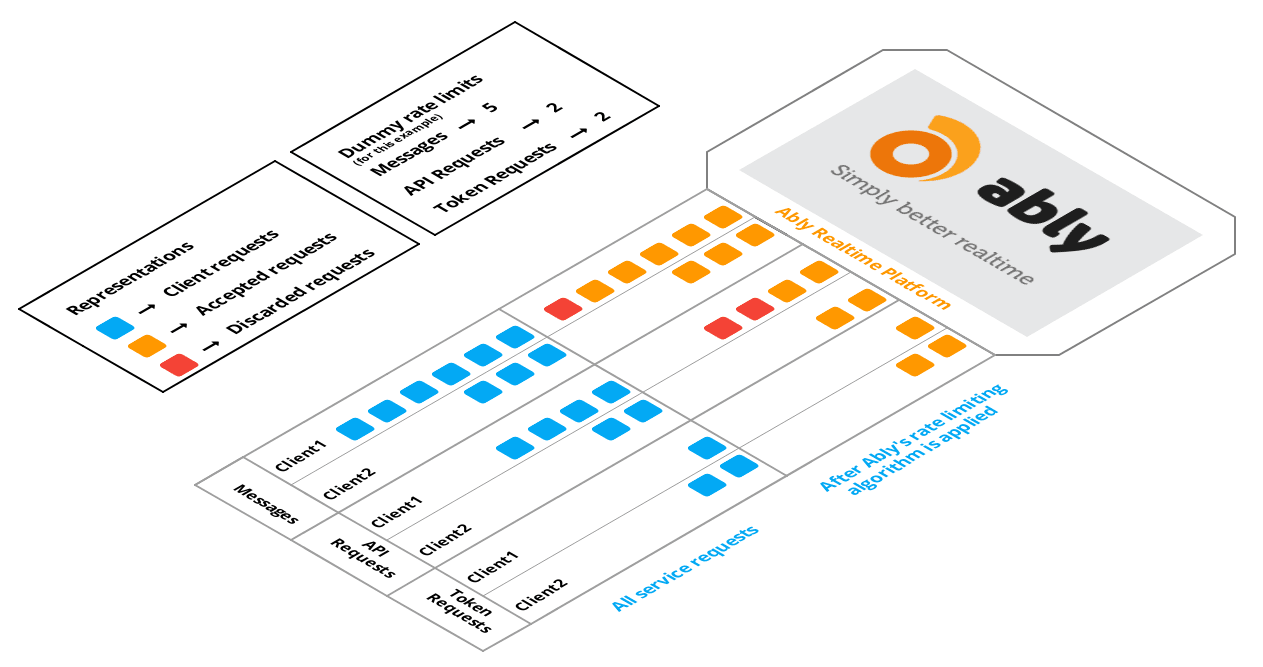

A simple illustration to understand rate-limiting.

A simple illustration to understand rate-limiting.

Previously, the account-wide instantaneous rate limits weren't really enforced. Or rather, they could be enforced locally-on the collective usage of any connections for an account that happened to land on some given node or channels that happen (using consistent hashing) to end up on the same node-but not system-wide. So enforcement was light touch: the 'true' achievable limit might effectively be many times the configured limit for the account.

For many things, we had no instantaneous rate limits at all. In particular, the total message usage for a given package had no instantaneous limit, it was only enforced on a monthly and hourly basis. The hourly limit isn't just a pro-rata translation of the monthly limit; typically it would permit bursts of up to 10x the pro-rata monthly rate, which means you can use your month's allocation in 3 days. But without a corresponding maximum instantaneous rate, there was nothing stopping anyone using up their entire hour's quota in a few seconds. Additionally, the threshold at which we actually impose an account restriction is 2.5x the nominal limit (to give customers plenty of margins to react to warnings without disrupting their own users). Combined, this means that someone with a package with (say) a hundred million messages/month could use 3.5 million messages in a few seconds without restriction.

This did occasionally happen; a customer (often one with the majority of its users connecting to one of our smaller datacenters) would publish a flurry of messages or presence operations on channels with very high fanout, resulting in a few million messages being sent or received in a few seconds, unacceptably increasing message latency for other customers in that datacenter.

While we had per-connection and per-channel rate limits (by default 50 messages per second per connection, both inbound and outbound, and 50 messages per second per channel), that only helps to control a single runaway connection or channel. An account with, say, twenty thousand active connections that suddenly starts a high-fanout publish a pattern that saturates every connection could use a million messages per second without hitting any per-connection limits.

The problem for us is the 'suddenly' part: if that same usage had ramped up gradually over a few minutes, it wouldn't have been a problem: our autoscaling would have kicked in and added enough capacity to handle it. Such high usage only causes issues if its very rapid onset means that autoscaling is unable to react in time.

So we decided we needed to introduce an account-wide, global, near-instantaneous message rate limit.

Design Constraints

We had a few constraints we wanted to satisfy:

1. Guaranteed Message Delivery

We never, ever silently drop messages. When we reject a message, it must be at the time of publishing, so that the publisher can be notified that the publish failed. The callback (or equivalent for the client library they're using) that they passed to publish() will be called with an error, with an error code indicating the specific reason that the publish was rejected, and the publisher can take some appropriate action — e.g. for a rate limit, perhaps waiting a few seconds and retrying. (For subscription-side issues, such as messages dropped due to the server-to-client rate limit of the subscriber, the publish isn't rejected - it might have succeeded for every other subscriber to that channel - instead, the subscriber is sent a notification that they have lost message continuity on that channel, and may want to use the history API to recover what they missed.)

2. No Blind Fanout Limit

Some use cases, such as providing realtime match updates for the Australian Open, involve millions of connections subscribing to a single channel. That channel can have a high message rate, and that's fine - there's only one channel, and we plan appropriate capacity for it. If rate-limiting was performed based only on measurements made at the level of individual channels, we would be unable to distinguish between that case and a problematic one in which there was a surge of similar traffic, but on many channels simultaneously.

3. Scalability

Any solution should not impose any burdens that affect our ability to scale up the cluster.

4. Throughput Stability

We wanted a solution that would give stable behavior under load. For example, we didn't want something like our hourly limit only shorter, that would just impose a total restriction on messages after an instantaneous rate had been exceeded, as that would just result in unstable behaviour, where message rate oscillates between zero and the excessive rate, over whatever interval the global rate is aggregated (eg every few seconds). That would be better than nothing, but we wanted something that gives sustainable, non-oscillatory throughput.

5. Predictability

An account that is well-behaved (stays strictly within its limits) should never have messages suppressed, no matter how close to the limits it gets. That is, we don't want to do any kind of predictive or heuristic limiting that might lead to messages being rejected in situations where, if left to its own devices, the account may not have breached any limits.

6. Fast Reaction Time

We want to start being able to enforce the rate limit within a few seconds of problematic activity.

Of course, some of these are in tension with each other (such as 5 and 6).

The Solution

For stable behavior under a demand that exceeds the permitted rate, we decided that we needed to continuously suppress a fraction of the attempted operations. For the mechanism, we decided to piggy-back on our existing stats functionality.

Every account in the system that's currently being used has an "Account Master" role somewhere in the cluster (determined by consistent hashing), responsible for stats collection, aggregation and limit enforcement. At most every two seconds, each of the clients of that role makes an RPC call to it with a rollup of any stats that have changed (how many messages of various kinds had been sent or received, current connection and channel counts, and so on) since the last call, if any. Since we'd be basing the limits on these stats, we just added a reply to the RPC call that contained a list of the operations that are subject to partial suppression, and a suppressionFactor (i.e. the fraction of operations to reject) to apply to each one.

The suppressionFactor is a float between 0 and 1 that determines the probability that that operation will be permitted. So, for example, if "messages.maxRate" had a suppressionFactor of 0.5, then each publish would (independently) have a 50% chance of being permitted.

This satisfies constraint 1: since the messages are rejected at publish time, we still uphold our guarantee to deliver every message we accept. Having suppression be probabilistic helps with constraint 4.

In our first proof-of-concept implementation, we set the suppression factor progressively based on the degree by which the account was beyond its limit, with a linear profile. So an account exactly at its rate limit would not be suppressed at all, one at (say) three times its rate limit would have 100% suppression, and one at twice its rate limit would, therefore, have 50% suppression.

Why the linear profile? Were we to calculate the suppression factor to bring the rate exactly down to the limit, after a full cycle with that suppression factor, the account's actual message usage would (by definition) be within the limit, so the suppression factor would go back to 0, allowing everything through again, and so on. We expected that a sufficiently long linear ramp-down (in particular, one longer than the limit), combined with the moving average, would reduce this: such a ramp would, for publish rates less than L(R - 1) (where L is the limit and R is the ramp factor, the length of the ramp-down as a proportion of the limit), have suppression rate smaller than it would be in a bring-it-down-to-the-limit approach (by a factor equal to the ramp factor minus 1), damping the oscillation. We'd end up with, if not quite a stable equilibrium, at least some damped oscillation around some value above the limit.

To further minimize oscillation, the rate damper was based on a moving average of the rate measured over 24 seconds (comprising 4 sub-intervals of 6 seconds, each with a known instantaneous rate).

In testing, it didn't work. Or, more accurately, we realized we were focused on the wrong thing.

We were thinking about getting stable behavior for accounts that creep up to just over the limit - or even twice the limit, or something of that order. But that wasn't the behavior that had been causing us issues. It was the accounts that suddenly went way, way over their limit (or rather, what the rate limit would have been had there been one) that were affecting service for other customers. For that behaviour, the linear ramp-down was basically irrelevant — the deluge is big enough to trigger full 0-to-100-and-back-again oscillation, with a 24s period.

(We could have further increased the ramp size and/or the moving average window, but that would have just pushed the problem further out, not to mention make it too light-touch for lesser breaches.)

After kicking around a few tweaks to the mechanism — such as nonlinear ramp-down curves - we concluded that there wasn't any sensible way to avoid oscillation completely if we constrained ourselves to calculate it based on the achieved (i.e. post-suppression) message rate

So we decided to remove that constraint. We started sending the Account Master a stat for how many messages would have been generated, had they succeeded, by those publish attempts that were in fact rejected for breaching an instantaneous rate limit.

For example, if you publish to a channel with 1000 subscribers, then that uses 1001 messages from your package allocation if it succeeds (one for the publish and one for each subscriber). We started sending that number (in a separate stat that isn't aggregated into package usage) even if the publish attempt is rejected. This means that the rate limiter can now know the total that would have been sent and received had there been no suppression, which means it can just do a naive calculation of the suppression rate that would be needed to ensure that the successful publish would result in aggregate message rate exactly at the limit. This avoids the oscillation problem entirely.

This was a big improvement. Combined with a few further tweaks, this worked well enough to roll it out to production. (One such tweak was to use a modified moving average that ignores any in-progress sub-interval if the current data point is below the unadjusted mean. This means that we don't get an oscillation due to the moving average aggregating over 6s discrete intervals, but it can still react quickly if there's a sudden spike in the current interval.)

There is still more work to be done. For example, even with the tweaks, the feedback loop still takes a few seconds longer than we'd like.

Our plan for the next step is to add a per-channel limiter that can rate-limit its channel if the activity on that channel alone would be sufficient to exceed the account-wide rate limit (or possibly a similar one that aggregates from all channels for a given account that happen to share a given node, and so can be aggregated without any network delays). That will mean we won't need the full distributed feedback loop to make limiting decisions that could have been made at a local level.

Published at DZone with permission of Simon Woolf, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments