DDD: Interchange Context and Microservices

Learn how to use an interchange context to keep an error in one microservice from bleeding out and corrupting other services.

In my previous two articles, I described bounded contexts and context maps in detail, and we learned how to segregate domain models using bounded contexts. We also learned how to use context maps judiciously to understand the relationship between two contexts. Here, "relationship" is a broad term: it does not only represent a technical relationship, it also covers the relationship between the owners of the services, such as who is in a commanding position and who acts as downstream. We also looked at different strategies based on those relationships, like partnership, upstream/downstream, and the anti-corruption layer.

In this article, we deal with a special case: what happens when something goes wrong in the design of a microservice, and how that error bleeds into and corrupts other services. We then look at how to solve the problem using an interchange context.

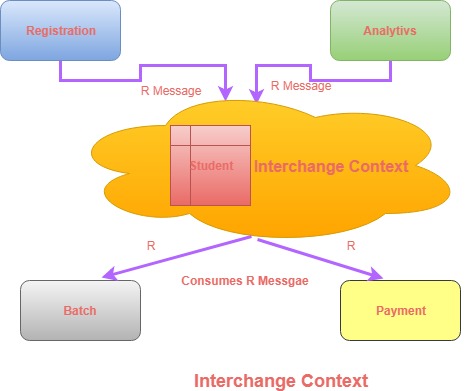

Take our online student registration system as an example. The Registration module is in a commanding position: it has a partnership relationship with the Analytics module, and both the Payment module and the Batch Scheduler are downstream of it. Because the Registration module is in a commanding position, everyone has to follow the language it publishes. Whatever message the Registration module API publishes, the Batch and Payment modules have to receive that same message, so if the Registration module publishes an "R language," every other module has to consume that R language. In this system, R is the de facto standard language. A real-world analogy is the spread of English through the British Empire: English became the de facto standard language for much of the world. A British person and an Indian person typically communicate in English, not the reverse. Even an Indian person and a Chinese person often talk to each other in English, with no British person involved.

Now, a problem occurs if the Registration module has design issues; say, the boundary is not properly defined, the message structure is poorly designed, or edge cases and exceptions are not handled properly. In that case, in spite of the physical boundary (the bounded context), these problems bleed into all dependent services, and the whole context map is polluted by the error in the Registration module. The Registration module is now acting as a big ball of mud (BBoM); Eric Evans called it a small ball of mud. One thing is clear: if an upstream system in a commanding position is in trouble, the whole microservice architecture becomes a mess. As architects, we have to prevent errors from bleeding from upstream microservices/systems into downstream services.
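To make the bleeding concrete, here is a minimal sketch. It assumes a hypothetical `RegistrationEvent` message whose `fee` field is poorly designed (free text, sometimes missing); the field names and both consumer functions are illustrative and not taken from any real system. Because both downstream modules read the raw upstream message, one upstream flaw breaks both of them.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class RegistrationEvent:
    """Hypothetical "R language" message published by the Registration module."""
    student_id: str
    course_code: str
    fee: Optional[str]  # assumed design flaw: amount as free text, may be missing


def payment_amount_cents(event: RegistrationEvent) -> int:
    # The Payment module consumes R directly, so it inherits the flaw.
    return int(round(float(event.fee) * 100))


def batch_needs_payment(event: RegistrationEvent) -> bool:
    # The Batch Scheduler reads the same raw field and inherits the same flaw.
    return float(event.fee) > 0


def try_consume(consumer: Callable, event: RegistrationEvent) -> Tuple[str, object]:
    """Run a downstream consumer and report whether the upstream message broke it."""
    try:
        return ("ok", consumer(event))
    except (TypeError, ValueError) as exc:
        return ("failed", type(exc).__name__)


good = RegistrationEvent("S-1", "MATH-101", "120.50")
bad = RegistrationEvent("S-2", "MATH-101", None)  # upstream forgot the fee

for consumer in (payment_amount_cents, batch_needs_payment):
    print(consumer.__name__, try_consume(consumer, good), try_consume(consumer, bad))
```

Neither downstream module has a bug of its own here; both fail only because they are coupled to the upstream message as published.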

If we dig through the DDD patterns, we find that the anti-corruption layer (ACL) does exactly this: it sits in front of the consumer microservice/system and translates the producer/upstream message structure into the consumer's own message structure. It also handles any errors, so the ACL protects consumer services from external systems.
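As a rough illustration of that translation step, the sketch below puts an ACL in front of a Payment consumer. The class `RegistrationAcl`, the `PaymentRequest` model, and the upstream keys `studentId` and `fee` are all assumptions made for this example. The ACL is owned by the consumer, and it either returns a valid internal object or rejects the message.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation
from typing import Optional


@dataclass(frozen=True)
class PaymentRequest:
    """The Payment module's own model (hypothetical)."""
    payer_id: str
    amount: Decimal


class RegistrationAcl:
    """Anti-corruption layer that sits in front of the Payment module."""

    def translate(self, upstream: dict) -> Optional[PaymentRequest]:
        # Read the upstream fields defensively; never trust their shape.
        student_id = upstream.get("studentId")
        raw_fee = upstream.get("fee")
        if not student_id or raw_fee is None:
            return None  # reject instead of letting the error bleed inward
        try:
            amount = Decimal(str(raw_fee))
        except InvalidOperation:
            return None  # fee was not a number
        if not amount.is_finite() or amount < 0:
            return None  # NaN, infinity, or a negative fee
        # Translate into the consumer's own vocabulary.
        return PaymentRequest(payer_id=student_id, amount=amount)


acl = RegistrationAcl()
print(acl.translate({"studentId": "S-1", "fee": "120.50"}))
print(acl.translate({"studentId": "S-2", "fee": "abc"}))
```

Returning `None` for a rejected message is just one option; a real ACL might log the message, route it to a dead-letter queue, or raise a domain-specific error instead.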

But think about a system where two services are in a partnership relationship. They produce and consume each other's messages, each depends on the other, and other services depend on both of them. In that case, rather than putting an ACL in front of each partner, we can use a new concept called Interchange Context.

Interchange Context is a technique where both partners agree on the model and message structure, so there is one ubiquitous language shared by both partners. They publish one message together, and other systems consume that message. In this way, the two partners can independently change their internal code and internal message structures, while in the Interchange Context they maintain a common terminology (ubiquitous language) that both understand. Every other service that depends on these two partners consumes messages from the Interchange Context.
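One way to picture this is the sketch below. It assumes a hypothetical shared message `StudentEnrolled` and two invented internal models, one for each partner; none of these names come from a real system. Each partner keeps its own model and owns a small mapper into the shared message, and a downstream consumer depends only on the shared message.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class StudentEnrolled:
    """Interchange message: the vocabulary both partners agreed on (hypothetical)."""
    student_id: str
    course_code: str
    fee: Decimal

    def __post_init__(self):
        # The shared contract is validated once, at the boundary.
        if not self.student_id or not self.course_code:
            raise ValueError("student_id and course_code are required")
        if self.fee < 0:
            raise ValueError("fee must not be negative")


@dataclass
class RegistrationRecord:
    """Registration's internal model; free to change independently."""
    sid: str
    course: str
    fee_text: str


@dataclass
class EnrollmentFact:
    """Analytics' internal model; free to change independently."""
    learner_key: str
    offering: str
    revenue_cents: int


def registration_to_interchange(rec: RegistrationRecord) -> StudentEnrolled:
    return StudentEnrolled(rec.sid, rec.course, Decimal(rec.fee_text))


def analytics_to_interchange(fact: EnrollmentFact) -> StudentEnrolled:
    return StudentEnrolled(fact.learner_key, fact.offering, Decimal(fact.revenue_cents) / 100)


def schedule_batch(event: StudentEnrolled) -> str:
    # A downstream consumer sees only the interchange message, never a partner's internals.
    return f"{event.course_code}:{event.student_id}"


from_registration = registration_to_interchange(RegistrationRecord("S-1", "MATH-101", "120.50"))
from_analytics = analytics_to_interchange(EnrollmentFact("S-1", "MATH-101", 12050))
print(from_registration == from_analytics, schedule_batch(from_registration))
```

If Registration later renames `fee_text` or changes how it stores fees, only `registration_to_interchange` has to change; the downstream `schedule_batch` is unaffected as long as the agreed message stays the same.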

Conclusion

Interchange Context is more robust than the anti-corruption layer. It stops errors from bleeding out of upstream services. If an upstream service has direct consumers, its developers must be very careful about the API, because any change or error in the API will break the consumers. Interchange Context acts as a kind of centralized facade where the partners create a ubiquitous language and others consume messages from it. Partners and dependent services are all free to modify their own data models and message structures, but when they communicate with the Interchange Context, the internal model must be converted to the Interchange Context message structures.

Published at DZone with permission of Shamik Mitra, DZone MVB. See the original article here.
