How I Taught OpenAI a New Programming Language With Fine-Tuning

We fine-tuned OpenAI to understand a programming language it had no prior knowledge of. This article explains what we learned.

Join the DZone community and get the full member experience.

Join For FreeIf you go to ChatGPT and ask it about Hyperlambda, at best, it will hallucinate wildly and generate something that resembles a bastardized mutation between Bash and Python. For the record, Claude is not any better here. The reason is that there's simply not enough Hyperlambda out there for the website scrapers from OpenAI and Anthropic to pick up its syntax. This was a sore thumb for me for a long time, so I realised I just had to do something about it. So, I started fine-tuning OpenAI on Hyperlambda.

If I had known what a job this would be, I'd probably never have started. To teach Hyperlambda to the point where it does "most simple things correct," it took me 3,500 training snippets and about 550 validation snippets. Each snippet is, on average, about 20 lines of code; multiplied by 4,000, we're up to 80 KLOC of code.

Notice that these are 80,000 lines of code with absolutely no purpose whatsoever besides being a requirement to teach an LLM a new programming language. So, as you can guess, it wasn't the most motivating job. If you're interested in seeing my training snippets, I've made them publicly available in a GitHub repo.

When was the last time you created 80,000 lines of unusable code? KNOWING they would be completely useless too may I add as you started out...

Lessons Learned

The first and most important thing I can tell you is to completely ignore everything ChatGPT tells you about fine-tuning. The same is true for Claude. If you ask ChatGPT for help to set your hyperparameters, it will tell you that you should set the learning rate multiplier at 0.1 to 0.2. This might be OK if you're OpenAI, and you're scraping the entire web, but for teaching GPT-40-mini a new programming language, you have to turn this number up significantly!

The defaults (1.8) are definitely the LR multiplier that gives me the best result. So, I chose the defaults for all hyperparameters, resulting in a medium-good LLM capable of "doing most simple things correctly."

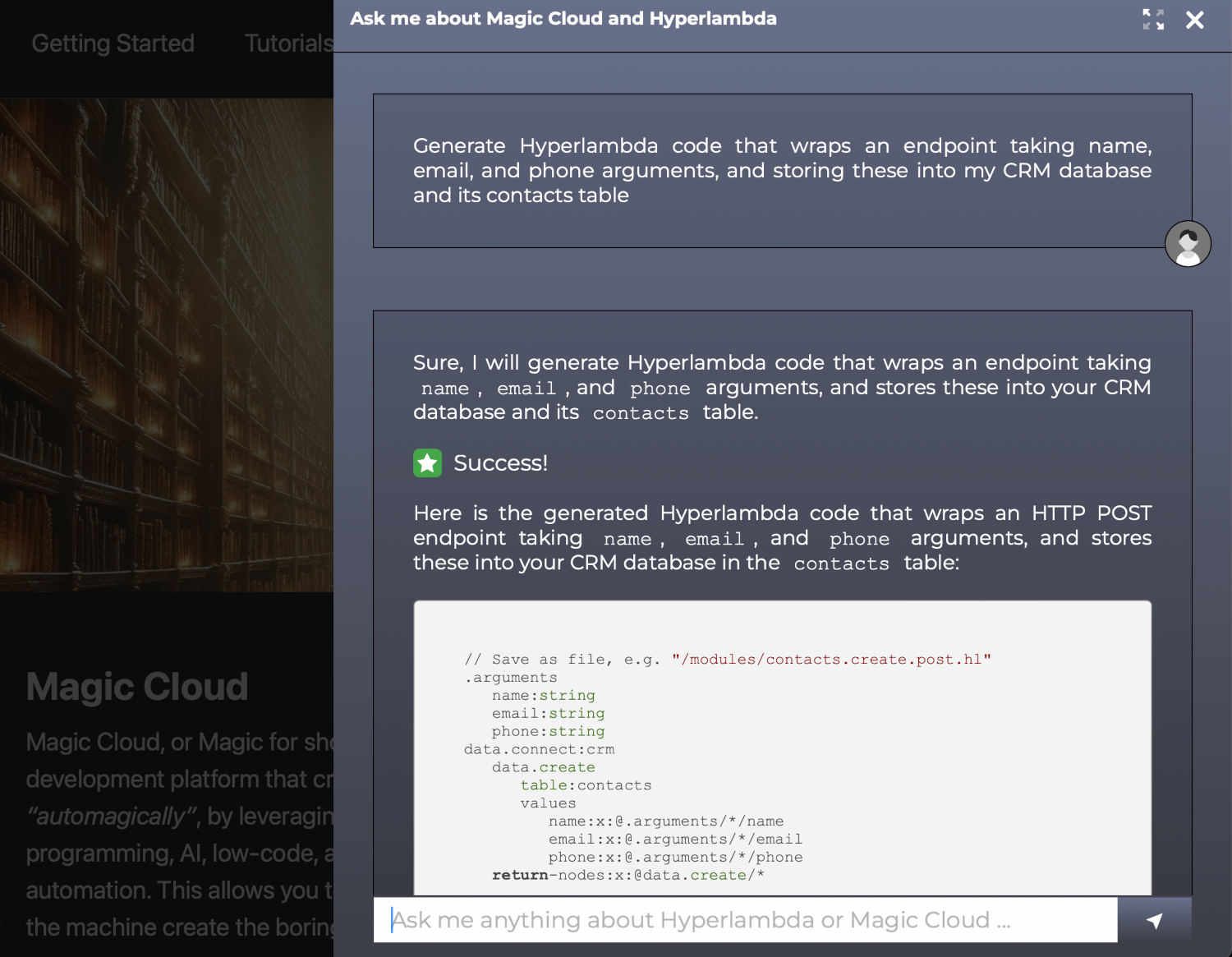

FYI, the above is 100% correct Hyperlambda, and for "simple requests" such as illustrated above, the model produces an accuracy of roughly 80 to 90%.

Training Snippets

If you want to fine-tune OpenAI, you will need to create structured training snippets. These are divided into "prompt" and "completion" values, where the prompt is an imagined question, and the completion is the expected result from the LLM. Below is a screenshot.

Pssst... imagine writing 4,000 of these, and you get an idea of the magnitude of the work.

However, don't go overboard with rephrasing your prompts. If you've got high-quality file-level comments explaining what a file does, that's probably good enough. The LLM will extrapolate these into an understanding that allows it to answer questions regardless of how your "prompts" are formatted.

For me that implied I could start out with my system folder, which contains all core building blocks for the magic backend. Then I use the CRUD generator from Magic, which allows me to generate CRUD HTTP web API endpoints wrapping whatever database you want to wrap. This gave me roughly 1,000 high quality training snippets, leaving only 2,500 having to be implemented by me and my team.

In addition, I created a custom GPT, which I used internally to take a single Hyperlambda file and generate multiple "variations" from it. This allowed me to take a snippet storing name, email, and phone into a CRM/contacts database, and generate five similar snippets saving other object types into the database. This helped the LLM to understand what arguments are, and which arguments are changing according to my table structure, etc. ChatGPT and generative AI were still beneficial during this process, albeit not as much as you'd typically think.

The bulk of the snippets still had to be generated from a rich and varied code base, solving all sort of problems, from database access, to sending emails, to generating Fibonacci numbers and calculating even numbers using Magic's math libraries.

Each training snippet should also contain a "system" message, such as for instance; "You are a Hyperlambda software development assistant. Your task is to generate and respond with Hyperlambda."

Cost

Training GPT-40-mini will cost you $2 to $5 per run with ~2MB of data in your training files and validation files. Training on GPT-40 (the big model) will cost you $50 to $100 per run.

I did several runs with the big GPT-40, and the results were actually worse than GPT-40-mini. My suggestion is to use the mini model unless you've got very deep pockets. I spent a total of $1,500 to pull this through, which required hundreds of test runs and QA iterations, weeding out errors and correcting them as I went forward.

Personally, I would not even consider trying to fine-tune GPT-40 (the big model) today for these reasons.

Conclusion

Fine-tuning an LLM is ridiculously hard work, and if you search for it, the only real information you'll find is rubbish blog posts about how to fine-tune the model to become "sarcastic". This takes 10 snippets. Training the LLM on a completely new domain requires thousands of snippets. A new programming language, well, below are the figures.

- 3,500 snippets become a "tolerable LLM doing more good than damage," which is where we're at now.

- 5,000 snippets. Now, it is good.

- 10,000 snippets. At this point, the LLM will understand your new language almost as well as it understands Python or JavaScript.

If you want to fine-tune an LLM, I can tell you it is possible, but unless you've got a lot of existing data that can easily be transformed into OpenAI's "prompt and completion" structure, the job is massive. Personally, I spent one month writing Hyperlambda code for some 3 to 6 hours per day, 7 days a week — and at this point, it's still only "tolerable."

Do I recommend it? Well, for 99% of your stuff simply adding RAG to the LLM is the correct solution. But for a programming language such as Hyperlambda it's not working, and you have to use fine tuning.

I did not cover the structure of your training files in this article, but basically these are assumed to be JSONL files (each line in your file is a single JSON object). If you're interested in seeing this, you can find information about this on OpenAI's website.

However, if you start down this path, I have to want you. It is an insane amount of work! And I doubt I'd want to do it again if I knew just how much work it actually was. But the idea is to fill in with more and more training snippets over time until I reach the sweet spot of 5,000 snippets, at which point we've got a 100% working backend AI-generator solution for creating backend code and APIs.

If you want to try out the Hyperlambda generator, you can try it out in our AI chatbot. However, you have to phrase your question starting out with something like "Generate Hyperlambda that..." to put your backend requirements into the prompt.

Opinions expressed by DZone contributors are their own.

Comments