First Steps With GCP Kubernetes Engine

Follow this introductory tutorial to get started with creating and deploying an application in GCP Kubernetes Engine.

Over the past year, we wrote several articles using Minikube as a Kubernetes cluster to experiment with. In this post, we will take our first steps into Google Cloud Platform (GCP), and more specifically into Kubernetes Engine. Let’s see whether going to the Cloud makes our lives even easier. We will create a GCP account, create a Kubernetes cluster, deploy our application manually, and then deploy it by means of Helm.

1. Create a GCP Account

The first thing to do is to create a GCP account, so we navigate to the GCP website. Choose Try GCP for Free, where you will be asked to log in with your Google account (you will have to create one first if you don’t have one already). After logging in, you will be asked for your credit card details. These are only used for verification; you will not be billed without your explicit approval. When the verification is finished, you are ready to go and you will receive $300 of free credits, valid for 12 months, to experiment with paid services. The GCP Cloud Console, which is your main entry point for the GCP services, is accessible here.

2. Create the Kubernetes Cluster

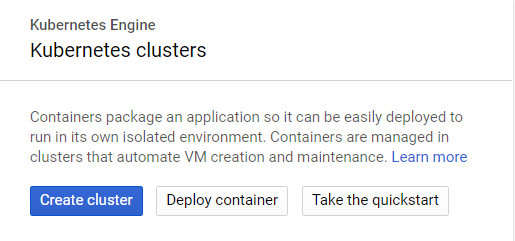

We create a project MyKubernetesPlanet, navigate to the Menu and choose Kubernetes Engine:

The following message will be shown when choosing Kubernetes Engine for the first time: Kubernetes Engine API is being enabled. This may take a minute or more.

The next step is to create the Kubernetes cluster by clicking the Create cluster button. A cluster consists of at least one master machine and several nodes.

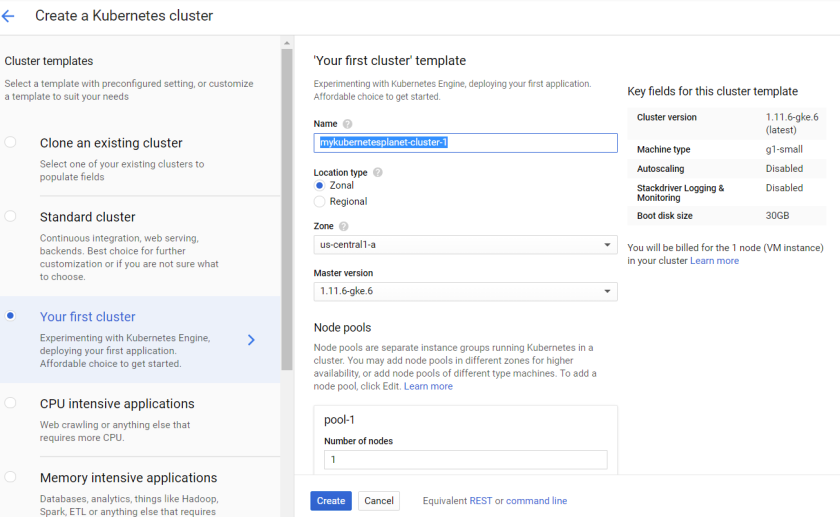

This will bring us to a screen where we can choose from several Cluster templates. We will start modestly and choose the Your first cluster template. We change the name to mykubernetesplanet-cluster-1 and leave the other settings at their default values. To finish, we click the Create button.

That’s it! In just a few minutes, we have an up-and-running Kubernetes cluster. Isn’t that great?!
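The same cluster could presumably also be created from the command line rather than through the Console. The following is a minimal sketch, not the method used in this post: the helper name create_cluster is hypothetical, the zone is the one used in the get-credentials command in this post, and the single node is an assumption about what the template provisions.

```shell
#!/usr/bin/env bash
# Sketch: create the cluster with gcloud instead of the Console.
# CLUSTER_NAME and ZONE mirror the values used in this post.
# NUM_NODES=1 is an assumption, not a value confirmed by the post.
CLUSTER_NAME="mykubernetesplanet-cluster-1"
ZONE="us-central1-a"
NUM_NODES=1

create_cluster() {
  gcloud container clusters create "$CLUSTER_NAME" \
    --zone "$ZONE" \
    --num-nodes "$NUM_NODES"
}

# Usage (creates billable resources, so it is not called automatically):
#   create_cluster
```

Keeping the name and zone in variables makes it easier to reuse the same values for the credential and cleanup commands.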

Open the Cloud Shell at the top right of the page.

This will open a shell in the browser.

Enter the following command in order to set credential information:

$ gcloud container clusters get-credentials mykubernetesplanet-cluster-1 --zone us-central1-a

Fetching cluster endpoint and auth data.

kubeconfig entry generated for mykubernetesplanet-cluster-1.

Now we are able to use kubectl commands:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

gke-mykubernetesplanet-cluster-pool-1-83f61445-tpfj Ready <none> 35m v1.11.6-gke.6

3. Deploy Manually

In this section, we will deploy a simple Spring Boot application running in a Docker container. We will use the mykubernetesplanet Docker image which we created in a previous post. The sources are available at GitHub and the Docker image is available at DockerHub. Version 0.0.1-SNAPSHOT contains a hello URL which prints a Hello Kubernetes welcome message; version 0.0.2-SNAPSHOT also prints the host name where the application is running.

3.1 Deploy a Docker Image

By means of Cloud Shell, deploy the mykubernetesplanet:0.0.1-SNAPSHOT Docker image:

$ kubectl run mykubernetesplanet --image=mydeveloperplanet/mykubernetesplanet:0.0.1-SNAPSHOT --port=8080

deployment.apps "mykubernetesplanet" created

Expose the application publicly:

$ kubectl expose deployment mykubernetesplanet --type="LoadBalancer"

service "mykubernetesplanet" exposed

Retrieve the external IP:

$ kubectl get service mykubernetesplanet --watch

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mykubernetesplanet LoadBalancer 10.47.244.210 35.238.243.92 8080:31838/TCP 48s

The hello URL is accessible via your browser at: http://35.238.243.92:8080/hello

This will return our Hello Kubernetes welcome message: Hello Kubernetes!
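The two imperative commands above can also be expressed declaratively. On newer kubectl releases (1.18 and later), kubectl run creates a bare Pod rather than a Deployment, so a manifest is one way to reproduce this step there. The sketch below is not taken from the original post: the file name and the app label are assumptions chosen for illustration, while the image, port, and Service type mirror the commands above.

```shell
#!/usr/bin/env bash
# Sketch: write a manifest describing the Deployment and its LoadBalancer Service.
# The file name and the "app" label are assumptions made for this example.
MANIFEST="mykubernetesplanet.yaml"

cat > "$MANIFEST" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mykubernetesplanet
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mykubernetesplanet
  template:
    metadata:
      labels:
        app: mykubernetesplanet
    spec:
      containers:
        - name: mykubernetesplanet
          image: mydeveloperplanet/mykubernetesplanet:0.0.1-SNAPSHOT
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: mykubernetesplanet
spec:
  type: LoadBalancer
  selector:
    app: mykubernetesplanet
  ports:
    - port: 8080
      targetPort: 8080
EOF

echo "Wrote $MANIFEST"
# Apply it against the cluster with:
#   kubectl apply -f mykubernetesplanet.yaml
```

A manifest like this can be kept under version control, which makes later changes to the image tag or replica count easier to review.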

3.2 Update a Docker Image

Let’s update our application to version 0.0.2-SNAPSHOT:

$ kubectl set image deployments/mykubernetesplanet mykubernetesplanet=mydeveloperplanet/mykubernetesplanet:0.0.2-SNAPSHOT

deployment.apps "mykubernetesplanet" image updated

This will automatically update our application. Browse to the hello URL and the response is now:

Hello Kubernetes! From host: mykubernetesplanet-74ffc8d4b5-7jzz6/10.44.0.10
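The set image command returns as soon as the Deployment has been changed, while the new Pod may still be starting. A minimal sketch of how the update could be combined with a wait for the rollout is shown below; update_image is a hypothetical helper name, and the sketch assumes the container name equals the deployment name, as in the command above.

```shell
#!/usr/bin/env bash
# Sketch: update the image and block until the rollout has completed.
# update_image is a hypothetical helper; the kubectl subcommands are standard.
DEPLOYMENT="mykubernetesplanet"
IMAGE_REPO="mydeveloperplanet/mykubernetesplanet"

update_image() {
  local tag="$1"
  # Change the image of the container named after the deployment,
  # then wait for the new Pods to become available.
  kubectl set image "deployments/$DEPLOYMENT" "$DEPLOYMENT=$IMAGE_REPO:$tag" &&
    kubectl rollout status "deployment/$DEPLOYMENT"
}

# Usage:
#   update_image 0.0.2-SNAPSHOT
```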

3.3 Scale Up Our Application

Currently, our application is running on 1 Pod. Let’s scale up our application to 2 Pods:

$ kubectl scale deployment mykubernetesplanet --replicas=2

deployment.extensions "mykubernetesplanet" scaled

Check the number of Pods:

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mykubernetesplanet-74ffc8d4b5-7jzz6 1/1 Running 0 3m 10.44.0.10 gke-mykubernetesplanet-cluster-pool-1-83f61445-tpfj

mykubernetesplanet-74ffc8d4b5-j9jb9 1/1 Running 0 1m 10.44.0.11 gke-mykubernetesplanet-cluster-pool-1-83f61445-tpfj

Also, check the deployment settings which show us the status of our deployment:

$ kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

mykubernetesplanet 2 2 2 2 12m

4. Explore the GCP Console

With Minikube, the dashboard provided us with a visual representation of the configuration of our cluster and the ability to change the configuration of deployments, services, etc. The GCP Console provides us with similar functionality.

The Cluster section shows us some general cluster information:

The Workloads section shows us information about our deployment:

The Services section shows us information about how our application is exposed to the outside world:

After our experiment, we removed the cluster in order to avoid extra charges against our free credits. Navigate to the Cluster and press the recycle bin icon in order to remove the cluster. The actions in this section reduced our free credits by a few cents.
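The cleanup can presumably also be done from Cloud Shell. The sketch below is an assumption-laden alternative to the recycle bin icon: cleanup is a hypothetical helper name, and the Service is deleted first because a load balancer created for a Service may otherwise be left behind when the cluster is removed.

```shell
#!/usr/bin/env bash
# Sketch: remove the exposed Service and then the cluster itself.
# Names and zone mirror the values used in this post; cleanup is a hypothetical helper.
CLUSTER_NAME="mykubernetesplanet-cluster-1"
ZONE="us-central1-a"
SERVICE="mykubernetesplanet"

cleanup() {
  # Delete the Service first so its cloud load balancer is released.
  kubectl delete service "$SERVICE"
  # --quiet skips the interactive confirmation prompt.
  gcloud container clusters delete "$CLUSTER_NAME" --zone "$ZONE" --quiet
}

# Usage (destructive, so it is not called automatically):
#   cleanup
```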

5. Deploy With Helm

In the previous section, we deployed our application manually to the Kubernetes cluster. In a previous post, we explored how we can deploy by means of Helm. It is advised to read part 1 and part 2 before continuing if you are not acquainted with Helm.

The application we will deploy is the same as in the previous section. Additionally, we will make use of the Helm Chart which is available at GitHub.

Before we start, we need to create a Kubernetes cluster just as we did above and set the credential information in Cloud Shell.

5.1 Install Helm

In order to use Helm, we need to install the Helm Client locally in our GCP account and we need to install the Helm Server (Tiller) application into our cluster.

Download the Helm binary:

$ wget https://storage.googleapis.com/kubernetes-helm/helm-v2.12.3-linux-amd64.tar.gz

Extract the zipped file:

$ tar zxfv helm-v2.12.3-linux-amd64.tar.gz

Copy the Helm binary to your home directory:

$ cp linux-amd64/helm .

Your user account must have the cluster-admin role in order to install Tiller:

$ kubectl create clusterrolebinding user-admin-binding --clusterrole=cluster-admin --user=$(gcloud config get-value account)

Your active configuration is: [cloudshell-8955]

clusterrolebinding.rbac.authorization.k8s.io "user-admin-binding" created

A service account must be created for Tiller:

$ kubectl create serviceaccount tiller --namespace kube-system

serviceaccount "tiller" created

Tiller also must be granted the cluster-admin role:

$ kubectl create clusterrolebinding tiller-admin-binding --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

clusterrolebinding.rbac.authorization.k8s.io "tiller-admin-binding" created

Initialize Helm in order to install Tiller into the Kubernetes cluster:

$ ./helm init --service-account=tiller

$ ./helm repo update

This will install Tiller in an insecure manner. For our test, this does not really matter, but you should incorporate security when using Tiller in production.
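Tiller needs a moment to start after helm init, and chart commands fail until it is reachable. The following sketch shows one way to wait for it; wait_for_tiller is a hypothetical helper name, and the sketch assumes the default Helm 2 installation, in which Tiller runs as the tiller-deploy Deployment in the kube-system namespace.

```shell
#!/usr/bin/env bash
# Sketch: wait until Tiller is running, then confirm client and server respond.
# HELM defaults to the binary copied to the home directory earlier in this post.
HELM="${HELM:-./helm}"

wait_for_tiller() {
  # Assumes the default Tiller deployment name and namespace of Helm 2.
  kubectl rollout status deployment/tiller-deploy --namespace kube-system &&
    "$HELM" version
}

# Usage:
#   wait_for_tiller
```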

5.2 Deploy the Helm Chart

We will deploy the Helm Chart v0.1.0 which makes use of 1 Pod and is exposed as a NodePort Service.

In Cloud Shell, clone the Helm Chart git repository:

$ git clone https://github.com/mydeveloperplanet/myhelmchartplanet.git

Enter the git directory and check out the v0.1.0 tag:

$ cd myhelmchartplanet/

$ git checkout v0.1.0

Execute the following command from outside your git repository; the command searches for a directory with the name of the Helm Chart in the directory from which it is issued. This will deploy the Helm Chart in a local Chart Repository (username is your GCP account).

$ ./helm package myhelmchartplanet

Successfully packaged chart and saved it to: /home/username/myhelmchartplanet-0.1.0.tgz

Check whether our Helm package is available in the local Chart Repository:

$ ./helm search myhelmchartplanet

NAME CHART VERSION APP VERSION DESCRIPTION

local/myhelmchartplanet 0.1.0 0.0.2-SNAPSHOT A Helm chart for Kubernetes

Install the Helm Chart into our Kubernetes cluster:

$ ./helm install myhelmchartplanet

The notes section of the output of the above command allows us to retrieve the IP address and port:

NOTES:

1. Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].nodePort}" services smelly-zorse-myhelmchartplanet)

export NODE_IP=$(kubectl get nodes --namespace default -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

This returns the following: http://10.128.0.4:30036

This isn’t an IP address which is accessible from outside the cluster. We have to do some extra things in order to get it working. First, retrieve the external IP address of our node:

$ kubectl get nodes --output wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

gke-mykubernetesplanet-cluster-pool-1-e2401053-0v6r Ready <none> 1h v1.11.7-gke.4 35.188.104.39 Container-Optimized OS from Google 4.14.89+ docker://17.3.2

Now that we have retrieved our external IP, we need to create a firewall rule to allow TCP traffic to our NodePort:

$ gcloud compute firewall-rules create test-node-port --allow tcp:30036

Created [https://www.googleapis.com/compute/v1/projects/mykubernetesplanet/global/firewalls/test-node-port].

Creating firewall...done.

NAME NETWORK DIRECTION PRIORITY ALLOW DENY DISABLED

test-node-port default INGRESS 1000 tcp:30036 False

The hello URL is accessible via our browser: http://35.188.104.39:30036/hello

This returns the following:

Hello Kubernetes! From host: smelly-zorse-myhelmchartplanet-f4bc8586-jhlp8/10.44.0.10
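The manual lookup of the external node IP could also be scripted. The commands in the Helm notes pick the first address of the node, which turned out to be the internal one; the sketch below instead filters the node addresses on type ExternalIP. The helper name external_hello_url is hypothetical, and the Service name is the release-specific name generated in this post, so it will differ for every install.

```shell
#!/usr/bin/env bash
# Sketch: build the externally reachable hello URL for the NodePort Service.
# RELEASE_SERVICE is the name generated by Helm in this post; yours will differ.
RELEASE_SERVICE="smelly-zorse-myhelmchartplanet"

external_hello_url() {
  local node_port node_ip
  # NodePort assigned to the Service of the Helm release.
  node_port=$(kubectl get service "$RELEASE_SERVICE" --namespace default \
    -o jsonpath='{.spec.ports[0].nodePort}')
  # External address of the first node, selected by address type.
  node_ip=$(kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="ExternalIP")].address}')
  echo "http://$node_ip:$node_port/hello"
}

# Usage:
#   external_hello_url
```

The firewall rule for the NodePort is still required before the printed URL responds from outside the cluster.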

More information on exposing applications via GCP can be found here.

6. Conclusion

In this post, we explored the capabilities of using GCP Kubernetes Engine. We created a cluster, manually deployed an application and used Helm in order to deploy our application. All of these actions went very smoothly and it took us only a few minutes to get it up and running.

Published at DZone with permission of Gunter Rotsaert, DZone MVB. See the original article here.
