How to Automate Hyperparameter Optimization

In this tutorial, see how to automate hyperparameter optimization.

A Beginner’s Guide to Using Bayesian Optimization With Scikit-Optimize

In machine learning and deep learning, “parameters” and “hyperparameters” are two frequently used terms. Parameters are configuration variables that are internal to the model and whose values can be estimated from the training data. Hyperparameters are configuration variables that are external to the model and whose values cannot be estimated from the training data (What is the Difference Between a Parameter and a Hyperparameter?). Hyperparameter values therefore have to be assigned by the practitioner.
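To make the distinction concrete, here is a minimal sketch using a toy one-weight model rather than the LSTM used later in this article. The function name `train` and the data are made up for illustration: the weight `w` is a parameter learned from the data, while `learning_rate` and `n_steps` are hyperparameters that we choose before training.

```python
# Toy illustration: fit y = w * x by gradient descent.
# `w` is a parameter (learned from data); `learning_rate` and `n_steps`
# are hyperparameters (chosen by the practitioner before training).

def train(xs, ys, learning_rate, n_steps):
    w = 0.0  # model parameter, estimated from the training data
    n = len(xs)
    for _ in range(n_steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated with a true slope of 2

w_good = train(xs, ys, learning_rate=0.01, n_steps=200)
w_slow = train(xs, ys, learning_rate=0.0001, n_steps=200)
print(w_good, w_slow)
```

With the same data and the same number of steps, the larger learning rate recovers a slope very close to 2, while the smaller one is still far from it. This is why the choice of hyperparameter values matters.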

Every machine learning and deep learning model has its own set of hyperparameters that must be tuned to obtain a satisfactory result. Because of their architectural complexity, deep learning models tend to have more hyperparameters to optimize than typical machine learning models.

Repeatedly experimenting with different value combinations by hand to find the optimal value for each hyperparameter can be a very time-consuming and tedious task that requires good intuition, a lot of experience, and a deep understanding of the model. Moreover, some hyperparameters take continuous values, which gives an unbounded number of possibilities, and even when a hyperparameter is discrete, the number of possible combinations can be enormous, so performing this task manually is rather difficult. Hyperparameter optimization might therefore seem daunting, but thanks to several readily available libraries, it has become more straightforward. These libraries implement different hyperparameter optimization algorithms so that they can be applied with less effort. A few such libraries are Scikit-Optimize, Scikit-Learn, and Hyperopt.

Several hyperparameter optimization approaches have been used frequently over the years: Grid Search, Random Search, and automated hyperparameter optimization methods. Grid Search and Random Search both set up a grid of hyperparameter values. Grid Search exhaustively explores every value combination to find the one that gives the best accuracy, which makes it very inefficient as the grid grows. Random Search, on the other hand, repeatedly selects random combinations from the grid until the specified number of iterations is reached, and it has been reported to yield better results than Grid Search in many cases. However, even though it can give a good hyperparameter combination, we cannot be certain that it is, in fact, the best combination. Automated hyperparameter optimization uses techniques such as Bayesian Optimization, which carries out a guided search for the best hyperparameters (Hyperparameter Tuning using Grid and Random Search). Research has shown that Bayesian optimization can yield better hyperparameter combinations than Random Search (Bayesian Optimization for Hyperparameter Tuning).
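The difference between the two grid-based approaches can be sketched in a few lines of plain Python. The `objective` function and the grid values below are hypothetical stand-ins for a real validation error and a real search grid.

```python
import itertools
import random

def objective(learning_rate, dropout):
    # Hypothetical stand-in for a validation error; minimum at (0.01, 0.3).
    return (learning_rate - 0.01) ** 2 + (dropout - 0.3) ** 2

grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "dropout": [0.1, 0.3, 0.5],
}

# Grid Search: evaluate every combination (3 x 3 = 9 evaluations).
grid_candidates = list(itertools.product(grid["learning_rate"], grid["dropout"]))
grid_best = min(grid_candidates, key=lambda c: objective(*c))

# Random Search: evaluate a fixed budget of randomly drawn combinations.
rng = random.Random(46)
n_iter = 4
random_candidates = [
    (rng.choice(grid["learning_rate"]), rng.choice(grid["dropout"]))
    for _ in range(n_iter)
]
random_best = min(random_candidates, key=lambda c: objective(*c))

print("grid search:", len(grid_candidates), "evaluations, best", grid_best)
print("random search:", len(random_candidates), "evaluations, best", random_best)
```

Grid Search always pays for all nine evaluations, while Random Search stops at its budget and may or may not have seen the best cell of the grid.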

In this article, we provide a step-by-step guide to performing hyperparameter optimization on a deep learning model using Bayesian Optimization with a Gaussian Process. We use the gp_minimize function provided by the Scikit-Optimize (skopt) library to perform this task. The optimization is performed on a simple stock closing price forecasting model developed using TensorFlow.

Scikit-Optimize (skopt)

Scikit-Optimize is a library that is relatively easier to use than other hyperparameter optimization libraries and also has better community support and documentation. It implements several methods for sequential model-based optimization, which are aimed at minimizing expensive and noisy black-box functions.

Bayesian Optimization using the Gaussian Process

Bayesian optimization is one of the many methods that skopt offers. It builds a posterior distribution over the function being optimized, then uses an acquisition function (e.g., Expected Improvement, EI) on that posterior to choose the next set of parameters to explore. Because Bayesian optimization chooses each new point systematically, based on all the evaluations available so far, it is expected to reach better configurations faster than Grid Search and Random Search, which do not use earlier results. You can read more about the Bayesian optimizer in the skopt documentation.
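As a rough illustration of how an acquisition function scores a candidate point, here is a sketch of the textbook Expected Improvement formula for a minimization problem. It is a simplified form written for this article and not skopt's own implementation. The inputs `mu` and `sigma` stand for the posterior mean and standard deviation at a candidate point, and the numbers are made up.

```python
import math

def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(mu, sigma, best_so_far):
    """Expected Improvement for a minimization problem.

    mu, sigma: posterior mean and standard deviation at a candidate point.
    best_so_far: lowest objective value observed so far.
    """
    if sigma <= 0.0:
        # No uncertainty: the improvement is known exactly.
        return max(best_so_far - mu, 0.0)
    z = (best_so_far - mu) / sigma
    return (best_so_far - mu) * normal_cdf(z) + sigma * normal_pdf(z)

# Two hypothetical candidates with the same predicted mean but different uncertainty.
ei_certain = expected_improvement(mu=3.0, sigma=0.1, best_so_far=2.9)
ei_uncertain = expected_improvement(mu=3.0, sigma=1.0, best_so_far=2.9)
print(ei_certain, ei_uncertain)
```

Both candidates are predicted to be slightly worse than the best value seen so far, but the more uncertain one gets a higher score. This is how the acquisition function balances exploiting good regions with exploring unknown ones.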

Code Alert!

So, enough with the theory, let’s get down to business!

This example code is written in Python and TensorFlow. The goal of this hyperparameter optimization task is to obtain the set of hyperparameter values that gives the lowest possible Root Mean Square Error (RMSE) for our deep learning model. We hope this will be straightforward for any first-timer.

First, let us install Scikit-Optimize. You can install it using pip by executing this command.

pip install scikit-optimize

Please note that you will have to make some adjustments to your existing deep learning model code in order to make it work with the optimization.

First, let’s do some necessary imports.

import skopt

from skopt import gp_minimize

from skopt.space import Real, Integer

from skopt.utils import use_named_args

import tensorflow as tf

import numpy as np

import pandas as pd

from math import sqrt

import atexit

from time import time, strftime, localtime

from datetime import timedelta

from sklearn.metrics import mean_squared_error

from skopt.plots import plot_convergence

We will now set the TensorFlow and NumPy seeds, as we want to get reproducible results.

randomState = 46

np.random.seed(randomState)

tf.set_random_seed(randomState)

Shown below are some essential Python global variables that we have declared. Among the variables, we have also declared the hyperparameters that we are hoping to optimize (the 2nd set of variables).

input_size=1

features = 2

column_min_max = [[0, 2000],[0,500000000]]

columns = ['Close', 'Volume']

num_steps = None

lstm_size = None

batch_size = None

init_learning_rate = None

learning_rate_decay = None

init_epoch = None

max_epoch = None

dropout_rate = None

The “input_size” variable defines part of the shape of the prediction. The “features” variable is the number of features in the data set, and the “columns” list holds the header names of the two features. The “column_min_max” variable contains the lower and upper scaling bounds of both features (these were chosen by examining the training and validation splits).

After declaring all these variables it’s finally time to declare the search space for each of the hyperparameters we are hoping to optimize.

lstm_num_steps = Integer(low=2, high=14, name='lstm_num_steps')

size = Integer(low=8, high=200, name='size')

lstm_learning_rate_decay = Real(low=0.7, high=0.99, prior='uniform', name='lstm_learning_rate_decay')

lstm_max_epoch = Integer(low=20, high=200, name='lstm_max_epoch')

lstm_init_epoch = Integer(low=2, high=50, name='lstm_init_epoch')

lstm_batch_size = Integer(low=5, high=100, name='lstm_batch_size')

lstm_dropout_rate = Real(low=0.1, high=0.9, prior='uniform', name='lstm_dropout_rate')

lstm_init_learning_rate = Real(low=1e-4, high=1e-1, prior='log-uniform', name='lstm_init_learning_rate')

If you look closely, you will see that we have declared the prior of ‘lstm_init_learning_rate’ as log-uniform rather than uniform. With a uniform prior, the optimizer searches from 1e-4 (0.0001) to 1e-1 (0.1) on a linear scale, where about 90% of the range lies between 0.01 and 0.1. With a log-uniform prior, the optimizer searches over the exponent, between -4 and -1, so each order of magnitude is covered equally, which makes the process much more efficient. The skopt library advises this when assigning the search space for a learning rate.
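The effect of the two priors can be checked with a small sampling experiment using only the Python standard library. This is a sketch of the idea, not of how skopt samples internally. In theory, roughly 1% of uniform draws and one third of log-uniform draws fall below 1e-3.

```python
import random

rng = random.Random(46)
low, high = 1e-4, 1e-1
n = 10_000

# Uniform prior: draw the learning rate directly.
uniform_samples = [rng.uniform(low, high) for _ in range(n)]

# Log-uniform prior: draw the base-10 exponent uniformly, then exponentiate.
log_uniform_samples = [10 ** rng.uniform(-4, -1) for _ in range(n)]

def share_below(samples, threshold):
    return sum(s < threshold for s in samples) / len(samples)

print("uniform, share below 1e-3:    ", share_below(uniform_samples, 1e-3))
print("log-uniform, share below 1e-3:", share_below(log_uniform_samples, 1e-3))
```

Small learning rates are almost never tried under the uniform prior, while the log-uniform prior gives each decade of the range a similar share of the samples.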

There are three data types with which you can define the search space: Categorical, Real, and Integer. When defining a search space that involves floating-point values, you should go for “Real”, and if it involves integers, go for “Integer”. If your search space involves categorical values, such as different activation functions, then you should go for the “Categorical” type.

We are now going to put down the parameters that we are going to optimize in the ‘dimensions’ list. This list will be passed to the ‘gp_minimize’ function later on. You can see that we have also declared the ‘default_parameters’. These are the default parameter values we have given to each hyperparameter. Remember to type in the default values in the same order as you listed the hyperparameters in the ‘dimensions’ list.

dimensions = [lstm_num_steps, size, lstm_init_epoch, lstm_max_epoch,

              lstm_learning_rate_decay, lstm_batch_size, lstm_dropout_rate, lstm_init_learning_rate]

default_parameters = [2,128,3,30,0.99,64,0.2,0.001]

The most important thing to remember is that the hyperparameters in the “default_parameters” list will be the starting point of your optimization task. The Bayesian Optimizer will use the default parameters that you have declared in the first iteration and depending on the result, the acquisition function will determine which point it wants to explore next.

If you have run the model several times previously and found a decent set of hyperparameter values, you can use them as the default hyperparameter values and start your exploration from there. This may help the algorithm find the lowest RMSE value faster (in fewer iterations). However, keep in mind that this might not always be true. Also, remember that each default value must lie within the search space you have defined for that hyperparameter.
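A quick sanity check along these lines can catch an out-of-range default before the optimization starts. The sketch below is written in plain Python, so the bounds are retyped as (name, low, high) triples instead of being read from the skopt objects, and `out_of_bounds` is a hypothetical helper written for this illustration.

```python
# (name, low, high) triples copied from the search space in this article,
# listed in the same order as the `dimensions` list.
bounds = [
    ("lstm_num_steps", 2, 14),
    ("size", 8, 200),
    ("lstm_init_epoch", 2, 50),
    ("lstm_max_epoch", 20, 200),
    ("lstm_learning_rate_decay", 0.7, 0.99),
    ("lstm_batch_size", 5, 100),
    ("lstm_dropout_rate", 0.1, 0.9),
    ("lstm_init_learning_rate", 1e-4, 1e-1),
]
default_parameters = [2, 128, 3, 30, 0.99, 64, 0.2, 0.001]

def out_of_bounds(bounds, values):
    """Return the names of the values that fall outside their (low, high) range."""
    if len(bounds) != len(values):
        raise ValueError("expected one default value per dimension")
    return [name for (name, low, high), v in zip(bounds, values) if not low <= v <= high]

print(out_of_bounds(bounds, default_parameters))
```

An empty list means every default sits inside its range. Any name that is printed points to a default that needs to be changed.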

What we have done up to now is setting up all the initial work for the hyperparameter optimization task. We will now focus on the implementation of our deep learning model. We will not be discussing the data pre-processing of the model development process as this article only focuses on the hyperparameter optimization task. We will include the GitHub link of the complete implementation at the end of this article.

However, to give you a little bit more context, we divided our data set into three splits for training, validation, and testing. The training set was used to train the model and the validation set was used to do the hyperparameter optimization task. As mentioned before, we are using the Root Mean Square Error (RMSE) to evaluate the model and perform the optimization (minimize RMSE).

The accuracy assessed using the validation split cannot be used to evaluate the model, because hyperparameters selected to minimize the RMSE on the validation split can be overfitted to the validation set during the hyperparameter optimization process. Therefore, it is standard procedure to use a test split that has not been used at any point in the pipeline to measure the accuracy of the final model.
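For time-ordered data such as stock prices, one common way to produce the three splits is to cut the series chronologically, without shuffling. The sketch below illustrates that idea. The 70/15/15 proportions and the `chronological_split` helper are assumptions made for this example, not the split used in our implementation.

```python
def chronological_split(rows, train_frac=0.7, val_frac=0.15):
    """Split time-ordered rows into train, validation, and test parts without shuffling."""
    n = len(rows)
    train_end = int(n * train_frac)
    val_end = train_end + int(n * val_frac)
    return rows[:train_end], rows[train_end:val_end], rows[val_end:]

prices = list(range(100))  # placeholder for time-ordered closing prices
train, val, test = chronological_split(prices)
print(len(train), len(val), len(test))
```

The validation part is what the fitness function scores during the optimization, and the test part is touched only once, after the final hyperparameters have been chosen.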

Shown below is the implementation of our deep learning model:

def setupRNN(inputs, model_dropout_rate):

    cell = tf.contrib.rnn.LSTMCell(lstm_size, state_is_tuple=True, activation=tf.nn.tanh, use_peepholes=True)

    val1, _ = tf.nn.dynamic_rnn(cell, inputs, dtype=tf.float32)

    # Reorder to [time, batch, units] so that the last time step can be gathered.
    val = tf.transpose(val1, [1, 0, 2])

    last = tf.gather(val, int(val.get_shape()[0]) - 1, name="last_lstm_output")

    dropout = tf.layers.dropout(last, rate=model_dropout_rate, training=True, seed=46)

    weight = tf.Variable(tf.truncated_normal([lstm_size, input_size]))

    bias = tf.Variable(tf.constant(0.1, shape=[input_size]))

    prediction = tf.matmul(dropout, weight) + bias

    return prediction

The “setupRNN” function contains our deep learning model. Still, you may not need to understand those details, as Bayesian optimization treats that function as a black box that takes certain hyperparameters as inputs and outputs the prediction. So if you are not interested in what we have inside that function, you may skip the next paragraph.

Our deep learning model contains an LSTM layer, a dropout layer and an output layer. The necessary information required for the model to work needs to be sent to this function (in our case, it was the input and the dropout rate). You can then proceed with implementing your deep learning model inside this function. In our case, we used an LSTM layer to identify the temporal dependencies of our stock data-set.

We then fed the last output of the LSTM to the dropout layer for regularization purposes and obtained the prediction through the output layer. Finally, remember to return this prediction (in a classification task this can be your logit) to the function that will be passed to the Bayesian Optimization (“setupRNN” will be called by this function).

If you are performing a hyperparameter optimization for a machine learning algorithm (using a library like Scikit-Learn), you will not need a separate function to implement your model, as the model itself is already given by the library and you will only be writing code to train it and obtain predictions. Therefore, this code can go inside the function that will be passed to the Bayesian Optimization.

We have now come to the most important section of the hyperparameter optimization task, the ‘fitness’ function.

@use_named_args(dimensions=dimensions)

def fitness(lstm_num_steps, size, lstm_init_epoch, lstm_max_epoch,

            lstm_learning_rate_decay, lstm_batch_size, lstm_dropout_rate, lstm_init_learning_rate):

    global iteration, num_steps, lstm_size, init_epoch, max_epoch, learning_rate_decay, dropout_rate, init_learning_rate, batch_size

    # Copy the chosen values into the global variables used by the model and helper functions.
    num_steps = np.int32(lstm_num_steps)

    lstm_size = np.int32(size)

    batch_size = np.int32(lstm_batch_size)

    learning_rate_decay = np.float32(lstm_learning_rate_decay)

    init_epoch = np.int32(lstm_init_epoch)

    max_epoch = np.int32(lstm_max_epoch)

    dropout_rate = np.float32(lstm_dropout_rate)

    init_learning_rate = np.float32(lstm_init_learning_rate)

    # Start every call with a fresh graph and the same seed.
    tf.reset_default_graph()

    tf.set_random_seed(randomState)

    sess = tf.Session()

    train_X, train_y, val_X, val_y, nonescaled_val_y = pre_process()

    inputs = tf.placeholder(tf.float32, [None, num_steps, features], name="inputs")

    targets = tf.placeholder(tf.float32, [None, input_size], name="targets")

    learning_rate_input = tf.placeholder(tf.float32, None, name="learning_rate")

    model_dropout_rate = tf.placeholder_with_default(0.0, shape=())

    global_step = tf.Variable(0, trainable=False)

    prediction = setupRNN(inputs, model_dropout_rate)

    # The decayed rate is kept separate from the placeholder, so feeding the
    # initial learning rate below does not override the decay schedule.
    model_learning_rate = tf.train.exponential_decay(learning_rate=learning_rate_input, global_step=global_step, decay_rate=learning_rate_decay,

                                                     decay_steps=init_epoch, staircase=False)

    with tf.name_scope('loss'):

        model_loss = tf.losses.mean_squared_error(targets, prediction)

    with tf.name_scope('adam_optimizer'):

        train_step = tf.train.AdamOptimizer(model_learning_rate).minimize(model_loss, global_step=global_step)

    sess.run(tf.global_variables_initializer())

    for epoch_step in range(max_epoch):

        for batch_X, batch_y in generate_batches(train_X, train_y, batch_size):

            train_data_feed = {

                inputs: batch_X,

                targets: batch_y,

                learning_rate_input: init_learning_rate,

                model_dropout_rate: dropout_rate

            }

            sess.run(train_step, train_data_feed)

    # Dropout falls back to its default rate of 0.0 during validation.
    val_data_feed = {

        inputs: val_X,

    }

    vali_pred = sess.run(prediction, val_data_feed)

    vali_pred_vals = rescle(vali_pred)

    vali_pred_vals = np.array(vali_pred_vals)

    vali_pred_vals = vali_pred_vals.flatten()

    vali_pred_vals = vali_pred_vals.tolist()

    vali_nonescaled_y = nonescaled_val_y.flatten()

    vali_nonescaled_y = vali_nonescaled_y.tolist()

    val_error = sqrt(mean_squared_error(vali_nonescaled_y, vali_pred_vals))

    return val_error

As shown above, we are passing the hyperparameter values to a function named “fitness.” The “fitness” function will be passed to the Bayesian hyperparameter optimization process (gp_minimize). Note that in the first iteration, the values passed to this function will be the default values that you defined, and from there onward Bayesian Optimization will choose the hyperparameter values on its own. We then assign the chosen values to the Python global variables we declared at the beginning so that we will be able to use the latest chosen hyperparameter values outside the fitness function.

We then come to a rather critical point in our optimization task. If you have used TensorFlow prior to this article, you would know that TensorFlow operates by creating a computational graph for any kind of deep learning model that you make.

During the hyperparameter optimization process, in each iteration, we will be resetting the existing graph and constructing a new one. This process is done to minimize the memory taken for the graph and prevent the graphs from stacking on top of each other. Immediately after resetting the graph you will have to set the TensorFlow random seed in order to obtain reproducible results. After the above process, we can finally declare the TensorFlow session.

After this point, you can start adding code responsible for training and validating your deep learning model as you normally would. This section is not really related to the optimization process but the code after this point will start utilizing the hyperparameter values chosen by the Bayesian Optimization.

The main point to remember here is to return the final metric value (in this case the RMSE value) obtained for the validation split. This value will be returned to the Bayesian Optimization process and will be used when deciding the next set of hyperparameters that it wants to explore.
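For reference, the metric itself is simple to compute. The sketch below is a plain-Python version of the RMSE, written for illustration only: the `rmse` helper and the sample prices are made up, while our fitness function uses `mean_squared_error` from Scikit-Learn together with `sqrt`.

```python
from math import sqrt

def rmse(y_true, y_pred):
    """Root mean square error between two equally long sequences."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical closing prices and predictions.
actual = [100.0, 102.0, 101.0, 105.0]
predicted = [101.0, 101.0, 103.0, 104.0]
print(rmse(actual, predicted))
```

Whatever metric you use, the fitness function should return it as a single number so the optimizer can compare one set of hyperparameters with another.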

Note: if you are dealing with a classification problem, you would want to return your accuracy as a negative value (e.g., -96), because even though a higher accuracy means a better model, the Bayesian optimizer always tries to minimize the value that is returned to it.
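The sign flip can be sketched as follows. The labels and predictions are hypothetical, and `classification_objective` is an illustrative name standing in for the function you would hand to the optimizer.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def classification_objective(y_true, y_pred):
    # A minimizer looks for the lowest value, so return the negated accuracy.
    return -accuracy(y_true, y_pred)

labels = [1, 0, 1, 1, 0]
model_a = [1, 0, 1, 1, 0]  # hypothetical predictions, 5 of 5 correct
model_b = [1, 1, 0, 1, 0]  # hypothetical predictions, 3 of 5 correct

scores = {
    "model_a": classification_objective(labels, model_a),
    "model_b": classification_objective(labels, model_b),
}
best = min(scores, key=scores.get)
print(scores, best)
```

Taking the minimum of the negated accuracies selects the model with the highest accuracy, which is the behavior we want from a minimizer.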

Let us now put down the execution point for this whole process, the “main” function. Inside it, we call the “gp_minimize” function and pass it several essential parameters.

if __name__ == '__main__':

    start = time()

    search_result = gp_minimize(func=fitness,

                                dimensions=dimensions,

                                acq_func='EI', # Expected Improvement.

                                n_calls=11,

                                x0=default_parameters,

                                random_state=46)

    print(search_result.x)

    print(search_result.fun)

    plot = plot_convergence(search_result, yscale="log")

    atexit.register(endlog)

    logger("Start Program")

The “func” parameter is the function that the Bayesian optimizer will minimize (here, the fitness function). The “dimensions” parameter is the set of hyperparameters that you are hoping to optimize, and “acq_func” is the acquisition function, which helps decide the next set of hyperparameter values to try. gp_minimize supports four acquisition functions:

- LCB: lower confidence bound

- EI: expected improvement

- PI: probability of improvement

- gp_hedge: probabilistically choose one of the above three acquisition functions at every iteration

The above information was extracted from the documentation. Each of these has its own advantages, but if you are a beginner to Bayesian Optimization, try using “EI” or “gp_hedge”: “EI” is the most widely used acquisition function, and “gp_hedge” probabilistically chooses one of the acquisition functions listed above, so you do not have to worry too much about the choice.

Keep in mind that each acquisition function may have other parameters that affect its behavior and that you might want to change. Please refer to the parameter list in the documentation for this.
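As an example of such a parameter, the lower confidence bound is usually written as the predicted mean minus a multiple of the predicted standard deviation. In gp_minimize this multiplier is exposed as “kappa”, and “xi” plays a comparable role for EI and PI. The sketch below uses the textbook formula with made-up predictions and is not skopt's own code.

```python
def lower_confidence_bound(mu, sigma, kappa):
    """LCB for minimization: lower is more attractive to evaluate next."""
    return mu - kappa * sigma

# Hypothetical posterior predictions (mean, standard deviation) for two candidates.
candidates = {
    "well_explored": (2.8, 0.1),
    "uncertain": (3.0, 0.5),
}

def pick(kappa):
    return min(candidates, key=lambda name: lower_confidence_bound(*candidates[name], kappa))

print(pick(kappa=0.1))  # small kappa favours the lower predicted mean
print(pick(kappa=2.0))  # large kappa favours the more uncertain candidate
```

A small multiplier makes the search exploit what the model already believes, while a large one pushes it toward regions it knows little about.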

Back to explaining the rest of the parameters: the “n_calls” parameter is the number of times you want to run the fitness function. The optimization task will start with the hyperparameter values defined by “x0”, the default hyperparameter values. Finally, we set the random state of the hyperparameter optimizer, as we need reproducible results.

Now when you run the gp_minimize function, the flow of events will be:

The fitness function will first run with the parameters passed to x0. The LSTM will be trained for the specified number of epochs, and the validation input will be run to get the RMSE value for its predictions. Then, depending on that value, the Bayesian optimizer will decide which set of hyperparameter values it wants to explore next, with the help of the acquisition function.

In the 2nd iteration, the fitness function will run with the hyperparameter values that the Bayesian optimization has derived, and the same process will repeat until it has iterated “n_calls” times. When the complete process comes to an end, the result object returned by Scikit-Optimize is assigned to the “search_result” variable.
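The shape of this loop can be sketched without any optimization library. In the sketch below, `propose_next` is a deliberately naive placeholder that returns a random point. A real Bayesian optimizer would fit a surrogate model to the history and use the acquisition function at that step. All names and the toy objective are hypothetical.

```python
import random

def toy_objective(params):
    # Hypothetical stand-in for the fitness function; minimum at x = 3.
    (x,) = params
    return (x - 3.0) ** 2

def propose_next(history, rng):
    # Placeholder proposal rule: a random point. A Bayesian optimizer would
    # instead fit a surrogate model to `history` and maximize an acquisition function.
    return [rng.uniform(0.0, 10.0)]

def minimize_sketch(func, x0, n_calls, seed=46):
    rng = random.Random(seed)
    history = []      # (params, value) pairs, one per call
    candidate = x0    # the first call uses the default parameters
    for _ in range(n_calls):
        value = func(candidate)
        history.append((candidate, value))
        candidate = propose_next(history, rng)
    best_params, best_value = min(history, key=lambda item: item[1])
    return best_params, best_value, history

best_params, best_value, history = minimize_sketch(toy_objective, x0=[8.0], n_calls=11)
print(len(history), history[0], best_params, best_value)
```

The first entry of the history is always the evaluation of x0, and the returned best value is simply the lowest value seen across all calls.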

We can use this object to retrieve useful information as stated in the documentation.

- x [list]: location of the minimum.

- fun [float]: function value at the minimum.

- models: surrogate models used for each iteration.

- x_iters [list of lists]: location of function evaluation for each iteration.

- func_vals [array]: function value for each iteration.

- space [Space]: the optimization space.

- specs [dict]: the call specifications.

- rng [RandomState instance]: State of the random state at the end of minimization.

“search_result.x” gives us the optimal hyperparameter values, and “search_result.fun” gives the corresponding RMSE value on the validation set (the lowest RMSE value obtained for the validation set).

Shown below are the optimal hyperparameter values that we obtained for our model and the lowest RMSE value of the validation set. If you are finding it hard to figure out the order in which the hyperparameter values are being listed when using “search_result.x”, it is in the same order as you specified your hyperparameters in the “dimensions” list.

Hyperparameter Values:

- lstm_num_steps: 6

- lstm_size: 171

- lstm_init_epoch: 3

- lstm_max_epoch: 58

- lstm_learning_rate_decay: 0.7518394019565194

- lstm_batch_size: 24

- lstm_dropout_rate: 0.21830825193089087

- lstm_init_learning_rate: 0.0006401363567813549

Lowest RMSE: 2.73755355221523
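Because the values come back as a plain list, pairing them with their names makes the result easier to read. The sketch below retypes the names in `dimensions` order and uses the values reported above in place of a live “search_result.x”.

```python
dimension_names = [
    "lstm_num_steps", "size", "lstm_init_epoch", "lstm_max_epoch",
    "lstm_learning_rate_decay", "lstm_batch_size", "lstm_dropout_rate",
    "lstm_init_learning_rate",
]

# Values reported above, arranged in `dimensions` order as `search_result.x` would be.
best_x = [6, 171, 3, 58, 0.7518394019565194, 24, 0.21830825193089087,
          0.0006401363567813549]

best_hyperparameters = dict(zip(dimension_names, best_x))
for name, value in best_hyperparameters.items():
    print(f"{name}: {value}")
```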

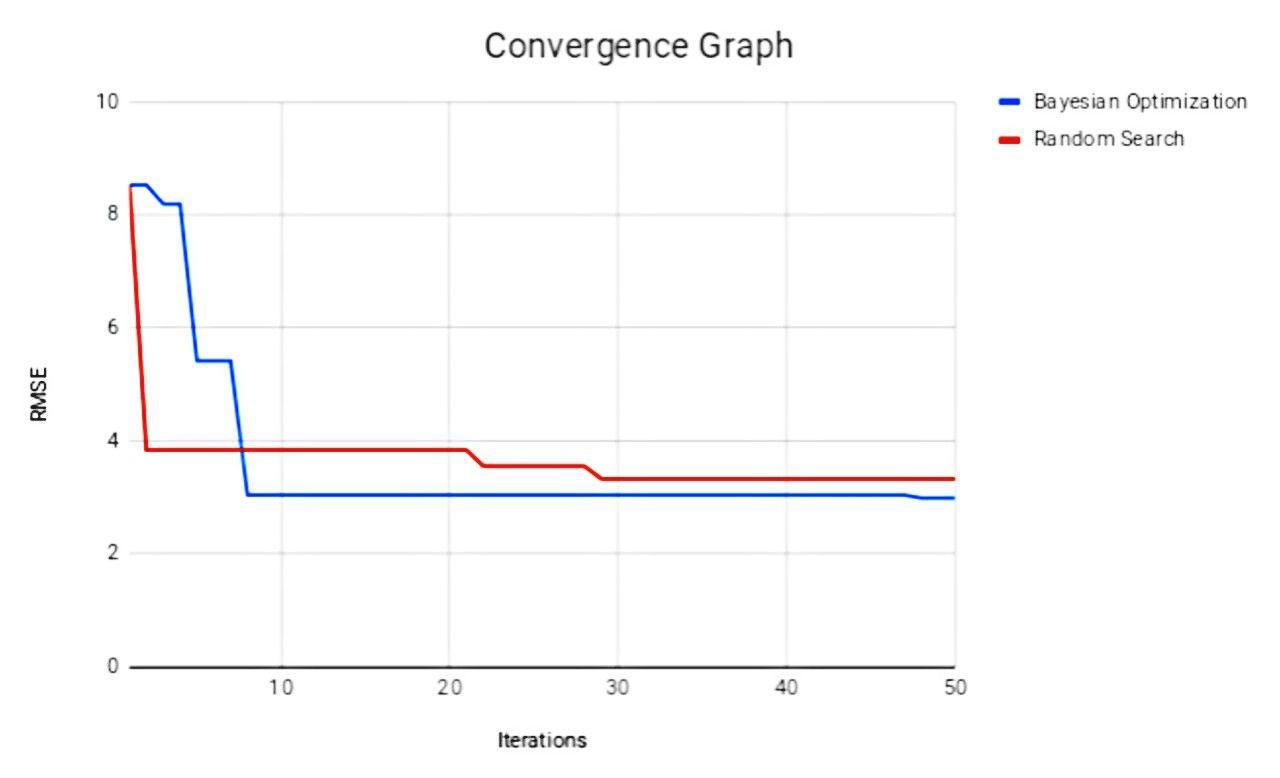

Convergence Graph

The hyperparameters that produced the lowest point of the Bayesian Optimization curve in this graph are what we get as the optimal set of hyperparameter values.

The graph compares the lowest RMSE values recorded at each iteration (50 iterations) for Bayesian Optimization and Random Search. We can see that Bayesian Optimization converged to a lower RMSE than Random Search. However, in the beginning, Random Search found a better minimum faster than the Bayesian optimizer. This can be due to the random sampling that is the nature of Random Search.
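The “lowest value recorded so far” curve in a convergence graph is a running minimum over the objective values in evaluation order. The sketch below computes it for a made-up sequence of the kind stored in “search_result.func_vals”.

```python
from itertools import accumulate

# Hypothetical objective values in the order they were evaluated
# (the kind of sequence stored in `search_result.func_vals`).
func_vals = [5.2, 4.1, 4.8, 3.3, 3.9, 2.7, 3.0]

# Best value seen up to and including each call.
best_so_far = list(accumulate(func_vals, min))
print(best_so_far)
```

The curve can only stay flat or go down, which is why a convergence graph never rises even when an individual evaluation is worse than the previous one.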

We have finally come to the end of this article, so to conclude, we hope this article made your deep learning model building task easier by showing you a better way of finding the optimal set of hyperparameters. Here’s to no more stressing over hyperparameter optimization. Happy coding, fellow geeks!
