How to Write Simple, Powerful Script Data Sources for BIRT Reports

Learn how to write simple, powerful script data sources for BIRT reports.

Join the DZone community and get the full member experience.

Join For Free1. preface: jvm-based sql functions and stored procedures

some databases, such as mysql, don’t have analytic functions. some others, such as vertica, don’t support stored procedures. they turn to external python, r script, or other languages to deal with complicated data computations. but the scripting languages and java, the mainstream programming language, are integration-unfriendly. often, a lengthy java script that tries to replace sql functions or stored procedures aims at achieving a certain computing goal and is unreusable.

it’s not easy to implement complicated logics even with analytic functions. here’s a common computing task: find the first n customers whose sales account for half of the total sum and sort them by amount in descending order. oracle implements it this way:

|

with a as

(selectcustom,salesamount,row_number() over (order by salesamount) ranking from sales) select custom,salesamount from (select custom,salesamount,sum(salesamount) over (order by ranking) accumulativeamount from a) where accumulativeamount>(select sum(salesamount)/2 from sales) order by salesamount desc |

the oracle script sorts records by sales amount in ascending order and then finds the customers whose sales amount to half of the total sum in an opposite direction according to the condition that the accumulated amount is greater than half of the total sum. in order to avoid window function’s mistake in handling the same sales amounts when calculating the accumulated value, we calculate the sales amounts rankings in the first subquery.

esproc script:

| a | b | |

|---|---|---|

| 1 | =connect("verticalink") | /connect to vertica database |

| 2 | =a1.query("select * from sales").sort(salesamount:-1) | /get the sales records and sort them by sales amount in descending order |

| 3 | =a2.cumulate(salesamount) | /calculate a sequence of accumulated values; the function is a replacement of database window function |

| 4 | =a3.m(-1)/2 | /calculate half of total sales amount |

| 5 | =a3.pselect(~>=a4) | /find the position in the accumulated value sequence where half of the total sales amount falls |

| 6 | =a2(to(a5)) | /get the record where half of total sales amount falls and records before it |

| 7 | >a1.close() | /close database connection |

| 8 | return a6 | /return a6’s result |

instead of the complicated nested sql plus window function, esproc uses concise syntax to implement the computing logic. being applicable to all databases (data sources), the code is more universal.

esproc is driven by a jvm-based scripting language intended to handle structured data. as sql functions and stored procedures, it can be integrated with a java application to create migratable, versatile, and database-independent computing logics. such a computing logic runs as a middle layer and is separated from the data logic run in the database (data source) layer. the separation makes the overall application more scalable, more flexible, and more maintainable.

2. application scenario: report data preparation

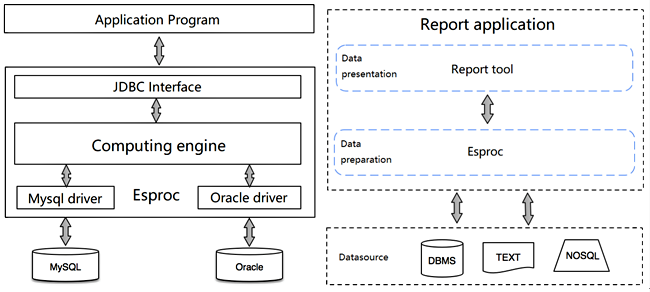

2.1 reporting architecture

an esproc script embedded into the reporting layer is like a local logical database that doesn’t need deploying a server specifically. it stands as a data preparation layer between the reporting tool and data source for performing various complicated computations.

2.2 integration

let’s look at how to integrate esproc as the data preparation layer (take vertica and birt as the example).

i. integration of basic jars

esproc jdbc has three basic jars, which are situated in [installation directory]\esproc\lib :

dm.jar esproc computing engine and jdbc driver

jdom.jar parse configuration files

icu4j_3_4_5.jar handle internationalizationbesides, there are jars for achieving specific functionalities. to use databases as the data sources in esproc jdbc, their driver jars are required. as vertica is the data source here, the corresponding jars are needed (take vertica 9.1.0 as an example).

vertica-jdbc-9.1.0-0.jar download it from vertica website.

those jars should be copied and placed under birt’s [installation directory]\plugins\org.eclipse.birt.report.data.oda.jdbc_4.6.0.v20160607212 .

ii. deploy the configuration file

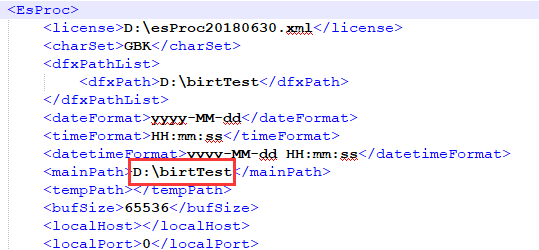

the configuration file, raqsoftconfig.xml , contains license information, script file path, data source connection configuration information, and etc.

it is located in [esproc installation directory]\esproc\config and needs to be copied and placed under birt designer class path [installation directory]\plugins\org.eclipse.birt.report.data.oda.jdbc_4.6.0.v20160607212 .

the file’s name must not be changed.

2.3 birt development environment

1. copy all the required jars under birt’s web-inf\lib;

2. copy raqsoftconfig.xml under birt’s web-inf\classes.

2.3.1 example 1: normal call

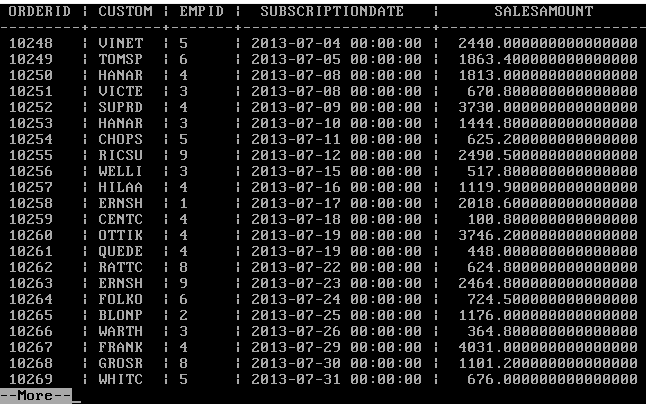

1. below is the

sales

table in the vertica database. (the table contains data of the years 2013, 2014, and 2015 and queried via vsql)

2. create an esproc script

(1)put vertica jdbc driver jars into esproc designer path

download jdbc driver jar (

vertica-jdbc-9.1.0-0.jar

, for instance) from the vertica website, and put it under [

esproc installation directory]\common\jdbc.

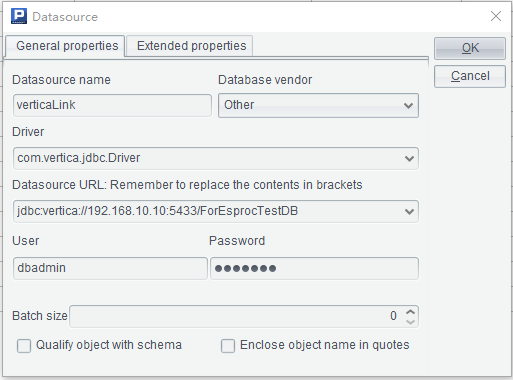

(2) add vertica data source

open esproc designer, click

tool -> datasource

to add the vertica data source in jdbc way.

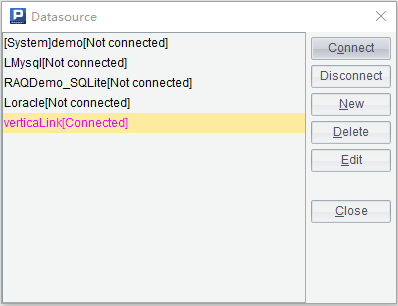

click ok to save the configuration and then connect to the data source.

the data source is successfully connected once the data source name turns pink.

(3) create an algorithm script (saved as verticaexternalprocedures.dfx ) through file – >new .

| a | b | |

|---|---|---|

| 1 | =connect("verticalink") | /connect to vertica database |

| 2 | =a1.query("select * from sales").sort(salesamount:-1) | /get the sales records and sort them by sales amount in descending order |

| 3 | =a2.cumulate(salesamount) | /calculate a sequence of accumulated values; the function is a replacement of database window function |

| 4 | =a3.m(-1)/2 | /calculate half of total sales amount |

| 5 | =a3.pselect(~>=a4) | /find the position in the accumulated value sequence where half of the total sales amount falls |

| 6 | =a2(to(a5)) | /get the record where half of total sales amount falls and records before it |

| 7 | >a1.close() | /close database connection |

| 8 | return a6 | /return a6’s result to birt as the report source data set |

3. deploy the script

put the script file under the script file main directory configured in

raqsoftconfig.xml

.

4. configure data source connection: verticalink , in raqsoftconfig.xml

<db name="verticalink">

<property name="url" value="jdbc:vertica://192.168.10.10:5433/foresproctestdb"/>

<property name="driver" value="com.vertica.jdbc.driver"/>

<property name="type" value="0"/>

<property name="user" value="dbadmin"/>

<property name="password" value="runqian"/>

<property name="batchsize" value="0"/>

<property name="autoconnect" value="false"/>

<property name="useschema" value="false"/>

<property name="addtilde" value="false"/>

<property name="needtranscontent" value="false"/>

<property name="needtranssentence" value="false"/>

<property name="casesentence" value="false"/>

</db>

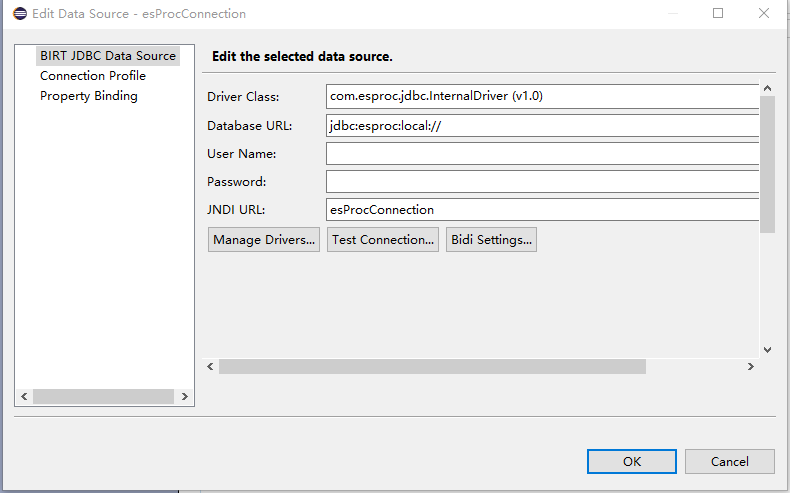

5. create a new report birt report designer and add esproc data source:

esprocconnection

.

the driver class is com.esproc.jdbc.internaldriver(v1.0) , which needs dm.jar and other jars. database url is jdbc:esproc:local://

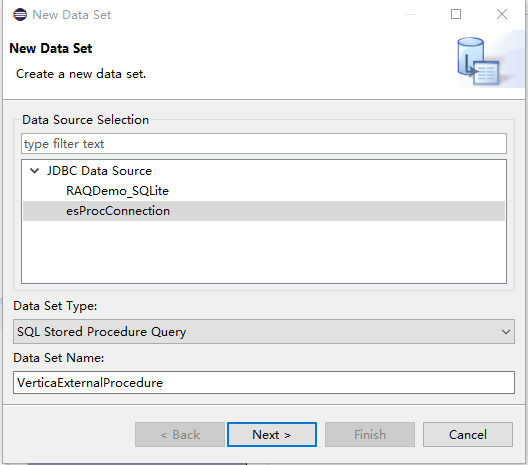

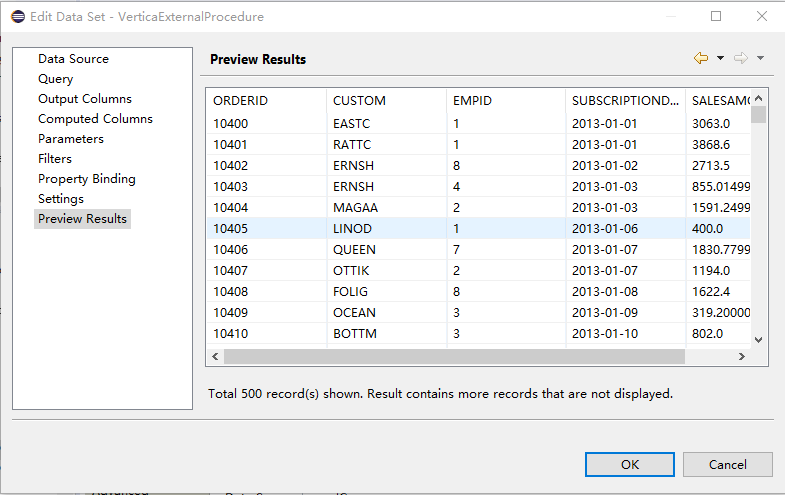

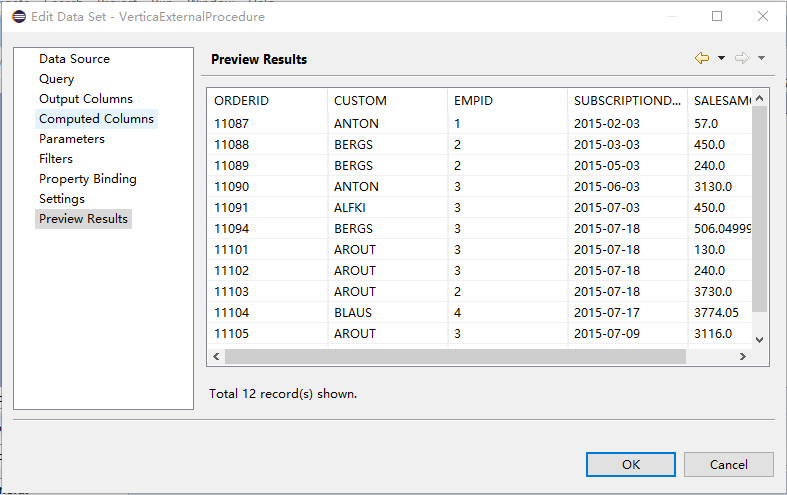

6. birt calls esproc data set (vertica’s external stored procedure)

create a new dataset; select the esproc data source (

esprocconnection

); the data set type is

sql stored procedure query

.

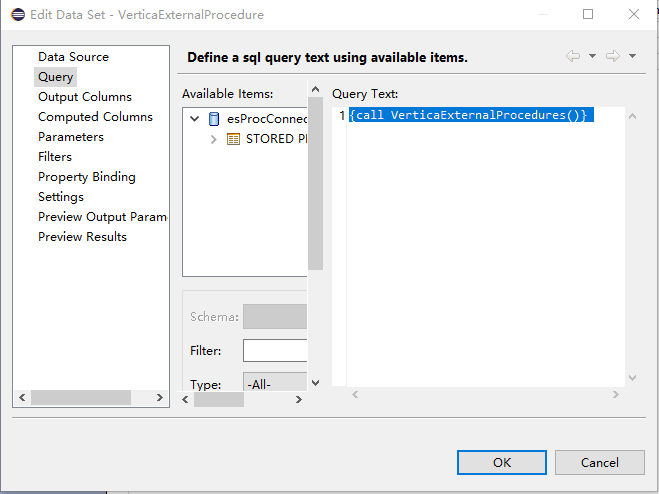

next, enter

{call verticaexternalprocedures()}

under

query text

.

verticaexternalprocedures

is esproc script file name.

now we can preview the computing result with

preview results

.

that’s the process of how to use esproc script as vertica’s external stored procedure to prepare data source for a report.

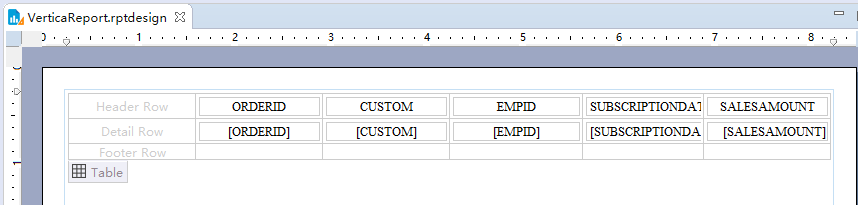

7. web presentation

take a grid report as an example. below is the report design:

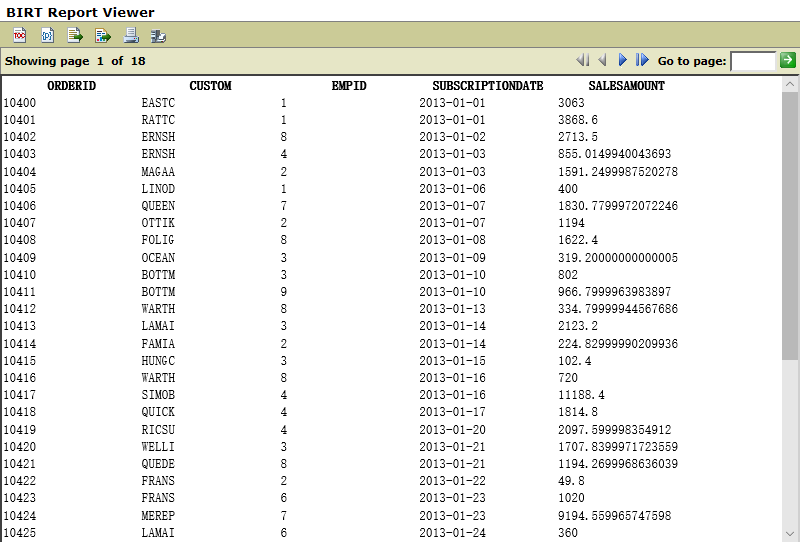

publish preview:

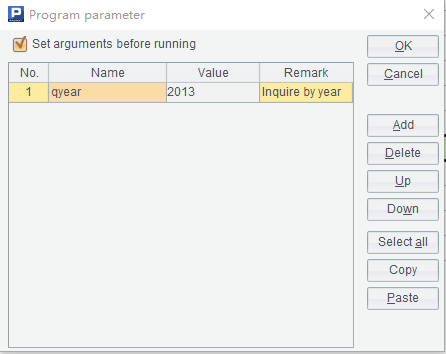

2.3.2 example 2: parameter-based call

we change the above computing task a bit. find the first n customers whose sales account for half of the total sum by year and sort them by amount in descending order. the task requires a parameter filtering.

1. add a year parameter for filtering.

open esproc designer and click

program –> parameter –> add

to add parameter

qyear

(the name can be different from a report parameter).

modified script:

| a | b | |

|---|---|---|

| 1 | =connect("verticalink") | /connect to vertica database |

| 2 | =a1.query("select * from sales where year(subscriptiondate)=?",qyear).sort(salesamount:-1) | /qyear is the parameter receiving a typed year to find the corresponding sales records and sort them by sales amount in descending order |

| 3 | =a2.cumulate(salesamount) | /calculate a sequence of accumulated values; the function is a replacement of database window function |

| 4 | =a3.m(-1)/2 | /calculate half of total sales amount |

| 5 | =a3.pselect(~>=a4) | /find the position in the accumulated value sequence where half of the total sales amount falls |

| 6 | =a2(to(a5)) | /get the record where half of total sales amount falls and records before it |

| 7 | >a1.close() | /close database connection |

| 8 | return a6 | /return a6’s result to birt as the report source data set |

a2 performs conditional filtering.

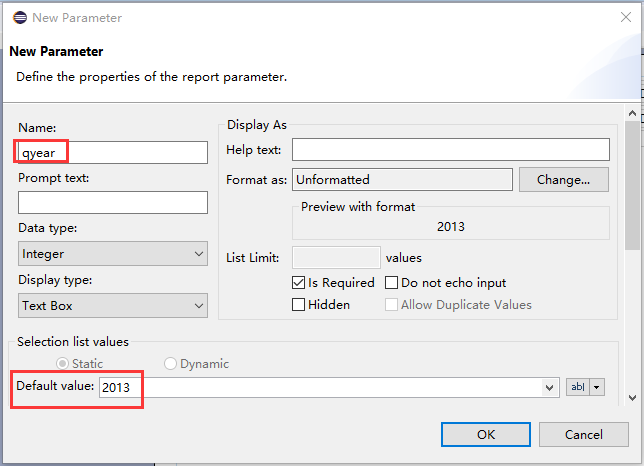

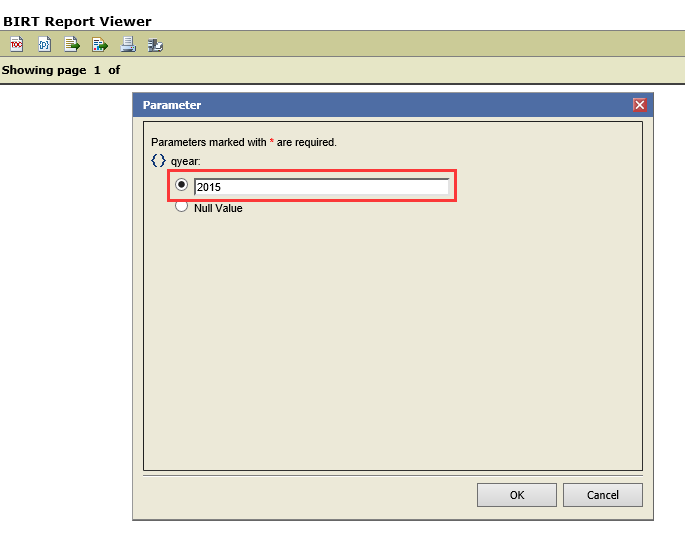

2. define a year parameter for the report

define an input parameter named

qyear

for the report.

open the report, click

data explorer –> report parameter –> new parameter

to add the parameter.

the second red box is the default value of parameter qyear .

3. add a data set parameter and link it with the report parameter

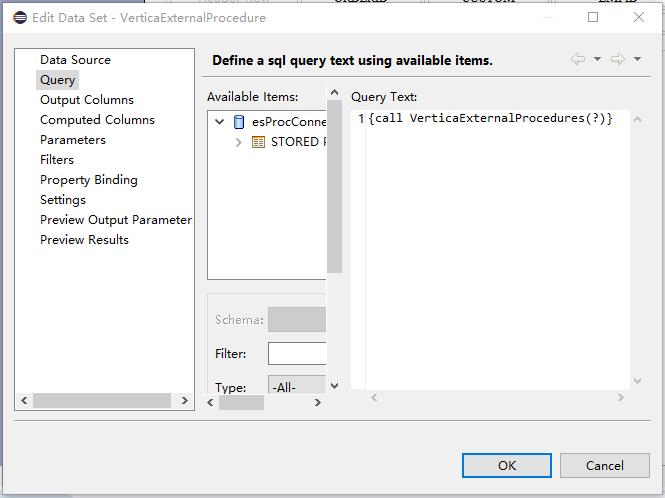

create data set

verticaexternalprocedures

.

there is a bit different about the

query text

, which is

{call verticaexternalprocedures(?)}

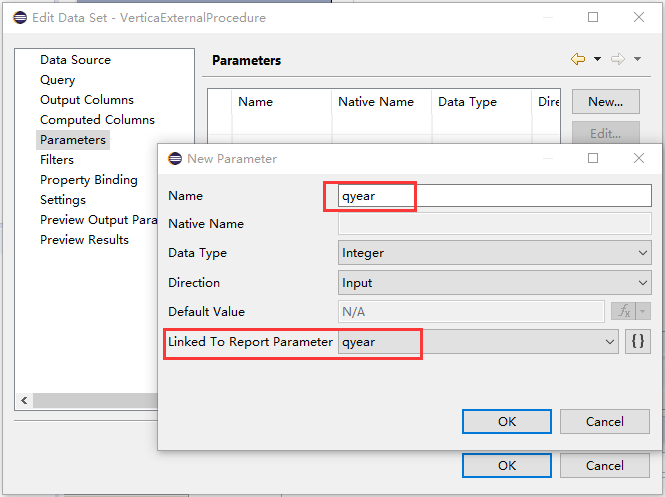

. the question mark (?) is a placeholder for an input year parameter. under

parameters

, add data set parameter

qyear

and link it with report parameter

qyear

.

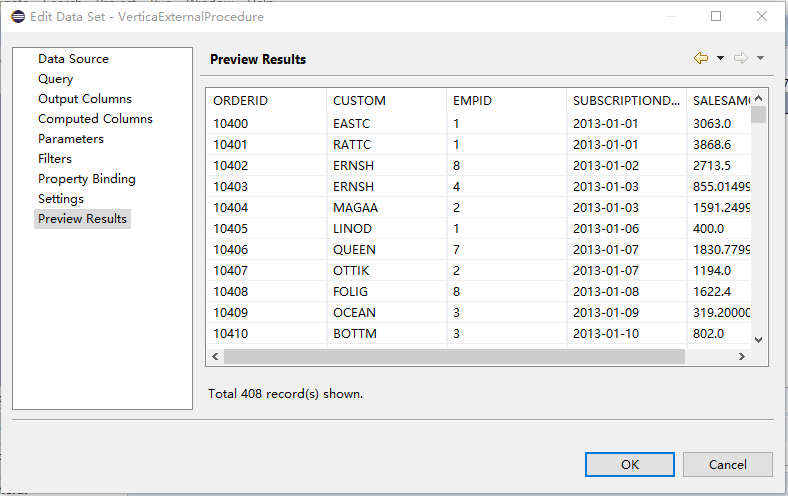

under preview results, query data of the year 2013 according to the default value of qyear .

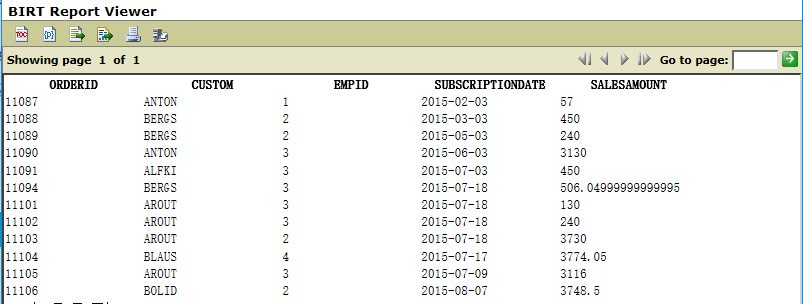

after passing the value “2015” to the parameter:

4. web presentation

query data of the year 2015:

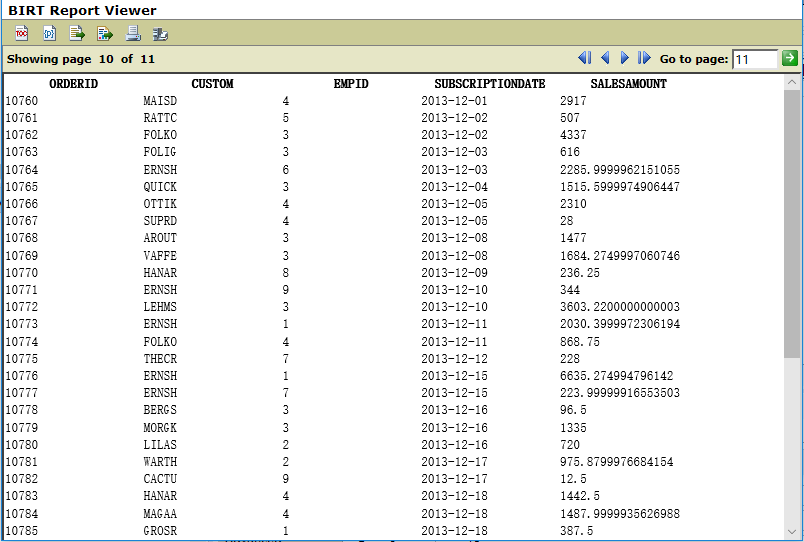

after modifying the url or passing “2013” to

qyear

:

let me know your thoughts in the comments.

Opinions expressed by DZone contributors are their own.

Comments