Identify and Resolve Stragglers in Your Spark Application

Know about potential stragglers in your Spark application and how they affect the overall application performance.

Stragglers are detrimental to the overall performance of Spark applications and waste resources on the underlying cluster. It is therefore important to identify potential stragglers in your Spark job, find the root cause behind them, and apply fixes or preventive measures.

What Is a Straggler in a Spark Application?

A straggler is a very slow task belonging to a particular stage of a Spark application. (Every stage in Spark is composed of one or more tasks, each one computing a single partition out of the total partitions designated for the stage.) A straggler task takes exceptionally long to complete compared to the median or average time taken by the other tasks in the same stage. A Spark job can have multiple stragglers, either in the same stage or across multiple stages.

How Stragglers Hurt

Even a single straggler considerably delays the execution of its stage, because a stage finishes only when its slowest task finishes. Delayed execution of one or more stages, in turn, has a domino effect on the overall execution performance of the Spark application.

Further, stragglers can waste cluster resources if static resources are configured for the job. Until the stragglers finish, the resources already freed by the other tasks of the stage cannot be put to use by the later stages that depend on the straggler-affected stage.

How to Identify Them

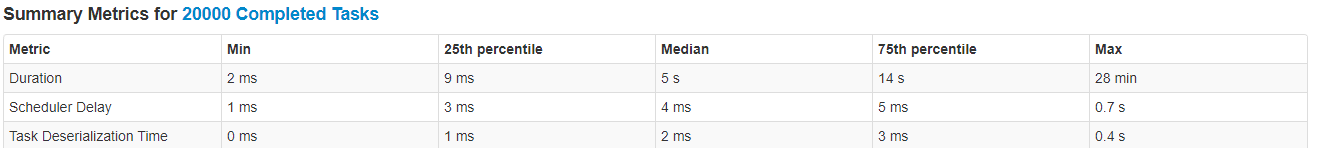

You can sense the presence of stragglers when a stage progress bar (available in the Spark UI) gets stuck near the end. To confirm, open the stage-specific details page, which lists summary metrics for the completed tasks of the stage. If the metrics show that the ‘Max’ duration among the completed tasks is exceptionally higher than the ‘Median’ or ‘75th percentile’ value, this indicates possible stragglers in the corresponding job. Here is an example showing a Task metrics snapshot of a straggler-afflicted stage of a job:

In the above snapshot, it is clearly evident that the stage is suffering from stragglers, as the ‘Max’ task duration, which is 28 min, is exceptionally higher than the ‘75th percentile’ value, which is only a meager 14 seconds. Further, the individual stragglers can be identified from the detailed task listing on the stage-specific page. Tasks whose execution duration lies far above the ‘75th percentile’ value can be classified as stragglers for the stage.
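The same check can be sketched offline on a list of task durations. The following is a minimal illustration in plain Python, not something the Spark UI provides: the function name, the sample durations, and the cutoff of 10 times the median are all assumptions chosen for the example.

```python
import statistics

def summarize_and_flag(durations_s, multiplier=10.0):
    """Summarize task durations (seconds) and flag tasks far above the median.

    `multiplier` is an arbitrary illustrative cutoff, not a Spark setting.
    """
    ordered = sorted(durations_s)
    median = statistics.median(ordered)
    # quantiles(n=4) returns the three quartile cut points; index 2 is the 75th percentile
    p75 = statistics.quantiles(ordered, n=4, method="inclusive")[2]
    summary = {"median": median, "p75": p75, "max": ordered[-1]}
    threshold = multiplier * median
    stragglers = [(i, d) for i, d in enumerate(durations_s) if d > threshold]
    return summary, stragglers

# Hypothetical durations: most tasks take 10-14 s, one takes 28 min
durations = [10, 11, 12, 12, 13, 13, 14, 14, 28 * 60]
summary, stragglers = summarize_and_flag(durations)
print(summary)
print(stragglers)  # list of (task index, duration) pairs for flagged tasks
```

A large gap between `max` and `p75` in the summary mirrors what the Task metrics table shows for a straggler-afflicted stage.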

What Causes Stragglers?

Here are some of the common causes of stragglers:

Skewed partitioning: This is the most common cause of stragglers. Skewed partitioning results in partitions that differ widely in the amount of data mapped to each of them. If the computation time of a task is proportional to the size of its partition, skewed partitions result in a skewed distribution of computation time among the tasks, thereby giving birth to stragglers.

Skewed partitioning can arise in multiple scenarios, the most common being the repartitioning of a Dataset or RDD on a partitioning key that itself has a skewed distribution. Skewed partitioning can also result when a bunch of unsplittable files of widely varying sizes are read into a Dataset/RDD.
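One way to spot a skewed partitioning key before repartitioning on it is to look at how records are distributed over key values. The sketch below does this in plain Python on a hypothetical list of keys; the function name and the sample data are assumptions made for illustration.

```python
from collections import Counter

def key_skew_report(keys, top_n=3):
    """Return the top_n most frequent keys with the fraction of records each holds."""
    counts = Counter(keys)
    total = sum(counts.values())
    return [(key, count / total) for key, count in counts.most_common(top_n)]

# Hypothetical dataset: one "hot" customer accounts for 90% of the records
keys = ["customer_hot"] * 9000 + [f"customer_{i}" for i in range(1000)]
report = key_skew_report(keys)
print(report[0])
```

If a single key holds a large share of the records, every record with that key lands in the same partition after repartitioning, and the task for that partition is a likely straggler.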

Skewed Computation: This is another widespread cause of stragglers. If you are doing custom computation on partition data, the computation may get skewed among the partitions owing to certain attributes/properties of the data, even when the data itself is fairly distributed among the partitions. Once the computation gets skewed, it can again result in stragglers.

Imbalanced/skewed computation can arise when the work done by the computing routine of a partition is directly proportional to certain properties of the data records. An example would be a Dataset that holds a collection of FileWrapper objects, where in one particular partition all the FileWrapper objects refer to relatively big files compared to the FileWrapper objects residing in the other partitions. That particular partition could give rise to a straggler task.

Slow disk reads/writes: Stragglers can arise in stages involving disk reads/writes when some of the corresponding tasks are hosted on a server that is suffering from slow disk reads/writes. A stage requires disk reads/writes when it executes shuffle read/write operations or saves an intermediate RDD/Dataset to disk.

Higher Number of Cores per Executor: Provisioning a higher number of cores per executor, in ranges such as 4~8, can also sometimes lead to stragglers. This is because compute/resource-heavy tasks may then execute simultaneously on all the cores of an executor. Such tasks contend for common resources within the executor, such as memory, which can lead to performance-deteriorating behavior such as heavy garbage collection, which in turn can give rise to stragglers in the stage.

Possible Remedies

Fair Partitioning: You need to ensure that the data is fairly distributed among all partitions if the computation intensity is directly related to the size of the data contained in a partition. If there is flexibility in choosing the repartitioning key, choose a record attribute that has high cardinality and is evenly distributed among the data records. If there is no such flexibility and the repartitioning key distribution is highly skewed, you can try salting techniques.
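The effect of salting can be illustrated without a cluster. The sketch below is a plain-Python model under stated assumptions: `zlib.crc32` stands in for the partitioner's hash function, and the key names, the number of salts (32), and the partition count (8) are all hypothetical.

```python
import random
import zlib
from collections import Counter

def partition_of(key, num_partitions):
    # crc32 is a deterministic stand-in for a hash partitioner (an assumption)
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def partition_sizes(keys, num_partitions):
    counts = Counter(partition_of(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]

def salted(key, num_salts, rng):
    # Append a random suffix so one hot key becomes num_salts distinct keys
    return f"{key}#{rng.randrange(num_salts)}"

rng = random.Random(42)  # fixed seed so the sketch is repeatable
keys = ["customer_hot"] * 9000 + [f"customer_{i}" for i in range(1000)]

plain = partition_sizes(keys, 8)
salted_sizes = partition_sizes([salted(k, 32, rng) for k in keys], 8)
print("largest partition without salt:", max(plain))
print("largest partition with salt:   ", max(salted_sizes))
```

Salting changes the key, so any per-key result has to be recombined afterwards, for example by aggregating on the salted key first and then again on the original key.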

Fair Computation: You also need to ensure that computation is evenly distributed among partitions in cases where the computation intensity depends not on the size of the data in a partition but on certain attribute(s) or field(s) of the data records.
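For the FileWrapper example above, one possible approach is to assign records to partitions by their expected cost (here, file size) rather than by count. The sketch below uses a greedy "heaviest item to the least-loaded partition" heuristic in plain Python. It is one illustrative option, and the file names and sizes are hypothetical.

```python
import heapq

def balance_by_weight(items, num_partitions):
    """Assign (name, weight) items, heaviest first, to the least-loaded partition."""
    heap = [(0, p) for p in range(num_partitions)]  # (current load, partition index)
    heapq.heapify(heap)
    assignment = [[] for _ in range(num_partitions)]
    for name, weight in sorted(items, key=lambda item: item[1], reverse=True):
        load, p = heapq.heappop(heap)  # least-loaded partition so far
        assignment[p].append(name)
        heapq.heappush(heap, (load + weight, p))
    loads = [0] * num_partitions
    for load, p in heap:
        loads[p] = load
    return assignment, loads

# Hypothetical file wrappers: (path, size in MB)
files = [("a", 900), ("b", 800), ("c", 100), ("d", 100), ("e", 50), ("f", 50)]
assignment, loads = balance_by_weight(files, 2)
print(assignment)
print(loads)
```

Splitting these six files by count alone (three per partition, in order) would put 1800 MB in one partition and 200 MB in the other; weighting by size evens out the work.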

Increased Number of Partitions: Increasing the number of partitions of a stage can reduce the magnitude of the performance penalty inflicted by stragglers. However, this helps only to a certain extent. (To learn more about Spark partitioning tuning, you could refer to “Guide to Spark Partitioning: Spark Partitioning Explained in Depth”.)

Turning on the Spark speculation feature: Spark's speculation feature can optionally be turned on to deal with stragglers. It actively identifies slow-running tasks and launches a speculative copy of each on another executor; whichever attempt finishes first is used, and the other attempt is killed. It helps with stragglers that arise due to a resource crunch on executors or due to slow disk/network reads/writes on the hosting server. By default, the feature is turned off; you can enable it by setting the Spark config ‘spark.speculation’ to true. The speculation feature also provides other knobs (as Spark configs) to fine-tune how stragglers are identified and re-launched. Here is a description of these knobs:

a) spark.speculation.interval (default: 100ms): How often Spark will check for tasks to speculate. You usually do not need to change it.

b) spark.speculation.multiplier (default: 1.5): How many times slower a task is than the median to be considered for speculation. This is used as a criterion for identifying the stragglers and can be tuned based on Spark application behavior.

c) spark.speculation.quantile (default: 0.75): Fraction of tasks which must be complete before speculation is enabled for a particular stage. This decides when Spark starts speculating on the stragglers of a stage, and it can also be tuned based on Spark application behavior.

Although speculation looks like a ready-made feature to address the straggler menace, try it only after you have studied your application's behavior. I would advise you to first find the root cause of the stragglers and fix it accordingly, because running a task more than once could, at times, result in inconsistencies in application output.
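To make the interplay of the multiplier and quantile knobs concrete, here is a simplified model in plain Python. It is not Spark's scheduler code: the function name and the sample durations are assumptions, and the real scheduler applies additional conditions. The dictionary only restates the config keys and defaults listed above.

```python
import math
import statistics

# The settings named above, as they could be supplied via --conf or a SparkConf
SPECULATION_CONF = {
    "spark.speculation": "true",
    "spark.speculation.interval": "100ms",
    "spark.speculation.multiplier": "1.5",
    "spark.speculation.quantile": "0.75",
}

def speculation_candidates(finished_s, running_s, total_tasks,
                           multiplier=1.5, quantile=0.75):
    """Simplified model: indices of running tasks that would be speculated."""
    min_finished = max(math.floor(quantile * total_tasks), 1)
    if len(finished_s) < min_finished:
        return []  # too few completed tasks; speculation not yet enabled
    threshold = multiplier * statistics.median(finished_s)
    return [i for i, elapsed in enumerate(running_s) if elapsed > threshold]

finished = [10, 11, 12, 12, 13, 13, 14, 14]  # durations of completed tasks (s)
running = [15, 200]                          # elapsed time of running tasks (s)
print(speculation_candidates(finished, running, total_tasks=10))
print(speculation_candidates(finished[:5], running, total_tasks=10))
```

In this model, a higher multiplier makes Spark more tolerant of slow tasks, while a higher quantile delays speculation until more of the stage has completed.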

Here is the Task metrics snapshot of the earlier example after the corresponding application was reworked to address the straggler problem.

As you can see from the snapshot, the max time has now been reduced to 1.7 minutes from the earlier 28 minutes.
