Kafka Link: Ingesting Data From MongoDB to Capella Columnar

The blog focuses on integrating Capella Columnar with MongoDB, emphasizing the link that enables real-time data ingestion.

Analytics and OLAP systems exist to query operational databases and extract insights from them. Couchbase recently unveiled Capella Columnar, an addition to its NoSQL analytics offerings. Capella Columnar supports a range of data sources, including MongoDB, DynamoDB, and MySQL.

This blog explores the integration of Capella Columnar with MongoDB, focusing on the Link that ingests data in real time from MongoDB collections into Capella Columnar collections. Once the data is ingested, you can run analytical queries on it in Capella Columnar.

To analyze the data stored in MongoDB collections, you first create a Link with MongoDB as the source. The following sections walk through creating a Link for MongoDB Atlas on Capella Columnar, step by step.

As described in the Link documentation, connecting Capella Columnar to MongoDB Atlas requires the following prerequisites. They mainly involve configuring network access and defining appropriate database role permissions in MongoDB Atlas:

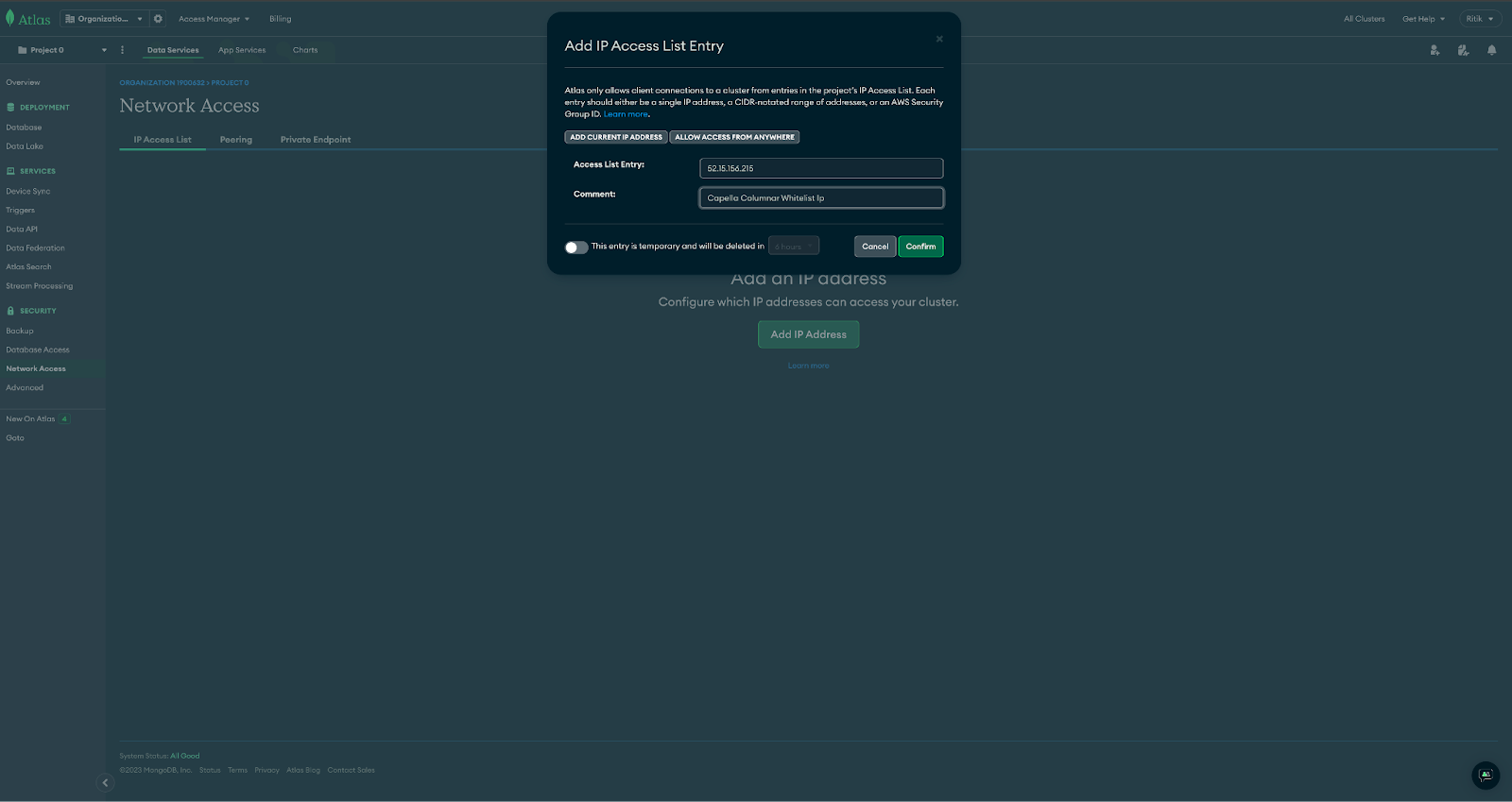

Granting Network Access

Add the IP address 52.15.156.215 to the IP access list in MongoDB Atlas. Allowing this address gives the Link the network access it needs to reach your cluster.

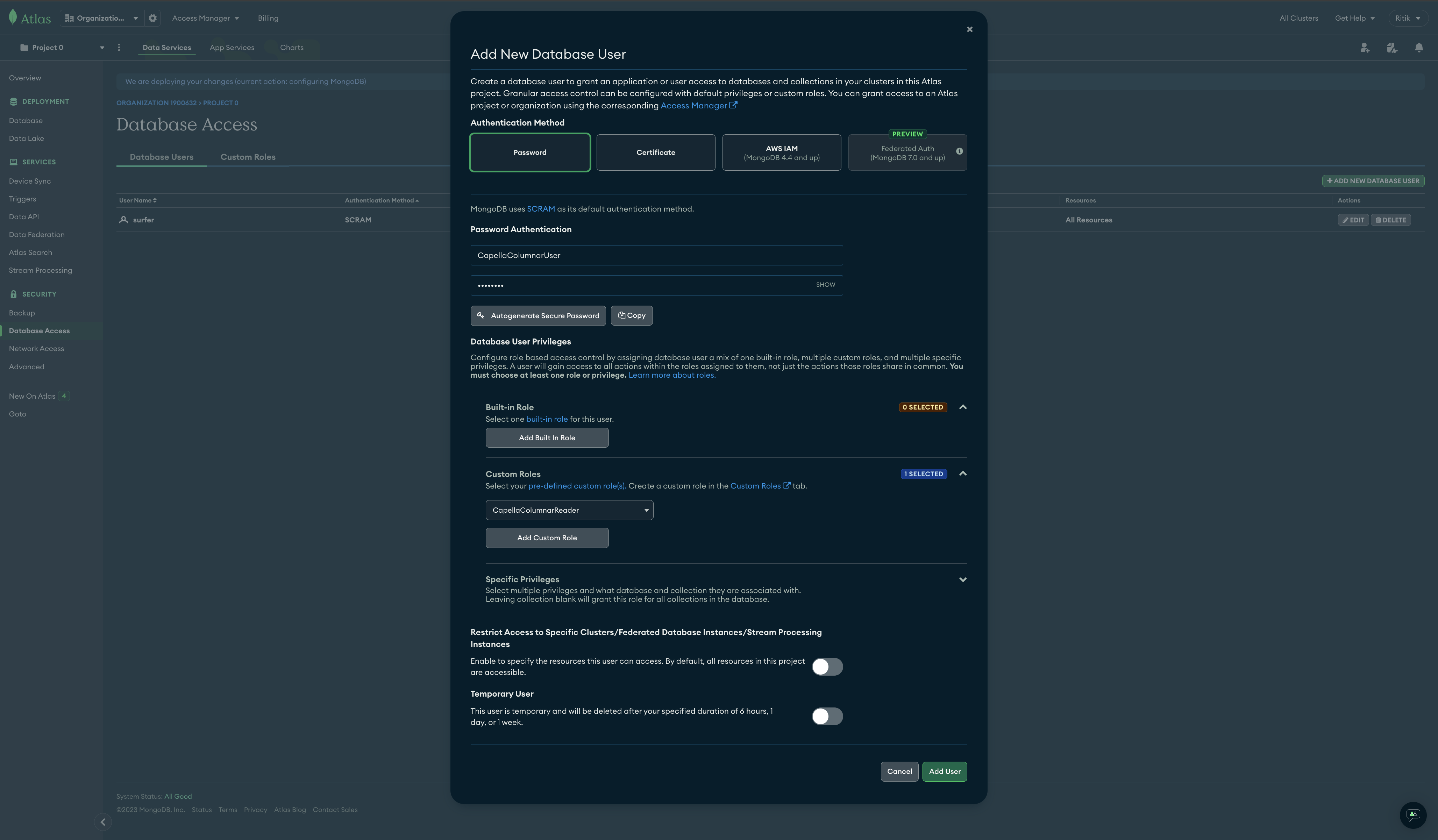
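Before connecting the Link, it can help to confirm that the access list you configured actually covers the address above. The following is a minimal sketch using only the Python standard library; the `is_ip_allowed` helper and the example entries are hypothetical and are not part of any Atlas or Capella tooling.

```python
import ipaddress

# IP address that this article says must be allowed in MongoDB Atlas.
CAPELLA_LINK_IP = "52.15.156.215"


def is_ip_allowed(ip: str, access_list: list[str]) -> bool:
    """Return True if `ip` is covered by any entry in `access_list`.

    Each entry may be a single address ("52.15.156.215") or a CIDR
    block ("52.15.156.0/24").
    """
    address = ipaddress.ip_address(ip)
    for entry in access_list:
        # strict=False tolerates entries written with host bits set,
        # for example "52.15.156.215/24".
        network = ipaddress.ip_network(entry, strict=False)
        if address in network:
            return True
    return False


if __name__ == "__main__":
    # Hypothetical access list entries, for illustration only.
    example_access_list = ["203.0.113.10", "52.15.156.215/32"]
    print(is_ip_allowed(CAPELLA_LINK_IP, example_access_list))
```

The check runs entirely locally against a list you supply; it does not query Atlas.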

Establishing Minimum Role for Reading MongoDB ChangeStream

Create a role in MongoDB Atlas that grants the permissions needed to read the MongoDB change stream.

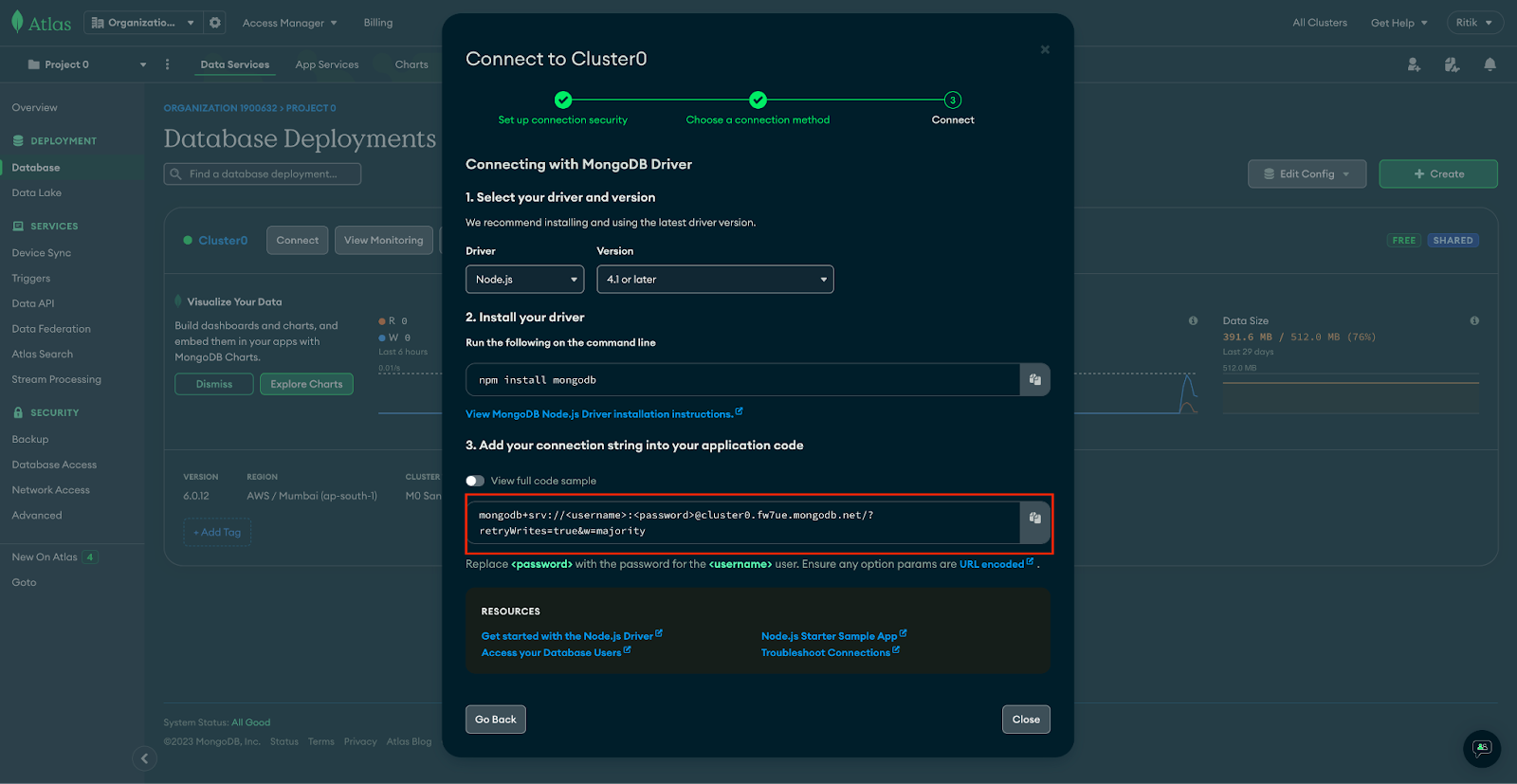
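To make the "minimum role" concrete: opening a change stream on a collection requires the `find` and `changeStream` actions on that collection. The sketch below builds that privilege in the document shape used by MongoDB's `createRole` command. In Atlas, custom roles are configured through the Atlas interface, so treat this as a description of what to select rather than something to run against Atlas. The function name and the database and collection names are hypothetical.

```python
def change_stream_read_privileges(database: str, collection: str) -> list[dict]:
    """Build privilege documents that allow reading a change stream.

    MongoDB requires the `find` and `changeStream` actions on a
    collection in order to open a change stream against it.
    """
    return [
        {
            "resource": {"db": database, "collection": collection},
            "actions": ["find", "changeStream"],
        }
    ]


if __name__ == "__main__":
    # "sales" and "orders" are placeholder names, not from the article.
    print(change_stream_read_privileges("sales", "orders"))
```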

Obtain the MongoDB Connection String

Retrieve the MongoDB connection string from MongoDB Atlas. The Kafka Link needs it to connect to your cluster.
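A common stumbling block with connection strings is special characters in the username or password, which must be percent-encoded. The sketch below assembles a `mongodb+srv` connection string with escaped credentials using the Python standard library. The `build_atlas_uri` helper, the user name, the password, and the host are all hypothetical; use the values Atlas shows for your own cluster.

```python
from urllib.parse import quote_plus


def build_atlas_uri(username: str, password: str, host: str) -> str:
    """Build a mongodb+srv connection string with escaped credentials."""
    # Characters such as "@", ":" and "/" in credentials would otherwise
    # be misread as URI delimiters, so they are percent-encoded here.
    return f"mongodb+srv://{quote_plus(username)}:{quote_plus(password)}@{host}/"


if __name__ == "__main__":
    # Placeholder credentials and host, for illustration only.
    print(build_atlas_uri("link_user", "p@ss/word", "cluster0.example.mongodb.net"))
```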

Steps Involved in Creating Links to MongoDB

Create a Capella Columnar Database

Navigate to the Capella Columnar tab and create a new database.

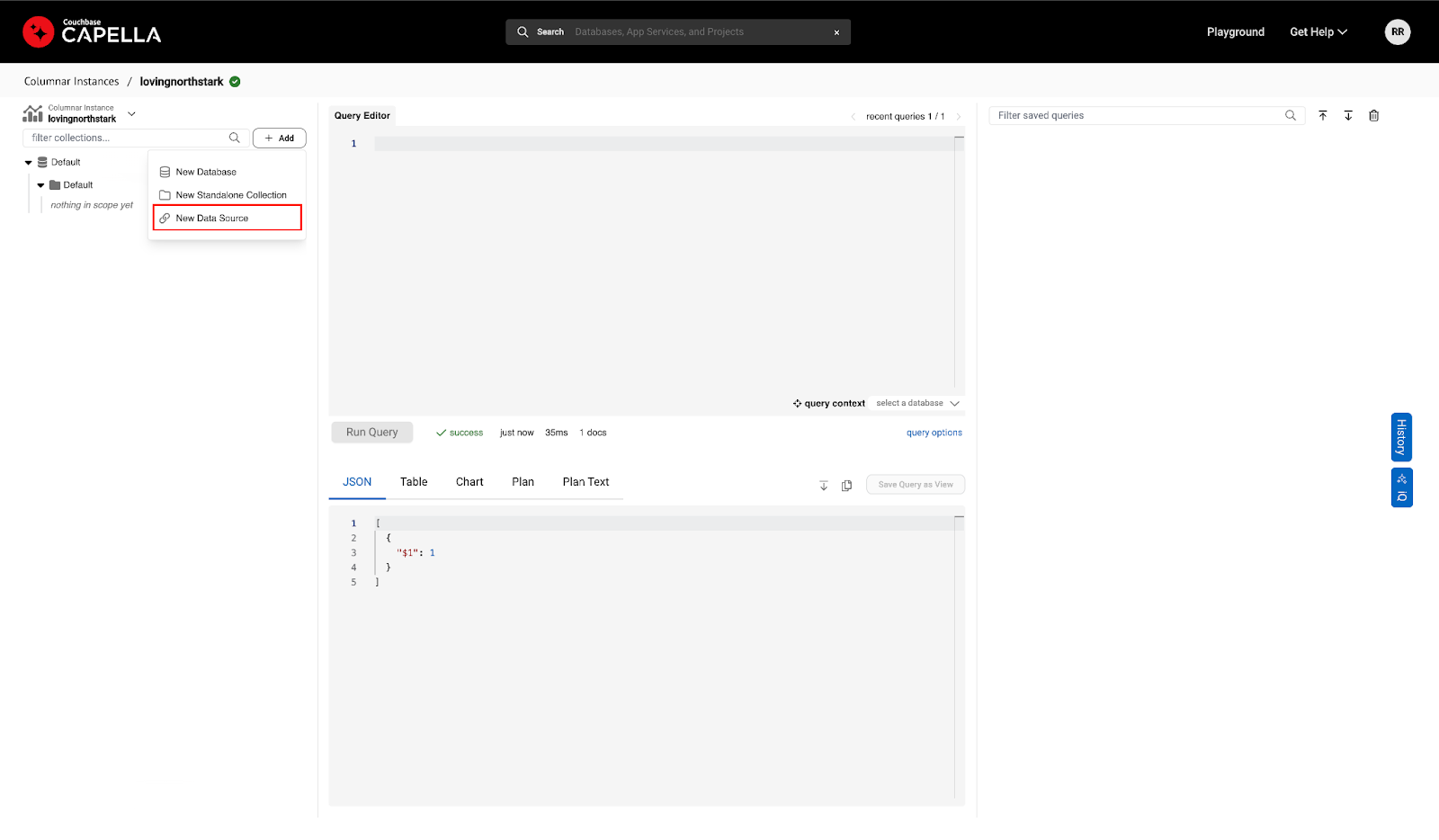

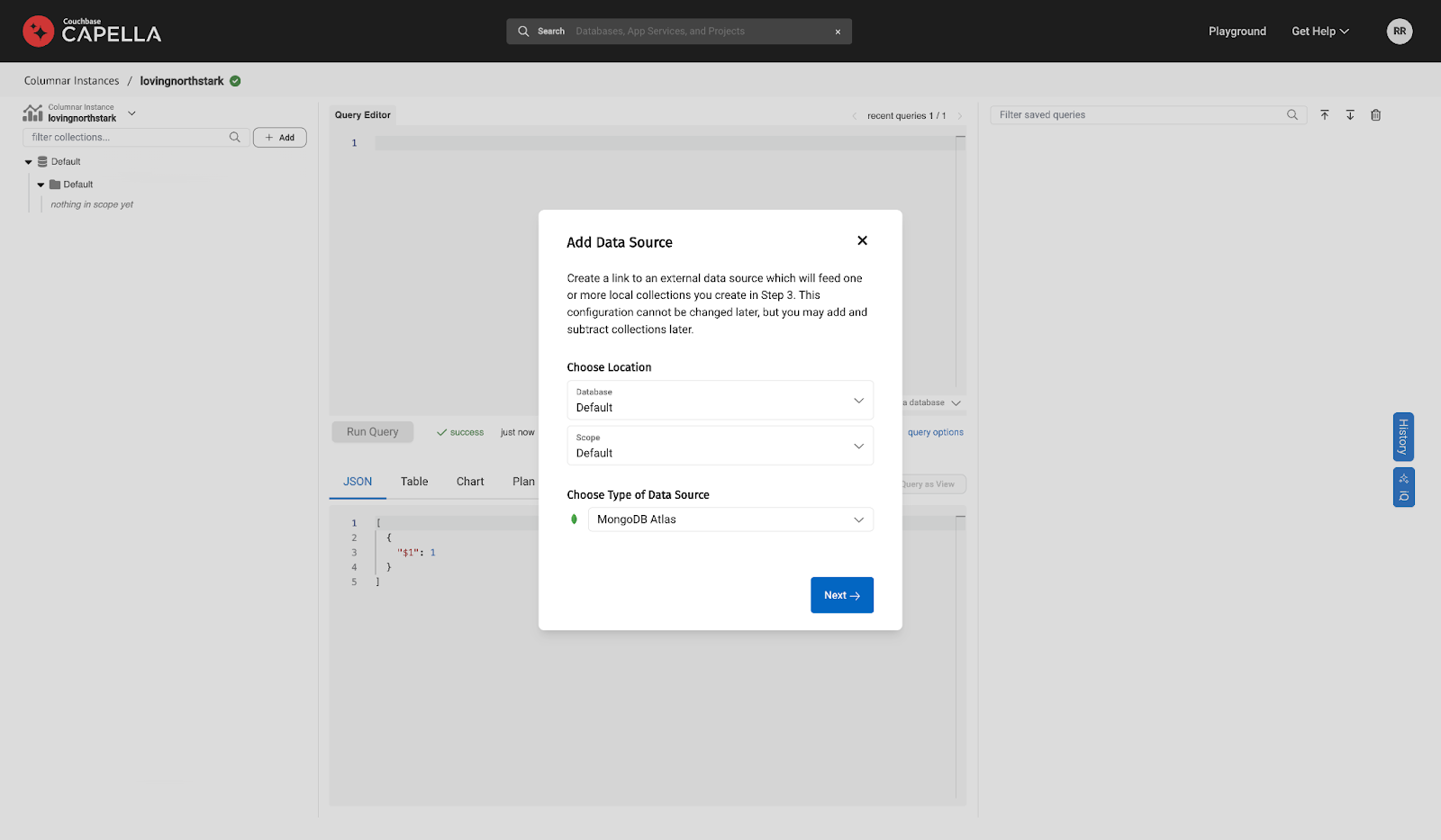

Create a Datasource

Click on "Create a Datasource" to begin setting up the Link.

Select the Required SourceDB

Choose MongoDB from the list of available databases.

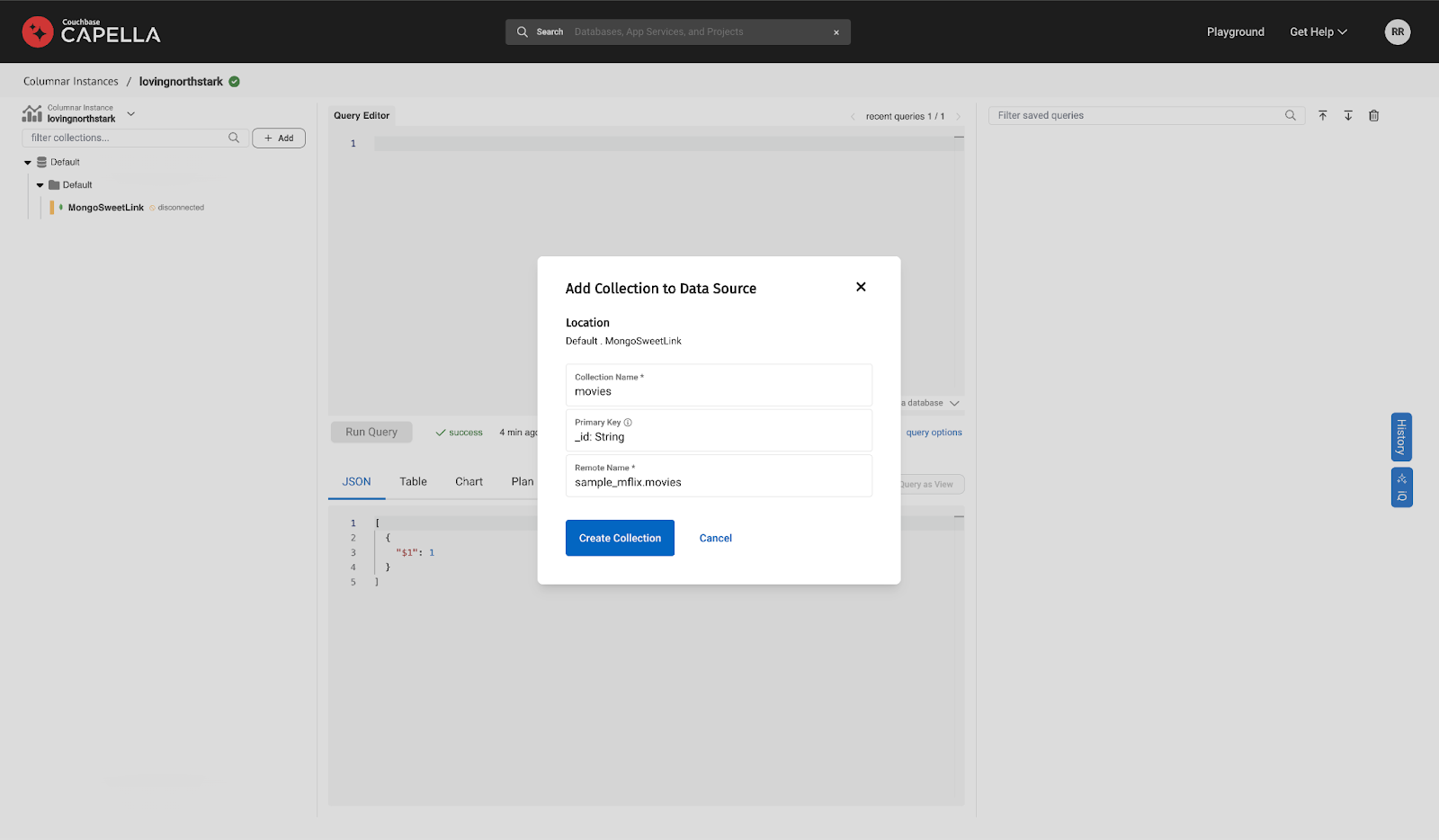

Provide MongoDB Source Details

Fill in the required details related to MongoDB and the primary key of the target Capella Columnar collection.

- Collection Name: Enter the name of the destination collection into which the data will be ingested.

- Primary Key: Specify the primary key and its type for the Capella Columnar collection.

- Remote Name: Provide the name of the source collection on MongoDB Atlas.

Note: Ensure that the primary key and its type are consistent between the MongoDB Atlas collection and the Capella Columnar collection so that ingestion works correctly. In this illustration, use _id as the primary key, which is of type ObjectId in MongoDB Atlas.

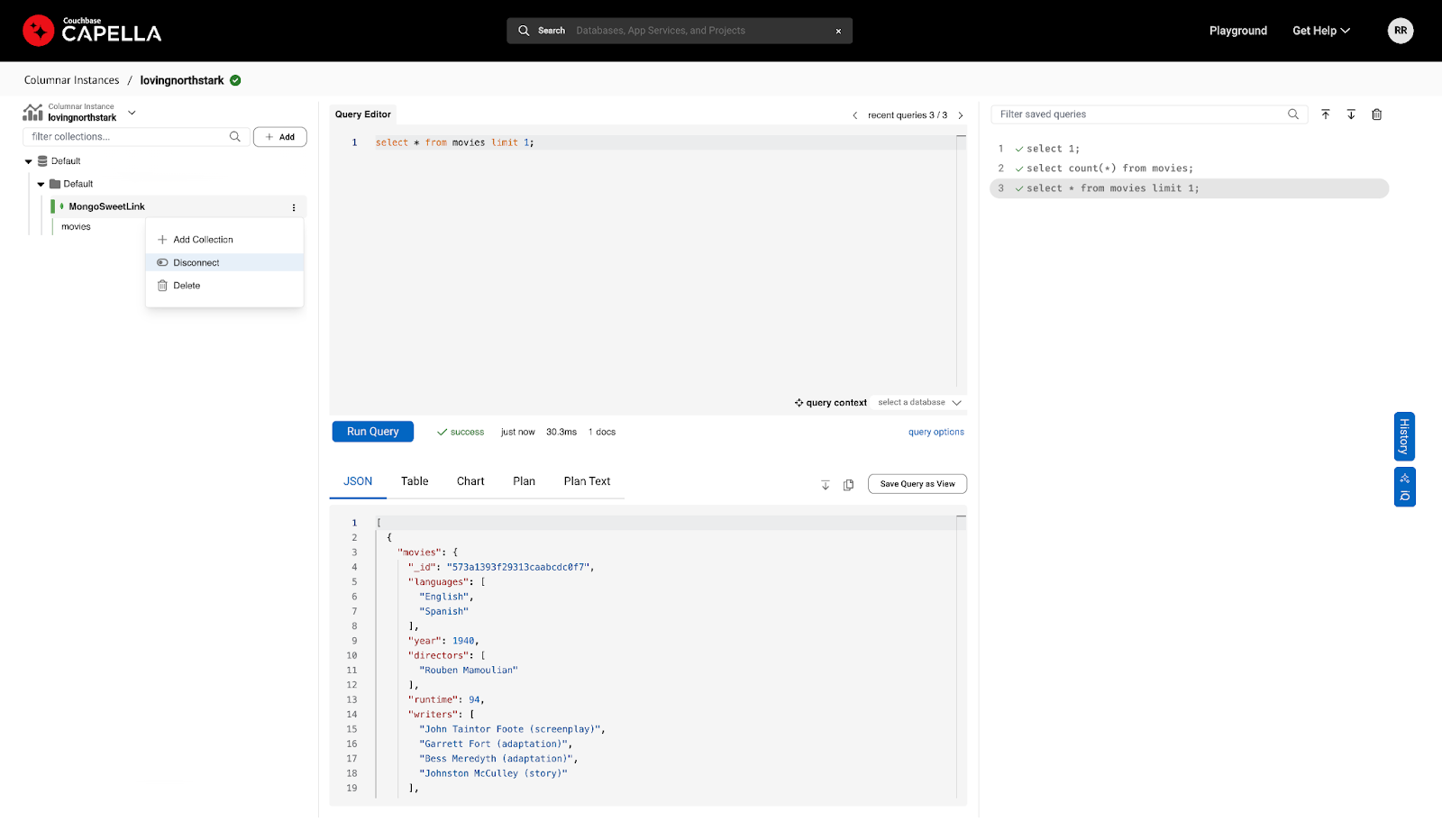

Time To Connect the Link

After providing the necessary details, connect the Link and allow a few minutes for data to start flowing.

Monitor Connection State

Monitor the connection state, which will transition from DISCONNECTED to CONNECTED once the data starts flowing.
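If you want to script the wait rather than watch the UI, the transition from DISCONNECTED to CONNECTED can be polled with a timeout. The sketch below is generic: `get_state` is a hypothetical callable that you would supply to return the Link's current state as a string. This article does not describe a specific Capella API for reading that state, so none is assumed here.

```python
import time
from typing import Callable


def wait_until_connected(
    get_state: Callable[[], str],
    timeout_s: float = 300.0,
    poll_interval_s: float = 5.0,
) -> bool:
    """Poll `get_state` until it returns "CONNECTED" or the timeout expires.

    Returns True if the Link reached CONNECTED, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if get_state() == "CONNECTED":
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval_s)


if __name__ == "__main__":
    # Simulated state source standing in for a real status check.
    states = iter(["DISCONNECTED", "DISCONNECTED", "CONNECTED"])
    print(wait_until_connected(lambda: next(states), timeout_s=10, poll_interval_s=0.1))
```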

Analyze Real-Time Data Changes

With the Link connected, changes in the MongoDB collection are reflected in the Capella Columnar dataset in real time.
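To illustrate what "reflected in real time" means, the sketch below applies MongoDB change stream events to an in-memory dictionary keyed by `_id`. The event fields used (`operationType`, `documentKey`, `fullDocument`, `updateDescription`) are standard change event fields. The `apply_change_event` function is hypothetical and purely conceptual; it is not a description of how Capella Columnar implements ingestion.

```python
def apply_change_event(dataset: dict, event: dict) -> None:
    """Apply one MongoDB change event to `dataset`, keyed by document _id."""
    key = event["documentKey"]["_id"]
    operation = event["operationType"]

    if operation in ("insert", "replace"):
        dataset[key] = event["fullDocument"]
    elif operation == "update":
        if event.get("fullDocument") is not None:
            # Present on updates only when the stream is opened with
            # full-document lookup enabled.
            dataset[key] = event["fullDocument"]
        else:
            # Fall back to the reported field changes. Simplification:
            # only top-level fields are handled; dotted paths for nested
            # fields are not expanded.
            changes = event.get("updateDescription", {})
            document = dataset.setdefault(key, {"_id": key})
            document.update(changes.get("updatedFields", {}))
            for field in changes.get("removedFields", []):
                document.pop(field, None)
    elif operation == "delete":
        dataset.pop(key, None)


if __name__ == "__main__":
    mirror = {}
    apply_change_event(mirror, {
        "operationType": "insert",
        "documentKey": {"_id": 1},
        "fullDocument": {"_id": 1, "qty": 5},
    })
    print(mirror)
```

Inserts, updates, and deletes in the source each change the mirrored copy, which is why queries on the target collection see current data without a separate batch load.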

Disconnect the Link (Optional)

To stop data ingestion, disconnect the Link. This halts the flow of data, but the ingested data remains accessible for analytical queries.

By following these steps, you can easily initiate data ingestion in Capella Columnar, empowering you to perform real-time analytics and gain valuable insights from your data.

Additional Resources

Follow the provided links to learn more about how Capella Columnar addresses specific needs.

- Read Couchbase Capella Columnar Adds Real-time Data Analytics Service

- Watch Couchbase Announces New Capella Columnar Service

- Read the Advantages of Couchbase for real-time analytics and sign up for the preview
