MuleSoft Logs Integration With Datadog

In this article, learn how to integrate MuleSoft application logs with an external data system, Datadog, using the Log4j2 file.


In this article, we will see how to integrate MuleSoft application logs with an external data system using the Log4j2 file.

Some organizations want to keep logs for more than 30 days or build custom dashboards on top of them, which means the logs have to be stored in an external data system. The easiest and most commonly recommended way to send logs to an external system is through the application's Log4j2 file.

This article integrates MuleSoft application logs with one such external system: Datadog. Datadog Log Management unifies logs, metrics, and traces in a single view, giving you rich context for analyzing log data.

Integration of MuleSoft Logs With Datadog

To integrate MuleSoft logs with Datadog, follow the steps below:

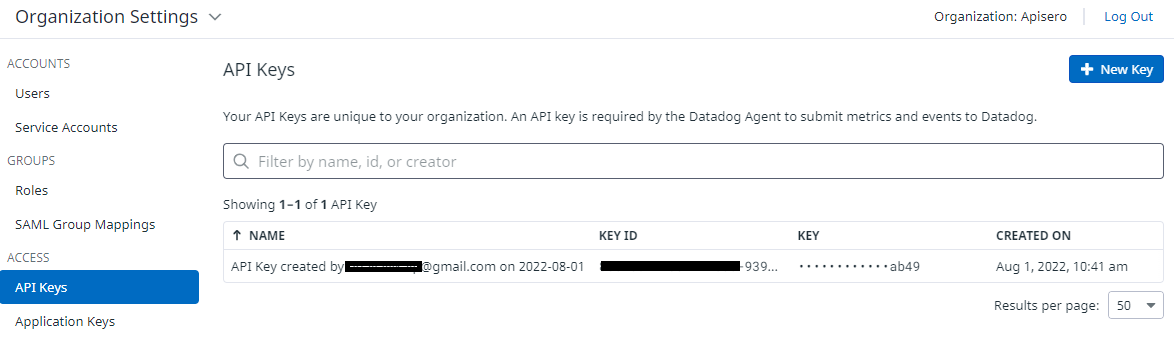

2. Next, get an API key. Go to Organization Settings and copy the API key. If more than one source pushes logs to Datadog, you can also create a separate key for this application.
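Before putting the key into the application's runtime properties, it can be worth confirming that it is valid. The sketch below uses only the Python standard library to call Datadog's API-key validation endpoint (`/api/v1/validate`). It assumes the US1 site (`datadoghq.com`), matching the intake URL used in this article; `build_validate_request` and `is_valid_key` are hypothetical helper names, not part of any Datadog SDK.

```python
import json
import urllib.error
import urllib.request


def build_validate_request(api_key, site="datadoghq.com"):
    """Build a GET request for Datadog's API-key validation endpoint."""
    # Assumption: US1 site by default; other sites use another domain (e.g. datadoghq.eu).
    return urllib.request.Request(
        url=f"https://api.{site}/api/v1/validate",
        headers={"DD-API-KEY": api_key},
        method="GET",
    )


def is_valid_key(api_key, site="datadoghq.com", timeout=10.0):
    """Return True if Datadog reports the key as valid (performs a network call)."""
    request = build_validate_request(api_key, site)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = json.load(response)
    except urllib.error.HTTPError:
        # A rejected key comes back as an HTTP error status (for example 403).
        return False
    return bool(body.get("valid", False))
```

Calling `is_valid_key("<your key>")` from a workstation returns `True` for a working key; a `False` result is a hint to recheck the key before deploying.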

3. Modify the Log4j2 file (log4j2.xml) and add a Datadog HTTP appender to the Appenders section.

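```xml
<!-- Datadog HTTP appender: sends log events as JSON to the Datadog logs intake.
     The & separators are written as &amp; because a bare & is not allowed in an XML attribute. -->
<Http name="DATADOG"
      url="https://http-intake.logs.datadoghq.com/api/v2/logs?host=${sys:hostName}&amp;ddsource=Mulesoft&amp;service=${sys:application.name}&amp;ddtags=env:${sys:env}">
  <Property name="Content-Type" value="application/json" />
  <!-- API key supplied at runtime as the ddapikey property -->
  <Property name="DD-API-KEY" value="${sys:ddapikey}" />
  <JsonLayout compact="false" properties="true" />
</Http>
```

You can add more tags and JSON parameters as your organization requires.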

Refer to the Datadog HTTP logs API documentation and the Log4j2 JsonLayout documentation for more information.
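To see what the appender sends, here is a rough Python equivalent of a single request with the same intake URL, query parameters, and headers as the `DATADOG` appender. It is a sketch for testing connectivity from a workstation, not a replacement for the appender: the event body is a hypothetical, simplified subset of the fields `JsonLayout` produces, and `build_log_request` and `send` are illustrative names.

```python
import json
import urllib.parse
import urllib.request

# Same intake endpoint as the appender's url attribute (Datadog US1 site assumed).
INTAKE_URL = "https://http-intake.logs.datadoghq.com/api/v2/logs"


def build_log_request(api_key, host, app_name, env, message, level="INFO"):
    """Build a POST request shaped like one event from the DATADOG appender."""
    # Same query parameters as in log4j2.xml; urlencode escapes the values safely.
    query = urllib.parse.urlencode({
        "host": host,
        "ddsource": "Mulesoft",
        "service": app_name,
        "ddtags": f"env:{env}",
    })
    # Hypothetical, simplified event; the real JsonLayout output has more fields.
    event = {"level": level, "loggerName": "datadog-sketch", "message": message}
    return urllib.request.Request(
        url=f"{INTAKE_URL}?{query}",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json", "DD-API-KEY": api_key},
        method="POST",
    )


def send(request, timeout=10.0):
    """Send the request and return the HTTP status code (performs a network call)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

`send(build_log_request(...))` returns the HTTP status; Datadog's logs intake answers 202 when it accepts the payload.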

4. Reference the Datadog appender from the root logger:

```xml
<AsyncRoot level="INFO">
  <!-- existing file appender -->
  <AppenderRef ref="file" />
  <!-- also send the same events to Datadog -->
  <AppenderRef ref="DATADOG" />
</AsyncRoot>
```

5. Once the Log4j2 changes are done, pass the following parameters at runtime:

- application.name

- ddapikey (the API key copied in step 2)

- env

These are user-defined parameters. You can rename them to follow your organization's naming conventions, as long as the matching ${sys:...} references in the Log4j2 file are renamed too.

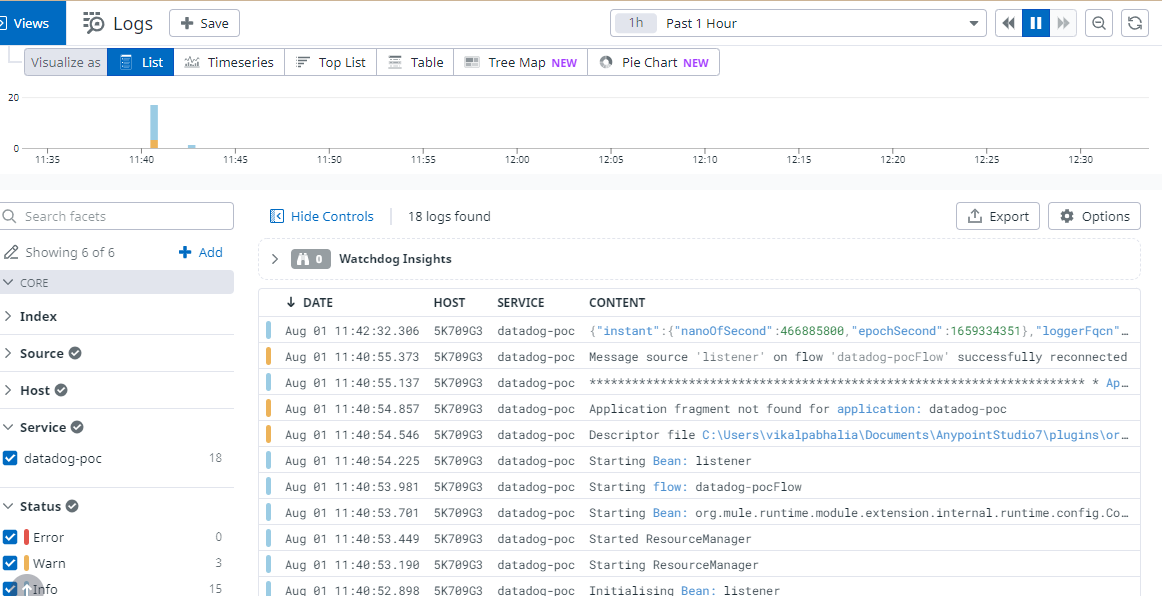
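The `${sys:...}` placeholders in the Log4j2 file are lookups that Log4j2 resolves against Java system properties when it loads the configuration, which is why these parameters have to be supplied at runtime. The hypothetical Python helper below imitates that substitution in a simplified way (no default values or nested lookups): supplied values are filled in, and a lookup with no matching property is left as literal text, which is Log4j2's usual behavior for unresolved lookups. The property values are made up for the example.

```python
import re

# Matches ${sys:name} and captures "name" (simplified: no defaults or nesting).
SYS_LOOKUP = re.compile(r"\$\{sys:([^}]+)\}")


def resolve_sys_lookups(text, system_properties):
    """Replace each ${sys:name} with its value; leave unknown names as literal text."""
    def substitute(match):
        return system_properties.get(match.group(1), match.group(0))
    return SYS_LOOKUP.sub(substitute, text)


# Hypothetical runtime properties, as you might enter them for the application.
template = "service=${sys:application.name}&ddtags=env:${sys:env}"
print(resolve_sys_lookups(template, {"application.name": "orders-api", "env": "dev"}))
print(resolve_sys_lookups(template, {"application.name": "orders-api"}))  # env not supplied
```

The first call prints `service=orders-api&ddtags=env:dev`; the second leaves `${sys:env}` in place, so a forgotten parameter shows up as placeholder text rather than a value.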

6. Once you deploy the application, you will see logs flowing into Datadog.

7. To view the logs in Datadog:

- Log in to Datadog.

- Click Logs, and you will see the MuleSoft logs.


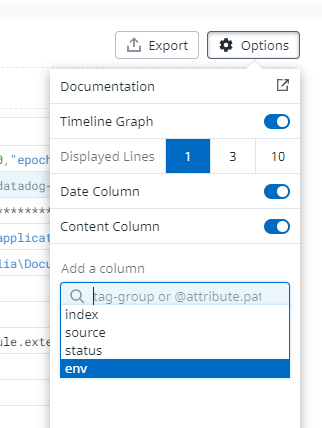

8. You can choose which columns to display: click the Options button and select the columns you want.

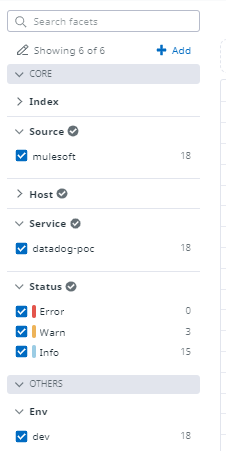

9. You can filter logs using the facets in the left panel.
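The same filters can also be typed into the search bar using Datadog's `key:value` search syntax. The small hypothetical helper below builds such a query from the `service` and `env` values the `DATADOG` appender sends; in Datadog search, space-separated terms are combined with AND. Filtering on `status:error` assumes Datadog maps the `level` field from `JsonLayout` to the log status, which is worth verifying in your own account.

```python
def build_log_query(service, env=None, status=None):
    """Build a Datadog log search query from the appender's service and env values."""
    terms = [f"service:{service}"]
    if env:
        terms.append(f"env:{env}")  # matches the env tag sent through ddtags
    if status:
        terms.append(f"status:{status}")  # assumes level is mapped to the log status
    # Space-separated terms are combined with AND in Datadog search syntax.
    return " ".join(terms)


print(build_log_query("orders-api", env="dev", status="error"))
```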

Enable Custom Log4j2 in the CloudHub Runtime

When the application is deployed to CloudHub, the Log4j2 file packaged with the application is ignored, and the Mule runtime uses a default Log4j2 configuration instead.

To enable the custom Log4j2 file, select the Disable CloudHub logs check box in the application's settings in Runtime Manager.

If you need CloudHub logs and Datadog at the same time, you have to define both the CloudHub log appender and the Datadog appender in the Log4j2 file and reference both from the root logger.

Follow the steps in the MuleSoft documentation to add the CloudHub log appender to the Log4j2 file as well. Here is a complete Log4j2 file for reference:

```xml
<?xml version="1.0" encoding="utf-8"?>
<Configuration>
  <!--These are some of the loggers you can enable. There are several more
  you can find in the documentation. Besides this log4j configuration, you
  can also use Java VM environment variables to enable other logs like network
  (-Djavax.net.debug=ssl or all) and Garbage Collector (-XX:+PrintGC). These
  will be appended to the console, so you will see them in the mule_ee.log file. -->
  <Appenders>
    <!-- Local rolling log file -->
    <RollingFile name="file"
        fileName="${sys:mule.home}${sys:file.separator}logs${sys:file.separator}datadog-poc.log"
        filePattern="${sys:mule.home}${sys:file.separator}logs${sys:file.separator}datadog-poc-%i.log">
      <PatternLayout pattern="%-5p %d [%t] [processor: %X{processorPath}; event: %X{correlationId}] %c: %m%n" />
      <SizeBasedTriggeringPolicy size="10 MB" />
      <DefaultRolloverStrategy max="10" />
    </RollingFile>
    <!-- Datadog HTTP appender (& escaped as &amp; inside the attribute) -->
    <Http name="DATADOG"
        url="https://http-intake.logs.datadoghq.com/api/v2/logs?host=${sys:hostName}&amp;ddsource=Mulesoft&amp;service=${sys:application.name}&amp;ddtags=env:${sys:env}">
      <Property name="Content-Type" value="application/json" />
      <Property name="DD-API-KEY" value="${sys:ddapikey}" />
      <JsonLayout compact="false" properties="true" />
    </Http>
    <!-- CloudHub log appender, so logs still appear in Runtime Manager -->
    <Log4J2CloudhubLogAppender name="CLOUDHUB"
        addressProvider="com.mulesoft.ch.logging.DefaultAggregatorAddressProvider"
        applicationContext="com.mulesoft.ch.logging.DefaultApplicationContext"
        appendRetryIntervalMs="${sys:logging.appendRetryInterval}"
        appendMaxAttempts="${sys:logging.appendMaxAttempts}"
        batchSendIntervalMs="${sys:logging.batchSendInterval}"
        batchMaxRecords="${sys:logging.batchMaxRecords}"
        memBufferMaxSize="${sys:logging.memBufferMaxSize}"
        journalMaxWriteBatchSize="${sys:logging.journalMaxBatchSize}"
        journalMaxFileSize="${sys:logging.journalMaxFileSize}"
        clientMaxPacketSize="${sys:logging.clientMaxPacketSize}"
        clientConnectTimeoutMs="${sys:logging.clientConnectTimeout}"
        clientSocketTimeoutMs="${sys:logging.clientSocketTimeout}"
        serverAddressPollIntervalMs="${sys:logging.serverAddressPollInterval}"
        serverHeartbeatSendIntervalMs="${sys:logging.serverHeartbeatSendIntervalMs}"
        statisticsPrintIntervalMs="${sys:logging.statisticsPrintIntervalMs}">
      <PatternLayout pattern="[%d{MM-dd HH:mm:ss}] %-5p %c{1} [%t]: %m%n" />
    </Log4J2CloudhubLogAppender>
  </Appenders>
  <Loggers>
    <!-- Http Logger shows wire traffic on DEBUG -->
    <!--AsyncLogger name="org.mule.service.http.impl.service.HttpMessageLogger" level="DEBUG"/ -->
    <AsyncLogger name="org.mule.service.http" level="WARN" />
    <AsyncLogger name="org.mule.extension.http" level="WARN" />
    <!-- Mule logger -->
    <AsyncLogger name="org.mule.runtime.core.internal.processor.LoggerMessageProcessor" level="INFO" />
    <!-- Root logger writes to the local file, Datadog, and CloudHub -->
    <AsyncRoot level="INFO">
      <AppenderRef ref="file" />
      <AppenderRef ref="DATADOG" />
      <AppenderRef ref="CLOUDHUB" />
    </AsyncRoot>
    <AsyncLogger name="com.gigaspaces" level="ERROR" />
    <AsyncLogger name="com.j_spaces" level="ERROR" />
    <AsyncLogger name="com.sun.jini" level="ERROR" />
    <AsyncLogger name="net.jini" level="ERROR" />
    <AsyncLogger name="org.apache" level="WARN" />
    <AsyncLogger name="org.apache.cxf" level="WARN" />
    <AsyncLogger name="org.springframework.beans.factory" level="WARN" />
    <AsyncLogger name="org.mule" level="INFO" />
    <AsyncLogger name="com.mulesoft" level="INFO" />
    <AsyncLogger name="org.jetel" level="WARN" />
    <AsyncLogger name="Tracking" level="WARN" />
  </Loggers>
</Configuration>
```
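Mistakes in this file are easy to miss until after deployment, so a quick local check can help. The Python sketch below (standard library only; `check_log4j2` and `check_log4j2_file` are hypothetical helpers) verifies two things: that the file is well-formed XML, which catches an unescaped `&` in the Datadog URL, and that every `AppenderRef` points to an appender defined under `Appenders`. It does not validate Log4j2-specific attributes or plugin names.

```python
import xml.etree.ElementTree as ET


def check_log4j2(xml_text):
    """Return a list of problems; an empty list means none of the checked issues were found."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as error:
        # A bare & inside an attribute (for example the Datadog url) ends up here.
        return [f"not well-formed XML: {error}"]

    # Collect the names of appenders declared directly under <Appenders>.
    defined = set()
    appenders = root.find("Appenders")
    if appenders is not None:
        defined = {child.get("name") for child in appenders if child.get("name")}

    # Every AppenderRef anywhere in the file must point at one of them.
    problems = []
    for ref in root.iter("AppenderRef"):
        name = ref.get("ref")
        if name not in defined:
            problems.append(f"AppenderRef '{name}' has no matching appender")
    return problems


def check_log4j2_file(path):
    """Read a log4j2.xml file from disk and run check_log4j2 on it."""
    with open(path, encoding="utf-8") as handle:
        return check_log4j2(handle.read())
```

For example, `check_log4j2_file("src/main/resources/log4j2.xml")` (the usual location in a Mule project) returns an empty list when neither problem is found.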

In this article, we learned how to integrate MuleSoft logs with an external system, Datadog, using the Log4j2 file. Happy learning.

Opinions expressed by DZone contributors are their own.
