Performance Engineering in the Age of Agile and DevOps

Performance has to be closer than ever for companies that wish to release faster than ever.


Many organizations are moving toward an Agile methodology to reduce time to market, improve the quality of deliverables, and align business goals with technology. Unlike the waterfall methodology, Agile lets business groups provide feedback while the product is being built. Because software is released iteratively in shorter release cycles, performance testing windows often shrink as well. This challenges the conventional performance testing and engineering methodology used in the traditional waterfall model.

This article discusses different aspects of performance testing and engineering in an Agile environment, along with innovative ways to mitigate the related challenges.

Performance Testing in Waterfall vs. Agile SDLC

In the traditional waterfall model, performance testing happens toward the end, just before user acceptance testing (UAT) or in parallel with system integration testing (SIT). This often results in applications that do not meet their performance requirements. Performance issues are also often mitigated by adding expensive hardware rather than by optimizing code. Sometimes they surface as production issues that directly affect the end-user experience and the business.

In the Agile model, functionality is delivered incrementally. Because release cycles are short, most activities (requirements gathering, development, and testing) run in parallel. Performance testing of each release or sprint has to be completed in a very short time frame (1 to 2 weeks, depending on the release cycle).

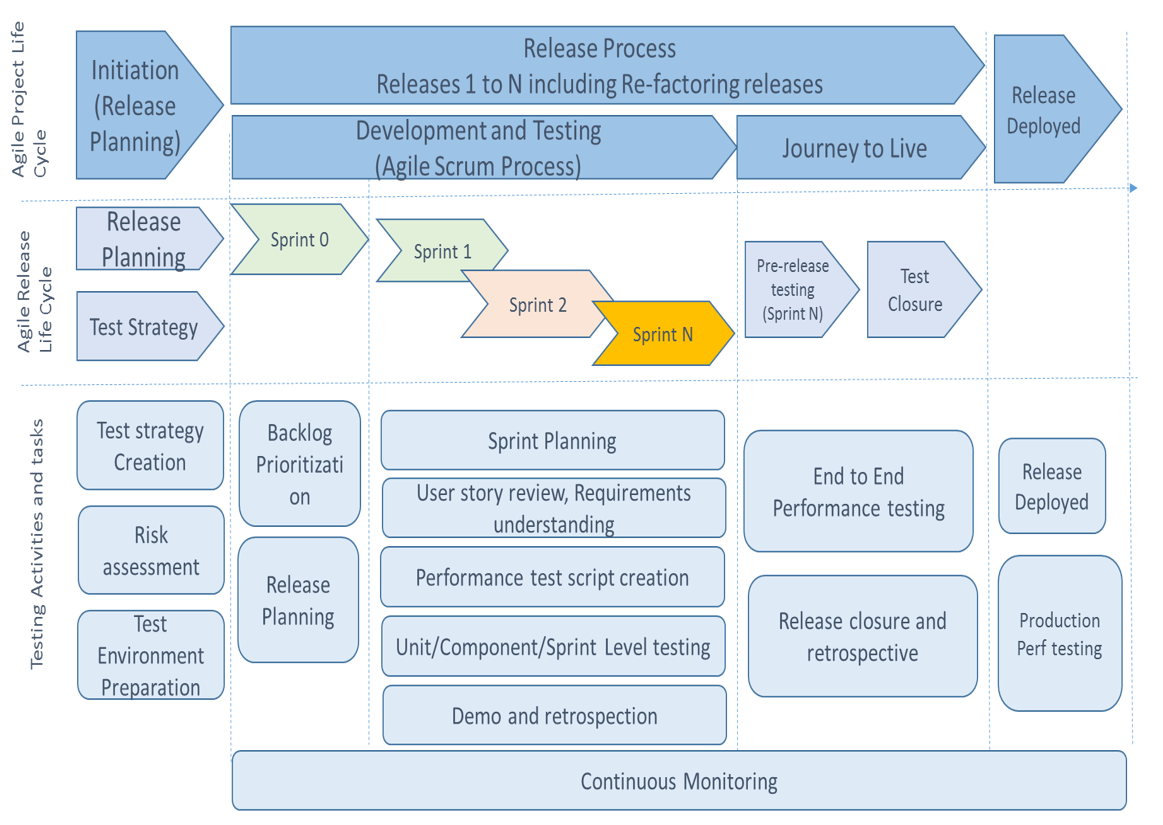

Performance assurance is an integral part of the SDLC, and in Agile it takes higher priority than other non-functional requirements. Performance is assessed incrementally at every stage. Performance testing and engineering (PT&E) becomes part of everyone's job, whether program manager, development manager, developer, SDET, or network operations team. The following diagram indicates PT&E activities in the Agile lifecycle.

Performance Engineering Activities in Agile

Performance Testing and Engineering Toolsets in Agile World

Because automation at every stage is key, it is essential to have a toolset whose tools integrate quickly with each other. Let us take a quick look at the widely used tools from a PT&E perspective:

- Application lifecycle management

- Source code management

- Build and continuous integration

- Code analysis

- Load simulation

- Performance monitoring and KPI trend

PT&E Challenges

The key challenges faced in performance engineering exercise in Agile SDLC can be attributed to people, processes, and technology. The following table indicates the key challenges:

| Category | Description |
|---|---|
| Technology | A full-scale environment is not available for performance testing |
| Process | Limited time is available for environment setup, load test scripting, and data setup to execute a full-scale load test |
| Process | Unstable builds: because development and testing run simultaneously, a cut-off time is needed within a sprint. Changes made after the cut-off must be tested in the next sprint. |
| Process | Limited or no support from developers to fix broken environments or to explain how a feature works |
| Process | Very little documentation for understanding feature design |
| People | Difficulty finding talent with the right skill set |

Let us see how these challenges can be overcome by deploying a framework that supports effective performance risk analysis, early performance testing, and appropriate use of technology and tools. The key strategic elements of this framework are:

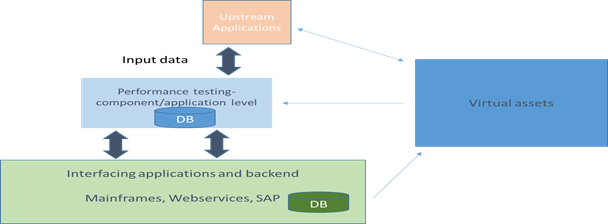

Service Virtualization Usage in PT&E

Service virtualization can speed up performance testing by emulating the behavior of components even before they are available or developed. It fills the gaps left by missing system components by simulating their responses in a predetermined manner, which enables early performance validation. Widely used tools include CA LISA and TOSCA.

Service virtualization can be used to emulate third-party services, and even whole backend systems, for performance and integration tests.
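As a minimal sketch of the idea (not a replacement for CA LISA or TOSCA), the Python standard library can serve a virtual service that returns canned JSON after a fixed delay. The endpoint path, the payload, and the 50 ms latency below are hypothetical placeholders for whatever the real dependency would return.

```python
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical canned responses keyed by request path; a real virtual service
# would be recorded from, or modeled on, the actual dependency.
CANNED_RESPONSES = {
    "/inventory/42": {"sku": 42, "in_stock": True},
}
SIMULATED_LATENCY_S = 0.05  # assumed response time of the missing backend


class VirtualServiceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Emulate the dependency's response time before answering.
        time.sleep(SIMULATED_LATENCY_S)
        body = CANNED_RESPONSES.get(self.path)
        status = 200 if body is not None else 404
        payload = json.dumps(body if body is not None else {"error": "not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):
        pass  # suppress per-request logging so load-test output stays readable


def start_virtual_service(port=0):
    """Start the stub on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), VirtualServiceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

During a load test, the application's dependency URL would point at `http://127.0.0.1:<port>/...`, and `server.shutdown()` stops the stub afterward. Keeping the latency configurable lets the team see how the application behaves when the real backend turns out slower than expected.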

ML-Led Performance Validation

ML is next-generation automation, and Agile enables faster release cycles, so Agile and AI complement each other. ML techniques can detect anomalies, draw patterns from sprint-level test results, and find defects. ML can also be applied to post-production analysis and to trend analysis across sprints. For example, a clustering model can group VMs or containers by CPU usage to check whether all VMs/containers of an application are utilized at the same level. This helps separate abnormal VMs/containers that need further analysis. Similarly, a predictive model can be built on the correlation between resource usage and response time. The model produces a predicted value for a given load. That value can be compared with the actual value from the test so that any anomaly is called out as part of the automated analysis process.
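The clustering example can be sketched as a basic two-cluster k-means on each VM's or container's average CPU utilization over the test window. This is a hand-rolled illustration under a few assumptions: the CPU percentages are assumed to be already exported from the monitoring tool, and the 20-percentage-point gap that decides whether a split is worth investigating is a hypothetical threshold. A real setup might use a library such as scikit-learn and more features than CPU alone.

```python
from statistics import mean


def two_means_1d(values, max_iterations=20):
    """Basic k-means with k=2 on one-dimensional data (e.g. mean CPU % per node)."""
    low, high = min(values), max(values)  # start the centroids at the extremes
    for _ in range(max_iterations):
        # Assign each value to its nearest centroid (ties go to the low cluster).
        low_group = [v for v in values if abs(v - low) <= abs(v - high)]
        high_group = [v for v in values if abs(v - low) > abs(v - high)]
        new_low = mean(low_group)
        new_high = mean(high_group) if high_group else high
        if (new_low, new_high) == (low, high):
            break  # converged: the centroids no longer move
        low, high = new_low, new_high
    return low, high


def flag_uneven_nodes(cpu_by_node, min_gap_pct=20.0):
    """Return the nodes in the smaller cluster when the two clusters are far apart."""
    low, high = two_means_1d(list(cpu_by_node.values()))
    if high - low < min_gap_pct:
        return []  # utilization is roughly even; nothing to investigate
    low_nodes = [n for n, c in cpu_by_node.items() if abs(c - low) <= abs(c - high)]
    high_nodes = [n for n, c in cpu_by_node.items() if abs(c - low) > abs(c - high)]
    # The minority cluster holds the outliers; on a tie, report the busier group.
    minority = low_nodes if len(low_nodes) < len(high_nodes) else high_nodes
    return sorted(minority)
```

For example, `flag_uneven_nodes({"vm-1": 41.0, "vm-2": 43.5, "vm-3": 40.2, "vm-4": 88.0})` returns `["vm-4"]`, which singles out the VM that is running much hotter than its peers.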

The same techniques can also be used in production for live monitoring and alerting. Many APM tools with AI/ML capabilities, such as Dynatrace, Instana, New Relic, and AppDynamics, can be used as well.
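Here is a minimal sketch of the predictive-model check described above. It fits response time against load from earlier sprint runs with ordinary least squares and flags a new result that strays too far from the prediction. Three simplifying assumptions apply: the relationship is linear, load (for example, requests per second) is the only input, and the tolerance is 25%. Response time usually turns non-linear near saturation, so the fit should only be trusted within the load range it was trained on.

```python
def fit_line(loads, response_times_ms):
    """Ordinary least-squares fit of response time = slope * load + intercept."""
    n = len(loads)
    if n < 2 or n != len(response_times_ms):
        raise ValueError("need at least two paired observations")
    mean_x = sum(loads) / n
    mean_y = sum(response_times_ms) / n
    sxx = sum((x - mean_x) ** 2 for x in loads)
    if sxx == 0:
        raise ValueError("loads must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(loads, response_times_ms))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict(model, load):
    """Predicted response time (ms) for the given load."""
    slope, intercept = model
    return slope * load + intercept


def is_anomalous(model, load, actual_ms, tolerance=0.25):
    """Flag a result deviating from the prediction by more than `tolerance` (a fraction)."""
    expected = predict(model, load)
    return abs(actual_ms - expected) > tolerance * expected
```

With a baseline of (100, 210 ms), (200, 260 ms), (300, 310 ms), and (400, 360 ms), the model predicts 285 ms at a load of 250. An actual 400 ms would be flagged; 300 ms would not.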

Continuous Performance Testing Strategy

It has been observed that 70-80% of performance defects do not actually require full-scale performance testing in a performance lab. These defects can be found with a single-user or small-scale load test in a lower environment, provided a good APM tool is used to profile and record execution statistics at each stage of the SDLC. Because Agile aims at progressive elaboration, a continuous performance validation approach is needed to expand test coverage progressively over multiple iterations. The continuous performance testing approach consists of three distinct phases:

Shift left enables teams to do the right level of load testing while the build is being developed. The goal of the shift-left approach is to find and fix code-related performance defects while development is still in progress.

- Make sure all features have performance success criteria alongside their functional requirements.

- Make sure basic performance best practices are followed, such as setting timeouts at each interaction level:

  - API/service calls

  - DB calls

- Make a unit performance test of each component part of the “task completion” definition.

- Use a good APM tool, such as Dynatrace, AppDynamics, or New Relic, in all environments.

- Automatically analyze performance data from unit and automated system tests and trend it build by build (behind-the-scenes performance analysis).

- Create a self-service job that runs component-level or API-level performance tests on demand and as part of the build pipeline.

- Include performance thresholds in the build success criteria. For isolated or component-level tests, these thresholds should be lower than the full-scale targets. Developers can use these jobs to gain performance insight quickly without waiting for a full-scale load test by the performance engineering team.

- Include performance monitoring or telemetry changes for operations in the development phase.
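The build-success threshold described above can be sketched as a small gate that a CI job runs after a component-level test. The endpoint names, the threshold values, and the `{endpoint: [latencies in ms]}` input shape are all hypothetical. In practice, the samples would be parsed from the load tool's results file, and the thresholds would come from each feature's performance success criteria.

```python
# Hypothetical p95 limits (ms) for isolated, component-level tests.
THRESHOLDS_MS = {"/search": 300.0, "/checkout": 500.0}


def p95(samples):
    """Nearest-rank 95th percentile of a non-empty list of latencies."""
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), avoids float rounding
    return ordered[rank - 1]


def evaluate(results, thresholds=THRESHOLDS_MS):
    """Return violation messages; an empty list means the gate passes."""
    violations = []
    for endpoint, limit_ms in thresholds.items():
        samples = results.get(endpoint)
        if not samples:
            violations.append(f"{endpoint}: no samples recorded")
            continue
        observed = p95(samples)
        if observed > limit_ms:
            violations.append(f"{endpoint}: p95 {observed:.0f} ms > {limit_ms:.0f} ms")
    return violations


def gate_exit_code(results, thresholds=THRESHOLDS_MS):
    """Print each violation and return a process exit code for the CI job."""
    violations = evaluate(results, thresholds)
    for message in violations:
        print(f"PERF GATE FAIL: {message}")
    return 1 if violations else 0
```

A pipeline step would end with `sys.exit(gate_exit_code(results))`, so a non-zero exit fails the build just as a failing unit test would. Missing samples count as a failure, so a broken test run cannot pass the gate silently.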

PT phase (performance testing in the performance environment): The performance environment should be used to evaluate changes that need full-scale performance testing, such as:

- Infrastructure upgrades

- New components that need scalability and capacity assessment before production release

- Changes in the technology stack, such as moving search from Solr to Elasticsearch

- Database migrations

- Holiday- or peak-season-specific features, or holiday-readiness performance validation

These tests are the same as a traditional performance test exercise, but the activities need to be automated, for example with Jenkins or GoCD pipelines. Using machine learning for performance analysis, data trending, and anomaly detection can cut performance analysis effort by more than 50%.

Shift right enables performance testing in production. Because Agile teams do not run a full-scale performance test in a performance lab for every release, production performance testing is critical for making sure the system can handle user traffic without issues. It also helps verify how the application scales and how much infrastructure is needed for peak load. High availability and failover test cases can be executed directly in production.

Consider the following points when implementing a production performance testing process:

- The application must be designed to distinguish test traffic from real user traffic based on a cookie or header value. For test traffic, the application can invoke virtualized/stub endpoints so that the test does not create data in backend systems.

- Newer testing methodologies, such as A/B testing, must be evaluated for gauging performance.

- New features/changes are made visible only to a certain set of users, and that percentage is increased gradually if no issues appear.

- If issues are found, either the load is not increased further or the new features are pulled back.

- Tests must be designed to mimic real user patterns in terms of:

  - User session patterns

  - Data

  - Geolocation

  - Mobile vs. browser vs. mobile app traffic vs. IoT traffic (e.g., Amazon Alexa/Google Home or location-specific push/pull traffic)

- Select a load test tool that supports:

  - Dynamic scaling of load

  - Load generation from various locations and platforms

- Security rules may need to be updated to whitelist the load generator IPs, so that test load is accepted only from a limited set of IP addresses.

- Collaboration with operations and external teams is another essential aspect.
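The first and third points above, routing test traffic to stubs and exposing a change to a growing percentage of users, can be sketched as two small routing functions. The `X-Perf-Test` header name, the backend labels, and hashing the user ID into 100 buckets are illustrative assumptions, not a specific framework's API.

```python
import hashlib

TEST_TRAFFIC_HEADER = "X-Perf-Test"  # hypothetical header set only by load generators


def is_test_traffic(headers):
    """True when the request carries the synthetic-load marker header."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {name.lower(): value for name, value in headers.items()}
    return normalized.get(TEST_TRAFFIC_HEADER.lower()) == "true"


def backend_for(headers):
    """Send synthetic load to a virtualized stub so it never writes real data."""
    return "stub-backend" if is_test_traffic(headers) else "real-backend"


def in_rollout(user_id, rollout_pct):
    """Deterministically decide whether a user sees the new feature.

    Each user hashes to a stable bucket in [0, 100). Raising rollout_pct only
    ever adds users, so the exposed group grows gradually without reshuffling.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return bucket < rollout_pct
```

Hashing instead of random sampling means a user keeps the same experience across requests, and pulling the feature back is simply a matter of setting `rollout_pct` to 0.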

Live Monitoring

- Set up the right tools, alerts, and dashboards to monitor the test and analyze its impact on real user traffic live.

- An automated framework that uses machine learning to monitor patterns, detect anomalies by comparing data sets with known patterns, and predict performance as the load is increased dynamically will greatly reduce test analysis time. It also lowers the chance of impacting real users.

- Stop the test if the real user experience degrades below the predefined threshold.
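The stop condition in the last point can be automated with a guard that watches a real-user metric, here the p90 response time per monitoring interval. The guard signals an abort only when several recent intervals breach the threshold, so a single noisy sample does not end the test. The 800 ms threshold and the 3-of-5 window are hypothetical values, and the metric feed is assumed to come from the APM or real-user monitoring tool.

```python
from collections import deque


class RealUserGuard:
    """Signal a test abort when real-user latency keeps breaching a threshold."""

    def __init__(self, threshold_ms, window=5, breaches_to_abort=3):
        self.threshold_ms = threshold_ms
        self.breaches_to_abort = breaches_to_abort
        # Rolling record of the last `window` intervals: True means "breached".
        self.recent = deque(maxlen=window)

    def observe(self, real_user_p90_ms):
        """Record one monitoring interval; return True if the test should stop."""
        self.recent.append(real_user_p90_ms > self.threshold_ms)
        return sum(self.recent) >= self.breaches_to_abort
```

The load controller would call `observe()` once per interval and stop ramping up (or shut the test down) as soon as it returns `True`.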

Post-test activities

- Data cleanup, if any

- Test impact analysis and recommendations

- Communication to all stakeholders about test findings and next steps

The benefits of proactive, continuous performance testing and engineering using the shift-left and shift-right approaches include:

- Performance issues and bottlenecks are identified in early phases, which allows fast resolution at lower cost. This is a proactive rather than a reactive approach.

- Application performance status is known along with functionality, even before actual performance testing in a dedicated performance environment.

- The root cause of a performance issue is easier to identify.

- Performance issues can be fixed at the code level during the development stage.

- The development team gets adequate time to fix performance issues and optimize code.

- Progress can be monitored at any time through the product and sprint backlogs.

- Software is delivered with better quality and in a relatively shorter time.

PT&E Team Involvement

Performance testing takes higher precedence in Agile, and the goal should be to test performance early and often. The PT&E team therefore needs to be involved right from inception. The following table shows a typical RACI matrix for an Agile implementation.

| Key Activity | Business Owner(s) | Program/Portfolio Manager | Sponsor | PT&E Team |
|---|---|---|---|---|
| Identify all the stakeholders | C | A, R | S | C |
| Collect inputs based on business needs, market drivers, and feedback from end users | C | A, R | C | |
| Performance engineering best practices, guidelines, goals, approach/strategy | C | C | A, R | |
| Assess current infrastructure capacity and performance | C | C | A, R | |
| Review and update existing workload models or create new ones as needed | C | C | A, R | |
| Performance prototyping and tool POCs | C | C | A, R | |
| Define performance KPIs at the feature level | C | C | A, R | |
| Active review of intermediate design | C | A, R | C | |
| Code review from a performance POV | C | A, R | C | |
| Automate performance test activities | C | C | A, R | |
| Performance test framework integration with the build server | C | C | A, R | |
| Component performance testing | C | C | A, R | |
| Code profiling and debugging | C | C | A, R | |
| Performance test design | C | C | A, R | |
| Performance test execution | C | C | A, R | |
| Performance monitoring changes: telemetry requirements, dashboard updates, incident and alert definitions for performance KPIs | C | C | A, R | |
| Production performance analysis and finding potential issues/hot spots | C | C | A, R | |
| Planning to execute performance tests in production, if applicable | C | C | A, R | |

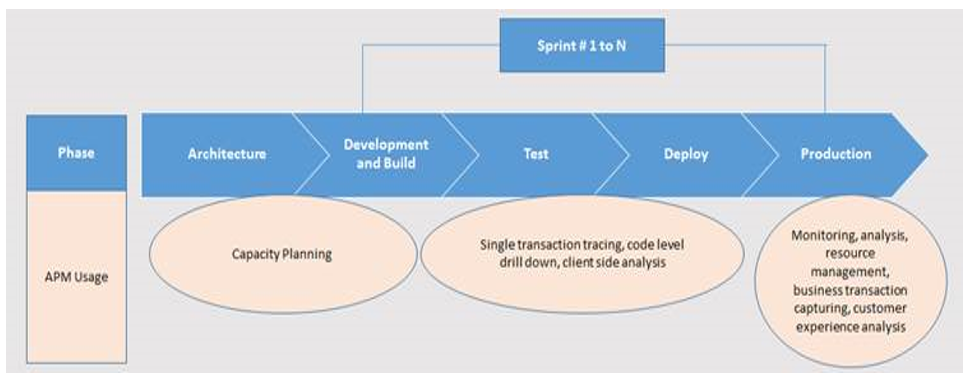

Extensive Usage of APM tools

The success of any Agile implementation is dependent on the right choice of the toolset, APM tools being the key ones. There are multiple ways in which APM tools can solve Agile challenges:

- Isolate and fix performance issues in the coding phase at the sprint level

- Analyze performance trends before and after code deployment at the sprint level

- Continuous synthetic monitoring

- Increased online collaboration between dev and test teams: tracing sessions and pinpointing exact issues minimizes the need for detailed triage meetings in war rooms

- Consistent metrics for analyzing performance issues across environments, accessible to development teams, testing teams, and business users at all times
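The before/after comparison in the second bullet can be sketched as a build-over-build check on per-transaction p90 response times exported from the APM tool. The transaction names, the choice of p90, and the 10% tolerance are assumptions for illustration only.

```python
def find_regressions(baseline_p90_ms, candidate_p90_ms, tolerance_pct=10.0):
    """Return {transaction: % increase} for transactions slower beyond tolerance."""
    regressions = {}
    for name, before_ms in baseline_p90_ms.items():
        after_ms = candidate_p90_ms.get(name)
        if after_ms is None or before_ms <= 0:
            continue  # not measured in both builds; nothing to compare
        change_pct = (after_ms - before_ms) / before_ms * 100.0
        if change_pct > tolerance_pct:
            regressions[name] = round(change_pct, 1)
    return regressions


def new_transactions(baseline_p90_ms, candidate_p90_ms):
    """Transactions that appear only in the new build and so have no trend yet."""
    return sorted(set(candidate_p90_ms) - set(baseline_p90_ms))
```

Comparing sprint N against sprint N-1 this way turns the APM data into a short list of transactions to look at, instead of a dashboard to eyeball.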

The following diagram depicts how APM tools help in every Agile lifecycle stage:

Performance Risk Assessment

User story performance risk assessment helps identify not only the key user stories that should be performance tested but also those that can be given lower priority. The following table indicates the parameters that determine the probability and impact of failure. The risk factor is the product of the weighted probability and the weighted impact (for example, 5 × 4 = 20 for User Story #1). The higher the risk factor, the higher the priority:

| Probability/Impact | Parameter | User Story #1 | User Story #2 |
|---|---|---|---|
| Factor #1 | Degree of change from the previous sprint | 1 | 1 |
| Factor #2 | Stringent performance SLA | 5 | 4 |
| Factor #3 | No. of components impacted | 4 | 4 |
| Factor #4 | Any regulatory requirement | 3 | 3 |
| Factor #5 | Heavy customizations and complex business logic | 5 | 4 |
| Factor #6 | Technical complexity | 5 | 5 |
| Weighted Probability | Probability | 5 | 4 |
| Factor #1 | Is this a strategic change? | 1 | 2 |
| Factor #2 | Business importance and criticality | 5 | 2 |
| Factor #3 | No. of users impacted | 4 | 3 |
| Factor #4 | Public visibility and impact on the brand | 4 | 3 |
| Weighted Impact | Impact of failure | 4 | 2 |
| Risk Factor | Risk factor | 20 | 8 |
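The scoring in the table can be sketched as a weighted mean of the probability factors times a weighted mean of the impact factors. The article does not state how the factors are weighted, so the sketch defaults to equal weights. With equal weights, User Story #1 scores about 13.4 and User Story #2 scores 8.75, not the table's 20 and 8, though the priority order is the same. The `UserStory` type and any weights passed in are illustrative assumptions.

```python
from dataclasses import dataclass


def weighted_score(scores, weights=None):
    """Weighted mean of 1-5 factor scores; equal weights when none are given."""
    if weights is None:
        weights = [1.0] * len(scores)
    if len(weights) != len(scores) or sum(weights) <= 0:
        raise ValueError("need one weight per score, with a positive total")
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


@dataclass
class UserStory:
    name: str
    probability_factors: list  # e.g. degree of change, SLA stringency, ...
    impact_factors: list       # e.g. business criticality, users impacted, ...

    def risk_factor(self, prob_weights=None, impact_weights=None):
        # Risk = weighted probability x weighted impact, as in the table above.
        return (weighted_score(self.probability_factors, prob_weights)
                * weighted_score(self.impact_factors, impact_weights))


def prioritize(stories, prob_weights=None, impact_weights=None):
    """Order stories from highest to lowest risk factor."""
    return sorted(stories,
                  key=lambda s: s.risk_factor(prob_weights, impact_weights),
                  reverse=True)
```

Feeding in the factor scores from the table, for example `UserStory("User Story #1", [1, 5, 4, 3, 5, 5], [1, 5, 4, 4])`, ranks User Story #1 ahead of User Story #2.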

Right Skillset

In an Agile setting, performance engineers need to be good developers with expertise in data science tools, so they can apply ML, automate tasks, analyze data, and derive useful insights from it.

| Skillset | Details |
|---|---|
| Ability to see the bigger picture | A performance engineer needs to understand business requirements, priorities, and impact. |
| Strong technical and business acumen | A performance engineer should be able to translate business requirements into technical features and understand the business benefits that improved performance can deliver, e.g., customer retention and increased revenue. |
| Coding experience | A performance engineer needs development experience, with a good understanding of architecture and infrastructure components. |
| Continuous improvement mindset | Because the key objective of every release is better performance, a performance engineer needs to look for continuous improvement opportunities in both application performance and the overall process. |

Conclusion

Continuous performance assurance to improve application quality with reduced cycle times is essential in Agile environments. This can be achieved by embedding APM tools leveraging next-generation analytics technologies, service virtualization tools, testing early and often, continuous intelligent automation, and deploying robust gating criteria for each lifecycle phase.

Opinions expressed by DZone contributors are their own.
