Tips for Running the TICK Stack Using Docker

Learn how to run the TICK Stack in Docker containers, which use OS-level virtualization to isolate your applications.

Docker? Docker Docker Docker.

While the Docker buzz has faded a bit, replaced by new words like "Kubernetes" and "Serverless," there is no arguing that Docker is the default toolchain for developers looking to get started with Linux containers, as it is fairly ubiquitous and tightly integrated with a variety of platforms.

Linux containers are an abstraction built from several pieces of underlying Linux functionality like namespaces and cgroups, which together provide a type of OS-level virtualization; to your applications, it seems like each one of them is running alone on its own copy of the OS. As a result, Docker provides a variety of benefits over running software directly on a host machine; it isolates your applications from the rest of your system and from each other, and makes it easier to deploy applications across a variety of operating systems. In general, it just keeps things clean and tidy and well-partitioned.

Personally, I run Docker on the desktop and deploy as many of my applications there as possible. Not only does it keep cruft off my host, but I often need to bring up a new copy of the stack to test a particular issue, develop a new feature, or present a demo, which Docker makes exceptionally easy.

I also have an instance of the TICK Stack running 24/7 on a Raspberry Pi, collecting data from various sensors around my apartment. Docker makes it easy to keep my system clean and to deploy and upgrade software.

Linux containers and Docker are great tools that should be part of every developer's arsenal. So how can we use them to run the TICK Stack?

The Pieces

You'll need Docker and Docker Compose installed on your local machine. The Docker website has documentation for installation on macOS and Windows, both of which include Docker Compose as part of the install. There are also instructions for several varieties of Linux in the Docker CE installation documentation. On Linux, Docker Compose is a separate install.

Since Docker is an implementation of Linux containers, it requires a Linux OS to run. When you install Docker for Windows or macOS, it will set up and manage a virtual machine with the Linux kernel which will run all of your containers. On macOS, the virtual machine is set up by using HyperKit, while on Windows virtualization is provided by Hyper-V. Unfortunately, Hyper-V is only supported on Windows 10 Enterprise, Professional, and Education; users running Windows Home will need to use the legacy Docker Toolbox.

You can find official images on the Docker hub for the full TICK Stack: Telegraf, InfluxDB, Chronograf, and Kapacitor. It can sometimes take a little longer for images to show up there compared to how quickly we can publish them as packages on our downloads page, so if time is of the essence you might want to maintain your own pipeline for building Docker images, but that is outside the scope of this post.

Running Containers

There are a few ways to interact with Docker and manage your containers. The first is using the docker executable to issue commands like docker run and docker stop. The second, which we'll cover later in this post, is using Docker Compose.

If you followed the installation instructions for Docker through to the end, you should have already launched the hello-world container using the following command:

$ docker run hello-world

When running more complex software, we often have to provide additional arguments to the docker run command in order to configure the container environment. This can include exposing ports in the container to the Docker host, or mounting volumes for persistent storage.

Docker Networks

First, though, we're going to set up a new Docker network. Docker comes with a built-in network that all containers are attached to by default, but creating a new network for our TICK deployment will keep them isolated while allowing them to communicate with one another. Isolation is good for a number of things, but this can be especially helpful for bringing up multiple instances of the stack for testing or development. You can read more about the differences between the default network and a user defined one in the documentation.

We'll create a new network using the following command:

$ docker network create --driver bridge tick-net

This creates a new network using the bridge driver and gives it the name tick-net.
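Running the create command a second time fails because the name is already taken, which gets in the way when the setup is scripted. A minimal sketch of an idempotent version follows; the ensure_network helper is a hypothetical name introduced here for illustration, and it relies on docker network inspect returning a non-zero exit status for a network that does not exist.

```shell
# Create a user-defined bridge network only if it does not already exist.
# ensure_network is a hypothetical helper, not part of Docker itself.
ensure_network() {
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  else
    docker network create --driver bridge "$1" >/dev/null
    echo "network $1 created"
  fi
}

# Usage (requires a running Docker daemon):
#   ensure_network tick-net
```

Calling it before every docker run keeps a setup script safe to re-run.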

When we execute our docker run command to start the container, we'll add the following argument to connect it to our newly created network:

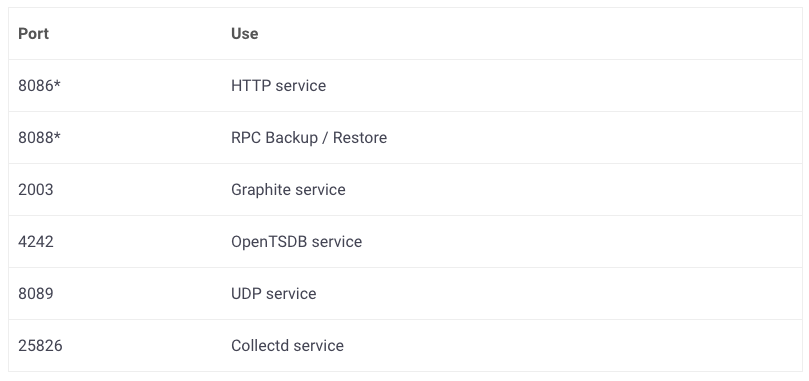

--network tick-net

We'll also want to expose a number of ports from the container to the Docker host, so we can communicate with the applications in our containers from the outside world. We'll use InfluxDB as an example. The database communicates over six different ports, two of which are enabled by default: 8086, which serves the HTTP API, and 8088, which is used for RPC by the backup and restore tools.

We’re not going to be running any of the disabled services, which provide additional methods of writing to InfluxDB, but we want to make the other two available. We’ll do this using the publish flag, --publish or -p, and add the following two lines to our docker run command:

-p 8086:8086
-p 8088:8088

Persistent Storage

Next up, we'll need to make some decisions about where we want our configuration files to live, and where we're going to store container data for those containers that need it.

Continuing to build out our InfluxDB run command, the database expects that it will have access to two file locations on Linux, /var/lib/influxdb, where it stores its data, and /etc/influxdb, where it looks for its configs. It's possible to customize these locations, but for us the defaults are good enough.

We're going to mount local folders into those locations in the container by adding the volumes flag, --volume or -v, to docker run. If you wanted, you could let Docker manage a volume for you, but binding a folder on the local machine ensures that we'll be able to access the data from our host OS.
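It can help to create the host folders before the first run, so that they belong to your own user rather than being created on demand by Docker. The sketch below assumes a base folder of $HOME/influxdb; prepare_influxdb_dirs is a hypothetical helper, and the two container paths are the defaults mentioned above.

```shell
# Create the host folders that will be bind-mounted into the container.
# The base path is a placeholder; pass whatever location suits your machine.
prepare_influxdb_dirs() {
  base="${1:-$HOME/influxdb}"
  mkdir -p "$base/data" "$base/config"
  # Print the matching volume arguments for docker run.
  echo "-v $base/data:/var/lib/influxdb -v $base/config:/etc/influxdb"
}

# Usage:
#   prepare_influxdb_dirs "$HOME/influxdb"
```

The printed arguments assume a base path without spaces; quote each path yourself if yours contains any.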

The bind points on the Docker host will vary based on the machine you're running on. For the sake of example, we'll pretend we have a folder in the fprefect user's home directory called influxdb, and two subfolders within that called data and config. We'll provide the following arguments to mount those two folders inside the container:

-v /Users/fprefect/influxdb/data/:/var/lib/influxdb
-v /Users/fprefect/influxdb/config:/etc/influxdb

Putting It All Together

We're just about ready to fire up our first container. We just need to add a few more things to our docker run command. We're going to use the -d flag to start the container in "detached" mode, which runs it in the background, and we're going to give it a name with the --name parameter. Finally, we'll run the most recent version of the influxdb container, tagged 1.5.4.

Here's what it looks like all together:

$ docker run -d \
  --network tick-net \
  -p 8086:8086 \
  -p 8088:8088 \
  -v /Users/fprefect/influxdb/data/:/var/lib/influxdb \
  -v /Users/fprefect/influxdb/config:/etc/influxdb \
  --name influxdb \
  influxdb:1.5.4

Go ahead and run that at the command line. If it succeeds, it should return a unique hash that identifies the running container.
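A returned hash only tells us that the container was started, not that the database inside it is accepting connections yet. One way to check, sketched below, is to poll InfluxDB's /ping endpoint, which answers with HTTP status 204 once the HTTP API is up. The wait_for_influxdb helper, its default URL, and its retry count are assumptions made for this example, and it expects curl to be installed on the host.

```shell
# Poll InfluxDB's /ping endpoint until it answers with HTTP 204.
# Arguments: base URL (default http://localhost:8086), max attempts (default 30).
wait_for_influxdb() {
  url="${1:-http://localhost:8086}"
  attempts="${2:-30}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code
    code="$(curl -s -o /dev/null -w '%{http_code}' "$url/ping" || true)"
    if [ "$code" = "204" ]; then
      echo "InfluxDB is ready after $i attempt(s)"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "InfluxDB did not respond at $url" >&2
  return 1
}

# Usage:
#   wait_for_influxdb http://localhost:8086 30
```

This works from the host because port 8086 was published with -p.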

Operating Containers

Now that we have a running container, let's talk about some additional operational details. The first thing we generally need to check is whether our containers are running. For this, we can use the docker ps command:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d20b788291ea influxdb:1.5.4 "/entrypoint.sh infl…" 3 seconds ago Up 1 second 0.0.0.0:8086->8086/tcp, 0.0.0.0:8088->8088/tcp influxdb

Great! Our container is up and running. But maybe it's not behaving like we expect? We might want to dig in and take a look at the logs. We can get those using the docker logs command and providing the name of the container. We'll also add a --tail=5 argument to limit the output to the last 5 lines. Without this, we'd get all the logs that have been output since the program launched, which could be a lot!

$ docker logs influxdb --tail=5

ts=2018-06-28T21:43:26.862912Z lvl=info msg="Compacted file" log_id=08z5Suul000 engine=tsm1 tsm1_strategy=full tsm1_optimize=false trace_id=08z5T3NG000 op_name=tsm1_compact_group tsm1_index=0 tsm1_file=/var/lib/influxdb/data/_internal/monitor/4/000000005-000000002.tsm.tmp

ts=2018-06-28T21:43:26.863008Z lvl=info msg="Finished compacting files" log_id=08z5Suul000 engine=tsm1 tsm1_strategy=full tsm1_optimize=false trace_id=08z5T3NG000 op_name=tsm1_compact_group groups=2 files=1 duration=49.357ms

ts=2018-06-28T21:43:26.863071Z lvl=info msg="TSM compaction (end)" log_id=08z5Suul000 engine=tsm1 tsm1_strategy=full tsm1_optimize=false trace_id=08z5T3NG000 op_name=tsm1_compact_group op_event=end op_elapsed=49.407ms

ts=2018-06-28T21:43:40.037174Z lvl=info msg="Post http://a7a2fee5bc09:80/write?consistency=&db=_internal&precision=ns&rp=monitor: dial tcp: lookup a7a2fee5bc09 on 127.0.0.11:53: no such host" log_id=08z5Suul000 service=subscriber

ts=2018-06-28T21:43:50.024014Z lvl=info msg="Post http://a7a2fee5bc09:80/write?consistency=&db=_internal&precision=ns&rp=monitor: dial tcp: lookup a7a2fee5bc09 on 127.0.0.11:53: no such host" log_id=08z5Suul000 service=subscriber

Everything looks good with the InfluxDB container, so let's see if we can connect to the database using the Influx CLI. We could connect using a copy of the CLI on our local host, but what if we wanted to launch the CLI within the container itself?

We can do this with docker exec, which runs a command inside a running container; the -i and -t flags keep STDIN open and allocate a pseudo-TTY so that we get an interactive session. So let's execute the influx CLI within our influxdb container:

$ docker exec -it influxdb influx

One thing you might notice, though, is that the arrow keys don't work as they're supposed to within the CLI. When pressing the up arrow, for example, ^[[A is printed to the screen. This is an issue with the way the influx CLI detects and interacts with the terminal environment. If you execute a bash shell first, however, and then run the influx CLI, the arrow keys should work as expected:

$ docker exec -it influxdb /bin/bash

root@f7f7292006d0:/# influx

Connected to http://localhost:8086 version 1.5.4

InfluxDB shell version: 1.5.4

>

Let's stop and delete our container before moving on to the next section. You can use the docker stop and docker rm commands, along with either the unique hash that identifies the container, or the container name, as follows:

$ docker stop influxdb

influxdb

$ docker rm influxdb

influxdb

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Docker Compose

Docker Compose is "a tool for defining multi-container Docker applications." It lets us bring up multiple containers and connect them together automatically, and we can use it to help us roll out and manage the complete TICK Stack more easily. We'll start by defining the various services that are part of the TICK Stack within a docker-compose.yml file, then deploy those services using the Compose command line tool.

version: '3'
services:
  influxdb:
    image: influxdb:latest
    volumes:
      # Mount for influxdb data directory
      - ./influxdb/data:/var/lib/influxdb
      # Mount for influxdb configuration
      - ./influxdb/config/:/etc/influxdb/
    ports:
      # The API for InfluxDB is served on port 8086
      - "8086:8086"
      - "8082:8082"
  chronograf:
    image: chronograf:latest
    volumes:
      # Mount for chronograf database
      - ./chronograf/data/:/var/lib/chronograf/
    ports:
      # The WebUI for Chronograf is served on port 8888
      - "8888:8888"
    depends_on:
      - influxdb

The file also defines the mount points and ports we want to publish to our Docker host. The chronograf service has another useful section: depends_on. This ensures that the influxdb container is started before the chronograf container is launched. Note that it controls start order only; it does not wait for InfluxDB to be ready to accept connections.

To deploy these services, run docker-compose up -d (like docker run, the -d argument starts the containers in headless "detached" mode). docker-compose uses the directory where it is executed to name the various components it manages, so putting your docker-compose.yml file into a well-named directory is a good idea. For this example, we'll place our docker-compose.yml file in a directory called tick.
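If you would rather not depend on the directory name, Compose also accepts an explicit project name through its -p flag. The sketch below wraps that in two hypothetical helpers, stack_up and stack_down; the COMPOSE variable is an assumption that lets you point at a differently named Compose binary, and tickdemo is just an example project name.

```shell
# Start or stop the stack under an explicit Compose project name.
# Run these from the directory that contains docker-compose.yml.
# COMPOSE may be set to override the binary; it defaults to docker-compose.
stack_up() {
  ${COMPOSE:-docker-compose} -p "$1" up -d
}

stack_down() {
  ${COMPOSE:-docker-compose} -p "$1" down
}

# Usage:
#   stack_up tickdemo
#   stack_down tickdemo
```

Keep in mind that a second copy of the same docker-compose.yml would still compete for the same published host ports, so the port mappings need to differ if two projects are to run side by side.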

Running docker-compose up -d will do a number of things: first, it will create a new Docker network named tick_default, and then it will bring up a container for each of the services we defined, naming them tick_influxdb_1 and tick_chronograf_1 respectively.

$ docker-compose up -d

Creating network "tick_default" with the default driver

Creating tick_influxdb_1 ... done

Creating tick_chronograf_1 ... done

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

88b0263b7e74 chronograf:latest "/entrypoint.sh chro…" 11 seconds ago Up 9 seconds 0.0.0.0:8888->8888/tcp tick_chronograf_1

dcfa7a03eccb influxdb:latest "/entrypoint.sh infl…" 11 seconds ago Up 9 seconds 0.0.0.0:8082->8082/tcp, 0.0.0.0:8086->8086/tcp tick_influxdb_1

You should be able to navigate to the Chronograf GUI by visiting http://localhost:8888, and connect it to InfluxDB using the address http://influxdb:8086, which uses Docker Compose's built-in DNS resolution to connect to the influxdb container.

Getting logs functions much the same as using Docker without Compose. You can access logs from an individual service by using the docker-compose logs servicename command, substituting the name of your service for servicename. You can limit the number of logs using --tail=5, as follows:

$ docker-compose logs --tail=5 influxdb

Attaching to tick_influxdb_1

influxdb_1 | ts=2018-06-28T22:30:01.069954Z lvl=info msg="Listening on HTTP" log_id=08z87b10000 service=httpd addr=[::]:8086 https=false

influxdb_1 | ts=2018-06-28T22:30:01.070023Z lvl=info msg="Starting retention policy enforcement service" log_id=08z87b10000 service=retention check_interval=30m

influxdb_1 | ts=2018-06-28T22:30:01.070057Z lvl=info msg="Storing statistics" log_id=08z87b10000 service=monitor db_instance=_internal db_rp=monitor interval=10s

influxdb_1 | ts=2018-06-28T22:30:01.070263Z lvl=info msg="Listening for signals" log_id=08z87b10000

influxdb_1 | ts=2018-06-28T22:30:01.070313Z lvl=info msg="Sending usage statistics to usage.influxdata.com" log_id=08z87b10000

You can also get logs for all services by leaving out the service name at the end of the command:

$ docker-compose logs --tail=2

Attaching to tick_chronograf_1, tick_influxdb_1

chronograf_1 | time="2018-06-28T22:30:01Z" level=info msg="Serving chronograf at http://[::]:8888" component=server

chronograf_1 | time="2018-06-28T22:30:01Z" level=info msg="Reporting usage stats" component=usage freq=24h reporting_addr="https://usage.influxdata.com" stats="os,arch,version,cluster_id,uptime"

influxdb_1 | ts=2018-06-28T22:30:01.070263Z lvl=info msg="Listening for signals" log_id=08z87b10000

influxdb_1 | ts=2018-06-28T22:30:01.070313Z lvl=info msg="Sending usage statistics to usage.influxdata.com" log_id=08z87b10000

Executing a command is slightly easier with Docker Compose, since it will automatically assign a pseudo-TTY and give you an interactive session, but otherwise looks much like the standard Docker command:

$ docker-compose exec influxdb /bin/bash

root@dcfa7a03eccb:/#

To tear everything down, we just need to execute docker-compose down from the appropriate directory.

TICK Sandbox

InfluxData provides a "distribution" of the TICK Stack which we call the Sandbox. It provides a docker-compose.yml file with all the latest components, including those that are still in development, like Flux. It also has a pair of helper scripts to help those who aren't familiar with Docker Compose get up and running quickly. And since it uses Docker Compose, it will have its own network and containers and won't conflict with other instances of the TICK Stack that you happen to be running in Docker.

Wrapping Up

Don't forget to clean up any containers you created while following along with this blog post! Another thing to keep track of while using Docker is the container images themselves; images aren't automatically deleted, and so over time as you upgrade the versions of the software you're using, old images can accumulate and start eating up disk space. There are some scripts out there which will manage this for you, but for the most part just being aware of the potential issue and cleaning up manually every now and then will be good enough.
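For the manual route, Docker's own CLI covers the basics. The sketch below lists the images stored for one repository and then removes dangling images, meaning those left without a tag after a newer image took the tag over. The cleanup_images helper is a hypothetical wrapper introduced for this example; images that still carry a version tag, such as influxdb:1.5.4, are not dangling and would need to be removed explicitly with docker rmi.

```shell
# List stored images for one repository, then remove dangling images.
# cleanup_images is a hypothetical wrapper around two standard Docker commands.
cleanup_images() {
  docker images "$1"      # show every locally stored tag for this repository
  docker image prune -f   # delete dangling (untagged) images without prompting
}

# Usage (requires a running Docker daemon):
#   cleanup_images influxdb
```

Running docker system df before and after gives a rough idea of how much space was reclaimed.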

As always, if you have questions or comments please feel free to reach out to me on Twitter @noahcrowley.

Published at DZone with permission of Noah Crowley, DZone MVB. See the original article here.
