Understanding Prompt Injection and Other Risks of Generative AI

By prioritizing security, organizations can enhance trust, resilience, and reliability in their cloud and AI environments.

Join the DZone community and get the full member experience.

Join For FreeSecurity plays a central role in cloud computing and artificial intelligence, safeguarding data, infrastructure, and systems against cyber threats, ensuring compliance with regulations, addressing ethical considerations, and managing risk effectively. By prioritizing security, organizations can enhance trust, resilience, and reliability in their cloud and AI environments.

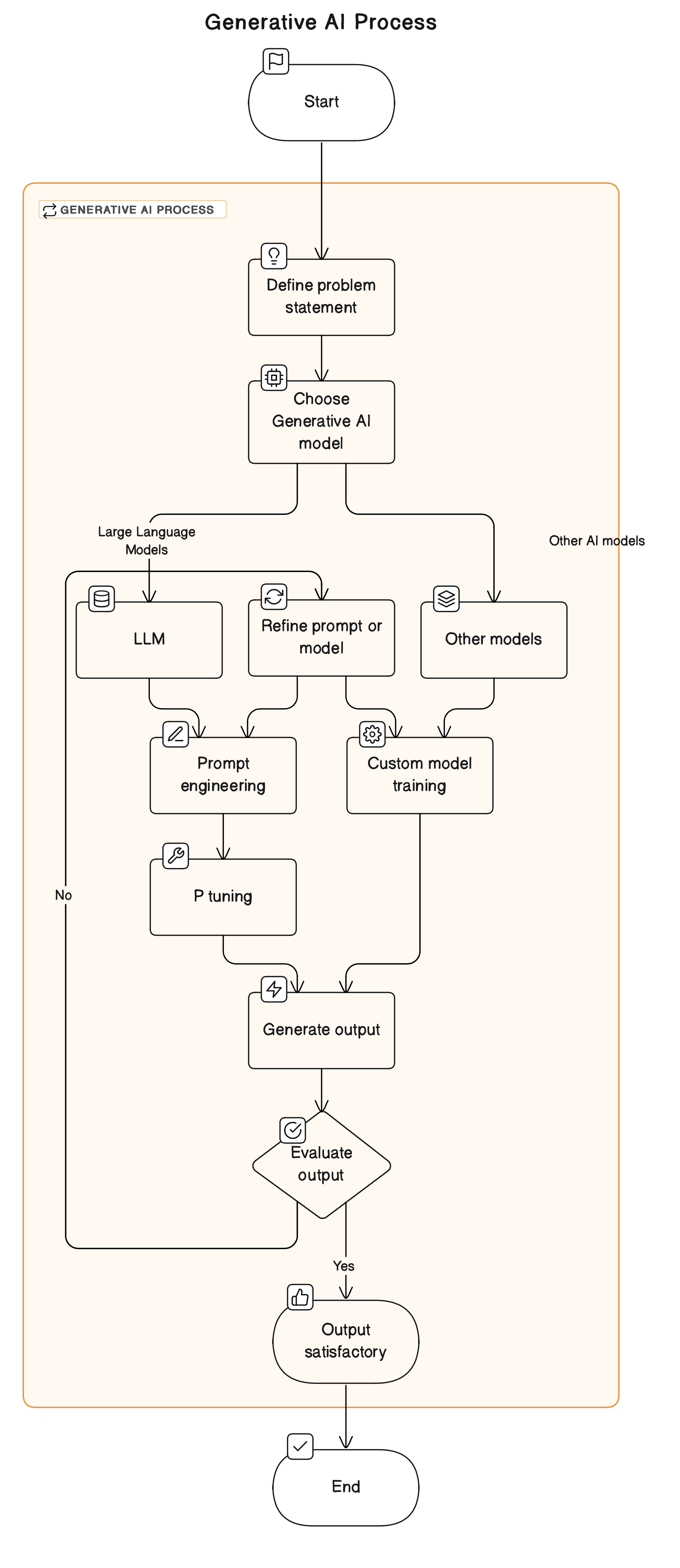

Security is not only critical in cloud environments but also in the AI world. Generative AI is the new buzzing word in the technology world today and security is the key consideration that every organization proactively thinks about before incorporating Generative AI into their products. Before jumping directly into the risks of Generative AI, you need to understand the underpinnings of Generative AI and what the Generative AI process is. Generative AI processes, particularly those involving Language Model-based methods (LLMs), often rely on prompting to generate new text. LLMs are powerful models trained on large datasets of text, such as GPT (Generative Pre-trained Transformer) models. Here's how the generative AI process works with LLMs and prompting:

If you are curious to understand the what is prompt engineering and P-tuning, here's a good resource you need to check.

Generative Artificial Intelligence (AI) has revolutionized the way we interact with technology, enabling unprecedented levels of creativity and innovation. From generating text to crafting images and music, generative AI systems have demonstrated remarkable capabilities. However, beneath the surface of this digital marvel lies a realm of potential risks and vulnerabilities that every user should be aware of. In this article, we explore one such peril — Prompt Injection — alongside other critical risks in the world of Generative AI, shedding light on the importance of user education and proactive measures for safeguarding against these threats.

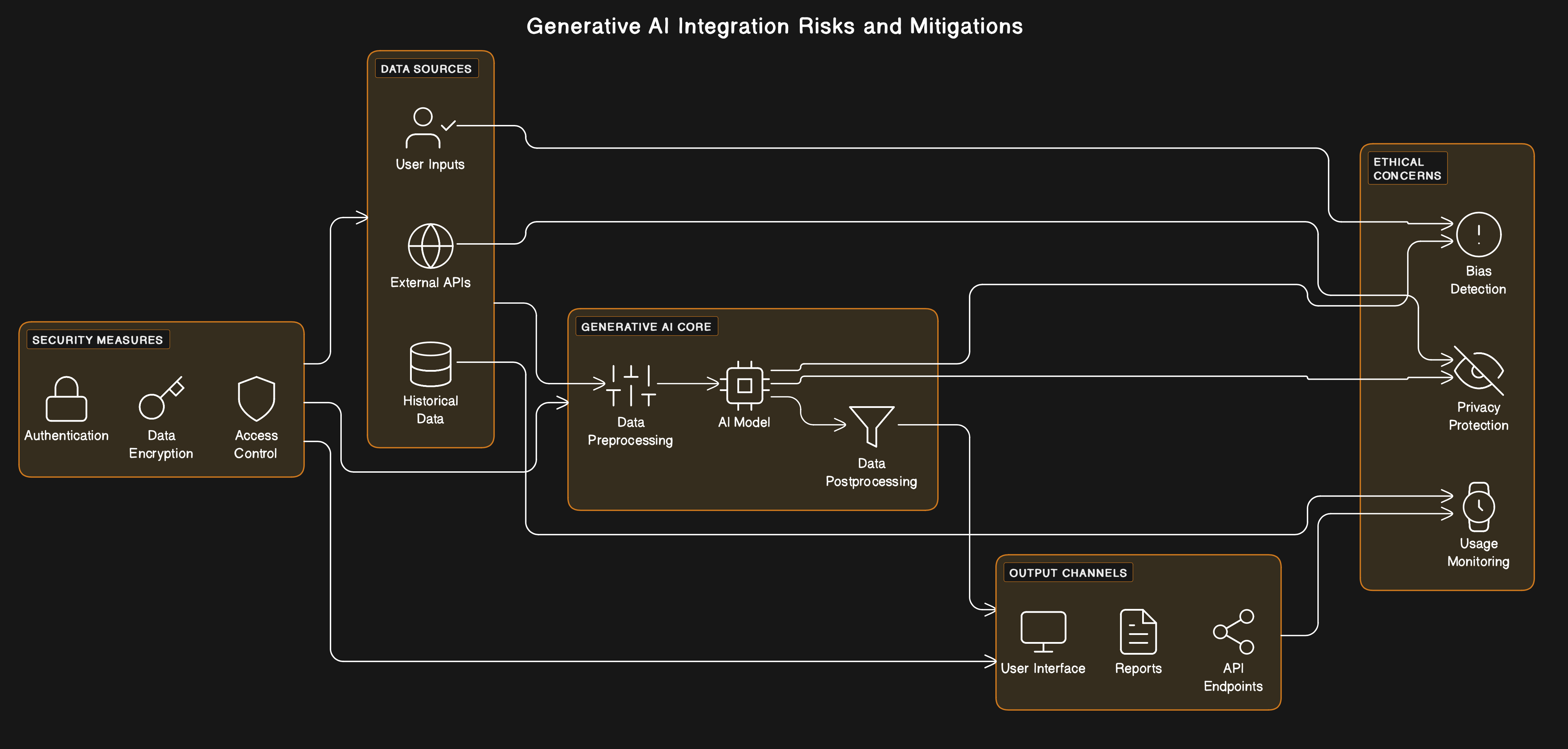

Integrating Generative AI is only one part of the equation. The architecture below shows the risks and mitigations involved with Generative AI integration.

Understanding Prompt Injection in Generative AI

Prompt Injection in the context of Generative AI refers to the manipulation of input prompts to steer the output generated by AI models in unintended or malicious directions. Unlike traditional software prompts, which primarily interact with users, Generative AI prompts serve as input cues guiding the output generated by AI models. Prompt Injection exploits this mechanism by subtly altering or appending prompts to induce AI models to produce undesirable or harmful content.

Prompt Injection in Generative AI can manifest in various forms, ranging from subtle manipulations to outright distortions of input prompts. Malicious actors may exploit vulnerabilities in AI models or their training data to craft deceptive prompts that elicit biased, offensive, or misleading outputs. Moreover, Prompt Injection can be orchestrated through social engineering tactics, enticing users to input prompts that inadvertently trigger undesirable AI responses.

One of the guides that outline the other risks and misuses of LLMs is promptingguide. Promptingguide outlines the types of prompt injection like prompt leaking, and jailbreaking with examples under the adversarial prompting in the LLMs section of the prompt engineering guide. Here's an excerpt from the guide for your quick understanding of adversarial prompting.

Adversarial prompting is an important topic in prompt engineering as it could help to understand the risks and safety issues involved with LLMs. It's also an important discipline to identify these risks and design techniques to address the issues.

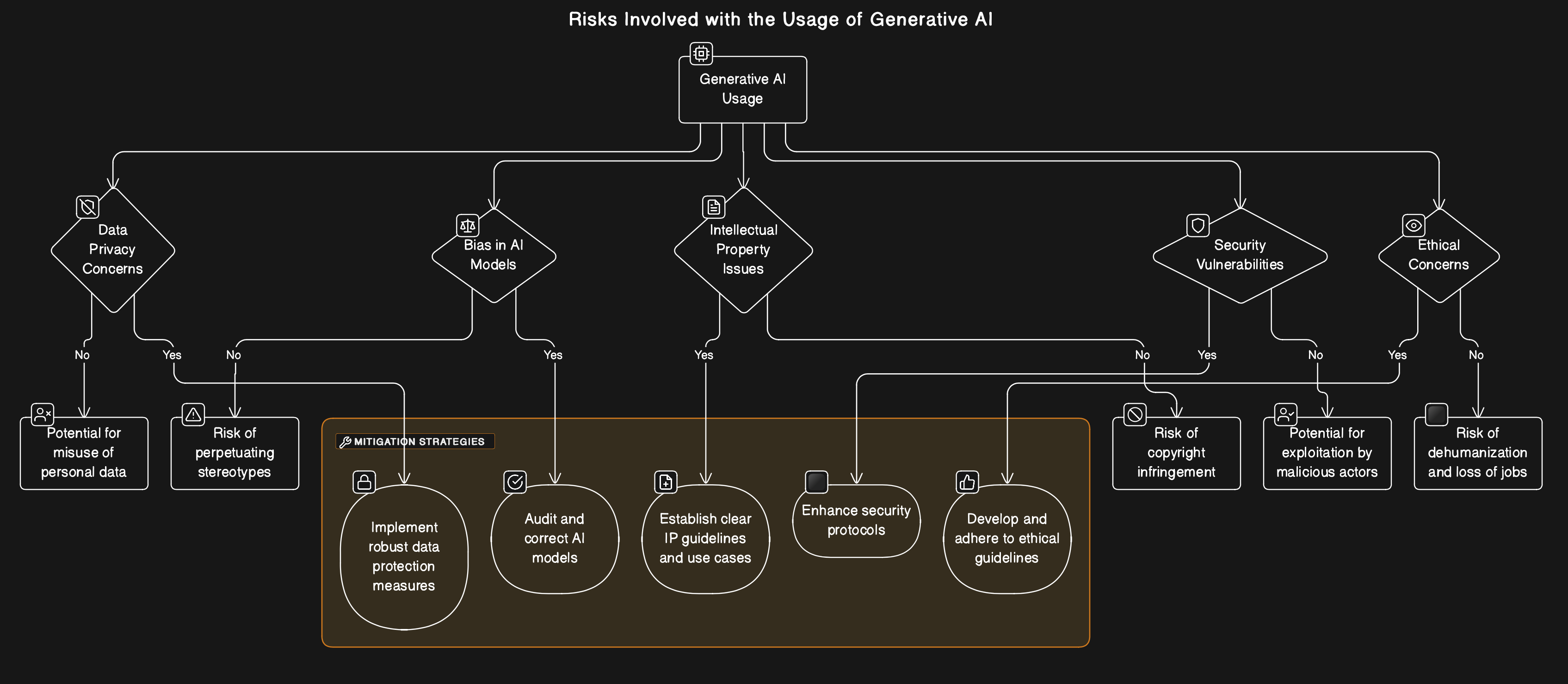

Other Risks in the Generative AI Landscape

Beyond Prompt Injection, several other risks loom in the realm of Generative AI, posing threats to individuals, organizations, and society at large. These include:

- Bias and discrimination: Generative AI models trained on biased or incomplete data may perpetuate and amplify societal biases, leading to discriminatory outcomes in generated content.

- Misinformation and manipulation: Malevolent actors can exploit Generative AI to generate fake news, forged documents, or manipulated media, undermining trust and exacerbating misinformation.

- Privacy violations: Generative AI models trained on sensitive data may inadvertently disclose personal or confidential information through generated content, compromising user privacy.

- Intellectual property infringement: Unauthorized use of copyrighted material or proprietary information in generated content can result in intellectual property disputes and legal ramifications.

Empowering Users Through Education and Awareness

In light of these risks, user education and awareness are paramount in mitigating the potential harm posed by Generative AI technologies. By fostering a deeper understanding of Prompt Injection and other vulnerabilities, users can adopt informed practices to mitigate risks and enhance their digital resilience. Key strategies include:

- Critical thinking: Encouraging users to critically evaluate the credibility and authenticity of generated content, particularly in contexts where manipulation or bias may be present.

- Ethical usage: Promoting responsible and ethical use of Generative AI technologies, emphasizing the importance of respecting privacy, avoiding misinformation dissemination, and upholding intellectual property rights.

- Technical vigilance: Empowering users with tools and resources to detect and respond to potential instances of Prompt Injection or other malicious activities in Generative AI systems.

Generative AI holds immense promise for driving innovation and creativity across diverse domains. However, with great power comes great responsibility. As users navigate the dynamic landscape of Generative AI, users must remain vigilant to the risks posed by Prompt Injection and other vulnerabilities. By fostering a culture of education, awareness, and ethical stewardship, we can harness the transformative potential of Generative AI while safeguarding against its inherent perils. In doing so, we pave the way for a more secure and resilient digital future.

Further Reading

Opinions expressed by DZone contributors are their own.

Comments