Voron & Time Series: Working with Real Data


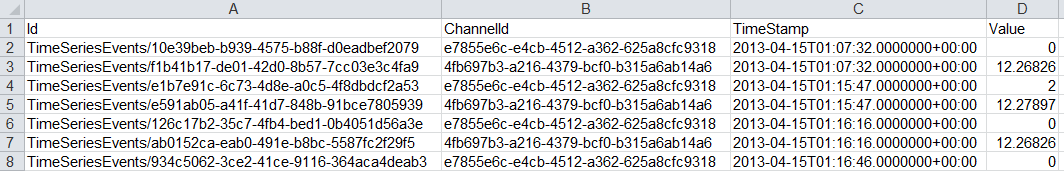

Dan Liebster has been kind enough to send me a real-world time series database. The data has been sanitized to remove identifying information, but it is actual real-world data, so we can learn a lot more from it.

This is what it looks like:

The first thing that I did was take the code in this post and try it out for size. I wrote the following:

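    // Needs: using System; using System.Diagnostics; using System.Globalization;
    //        using Microsoft.VisualBasic.FileIO;
    // `dts` is presumably the time series storage object from the earlier post.
    int i = 0;
    using (var parser = new TextFieldParser(@"c:\users\ayende\downloads\timeseries.csv"))
    {
        parser.HasFieldsEnclosedInQuotes = true;
        parser.Delimiters = new[] {","};
        parser.ReadLine(); // ignore headers
        var startNew = Stopwatch.StartNew();
        while (parser.EndOfData == false)
        {
            var fields = parser.ReadFields();
            Debug.Assert(fields != null);
            // Each Add call runs in its own transaction (one per line).
            dts.Add(fields[1],
                DateTime.ParseExact(fields[2], "o", CultureInfo.InvariantCulture),
                double.Parse(fields[3], CultureInfo.InvariantCulture));
            i++;
            if (i == 25*1000)
            {
                break; // stop after the first 25,000 events
            }
            if (i%1000 == 0)
                Console.Write("\r{0,15:#,#} ", i); // progress indicator
        }
        Console.WriteLine();
        Console.WriteLine(startNew.Elapsed);
    }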

Note that we are using a separate transaction per line, which means that we are really doing a lot of extra work. But this simulates incoming events arriving one at a time very well. We were able to process 25,000 events in 8.3 seconds, a rate of just over 3 events per millisecond (25,000 / 8,300 ms ≈ 3.01).

Now, note that we have the notion of “channels” here. From my investigation, it seems clear that some form of separation is very common in time series data. We are usually talking about sensors or some such, and we want to track data across different sensors over time. There is little, if any, call for working over multiple sensors / channels at the same time.

Because of that, I made a relatively minor change in Voron that allows it to have an unlimited number of separate trees. That means that I can use as many trees as I want, and we can model a channel as a tree in Voron. I also changed things so that instead of doing a single transaction per line, we do a transaction per 1,000 lines. That dropped the time to insert 25,000 lines from 8.3 seconds to 0.8 seconds, a full order of magnitude faster.
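The two changes described above (one tree per channel, one transaction per 1,000 lines) can be sketched roughly as follows. This is only an illustration: `ChannelStore`, `WriteBatch`, and `BatchImporter` are hypothetical names, and the store is an in-memory stand-in built on `SortedDictionary`, not Voron's actual API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in: one sorted "tree" per channel.
// This is NOT Voron's API; it only illustrates the batching pattern.
public sealed class ChannelStore
{
    private readonly Dictionary<string, SortedDictionary<DateTime, double>> _trees =
        new Dictionary<string, SortedDictionary<DateTime, double>>();

    // Number of simulated transaction commits so far.
    public int Commits { get; private set; }

    // Applies a whole batch and counts it as a single commit.
    public void WriteBatch(IReadOnlyList<(string Channel, DateTime At, double Value)> batch)
    {
        foreach (var (channel, at, value) in batch)
        {
            if (!_trees.TryGetValue(channel, out var tree))
            {
                tree = new SortedDictionary<DateTime, double>();
                _trees[channel] = tree; // create the channel's tree on first use
            }
            tree[at] = value; // last write wins for a duplicate timestamp
        }
        Commits++;
    }

    // Number of entries stored for a channel (0 if the channel is unknown).
    public int Count(string channel) =>
        _trees.TryGetValue(channel, out var tree) ? tree.Count : 0;
}

public static class BatchImporter
{
    // Groups incoming events into batches of batchSize and writes each batch at once.
    public static void Import(
        ChannelStore store,
        IEnumerable<(string Channel, DateTime At, double Value)> events,
        int batchSize = 1000)
    {
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var batch = new List<(string Channel, DateTime At, double Value)>(batchSize);
        foreach (var e in events)
        {
            batch.Add(e);
            if (batch.Count == batchSize)
            {
                store.WriteBatch(batch);
                batch.Clear();
            }
        }
        if (batch.Count > 0)
            store.WriteBatch(batch); // flush the final partial batch
    }
}
```

With a batch size of 1,000, importing 25,000 events results in 25 commits instead of 25,000, which is where the saving comes from: the per-transaction overhead is paid once per batch rather than once per line.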

That done, I inserted the full data set, which is just over 1,096,384 records. That took 36 seconds, or roughly 30,000 records per second. In the data set I have, there are 35 channels.

I just tried, and reading all the entries in a channel with 35,411 events takes 0.01 seconds. That makes it possible to do things like computing averages over time, comparing data, etc.
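As an example of the kind of query a fast channel scan makes practical, here is a minimal sketch of averaging a channel's values over fixed time buckets. It assumes the channel's entries have already been read into memory as timestamp/value pairs; `ChannelStats` and `AverageBy` are hypothetical names for illustration and are not part of Voron.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ChannelStats
{
    // Groups (timestamp, value) points into fixed-size time buckets
    // and returns the average value per bucket, keyed by bucket start.
    public static SortedDictionary<DateTime, double> AverageBy(
        IEnumerable<KeyValuePair<DateTime, double>> points, TimeSpan bucket)
    {
        if (bucket <= TimeSpan.Zero)
            throw new ArgumentOutOfRangeException(nameof(bucket));

        var result = new SortedDictionary<DateTime, double>();

        // Round each timestamp down to the start of its bucket.
        var groups = points.GroupBy(p =>
            new DateTime(p.Key.Ticks - p.Key.Ticks % bucket.Ticks, p.Key.Kind));

        foreach (var g in groups)
            result[g.Key] = g.Average(p => p.Value);

        return result;
    }
}
```

Calling `ChannelStats.AverageBy(entries, TimeSpan.FromHours(1))` on one channel's entries would give hourly averages. Because each channel lives in its own tree, a query like this only ever touches that one channel's data.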

You can see the code implementing this at the following link.

Published at DZone with permission of Oren Eini, DZone MVB. See the original article here.
