WSO2 Identity Server in OpenShift (OKD)

Take a look at this tutorial that shows how to run the WSO2 Identity Server in OpenShift.

This is an end-to-end article in which I show how to run WSO2 Identity Server in OpenShift Origin (OKD). Feel free to use Minishift if you prefer.

TL;DR

Download the OKD artifacts from the GitHub repository, follow the Docker Image section of this article to create the Docker image, and then run install.sh to deploy.

Software Needed

The following software will be used in this tutorial.

OpenShift Origin 3.9.0 (aka OKD)

Docker 17.09.1-ce

WSO2 Identity Server 5.7.0

OpenShift Installation

The OKD (OpenShift Origin) installation has two parts: installing Docker, and then installing OKD itself.

Docker Installation

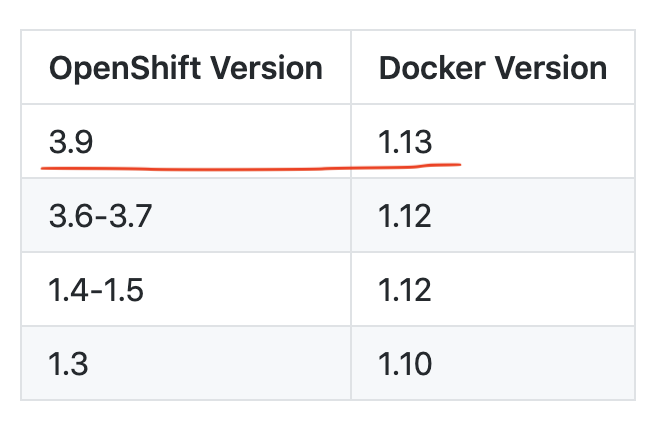

OKD needs Docker on your system. We are going to install OKD 3.9.0, for which the official documentation lists Docker 1.13 as the compatible version.

However, I tested with Docker 17.09.1 and it worked. Download Docker 17.09.1 from this page and install it on your system (I am using macOS).

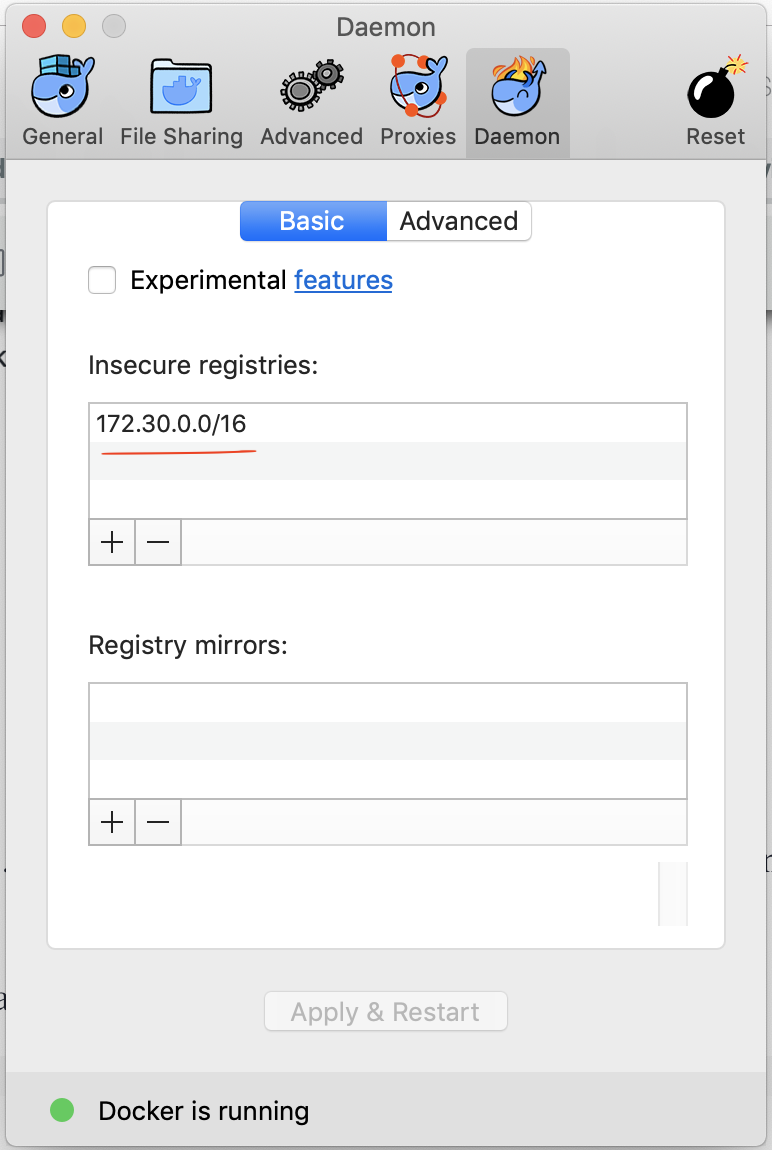

Once the installation is done, configure the Docker daemon with the insecure registry 172.30.0.0/16, as shown below.

Alternatively, you can do it in the daemon.json file of Docker. In macOS, it can be found under the following location.

/Users/anupamgogoi/.docker

Here is the content of the file.

{
  "debug" : true,
  "insecure-registries" : [
    "172.30.0.0/16"
  ],
  "experimental" : false
}

Once configured, restart Docker.

OpenShift Installation

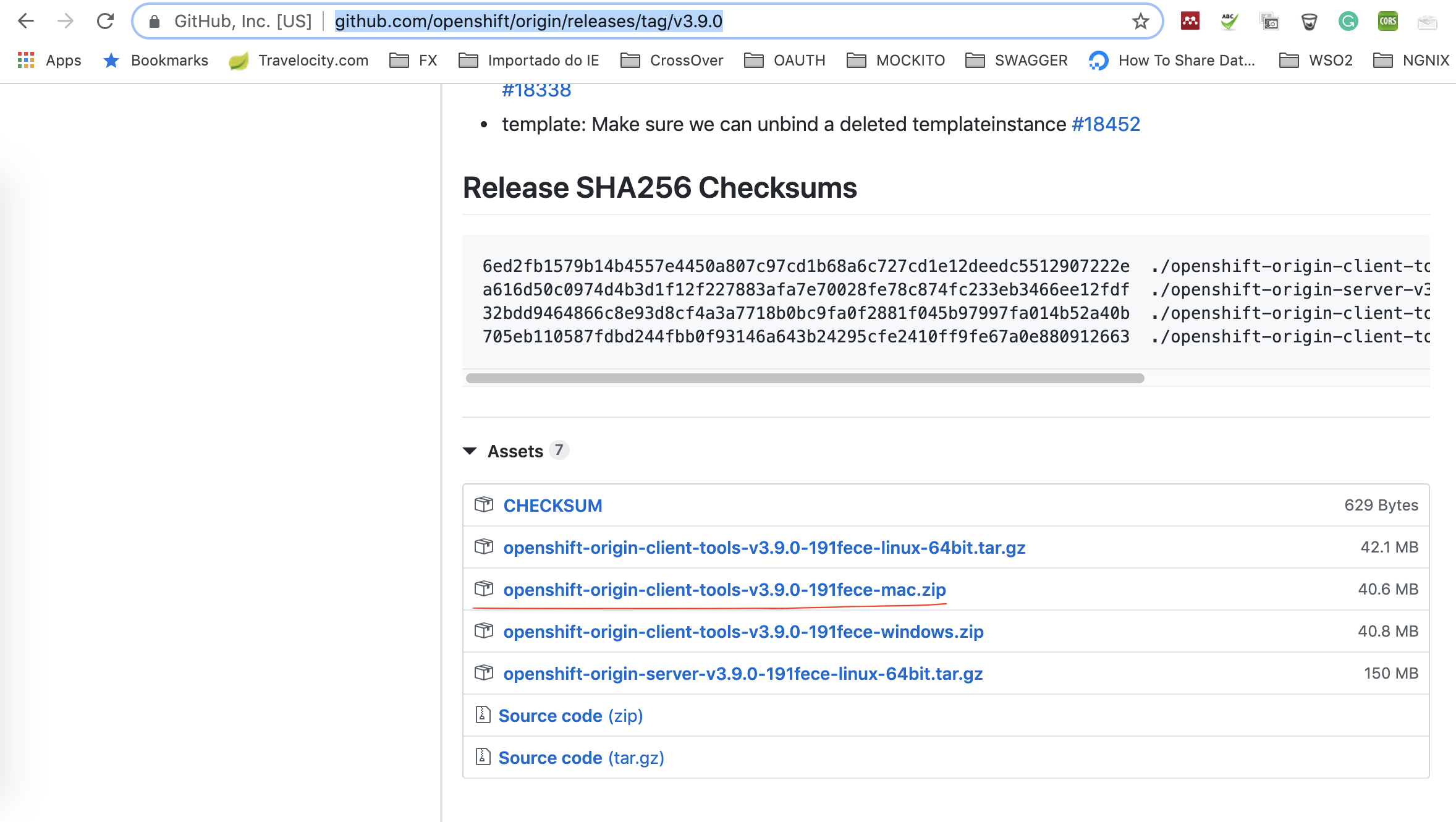

Download OKD from this link. Note that you need only the client tools as shown below.

Unzip it in some directory and you can see the oc tool.

Execute the following command to run the OKD cluster.

$ oc cluster up

For more information, please refer to the official documentation.

WSO2 Identity Server Preparation

In this section, we are going to create a Docker image for WSO2 Identity Server (WSO2 IS) and then the OpenShift deployment artifacts.

Docker Image

In this article, we are going to create a minimal Docker image for WSO2 IS. For a complete Docker image, please refer to the official WSO2 image.

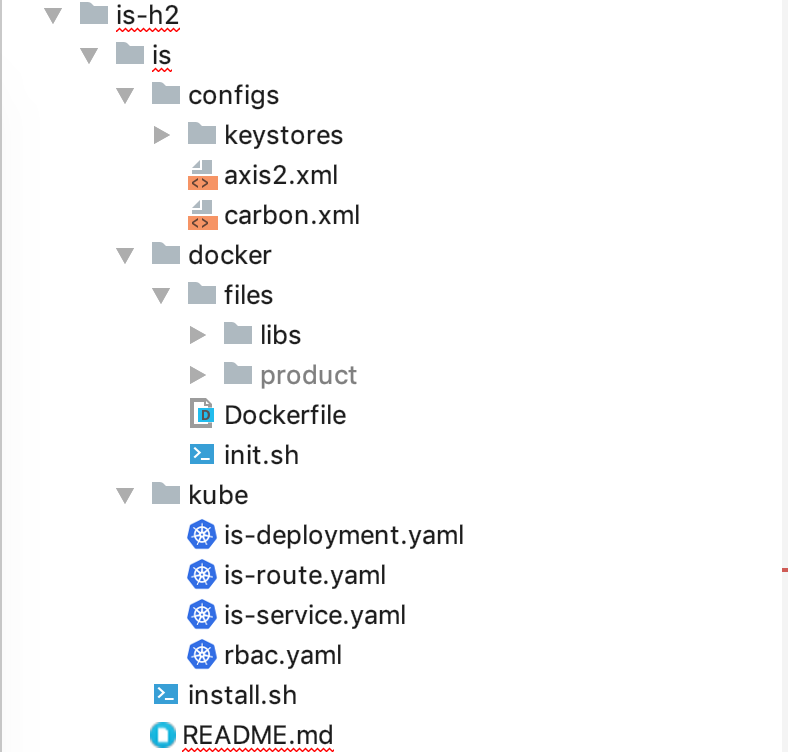

The deployment artifacts used in this article can be found under this GitHub repository. Here is the directory structure of the artifacts.

Product Preparation

Download the WSO2 Identity Server and then extract it inside the following directory.

$ is-h2/is/docker/files/product

Generating Keystore and Truststore

Navigate to the following folder, which contains the keystore (wso2carbon.jks) and the truststore (client-truststore.jks):

$ is-h2/is/docker/files/product/wso2is-5.7.0/repository/resources/security

Delete the existing wso2carbon.jks and create a new one:

$ keytool -genkey -alias wso2carbon -keyalg RSA -keysize 2048 -keystore wso2carbon.jks -storepass wso2carbon

In this example, I am using CN="*.wso2.com".
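The command above prompts for the certificate's distinguished name. For scripted builds, a non-interactive variant can be sketched as follows. The -dname fields other than the CN, the validity period, and the temporary output directory are assumptions for illustration; -genkeypair is the current name of -genkey, and the block does nothing when keytool is not on the PATH.

```shell
#!/bin/sh
# Scratch directory so that no real keystore is touched (illustration only).
workdir=$(mktemp -d)
keystore="$workdir/wso2carbon.jks"
aliases=""

if command -v keytool >/dev/null 2>&1; then
  # -dname supplies the subject up front, so keytool does not prompt for it.
  # -keypass is set to the store password to avoid a second prompt.
  keytool -genkeypair -alias wso2carbon -keyalg RSA -keysize 2048 \
    -dname "CN=*.wso2.com, OU=Example, O=Example, L=Example, ST=Example, C=US" \
    -validity 365 \
    -keystore "$keystore" -storepass wso2carbon -keypass wso2carbon

  # List the keystore to confirm that the alias was created.
  aliases=$(keytool -list -keystore "$keystore" -storepass wso2carbon)
  echo "$aliases"
else
  echo "keytool not found; skipping"
fi
```

When run inside the security folder of the product, the -keystore path would simply be wso2carbon.jks, as in the command above.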

Export the public certificate of the private key:

$ keytool -export -alias wso2carbon -keystore wso2carbon.jks -file wso2pub.pem -storepass wso2carbon

Delete the existing wso2carbon alias from client-truststore.jks:

$ keytool -delete -alias wso2carbon -keystore client-truststore.jks -storepass wso2carbon

Import the public certificate under the alias wso2carbon in client-truststore.jks:

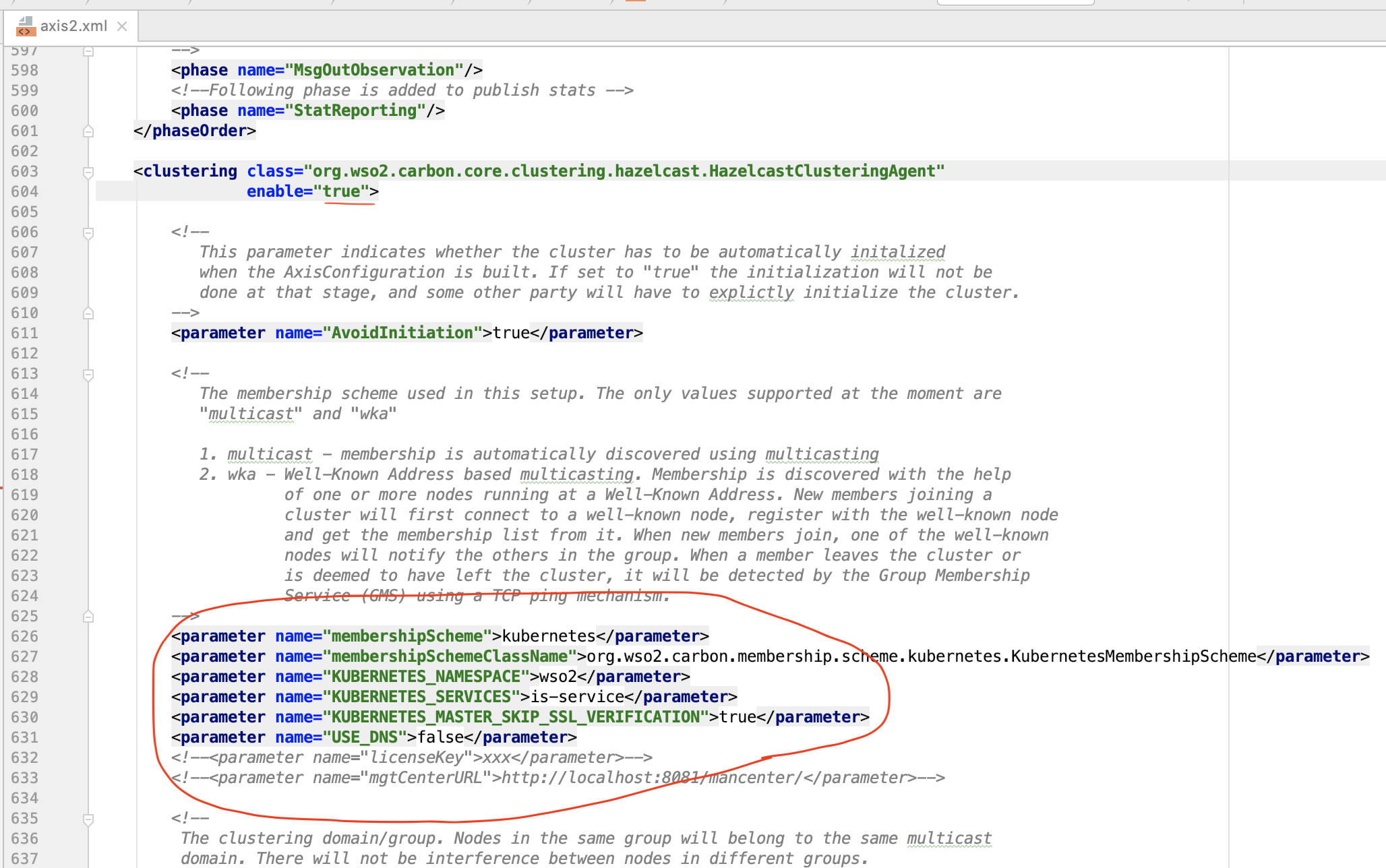

$ keytool -import -alias wso2carbon -file wso2pub.pem -keystore client-truststore.jks -storepass wso2carbon

Modify axis2.xml

Copy the provided is-h2/is/configs/axis2.xml to:

$ is-h2/is/docker/files/product/wso2is-5.7.0/repository/conf/axis2/axis2.xml

Let's have a look at the configuration.

This configuration makes the WSO2 IS pod (container) call the OpenShift APIs internally to discover other WSO2 IS pods and thus form a cluster.

Dockerfile

Here is the Dockerfile for WSO2 IS.

FROM adoptopenjdk/openjdk8:jdk8u192-b12-alpine

MAINTAINER anupam@wso2.com

# Set user configurations

ARG USER=wso2carbon

ARG USER_ID=802

ARG USER_GROUP=wso2

ARG USER_GROUP_ID=802

ARG USER_HOME=/home/${USER}

ARG WSO2_SERVER_HOME=${USER_HOME}/wso2is-5.7.0

# create a user group and a user

RUN addgroup -g ${USER_GROUP_ID} ${USER_GROUP}

RUN adduser -u ${USER_ID} -D -g '' -h ${USER_HOME} -G ${USER_GROUP} ${USER}

# Copy wso2 product and kubernetes membership libs.

COPY files/product/wso2is-5.7.0 ${WSO2_SERVER_HOME}/

COPY files/libs/dnsjava-2.1.8.jar ${WSO2_SERVER_HOME}/repository/components/lib/

COPY files/libs/kubernetes-membership-scheme-1.0.5.jar ${WSO2_SERVER_HOME}/repository/components/dropins/

# Change owner and permission

RUN chmod 777 -R ${WSO2_SERVER_HOME}/

RUN chown -R wso2carbon:wso2 ${WSO2_SERVER_HOME}/

# copy init script to user home

COPY init.sh ${USER_HOME}/

RUN chown -R wso2carbon:wso2 ${USER_HOME}/init.sh

RUN chmod +x ${USER_HOME}/init.sh

ENV WSO2_SERVER_HOME=${WSO2_SERVER_HOME}

USER ${USER_ID}

WORKDIR ${USER_HOME}

EXPOSE 9763 9443

ENTRYPOINT ["/home/wso2carbon/init.sh"]

Note that at the ENTRYPOINT we are calling init.sh.

init.sh

In the init.sh, we are extracting the IP address of the container at runtime and modifying the localMemberHost parameter in the axis2.xml file. This is necessary for creating a cluster.
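The two operations described above can be tried outside a container. The following is a minimal sketch, assuming a throwaway hosts file and a one-line stand-in for axis2.xml; both are created in a temporary directory and are not part of the original artifacts, and the pod name in the hosts file is made up. It writes to a temporary file and uses mv instead of sed -i so that it behaves the same with GNU and BSD sed.

```shell
#!/bin/sh
set -e

# Hypothetical scratch files standing in for /etc/hosts and axis2.xml.
workdir=$(mktemp -d)
hosts_file="$workdir/hosts"
axis2_file="$workdir/axis2.xml"

printf '127.0.0.1\tlocalhost\n172.17.0.5\tis-deployment-1-abcde\n' > "$hosts_file"
printf '<parameter name="localMemberHost">127.0.0.1</parameter>\n' > "$axis2_file"

# The first field of the last line of the hosts file is taken as the container IP.
container_ip=$(awk 'END{print $1}' "$hosts_file")

# Rewrite the localMemberHost parameter with that IP.
sed "s#<parameter name=\"localMemberHost\".*</parameter>#<parameter name=\"localMemberHost\">${container_ip}</parameter>#" \
  "$axis2_file" > "$axis2_file.new"
mv "$axis2_file.new" "$axis2_file"

result=$(cat "$axis2_file")
echo "$result"
```

The init.sh shown next does the same against the real /etc/hosts and axis2.xml inside the container.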

#!/bin/sh

set -e

docker_container_ip=$(awk 'END{print $1}' /etc/hosts)

echo "####### Docker container IP: ${docker_container_ip} #######"

sed -i "s#<parameter\ name=\"localMemberHost\".*<\/parameter>#<parameter\ name=\"localMemberHost\">${docker_container_ip}<\/parameter>#" ${WSO2_SERVER_HOME}/repository/conf/axis2/axis2.xml

sh ${WSO2_SERVER_HOME}/bin/wso2server.sh "$@"

Create Docker Image

Browse to the following directory:

$ is-h2/is/docker

Execute the following command:

$ docker build -t is .

At this point, our Docker image is created and stored in the local Docker image cache. To use it in OKD, we still have to push it to the OKD internal Docker registry, which we will do later in the article.

OpenShift Artifacts

All OpenShift artifacts can be found under the following directory:

$ is-h2/is/kube

RBAC Configuration

This configuration is important: it gives the WSO2 IS pods the permission they need to read endpoints for cluster discovery.

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: wso2
  name: endpoints-reader-role
rules:
- apiGroups: [""]
  verbs: ["get", "list"]
  resources: ["endpoints"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: wso2-endpoints-reader-role-binding
  namespace: wso2
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: endpoints-reader-role
subjects:
- kind: ServiceAccount
  name: wso2svc-account
  namespace: wso2

We have defined a role named endpoints-reader-role and given it permission to read endpoints resources. Next, we have bound this role to a service account called wso2svc-account.

The service account is necessary because the WSO2 IS container calls the OKD APIs internally and needs permission to access them. Service accounts serve this purpose.
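Once the role, the role binding, and the service account exist, the binding can be checked from the command line. The sketch below is an illustration under stated assumptions: it assumes an oc client that provides `oc auth can-i` and a logged-in user who is allowed to impersonate service accounts (for example, a cluster administrator). The helper names are hypothetical. With DRY_RUN=1 the command is only printed.

```shell
#!/bin/sh
# Service accounts authenticate as system:serviceaccount:<namespace>:<name>.
sa_username() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the service account may perform a verb on endpoints.
# With DRY_RUN=1 the oc command is printed instead of executed.
check_endpoints_access() {
  verb=$1
  namespace=$2
  account=$3
  set -- oc auth can-i "$verb" endpoints -n "$namespace" \
    --as="$(sa_username "$namespace" "$account")"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Example: print the command for the account used in this article.
DRY_RUN=1 check_endpoints_access list wso2 wso2svc-account
```

Without DRY_RUN, the command answers yes or no for the given verb, which makes it easy to confirm that "get" and "list" on endpoints are allowed before the deployment is created.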

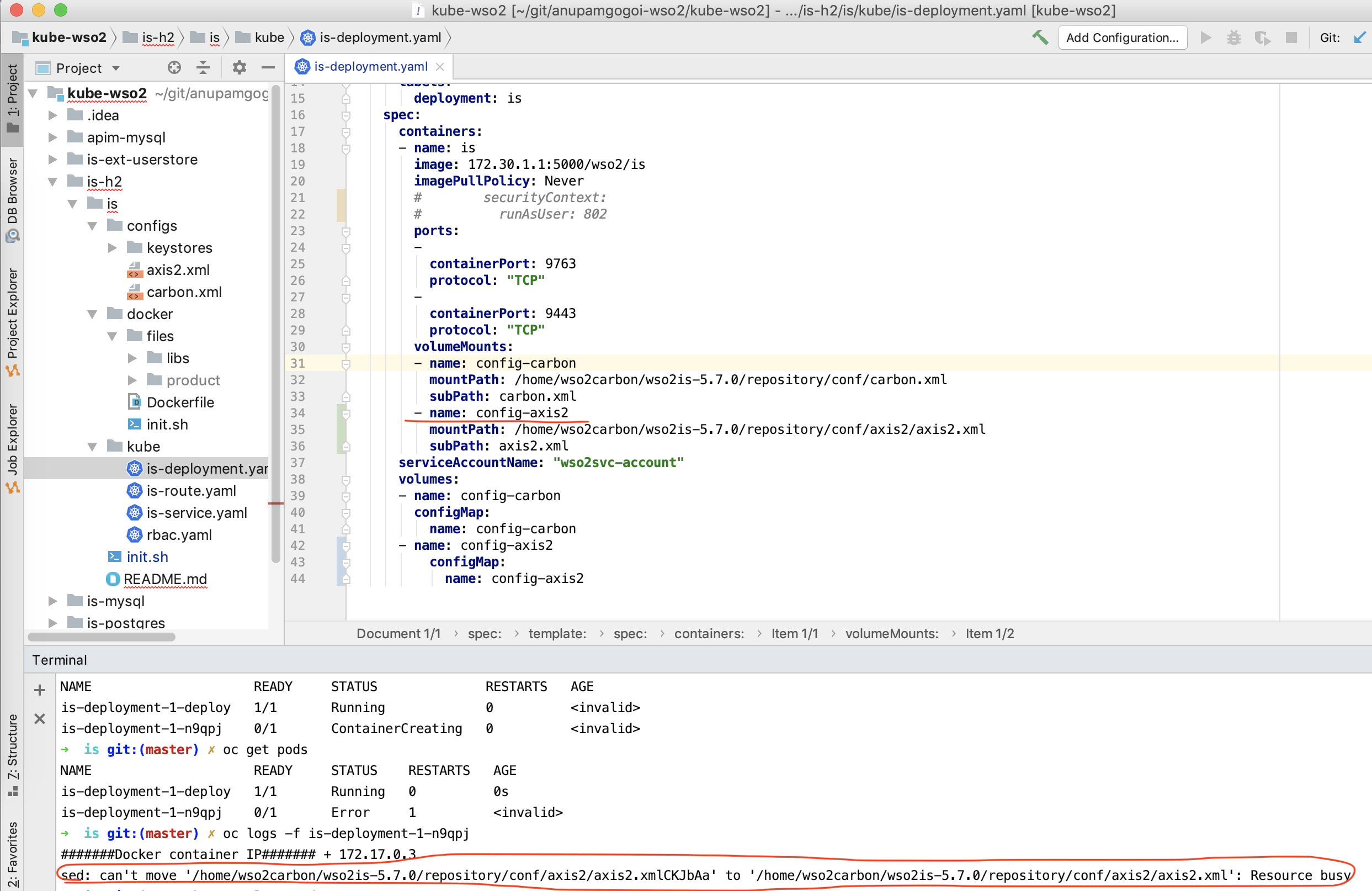

Deployment Config

Here is the deployment config file.

apiVersion: v1
kind: DeploymentConfig
metadata:
  name: is-deployment
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: Rolling
  template:
    metadata:
      labels:
        deployment: is
    spec:
      containers:
      - name: is
        image: 172.30.1.1:5000/wso2/is
        imagePullPolicy: Never
        # securityContext:
        #   runAsUser: 802
        ports:
        -
          containerPort: 9763
          protocol: "TCP"
        -
          containerPort: 9443
          protocol: "TCP"
        volumeMounts:
        - name: config-carbon
          mountPath: /home/wso2carbon/wso2is-5.7.0/repository/conf/carbon.xml
          subPath: carbon.xml
      serviceAccountName: "wso2svc-account"
      volumes:
      - name: config-carbon
        configMap:
          name: config-carbon

As you can see, the carbon.xml configuration file is provided externally through a config map. Alternatively, the necessary carbon.xml configuration could be baked into the Docker image at build time. It's up to you to choose the best option.

We could also have externalized axis2.xml (the cluster configuration) using a config map. The problem is that a file mounted from a config map cannot be replaced in place from inside the container. In our case, init.sh modifies axis2.xml at runtime with sed -i, which writes a temporary file and then renames it over the original. If axis2.xml were mounted from a config map in the deployment configuration, you would receive the following error:

sed: can't move '/home/wso2carbon/wso2is-5.7.0/repository/conf/axis2/axis2.xmlCKJbAa' to '/home/wso2carbon/wso2is-5.7.0/repository/conf/axis2/axis2.xml': Resource busy

Here is a screenshot.

One solution to this problem is therefore to add the necessary cluster configuration to axis2.xml when the Docker image is built and not to use a config map for it.

Another solution is to mount the external axis2.xml from a config map into a temporary directory inside the container and then copy it to the following directory:

/home/wso2carbon/wso2is-5.7.0/repository/conf/axis2

By doing this, we avoid mounting axis2.xml directly from the config map, so the file stays mutable for further modifications.
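This second option could be implemented as a few extra lines at the top of init.sh. The following is a minimal sketch and not part of the original artifacts: the staging directory is a hypothetical mount path for the config map, and both directories are simulated under a temporary folder so that the sketch runs anywhere.

```shell
#!/bin/sh
set -e

# Simulated layout. In a real pod, STAGING_DIR would be the (hypothetical)
# mount path of the config map and CONF_DIR the axis2 directory of the product.
root=$(mktemp -d)
STAGING_DIR="$root/staging"
CONF_DIR="$root/wso2is-5.7.0/repository/conf/axis2"
mkdir -p "$STAGING_DIR" "$CONF_DIR"

# Stand-in for the axis2.xml delivered by the config map (read-only, like the mount).
printf '<parameter name="localMemberHost">127.0.0.1</parameter>\n' > "$STAGING_DIR/axis2.xml"
chmod 444 "$STAGING_DIR/axis2.xml"

# Copy the file out of the staging directory; the copy is an ordinary file.
cp "$STAGING_DIR/axis2.xml" "$CONF_DIR/axis2.xml"
chmod 644 "$CONF_DIR/axis2.xml"

# The copy can now be rewritten, which is what init.sh needs to do.
member_ip="10.128.0.12"   # example value only
sed "s#<parameter name=\"localMemberHost\".*</parameter>#<parameter name=\"localMemberHost\">${member_ip}</parameter>#" \
  "$CONF_DIR/axis2.xml" > "$CONF_DIR/axis2.xml.tmp"
mv "$CONF_DIR/axis2.xml.tmp" "$CONF_DIR/axis2.xml"

staged=$(cat "$STAGING_DIR/axis2.xml")
active=$(cat "$CONF_DIR/axis2.xml")
echo "staged: $staged"
echo "active: $active"
```

The staged file is left untouched, and only the copy in the product directory is modified.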

Service Configuration

Here is the simple service configuration file:

apiVersion: v1
kind: Service
metadata:
  name: is-service
spec:
  selector:
    deploymentconfig: is-deployment
  ports:
  -
    name: servlet-http
    protocol: TCP
    port: 9763
  -
    name: servlet-https
    protocol: TCP
    port: 9443

Route Configuration

Expose the service to the outside world.

apiVersion: route.openshift.io/v1
kind: Route
metadata:
  namespace: wso2
  name: is-route
spec:
  host: is.wso2.com
  port:
    targetPort: servlet-https
  tls:
    termination: passthrough
  to:
    kind: Service
    name: is-service

Note that the host is.wso2.com must be resolvable from your machine. In our case, add it to the /etc/hosts file as shown below.

# IS Test
127.0.0.1 is.wso2.com

OpenShift (OKD) Preparation

Check the status of the OpenShift cluster by executing the following command:

$ oc cluster status

If it is not running, execute the following command to start it:

$ oc cluster up

The OKD console can be accessed at the URL: https://127.0.0.1:8443/console

Steps for OKD Artifacts Deployment

1. Login To OKD

$ oc login -u developer -p developer

2. Create a Project (Namespace)

$ oc new-project wso2

3. Push Docker Images to OKD Docker Registry

Login to OKD Docker registry:

$ docker login -u developer -p $(oc whoami -t) 172.30.1.1:5000

Tag the Docker image in the following format: <REGISTRY_URL>/<PROJECT>/<IMAGE_NAME>

$ docker tag is 172.30.1.1:5000/wso2/is

Push the image to OKD Docker registry:

$ docker push 172.30.1.1:5000/wso2/is

4. Create a Service Account

$ oc create serviceaccount wso2svc-account -n wso2

5. Create Config Maps

We have only one config map for this simple deployment.

$ oc create cm config-carbon --from-file=./configs/carbon.xml -n wso2

6. Deploy artifacts

Deploy the artifacts in the following order.

$ oc apply -f kube/rbac.yaml -n wso2

$ oc apply -f kube/is-service.yaml -n wso2

$ oc apply -f kube/is-route.yaml -n wso2

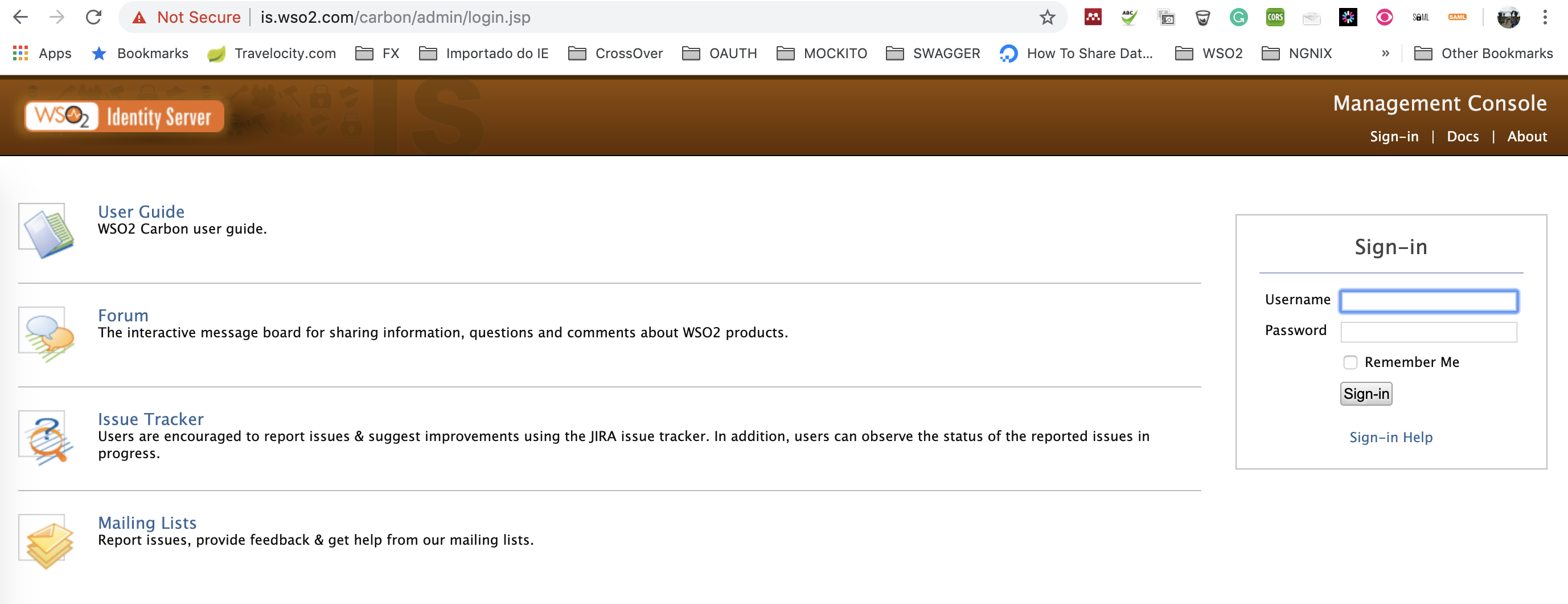

$ oc apply -f kube/is-deployment.yaml -n wso2

The deployment will take some time. Once it is up and running, you will be able to access the WSO2 Identity Server at the following URL:

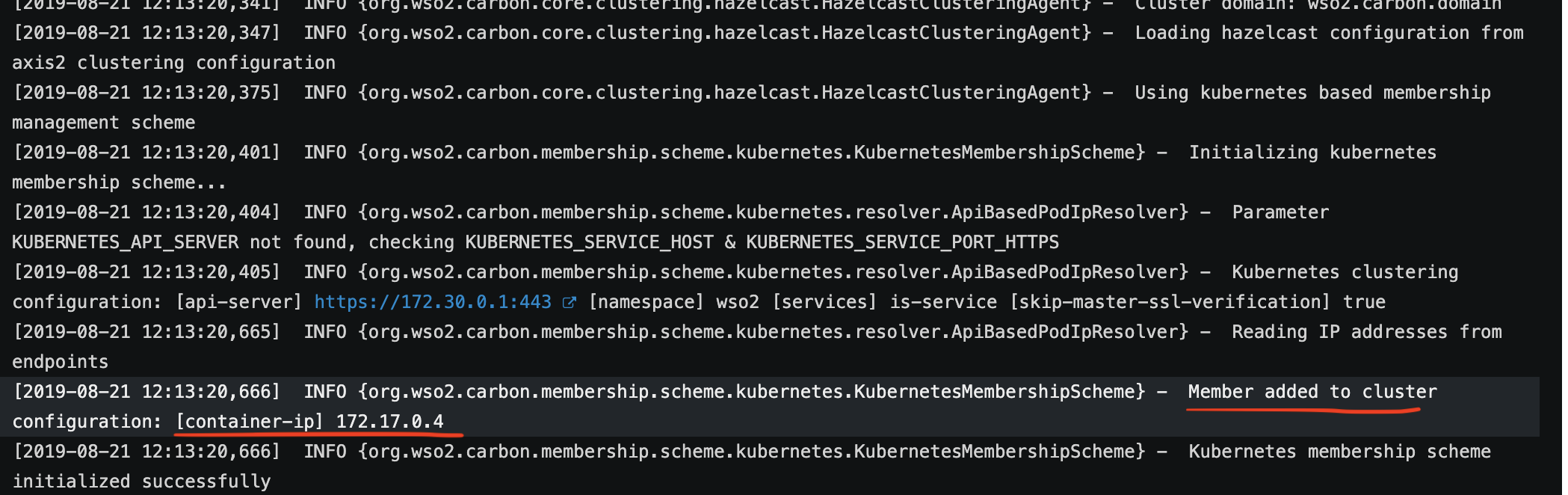

Verify the Cluster

Check the logs of the WSO2 IS pod in the OpenShift console. You should see log entries like the ones shown below, indicating that the cluster has formed successfully.
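The same check can be done from the command line. The following is a minimal sketch using standard oc subcommands. The helper names are hypothetical, and scaling to two replicas is an assumption added here so that the first pod has a second member to discover. With DRY_RUN=1 the commands are printed instead of executed.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

verify_cluster() {
  namespace=$1
  dc=$2
  # Wait until the current rollout has finished.
  run oc rollout status "dc/$dc" -n "$namespace"
  # Start a second pod so that the membership scheme has a peer to find.
  run oc scale "dc/$dc" --replicas=2 -n "$namespace"
  # Pods created by a DeploymentConfig carry the deploymentconfig=<name> label.
  run oc get pods -l "deploymentconfig=$dc" -n "$namespace"
  # Print the log of one pod of the deployment config.
  run oc logs "dc/$dc" -n "$namespace"
}

# Example: show the commands for the names used in this article.
DRY_RUN=1 verify_cluster wso2 is-deployment
```

When running it for real, give the second pod some time to start before reading the logs.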

Conclusion

In this article, I have demonstrated how to install OpenShift Origin (OKD) and run the WSO2 Identity Server in it. The same procedure can be adopted for other WSO2 products with slight modifications.

Please let me know if you could make it work. Thanks.
