Clean Up Event Data in Ansible Event-Driven Automation

Clean and normalize event data in Ansible Event-Driven Automation (EDA) with the ansible.eda.dashes_to_underscores filter for smoother, more reliable automation.


In the past few articles, we explored how to use different event sources in Ansible Event-Driven Automation (EDA). In this demo, we'll focus on how event filters can help clean up and simplify event data, making automation easier to manage. Specifically, we'll explore the ansible.eda.dashes_to_underscores event filter and how it works.

When using Ansible EDA with tools like webhooks, Prometheus, or cloud services, events often arrive as JSON data, and those payloads frequently use dashes in key names, such as alert-name or instance-id. That is perfectly valid JSON, but it becomes a problem in Ansible: variable names with dashes can't be referenced directly in playbooks or Jinja2 templates, because Jinja2 parses an expression like event.payload.alert-name as event.payload.alert minus name. The dashes_to_underscores filter solves this by converting those dashed keys into names that Ansible can work with directly.
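To see which keys are affected, the naming rule can be checked in a few lines of Python. This is a minimal sketch, assuming Ansible's documented rule that variable names contain only ASCII letters, digits, and underscores, don't start with a digit, and aren't Python keywords (playbook keywords are not checked). The helpers is_usable_name and unusable_keys are illustrative names, not part of Ansible or EDA.

```python
import keyword


def is_usable_name(key: str) -> bool:
    """Rough check: could `key` be used as a bare Ansible/Jinja2 variable name?"""
    # isascii() limits the name to ASCII; isidentifier() allows only letters,
    # digits and underscores with no leading digit. Python keywords are rejected.
    return key.isascii() and key.isidentifier() and not keyword.iskeyword(key)


def unusable_keys(payload: dict) -> list:
    """Return the top-level payload keys that fail the naming rule, sorted."""
    return sorted(key for key in payload if not is_usable_name(key))


# The sample payload used in the webhook test in this article.
sample = {"alert-name": "HighCPUUsage", "instance-id": "i-123456789"}
print(unusable_keys(sample))  # ['alert-name', 'instance-id']
```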

The dashes_to_underscores filter in Ansible EDA automatically replaces dashes (-) with underscores (_) in every key of the incoming event, including nested keys. This keeps key names within Ansible's variable naming rules, so they can be referenced directly in rule conditions and playbooks.
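The transformation itself is easy to picture in code. The sketch below is an illustrative Python re-implementation, not the collection's source: it walks nested dictionaries and lists and rewrites every string key, leaving values alone. Returning a new structure instead of editing the event in place is a choice made for this sketch.

```python
from typing import Any


def dashes_to_underscores(obj: Any) -> Any:
    """Return a copy of obj with '-' replaced by '_' in every dict key.

    Nested dicts and lists are processed recursively; values are not changed.
    If two keys collide after renaming (e.g. "alert-name" and "alert_name"),
    whichever comes later in the dict wins in this sketch.
    """
    if isinstance(obj, dict):
        renamed = {}
        for key, value in obj.items():
            new_key = key.replace("-", "_") if isinstance(key, str) else key
            renamed[new_key] = dashes_to_underscores(value)
        return renamed
    if isinstance(obj, list):
        return [dashes_to_underscores(item) for item in obj]
    # Scalars (strings, numbers, None, ...) pass through unchanged.
    return obj


event = {
    "meta": {"headers": {"Content-Type": "application/json"}},
    "payload": {"alert-name": "HighCPUUsage", "instance-id": "i-123456789"},
}
print(dashes_to_underscores(event)["payload"])
# {'alert_name': 'HighCPUUsage', 'instance_id': 'i-123456789'}
```

Only keys are renamed: the value i-123456789 keeps its dash. The collection's filter may handle key collisions differently from this sketch, so check its documentation if your payloads can contain both spellings of a key.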

Testing the dashes_to_underscores Filter with a Webhook

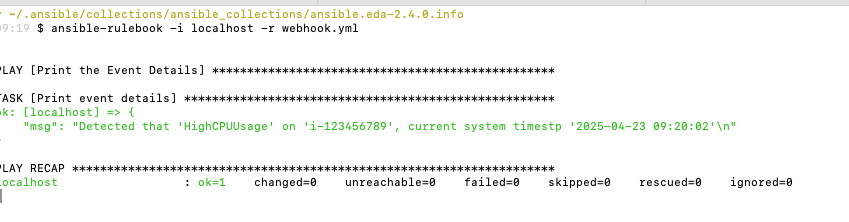

To demonstrate how the ansible.eda.dashes_to_underscores filter operates, we'll send a sample JSON payload to a webhook running on port 9000. This payload includes keys with dashes, such as alert-name and instance-id, which are common in JSON data but can pose challenges in Ansible due to variable naming conventions.
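The walkthrough sends the payload with curl, but the same POST can be built with Python's standard library if you'd rather script the test. This is a minimal sketch, assuming the webhook is reachable at http://localhost:9000/ (the port configured in the rulebook); build_alert_request and send_alert are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: the rulebook's webhook source listens on port 9000 on this machine.
WEBHOOK_URL = "http://localhost:9000/"

# Same dashed keys as the curl example.
SAMPLE_PAYLOAD = {"alert-name": "HighCPUUsage", "instance-id": "i-123456789"}


def build_alert_request(payload: dict, url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Build a JSON POST request equivalent to the curl command."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_alert(payload: dict, url: str = WEBHOOK_URL, timeout: float = 5.0) -> int:
    """Send the payload to the webhook and return the HTTP status code."""
    with urllib.request.urlopen(build_alert_request(payload, url), timeout=timeout) as response:
        return response.status
```

With ansible-rulebook running, calling send_alert(SAMPLE_PAYLOAD) delivers the same JSON body as the curl command.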

webhook.yml

```yaml
- name: Event Filter dashes_to_underscores demo
  hosts: localhost
  sources:
    - ansible.eda.webhook:
        port: 9000
        host: 0.0.0.0
      # Rename dashed keys (alert-name -> alert_name) before rules see the event
      filters:
        - ansible.eda.dashes_to_underscores:
  rules:
    - name: Run the playbook if alert_name matches HighCPUUsage
      condition: event.payload.alert_name == "HighCPUUsage"
      action:
        run_playbook:
          name: print-event-vars.yml
```

print-event-vars.yml

```yaml
---
- name: Print the Event Details
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Print event details
      ansible.builtin.debug:
        # The filtered event is available to the playbook as ansible_eda.event
        msg: >
          Detected '{{ ansible_eda.event.payload.alert_name }}' on
          '{{ ansible_eda.event.payload.instance_id }}', current system
          timestamp '{{ now(fmt='%Y-%m-%d %H:%M:%S') }}'
```

Triggering the Webhook with a Sample JSON Payload

```shell
curl --header "Content-Type: application/json" \
  --request POST \
  --data '{ "alert-name": "HighCPUUsage", "instance-id": "i-123456789" }' \
  http://localhost:9000/
```

Inspecting Events with --print-events Flag

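When ansible-rulebook is run with the --print-events flag, it prints each event after the source filters have been applied. Upon receiving this payload, the dashes_to_underscores filter automatically converts the keys: alert-name becomes alert_name, and instance-id becomes instance_id. Nested keys are converted as well, which is why the HTTP headers in the output below appear as Content_Length, Content_Type, and User_Agent.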

This transformation ensures compatibility with Ansible's variable naming requirements, allowing for straightforward access to these variables in your playbooks and rulebooks.

```text
Ruleset: Event Filter dashes_to_underscores demo
Event:
{'meta': {'endpoint': '',
          'headers': {'Accept': '*/*',
                      'Content_Length': '62',
                      'Content_Type': 'application/json',
                      'Host': 'localhost:9000',
                      'User_Agent': 'curl/8.7.1'},
          'received_at': '2025-04-23T16:20:40.248651Z',
          'source': {'name': 'ansible.eda.webhook',
                     'type': 'ansible.eda.webhook'},
          'uuid': '8dcf64a6-2ab2-429b-a81a-6dd0e3a48308'},
 'payload': {'alert_name': 'HighCPUUsage', 'instance_id': 'i-123456789'}}
```
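Because the filter runs before rule evaluation, the condition event.payload.alert_name == "HighCPUUsage" finds the key it expects. The Python analogue below is only an illustration (ansible-rulebook evaluates conditions with its own engine, not this code); rule_matches is a hypothetical helper that compares the filtered event printed above with the raw payload as curl sent it.

```python
def rule_matches(event: dict) -> bool:
    """Python analogue of the rule: event.payload.alert_name == "HighCPUUsage"."""
    # A missing key yields None here, so the comparison is simply False.
    return event.get("payload", {}).get("alert_name") == "HighCPUUsage"


# Payload as printed by --print-events (after the filter ran).
filtered_event = {"payload": {"alert_name": "HighCPUUsage", "instance_id": "i-123456789"}}
# Payload as sent by curl, before any filtering.
raw_event = {"payload": {"alert-name": "HighCPUUsage", "instance-id": "i-123456789"}}

print(rule_matches(filtered_event))  # True
print(rule_matches(raw_event))       # False
```

In this analogue, the unfiltered event simply has no alert_name key, so the rule does not match.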

Conclusion

The ansible.eda.dashes_to_underscores filter helps clean up event data in Ansible EDA. It automatically renames keys with dashes (like alert-name) to keys with underscores (like alert_name), so they can be referenced directly in rulebooks and playbooks. This matters because Ansible variable names may contain only letters, numbers, and underscores. Using the filter keeps rule conditions and templates simpler and reduces the chance of errors. It can also be combined with other filters, such as ansible.eda.json_filter, to control which keys of incoming event data are kept. Overall, this filter makes it smoother to work with event data from many different sources.
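When filters are combined, they run in the order they are listed under the source, each receiving the previous filter's output, so ordering matters. The sketch below illustrates this with a hypothetical, simplified exclude_keys stand-in rather than the real json_filter, plus a compact key-renaming function; run_filters is likewise a hypothetical name. It shows that a pattern written against a dashed key name only matches if it runs before the renaming step.

```python
from fnmatch import fnmatchcase
from typing import Any, Callable, Iterable, List

EventFilter = Callable[[Any], Any]


def exclude_keys(patterns: Iterable[str]) -> EventFilter:
    """Hypothetical, simplified stand-in for a key-excluding filter like json_filter.

    Drops every dict key (at any depth) that matches one of the glob patterns.
    """
    patterns = list(patterns)

    def apply(obj: Any) -> Any:
        if isinstance(obj, dict):
            return {
                key: apply(value)
                for key, value in obj.items()
                if not any(fnmatchcase(key, p) for p in patterns)
            }
        if isinstance(obj, list):
            return [apply(item) for item in obj]
        return obj

    return apply


def dashes_to_underscores(obj: Any) -> Any:
    """Recursively replace '-' with '_' in every dict key; values are untouched."""
    if isinstance(obj, dict):
        return {key.replace("-", "_"): dashes_to_underscores(value) for key, value in obj.items()}
    if isinstance(obj, list):
        return [dashes_to_underscores(item) for item in obj]
    return obj


def run_filters(event: Any, filters: List[EventFilter]) -> Any:
    """Apply filters in list order; each one receives the previous one's output."""
    for event_filter in filters:
        event = event_filter(event)
    return event


event = {
    "meta": {"headers": {"User-Agent": "curl/8.7.1", "Accept": "*/*"}},
    "payload": {"alert-name": "HighCPUUsage", "instance-id": "i-123456789"},
}

# Pattern written against the original dashed name, so it must run first.
before = run_filters(event, [exclude_keys(["instance-id"]), dashes_to_underscores])
# Same pattern after renaming: nothing matches any more.
after = run_filters(event, [dashes_to_underscores, exclude_keys(["instance-id"])])
print(before["payload"])  # {'alert_name': 'HighCPUUsage'}
print(after["payload"])   # {'alert_name': 'HighCPUUsage', 'instance_id': 'i-123456789'}
```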

Note: The views expressed in this article are my own and do not necessarily reflect the views of my employer.

Opinions expressed by DZone contributors are their own.
