Cognitive Services: Convert Text to Speech in Multiple Languages Using ASP.NET Core and C#

Let's learn how to convert text to speech in multiple languages using the Microsoft Text to Speech Service API, one of the important Cognitive Services APIs.


In this article, we are going to learn how to convert text to speech in multiple languages using one of the important Cognitive Services APIs, the Microsoft Text to Speech Service API (one of the APIs in the Speech API). The Text to Speech (TTS) API of the Speech service converts input text into natural-sounding speech (also called speech synthesis). It supports text in multiple languages and gender-based voices (male or female).


Prerequisites

- Subscription key (Azure Portal) or trial subscription key

- Visual Studio 2015 or 2017

Convert Text to Speech API

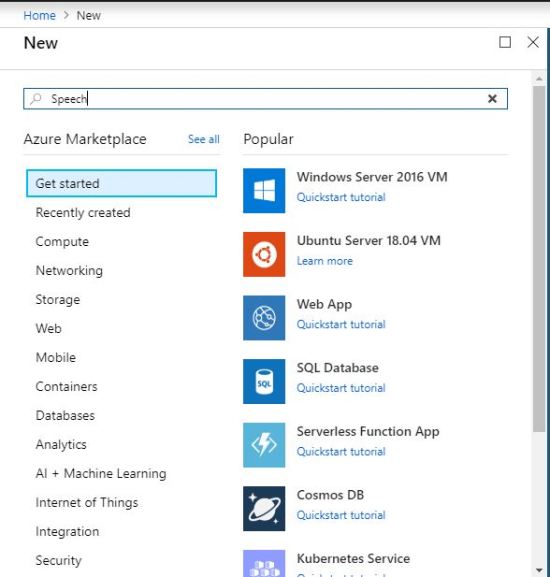

First, we need to log in to the Azure Portal with our Azure credentials. Then we need to create a Speech Service API resource in the Azure portal.

Click "Create a resource" in the top-left menu and search for "Speech" in the search bar at the top of the Azure Marketplace window.

The search results list a few speech-related services under the "AI + Machine Learning" category.

Click the "Create" button to create the Speech Service API.

Provision a Speech Service API (Text to Speech) Subscription Key


Clicking "Create" opens another window, where we need to provide basic information about the Speech API.

Name: Name of the Speech API resource (e.g., TextToSpeechApp).

Subscription: Select the Azure subscription in which to create the Speech API.

Location: Select the location (region) of the resource. It is best to choose a location close to our customers. The endpoints used later in this article are for the West US (westus) region, so use the endpoints that match the region you select.

Pricing tier: Select an appropriate pricing tier for our requirements.

Resource group: Create a new resource group or choose an existing one (we created a new resource group named "SpeechResource").

Once the resource is created, click "TextToSpeechApp" on the dashboard page; it redirects to the TextToSpeechApp detail page ("Overview"). Click the "Keys" menu in the left-side panel to open the subscription key details. We can use either of the subscription keys, or regenerate a key, for text-to-speech conversion with the Microsoft Speech Service API.

Authentication


Text to Speech conversion with the Speech Service API requires bearer-token authentication, so we need to create an authentication token using one of the "TextToSpeechApp" subscription keys. The following endpoint issues the authentication token for text-to-speech conversion. Each access token is valid for 10 minutes; after that, we need to request a new one.

"https://westus.api.cognitive.microsoft.com/sts/v1.0/issueToken"

Speech Synthesis Markup Language (SSML)

The Speech Synthesis Markup Language (SSML) is an XML-based markup language that provides a way to control the pronunciation and rhythm of synthesized speech.

SSML format (the sample text நன்றி is Tamil for "thank you"):

```xml
<speak version='1.0' xml:lang='en-US'>
  <voice xml:lang='ta-IN' xml:gender='Female' name='Microsoft Server Speech Text to Speech Voice (ta-IN, Valluvar)'>
    நன்றி
  </voice>
</speak>
```

How to Make a Request

The request itself is simple: it is an HTTP POST, and the text to synthesize is sent in the request body as either plain text or an SSML document. According to the documentation, an SSML body should be used in most cases. The maximum length of the HTTP request body is 1024 characters, and the following is the endpoint for our HTTP POST request.

"https://westus.tts.speech.microsoft.com/cognitiveservices/v1"

The following HTTP headers are required in the request:

- Authorization: the authentication token, preceded by the word "Bearer"
- Content-Type: application/ssml+xml
- X-Microsoft-OutputFormat: the audio output format (e.g., audio-16khz-64kbitrate-mono-mp3)
- User-Agent: the application name

Source: https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/how-to-text-to-speech
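The authentication and request steps above can be sketched as follows. This is an illustration under stated assumptions, not the article's own code: SpeechRequests, FetchTokenAsync, BuildSynthesisRequest and TokenCache are hypothetical names, the endpoints are the West US ones quoted in this article, and the 1024-character check simply mirrors the limit stated above. The TokenCache lifetime is the caller's choice; passing something a little under 10 minutes leaves a margin before the token expires.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Threading.Tasks;

// Hypothetical helpers (not from the original sample) for the token and synthesis requests.
public static class SpeechRequests
{
    // West US endpoints quoted in this article; other regions use different host names.
    public const string TokenUri = "https://westus.api.cognitive.microsoft.com/sts/v1.0/issueToken";
    public const string SynthesisUri = "https://westus.tts.speech.microsoft.com/cognitiveservices/v1";

    // Request-body limit stated in this article.
    public const int MaxBodyLength = 1024;

    // Exchanges a subscription key for a bearer token; the token comes back as plain text.
    public static async Task<string> FetchTokenAsync(HttpClient client, string subscriptionKey)
    {
        using (var request = new HttpRequestMessage(HttpMethod.Post, TokenUri))
        {
            request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
            request.Content = new StringContent(string.Empty); // empty POST body
            using (var response = await client.SendAsync(request).ConfigureAwait(false))
            {
                response.EnsureSuccessStatusCode();
                return await response.Content.ReadAsStringAsync().ConfigureAwait(false);
            }
        }
    }

    // Builds (without sending) the synthesis POST carrying the headers listed above.
    public static HttpRequestMessage BuildSynthesisRequest(string token, string ssml)
    {
        if (ssml == null) throw new ArgumentNullException(nameof(ssml));
        if (ssml.Length > MaxBodyLength)
            throw new ArgumentException($"SSML body is longer than {MaxBodyLength} characters.", nameof(ssml));

        var request = new HttpRequestMessage(HttpMethod.Post, SynthesisUri);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
        request.Headers.Add("X-Microsoft-OutputFormat", "audio-16khz-64kbitrate-mono-mp3");
        request.Headers.Add("User-Agent", "TexttoSpeech");
        request.Content = new StringContent(ssml, Encoding.UTF8);
        // Replace the default text/plain content type with application/ssml+xml.
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/ssml+xml");
        return request;
    }
}

// Hypothetical cache: reuses one token until the given lifetime has passed.
// Not thread-safe; guard it with a lock or SemaphoreSlim if shared across requests.
public sealed class TokenCache
{
    private readonly Func<Task<string>> _fetch;
    private readonly Func<DateTime> _now;
    private readonly TimeSpan _lifetime;
    private string _token;
    private DateTime _expiresAt = DateTime.MinValue;

    public TokenCache(Func<Task<string>> fetch, TimeSpan lifetime, Func<DateTime> now = null)
    {
        _fetch = fetch;
        _lifetime = lifetime;
        _now = now ?? (() => DateTime.UtcNow);
    }

    // Returns the cached token, fetching a new one when none exists or the old one is stale.
    public async Task<string> GetAsync()
    {
        if (_token == null || _now() >= _expiresAt)
        {
            _token = await _fetch().ConfigureAwait(false);
            _expiresAt = _now() + _lifetime;
        }
        return _token;
    }
}
```

A caller might create one cache per subscription key, e.g. new TokenCache(() => SpeechRequests.FetchTokenAsync(client, key), TimeSpan.FromMinutes(9)), and pass the result of GetAsync() as the token argument of TranslateText.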

Index.html

The following HTML contains the binding approach we used in our application, based on the latest tag helpers of ASP.NET Core.

Model


The following model contains the Speech Model information.

```csharp
using Microsoft.AspNetCore.Mvc.Rendering;
using System.Collections.Generic;
using System.ComponentModel;

namespace TextToSpeechApp.Models
{
    public class SpeechModel
    {
        public string Content { get; set; }

        public string SubscriptionKey { get; set; } = "< Subscription Key >";

        [DisplayName("Language Selection :")]
        public string LanguageCode { get; set; } = "NA";

        public List<SelectListItem> LanguagePreference { get; set; } = new List<SelectListItem>
        {
            new SelectListItem { Value = "NA", Text = "-Select-" },
            new SelectListItem { Value = "en-US", Text = "English (United States)" },
            new SelectListItem { Value = "en-IN", Text = "English (India)" },
            new SelectListItem { Value = "ta-IN", Text = "Tamil (India)" },
            new SelectListItem { Value = "hi-IN", Text = "Hindi (India)" },
            new SelectListItem { Value = "te-IN", Text = "Telugu (India)" }
        };
    }
}
```

Interface

The "ITextToSpeech" interface declares a single method that converts text to speech based on the given input. We register this interface in the ASP.NET Core "Startup.cs" class with "AddTransient."

```csharp
using System.Threading.Tasks;

namespace TextToSpeechApp.BusinessLayer.Interface
{
    public interface ITextToSpeech
    {
        Task<byte[]> TranslateText(string token, string key, string content, string lang);
    }
}
```

Text to Speech API Service

The following code takes a valid Speech API subscription key and authentication token, sends the SSML request, and returns the synthesized audio.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Threading.Tasks;

// Members of the class that implements ITextToSpeech.

// Reuse one HttpClient instead of creating a new one for every request.
private static readonly HttpClient Client = new HttpClient();

/// <summary>
/// Translate text to speech
/// </summary>
/// <param name="token">Authentication token</param>
/// <param name="key">Azure subscription key</param>
/// <param name="content">Text content for speech</param>
/// <param name="lang">Speech conversion language</param>
/// <returns>The synthesized audio as MP3 bytes.</returns>
public async Task<byte[]> TranslateText(string token, string key, string content, string lang)
{
    // Request URL for the speech API.
    string uri = "https://westus.tts.speech.microsoft.com/cognitiveservices/v1";

    // Generate the Speech Synthesis Markup Language (SSML) body.
    // GenerateSsml and ServiceName are helper methods of the sample project (not shown in this article).
    var requestBody = this.GenerateSsml(lang, "Female", this.ServiceName(lang), content);

    using (var request = new HttpRequestMessage(HttpMethod.Post, uri))
    {
        request.Headers.Add("Ocp-Apim-Subscription-Key", key);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
        request.Headers.Add("X-Microsoft-OutputFormat", "audio-16khz-64kbitrate-mono-mp3");
        request.Headers.Add("User-Agent", "TexttoSpeech");
        request.Content = new StringContent(requestBody, Encoding.UTF8);
        // The service expects Content-Type: application/ssml+xml.
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/ssml+xml");

        using (var response = await Client.SendAsync(request).ConfigureAwait(false))
        {
            // Fail loudly instead of returning an error body as if it were audio.
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsByteArrayAsync().ConfigureAwait(false);
        }
    }
}
```
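TranslateText calls two helpers, GenerateSsml and ServiceName, whose bodies this article does not show. The block below is a minimal sketch of what they might look like, not the sample project's actual code: the class name SsmlHelpers is hypothetical, the SSML shape follows the SSML format example earlier in this article, and only the ta-IN voice name is taken from that example. Voice names for the other languages in the model's drop-down (en-US, en-IN, hi-IN, te-IN) need to be looked up in the Speech service voice list; they are deliberately left out here rather than guessed.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical stand-ins for the sample's GenerateSsml and ServiceName helpers.
public static class SsmlHelpers
{
    private static readonly XNamespace Xml = XNamespace.Xml;

    // Language code -> voice name. Only the Tamil entry comes from this article;
    // add the other languages from the Speech service voice list.
    private static readonly Dictionary<string, string> Voices = new Dictionary<string, string>
    {
        ["ta-IN"] = "Microsoft Server Speech Text to Speech Voice (ta-IN, Valluvar)",
    };

    // Maps a language code to a voice name, failing clearly for unmapped languages.
    public static string ServiceName(string lang)
    {
        if (Voices.TryGetValue(lang, out var name)) return name;
        throw new ArgumentException($"No voice configured for '{lang}'.", nameof(lang));
    }

    // Builds <speak><voice>text</voice></speak>; XElement escapes the text content.
    public static string GenerateSsml(string lang, string gender, string voiceName, string content)
    {
        var speak = new XElement("speak",
            new XAttribute("version", "1.0"),
            new XAttribute(Xml + "lang", "en-US"),
            new XElement("voice",
                new XAttribute(Xml + "lang", lang),
                new XAttribute(Xml + "gender", gender),
                new XAttribute("name", voiceName),
                content));
        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

With these helpers, the call in TranslateText would become SsmlHelpers.GenerateSsml(lang, "Female", SsmlHelpers.ServiceName(lang), content). Building the document with XElement rather than string concatenation means characters such as & and < in the user's text are escaped automatically. The 1024-character limit applies to the whole SSML body, markup included.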


Output

The given text is converted into speech in the language selected from the drop-down list, using the Microsoft Speech API.


Summary

In this article, we learned how to convert text to speech in multiple languages with ASP.NET Core and C#, following the API documentation for one of the important Cognitive Services APIs (the Text to Speech API, which is part of the Speech API). I hope this article is useful for all Azure Cognitive Services API beginners.

Published at DZone with permission of Rajeesh Menoth, DZone MVB. See the original article here.
